Margin (machine learning)

In machine learning the margin of a single data point is defined to be the distance from the data point to a decision boundary. Note that there are many distances and decision boundaries that may be appropriate for certain datasets and goals. A margin classifier is a classifier that explicitly utilizes the margin of each example while learning a classifier. There are theoretical justifications (based on the VC dimension) as to why maximizing the margin (under some suitable constraints) may be beneficial for machine learning and statistical inferences algorithms.

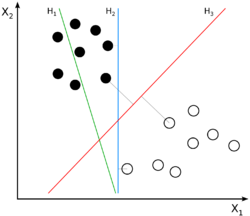

There are many hyperplanes that might classify the data. One reasonable choice as the best hyperplane is the one that represents the largest separation, or margin, between the two classes. So we choose the hyperplane so that the distance from it to the nearest data point on each side is maximized. If such a hyperplane exists, it is known as the maximum-margin hyperplane and the linear classifier it defines is known as a maximum margin classifier; or equivalently, the perceptron of optimal stability.[citation needed]

|