Gradient method

From HandWiki

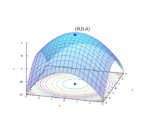

In optimization, a gradient method is an algorithm for solving problems of the form

[math]\displaystyle{ \min_{x\in\mathbb R^n}\; f(x) }[/math]

in which the search direction at each step is defined by the gradient of the function at the current point. Examples of gradient methods are gradient descent and the conjugate gradient method.
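The two examples named above can be sketched on a simple test problem. This is a minimal illustration, assuming a convex quadratic objective f(x) = ½xᵀAx − bᵀx with A symmetric positive definite, so that ∇f(x) = Ax − b. The helper names (`dot`, `matvec`, `gradient_descent`, `conjugate_gradient`), the fixed step size and the tolerances are hypothetical choices made for this sketch, not part of the source. `gradient_descent` uses the negative gradient directly as its search direction; `conjugate_gradient` starts from the negative gradient and then builds directions that are A-conjugate to the previous ones.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def dot(u: Vector, v: Vector) -> float:
    """Euclidean inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def matvec(A: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product A @ x."""
    return [dot(row, x) for row in A]


def gradient_descent(grad: Callable[[Vector], Vector], x0: Vector,
                     step: float = 0.1, tol: float = 1e-8,
                     max_iter: int = 10_000) -> Vector:
    """Gradient descent with a fixed step: x <- x - step * grad f(x).

    Assumption: on a quadratic this converges only if
    0 < step < 2 / (largest eigenvalue of A).
    """
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        if dot(g, g) ** 0.5 < tol:  # stop once the gradient is (nearly) zero
            break
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


def conjugate_gradient(A: Matrix, b: Vector, x0: Vector,
                       tol: float = 1e-10) -> Vector:
    """Linear CG for min 0.5 x^T A x - b^T x, A symmetric positive definite."""
    x = list(x0)
    r = [bi - ai for bi, ai in zip(b, matvec(A, x))]  # residual b - Ax = -grad f(x)
    p = list(r)                                      # first direction: steepest descent
    rs = dot(r, r)
    for _ in range(len(b)):  # at most n steps in exact arithmetic
        if rs ** 0.5 < tol:
            break
        Ap = matvec(A, p)
        alpha = rs / dot(p, Ap)          # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs               # keeps the next direction A-conjugate to p
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x
```

For example, with A = [[4, 1], [1, 3]], b = [1, 2] and starting point [0, 0], both functions approach the minimizer [1/11, 7/11]. The conjugate gradient sketch reaches it in two steps (the dimension of the problem), while fixed-step gradient descent needs many more iterations.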

See also

- Gradient descent

- Stochastic gradient descent

- Coordinate descent

- Frank–Wolfe algorithm

- Landweber iteration

- Random coordinate descent

- Conjugate gradient method

- Derivation of the conjugate gradient method

- Nonlinear conjugate gradient method

- Biconjugate gradient method

- Biconjugate gradient stabilized method

References

- Elijah Polak (1997). Optimization: Algorithms and Consistent Approximations. Springer-Verlag. ISBN 0-387-94971-2.
