Causal graph

In statistics, econometrics, epidemiology, genetics and related disciplines, causal graphs (also known as path diagrams, causal Bayesian networks or DAGs) are probabilistic graphical models used to encode assumptions about the data-generating process. Causal graphs can be used for communication and for inference. They are complementary to other forms of causal reasoning, for instance using causal equality notation. As communication devices, the graphs provide a formal and transparent representation of the causal assumptions that researchers may wish to convey and defend. As inference tools, the graphs enable researchers to estimate effect sizes from non-experimental data,[1][2][3][4][5] derive testable implications of the assumptions encoded,[1][6][7][8] test for external validity,[9] and manage missing data[10] and selection bias.[11]

Causal graphs were first used by the geneticist Sewall Wright[12] under the rubric "path diagrams". They were later adopted by social scientists[13][14][15][16][17][18] and, to a lesser extent, by economists.[19] These models were initially confined to linear equations with fixed parameters. Modern developments have extended graphical models to non-parametric analysis, and thus achieved a generality and flexibility that has transformed causal analysis in computer science, epidemiology,[20] and social science.[21]

Construction and terminology

The causal graph can be drawn in the following way. Each variable in the model has a corresponding vertex or node and an arrow is drawn from a variable X to a variable Y whenever Y is judged to respond to changes in X when all other variables are being held constant. Variables connected to Y through direct arrows are called parents of Y, or "direct causes of Y," and are denoted by Pa(Y).

Causal models often include "error terms" or "omitted factors" which represent all unmeasured factors that influence a variable Y when Pa(Y) are held constant. In most cases, error terms are excluded from the graph. However, if the graph author suspects that the error terms of any two variables are dependent (e.g. the two variables have an unobserved or latent common cause) then a bidirected arc is drawn between them. Thus, the presence of latent variables is taken into account through the correlations they induce between the error terms, as represented by bidirected arcs.
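
The construction described above can be sketched in code. The following is a minimal illustration, not a standard library interface: the class `CausalGraph`, its method names, and the variables X, Y and Z are all hypothetical. Directed arrows are stored as (cause, effect) pairs and bidirected arcs as unordered pairs.

```python
from dataclasses import dataclass, field


@dataclass
class CausalGraph:
    """Hypothetical container for a causal graph with optional bidirected arcs."""

    directed: set = field(default_factory=set)    # (cause, effect) pairs
    bidirected: set = field(default_factory=set)  # frozenset({a, b}) pairs

    def add_arrow(self, cause, effect):
        """Record that `effect` responds to changes in `cause`."""
        self.directed.add((cause, effect))

    def add_bidirected(self, a, b):
        """Record that the error terms of `a` and `b` are judged dependent."""
        self.bidirected.add(frozenset((a, b)))

    def parents(self, node):
        """Pa(node): variables with an arrow pointing directly into `node`."""
        return {cause for (cause, effect) in self.directed if effect == node}

    def has_correlated_errors(self, a, b):
        """True if a bidirected arc was drawn between `a` and `b`."""
        return frozenset((a, b)) in self.bidirected


# Illustrative graph: X -> Y, Z -> Y, plus a latent common cause of X and Y.
g = CausalGraph()
g.add_arrow("X", "Y")
g.add_arrow("Z", "Y")
g.add_bidirected("X", "Y")

print(sorted(g.parents("Y")))
print(g.has_correlated_errors("Y", "X"))
```

Storing bidirected arcs as unordered pairs reflects that they carry no direction; they only mark a dependence between error terms.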

Fundamental tools

A fundamental tool in graphical analysis is d-separation, which allows researchers to determine, by inspection, whether the causal structure implies that two sets of variables are independent given a third set. In recursive models without correlated error terms (sometimes called Markovian), these conditional independences represent all of the model's testable implications.[22]
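
As an illustration, d-separation in a graph without bidirected arcs can be checked mechanically. The sketch below uses one well-known equivalent formulation (restrict to ancestors, moralize, delete the conditioning set, test connectivity) rather than inspecting paths one by one. The function name `d_separated`, the `parents` dictionary layout, and the example graph are assumptions made for this example.

```python
from itertools import combinations


def d_separated(parents, xs, ys, zs):
    """Return True if xs and ys are d-separated given zs in a DAG.

    `parents` maps each node to the set of its parents.
    """
    xs, ys, zs = set(xs), set(ys), set(zs)

    # 1. Keep only the nodes in xs, ys, zs together with their ancestors.
    keep, stack = set(), list(xs | ys | zs)
    while stack:
        node = stack.pop()
        if node not in keep:
            keep.add(node)
            stack.extend(parents.get(node, ()))

    # 2. Moralize: link children to parents, link co-parents, drop directions.
    adj = {n: set() for n in keep}
    for child in keep:
        ps = list(parents.get(child, ()))
        for p in ps:
            adj[child].add(p)
            adj[p].add(child)
        for p, q in combinations(ps, 2):
            adj[p].add(q)
            adj[q].add(p)

    # 3. Delete the conditioning set and look for any remaining path.
    seen, stack = set(), [x for x in xs if x not in zs]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        if node in ys:
            return False
        stack.extend(n for n in adj[node] if n not in zs and n not in seen)
    return True


# Illustrative DAG: chain A -> B -> C, and a collider A -> D <- C.
example = {"B": {"A"}, "C": {"B"}, "D": {"A", "C"}}

print(d_separated(example, {"A"}, {"C"}, {"B"}))       # True: B blocks the chain
print(d_separated(example, {"A"}, {"C"}, set()))       # False: the chain is open
print(d_separated(example, {"A"}, {"C"}, {"B", "D"}))  # False: conditioning on D opens the collider
```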

Example

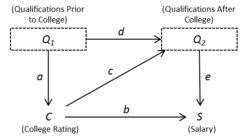

Suppose we wish to estimate the effect of attending an elite college on future earnings. Simply regressing earnings on college rating will not give an unbiased estimate of the target effect because elite colleges are highly selective, and students attending them are likely to have qualifications for high-earning jobs prior to attending the school. Assuming that the causal relationships are linear, this background knowledge can be expressed in the following structural equation model (SEM) specification.

Model 1

- [math]\displaystyle{ \begin{align} Q_1 &= U_1\\ C &= a \cdot Q_1 + U_2\\ Q_2 &= c \cdot C + d \cdot Q_1 + U_3\\ S &= b \cdot C + e \cdot Q_2 + U_4, \end{align} }[/math]

where [math]\displaystyle{ Q_1 }[/math] represents the individual's qualifications prior to college, [math]\displaystyle{ Q_2 }[/math] represents qualifications after college, [math]\displaystyle{ C }[/math] contains attributes representing the quality of the college attended, and [math]\displaystyle{ S }[/math] the individual's salary.

Figure 1 is a causal graph that represents this model specification. Each variable in the model has a corresponding node or vertex in the graph. Additionally, for each equation, arrows are drawn from the independent variables to the dependent variables. These arrows reflect the direction of causation. In some cases, we may label the arrow with its corresponding structural coefficient as in Figure 1.
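
The step from equations to arrows can be sketched as follows. The dictionary layout and the helper `labelled_arrows` are illustrative assumptions; the variables and coefficient labels are those of Model 1, with the error terms left out of the graph.

```python
# Model 1 written as: dependent variable -> {independent variable: coefficient label}.
# The error terms U_1..U_4 are omitted, as they usually are when drawing the graph.
model_1 = {
    "Q1": {},
    "C": {"Q1": "a"},
    "Q2": {"C": "c", "Q1": "d"},
    "S": {"C": "b", "Q2": "e"},
}


def labelled_arrows(equations):
    """List (cause, effect, coefficient) triples, one per arrow in the graph."""
    return [
        (cause, effect, coef)
        for effect, terms in equations.items()
        for cause, coef in terms.items()
    ]


for cause, effect, coef in labelled_arrows(model_1):
    print(f"{cause} -> {effect}  [{coef}]")
```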

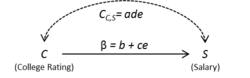

If [math]\displaystyle{ Q_1 }[/math] and [math]\displaystyle{ Q_2 }[/math] are unobserved or latent variables, their influence on [math]\displaystyle{ C }[/math] and [math]\displaystyle{ S }[/math] can be attributed to their error terms. By removing them, we obtain the following model specification:

Model 2

- [math]\displaystyle{ \begin{align} C &= U_C \\ S &= \beta C + U_S \end{align} }[/math]

The background information specified by Model 1 implies that the error term of [math]\displaystyle{ S }[/math], [math]\displaystyle{ U_S }[/math], is correlated with C's error term, [math]\displaystyle{ U_C }[/math]. As a result, we add a bidirected arc between S and C, as in Figure 2.

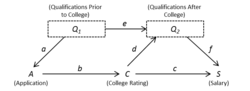
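
The consequence of correlated error terms for regression can be worked out directly from the equations of Model 2. The following is a sketch that assumes zero-mean variables; the symbols Cov and Var (population covariance and variance) are notation introduced here for illustration. Because [math]\displaystyle{ C = U_C }[/math], the slope of the regression of [math]\displaystyle{ S }[/math] on [math]\displaystyle{ C }[/math] is:

- [math]\displaystyle{ \begin{align} \operatorname{Cov}(S, C) &= \operatorname{Cov}(\beta C + U_S, C) = \beta \operatorname{Var}(C) + \operatorname{Cov}(U_S, U_C)\\ \frac{\operatorname{Cov}(S, C)}{\operatorname{Var}(C)} &= \beta + \frac{\operatorname{Cov}(U_S, U_C)}{\operatorname{Var}(U_C)} \end{align} }[/math]

The second term vanishes only when the two error terms are uncorrelated, so the regression slope generally differs from [math]\displaystyle{ \beta }[/math].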

Since [math]\displaystyle{ U_S }[/math] is correlated with [math]\displaystyle{ U_C }[/math], and therefore with [math]\displaystyle{ C }[/math], the variable [math]\displaystyle{ C }[/math] is endogenous and [math]\displaystyle{ \beta }[/math] is not identified in Model 2. However, if we include the strength of an individual's college application, [math]\displaystyle{ A }[/math], as shown in Figure 3, we obtain the following model:

Model 3

- [math]\displaystyle{ \begin{align} Q_1 &= U_1\\ A &= a \cdot Q_1 + U_2 \\ C &= b \cdot A + U_3\\ Q_2 &= e \cdot Q_1 + d \cdot C + U_4\\ S &= c \cdot C + f \cdot Q_2 + U_5, \end{align} }[/math]

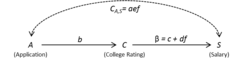

By removing the latent variables from the model specification we obtain:

Model 4

- [math]\displaystyle{ \begin{align} A &= U_A \\ C &= b \cdot A + U_C\\ S &= \beta \cdot C + U_S, \end{align} }[/math]

with [math]\displaystyle{ U_A }[/math] correlated with [math]\displaystyle{ U_S }[/math].

Now, [math]\displaystyle{ \beta }[/math] is identified and can be estimated using the regression of [math]\displaystyle{ S }[/math] on [math]\displaystyle{ C }[/math] and [math]\displaystyle{ A }[/math]. This can be verified using the single-door criterion,[1][23] a necessary and sufficient graphical condition for the identification of structural coefficients, like [math]\displaystyle{ \beta }[/math], using regression.
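
A small simulation can illustrate this claim. The sketch below draws data from Model 3 and compares the regression of S on C alone with the coefficient of C in the regression of S on C and A. The coefficient values, the standard normal error terms, the sample size and the helper names are all assumptions chosen for illustration. Substituting the equation for Q2 into the equation for S gives β = c + f·d, which is the target value used here.

```python
import random


def cov(xs, ys):
    """Sample covariance of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def simulate_model_3(n, a, b, c, d, e, f, seed=0):
    """Draw n samples of the observed variables (A, C, S) from Model 3."""
    rng = random.Random(seed)
    A, C, S = [], [], []
    for _ in range(n):
        q1 = rng.gauss(0, 1)                       # Q1 = U1 (latent)
        app = a * q1 + rng.gauss(0, 1)             # A = a*Q1 + U2
        col = b * app + rng.gauss(0, 1)            # C = b*A + U3
        q2 = e * q1 + d * col + rng.gauss(0, 1)    # Q2 = e*Q1 + d*C + U4 (latent)
        sal = c * col + f * q2 + rng.gauss(0, 1)   # S = c*C + f*Q2 + U5
        A.append(app)
        C.append(col)
        S.append(sal)
    return A, C, S


# Illustrative coefficient values (assumptions, not taken from the article).
a, b, c, d, e, f = 1.0, 1.0, 0.5, 0.8, 1.0, 1.0
beta = c + f * d  # coefficient of C in Model 4 after substituting out Q2

A, C, S = simulate_model_3(20_000, a, b, c, d, e, f)

# Regression of S on C alone: slope = Cov(S, C) / Var(C).
naive = cov(S, C) / cov(C, C)

# Coefficient of C in the regression of S on C and A (two-regressor OLS formula).
adjusted = (cov(S, C) * cov(A, A) - cov(S, A) * cov(C, A)) / (
    cov(C, C) * cov(A, A) - cov(C, A) ** 2
)

print(f"beta={beta:.2f}  naive={naive:.2f}  adjusted={adjusted:.2f}")
```

Under these assumed values the unadjusted slope overstates β, because Q1 raises both C (through A) and S, while the estimate adjusted for A should land close to β up to sampling noise.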

References

- ↑ 1.0 1.1 1.2 Pearl, Judea (2000). Causality. Cambridge University Press. ISBN 9780521773621. https://archive.org/details/causalitymodelsr0000pear.

- ↑ Tian, Jin; Pearl, Judea (2002). "A general identification condition for causal effects". Proceedings of the Eighteenth National Conference on Artificial Intelligence. ISBN 978-0-262-51129-2. https://escholarship.org/uc/item/17r754xz.

- ↑ Shpitser, Ilya; Pearl, Judea (2008). "Complete Identification Methods for the Causal Hierarchy". Journal of Machine Learning Research 9: 1941–1979. http://www.jmlr.org/papers/volume9/shpitser08a/shpitser08a.pdf.

- ↑ Huang, Y.; Valtorta, M. (2006). "Identifiability in causal bayesian networks: A sound and complete algorithm". Proceedings of AAAI. https://www.aaai.org/Papers/AAAI/2006/AAAI06-180.pdf.

- ↑ Bareinboim, Elias; Pearl, Judea (2012). "Causal Inference by Surrogate Experiments: z-Identifiability". Proceedings of the Twenty-Eighth Conference on Uncertainty in Artificial Intelligence. ISBN 978-0-9749039-8-9. Bibcode: 2012arXiv1210.4842B.

- ↑ Tian, Jin; Pearl, Judea (2002). "On the Testable Implications of Causal Models with Hidden Variables". Proceedings of the Eighteenth Conference on Uncertainty in Artificial Intelligence. pp. 519–27. ISBN 978-1-55860-897-9. Bibcode: 2013arXiv1301.0608T.

- ↑ Shpitser, Ilya; Pearl, Judea (2008). "Dormant Independence". Proceedings of AAAI.

- ↑ Chen, Bryant; Pearl, Judea (2014). "Testable Implications of Linear Structural Equation Models". Proceedings of AAAI 28. doi:10.1609/aaai.v28i1.9065. https://ojs.aaai.org/index.php/AAAI/article/download/9065/8924.

- ↑ Bareinboim, Elias; Pearl, Judea (2014). "External Validity: From do-calculus to Transportability across Populations". Statistical Science 29 (4): 579–595. doi:10.1214/14-sts486.

- ↑ Mohan, Karthika; Pearl, Judea; Tian, Jin (2013). "Graphical Models for Inference with Missing Data". Advances in Neural Information Processing Systems. https://proceedings.neurips.cc/paper/2013/file/0ff8033cf9437c213ee13937b1c4c455-Paper.pdf.

- ↑ Bareinboim, Elias; Tian, Jin; Pearl, Judea (2014). "Recovering from Selection Bias in Causal and Statistical Inference". Proceedings of AAAI 28. doi:10.1609/aaai.v28i1.9074. https://ojs.aaai.org/index.php/AAAI/article/view/9074/8933.

- ↑ Wright, S. (1921). "Correlation and causation". Journal of Agricultural Research 20: 557–585.

- ↑ Blalock, H. M. (1960). "Correlational analysis and causal inferences". American Anthropologist 62 (4): 624–631. doi:10.1525/aa.1960.62.4.02a00060.

- ↑ Duncan, O. D. (1966). "Path analysis: Sociological examples". American Journal of Sociology 72: 1–16. doi:10.1086/224256.

- ↑ Duncan, O. D. (1976). "Introduction to structural equation models". American Journal of Sociology 82 (3): 731–733. doi:10.1086/226377.

- ↑ Jöreskog, K. G. (1969). "A general approach to confirmatory maximum likelihood factor analysis". Psychometrika 34 (2): 183–202. doi:10.1007/bf02289343.

- ↑ Goldberger, A. S.; Duncan, O. D. (1973). Structural equation models in the social sciences. New York: Seminar Press.

- ↑ Goldberger, A. S. (1972). "Structural equation models in the social sciences". Econometrica 40 (6): 979–1001. doi:10.2307/1913851.

- ↑ White, Halbert; Chalak, Karim; Lu, Xun (2011). "Linking Granger causality and the Pearl causal model with settable systems". Causality in Time Series Challenges in Machine Learning 5. http://proceedings.mlr.press/v12/white11/white11.pdf.

- ↑ Rothman, Kenneth J.; Greenland, Sander; Lash, Timothy (2008). Modern epidemiology. Lippincott Williams & Wilkins.

- ↑ Morgan, S. L.; Winship, C. (2007). Counterfactuals and causal inference: Methods and principles for social research. New York: Cambridge University Press.

- ↑ Geiger, Dan; Pearl, Judea (1993). "Logical and Algorithmic Properties of Conditional Independence". Annals of Statistics 21 (4): 2001–2021. doi:10.1214/aos/1176349407.

- ↑ Chen, B.; Pearl, J. (2014). "Graphical Tools for Linear Structural Equation Modeling". Technical Report. https://apps.dtic.mil/sti/pdfs/ADA609131.pdf.
