PLS (complexity)

In computational complexity theory, Polynomial Local Search (PLS) is a complexity class that models the difficulty of finding a locally optimal solution to an optimization problem. The main characteristics of problems in PLS are that the cost of a solution can be calculated in polynomial time and the neighborhood of a solution can be searched in polynomial time. Therefore, it is possible to verify in polynomial time whether or not a solution is a local optimum. Furthermore, depending on the problem and the algorithm used to solve it, finding a local optimum may be faster than finding a global optimum.

Description

When searching for a local optimum, there are two interesting issues to deal with: first, how to find a local optimum, and second, how long it takes to find one. For many local search algorithms, it is not known whether they can find a local optimum in polynomial time.[1] To answer the question of how long it takes to find a local optimum, Johnson, Papadimitriou and Yannakakis[2] introduced the complexity class PLS in their paper "How easy is local search?". It contains the local search problems for which local optimality can be verified in polynomial time.

A local search problem is in PLS if the following properties are satisfied:

- The size of every solution is polynomially bounded in the size of the instance [math]\displaystyle{ I }[/math].

- It is possible to find some solution of a problem instance in polynomial time.

- It is possible to calculate the cost of each solution in polynomial time.

- It is possible to find all neighbors of each solution in polynomial time.

With these properties, it is possible, for each solution [math]\displaystyle{ s }[/math], to find the best neighboring solution or, if no neighbor is better, to state that [math]\displaystyle{ s }[/math] is a local optimum.

Example

Consider the following instance [math]\displaystyle{ I }[/math] of the Max-2Sat problem: [math]\displaystyle{ (x_{1} \vee x_{2}) \wedge (\neg x_{1} \vee x_{3}) \wedge (\neg x_{2} \vee x_{3}) }[/math]. The aim is to find an assignment that maximizes the number of satisfied clauses.

A solution [math]\displaystyle{ s }[/math] for this instance is a bit string that assigns each [math]\displaystyle{ x_{i} }[/math] the value 0 or 1. In this case, a solution consists of 3 bits, for example [math]\displaystyle{ s=000 }[/math], which assigns the value 0 to each of [math]\displaystyle{ x_{1} }[/math], [math]\displaystyle{ x_{2} }[/math] and [math]\displaystyle{ x_{3} }[/math]. The set of solutions [math]\displaystyle{ F_{L}(I) }[/math] is the set of all possible assignments of [math]\displaystyle{ x_{1} }[/math], [math]\displaystyle{ x_{2} }[/math] and [math]\displaystyle{ x_{3} }[/math].

The cost of each solution is the number of satisfied clauses, so [math]\displaystyle{ c_{L}(I, s=000)=2 }[/math] because the second and third clauses are satisfied.

The Flip neighbors of a solution [math]\displaystyle{ s }[/math] are obtained by flipping one bit of the bit string [math]\displaystyle{ s }[/math], so the neighbors of [math]\displaystyle{ s }[/math] are [math]\displaystyle{ N(I,000)=\{100, 010, 001\} }[/math], with the following costs:

[math]\displaystyle{ c_{L}(I, 100)=2 }[/math]

[math]\displaystyle{ c_{L}(I, 010)=2 }[/math]

[math]\displaystyle{ c_{L}(I, 001)=2 }[/math]

If we are looking for a solution with maximum cost, no neighbor has a better cost than [math]\displaystyle{ s }[/math]. Even though [math]\displaystyle{ s }[/math] is not a global optimum (which would be, for example, the solution [math]\displaystyle{ s'=111 }[/math], which satisfies all clauses and has [math]\displaystyle{ c_{L}(I, s')=3 }[/math]), [math]\displaystyle{ s }[/math] is a local optimum, because none of its neighbors has a better cost.

Intuitively, it can be argued that this problem lies in PLS because:

- It is possible to find a solution to an instance in polynomial time, for example by setting all bits to 0.

- It is possible to calculate the cost of a solution in polynomial time, by going once through the whole instance and counting the clauses that are satisfied.

- It is possible to find all neighbors of a solution in polynomial time, by taking the set of solutions that differ from [math]\displaystyle{ s }[/math] in exactly one bit.
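The three properties can be checked mechanically on the example instance. The following Python sketch is illustrative only: the clause encoding (a literal written as a signed variable index, so -1 stands for ¬x_1) and all function names are assumptions made for this example. It computes c_L(I, s) in one pass over the clauses, lists the Flip neighbors, and confirms that s = 000 is a local but not a global maximum.

```python
from typing import List, Tuple

# A clause is a pair of literals; +i means x_i and -i means NOT x_i
# (a hypothetical encoding chosen for this sketch).
Clause = Tuple[int, int]
INSTANCE: List[Clause] = [(1, 2), (-1, 3), (-2, 3)]


def literal_true(lit: int, s: str) -> bool:
    """Evaluate a literal under bit string s, where s[i-1] is the value of x_i."""
    value = s[abs(lit) - 1] == "1"
    return value if lit > 0 else not value


def cost(instance: List[Clause], s: str) -> int:
    """c_L(I, s): number of satisfied clauses, computed in one pass."""
    return sum(1 for a, b in instance if literal_true(a, s) or literal_true(b, s))


def flip_neighbors(s: str) -> List[str]:
    """N(I, s): all bit strings at Hamming distance exactly one from s."""
    return [s[:i] + ("1" if s[i] == "0" else "0") + s[i + 1:] for i in range(len(s))]


def is_local_max(instance: List[Clause], s: str) -> bool:
    """True if no Flip neighbor satisfies strictly more clauses than s."""
    return all(cost(instance, r) <= cost(instance, s) for r in flip_neighbors(s))


if __name__ == "__main__":
    s = "000"
    print(cost(INSTANCE, s))                                  # 2
    print({r: cost(INSTANCE, r) for r in flip_neighbors(s)})  # {'100': 2, '010': 2, '001': 2}
    print(is_local_max(INSTANCE, s), cost(INSTANCE, "111"))   # True 3
```

Under this encoding, "100" is not a local optimum: flipping x_3 gives "101", which satisfies all three clauses.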

If we simply count the number of satisfied clauses, the problem can be solved in polynomial time: the cost is an integer between 0 and the number of clauses, and every improving step increases it by at least 1, so the number of possible costs, and hence the number of improving steps, is polynomial. However, if each clause is assigned a positive integer weight (and the aim is to locally maximize the total weight of the satisfied clauses), the problem becomes PLS-complete (see below).

Formal Definition

A local search problem [math]\displaystyle{ L }[/math] has a set [math]\displaystyle{ D_L }[/math] of instances which are encoded using strings over a finite alphabet [math]\displaystyle{ \Sigma }[/math]. For each instance [math]\displaystyle{ I }[/math] there exists a finite solution set [math]\displaystyle{ F_L(I) }[/math]. Let [math]\displaystyle{ R }[/math] be the relation that models [math]\displaystyle{ L }[/math]. The relation [math]\displaystyle{ R \subseteq D_L \times F_L(I) := \{(I, s) \mid I \in D_{L}, s \in F_{L}(I)\} }[/math] is in PLS [2][3][4] if:

- The size of every solution [math]\displaystyle{ s \in F_{L}(I) }[/math] is polynomially bounded in the size of [math]\displaystyle{ I }[/math]

- Problem instances [math]\displaystyle{ I \in D_{L} }[/math] and solutions [math]\displaystyle{ s \in F_{L}(I) }[/math] are verifiable in polynomial time

- There is a polynomial time computable function [math]\displaystyle{ A: D_{L} \rightarrow F_{L}(I) }[/math] that returns for each instance [math]\displaystyle{ I \in D_{L} }[/math] some solution [math]\displaystyle{ s \in F_{L}(I) }[/math]

- There is a polynomial time computable function [math]\displaystyle{ B: D_L \times F_L(I) \rightarrow \mathbb{R} ^{+} }[/math] [5] that returns for each solution [math]\displaystyle{ s \in F_L(I) }[/math] of an instance [math]\displaystyle{ I \in D_L }[/math] the cost [math]\displaystyle{ c_L(I, s) }[/math]

- There is a polynomial time computable function [math]\displaystyle{ N: D_L \times F_L(I) \rightarrow Powerset(F_L(I)) }[/math] that returns the set of neighbors for an instance-solution pair

- There is a polynomial time computable function [math]\displaystyle{ C: D_L \times F_L(I) \rightarrow N(I, s) \cup \{OPT\} }[/math] that returns a neighboring solution [math]\displaystyle{ s' }[/math] with better cost than solution [math]\displaystyle{ s }[/math], or states that [math]\displaystyle{ s }[/math] is locally optimal

- For every instance [math]\displaystyle{ I \in D_L }[/math], [math]\displaystyle{ R }[/math] contains exactly the pairs [math]\displaystyle{ (I, s) }[/math] where [math]\displaystyle{ s }[/math] is a locally optimal solution of [math]\displaystyle{ I }[/math]
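The components of the definition can be pictured as an interface. The following Python sketch is hypothetical (the class and method names do not come from the literature) and assumes a minimization problem: `initial_solution`, `cost` and `neighbors` play the roles of A, B and N, and `improve` derives C from them by scanning the polynomially many neighbors, returning `None` in place of OPT.

```python
from abc import ABC, abstractmethod
from typing import Generic, List, Optional, Sequence, Tuple, TypeVar

Inst = TypeVar("Inst")
Sol = TypeVar("Sol")


class LocalSearchProblem(ABC, Generic[Inst, Sol]):
    """Hypothetical interface for a PLS problem (minimization)."""

    @abstractmethod
    def initial_solution(self, instance: Inst) -> Sol:
        """Algorithm A: return some solution s in F_L(I)."""

    @abstractmethod
    def cost(self, instance: Inst, s: Sol) -> float:
        """Algorithm B: return the cost c_L(I, s)."""

    @abstractmethod
    def neighbors(self, instance: Inst, s: Sol) -> Sequence[Sol]:
        """Algorithm N: return the neighborhood N(I, s)."""

    def improve(self, instance: Inst, s: Sol) -> Optional[Sol]:
        """Algorithm C: return the best neighbor with strictly smaller cost,
        or None (standing for OPT) if s is locally optimal."""
        best, best_cost = None, self.cost(instance, s)
        for r in self.neighbors(instance, s):
            c = self.cost(instance, r)
            if c < best_cost:
                best, best_cost = r, c
        return best

    def is_local_optimum(self, instance: Inst, s: Sol) -> bool:
        return self.improve(instance, s) is None


class ArrayLocalMin(LocalSearchProblem[Tuple[int, ...], int]):
    """Toy problem: find an index whose value is not larger than its neighbors'."""

    def initial_solution(self, instance: Tuple[int, ...]) -> int:
        return 0

    def cost(self, instance: Tuple[int, ...], s: int) -> float:
        return instance[s]

    def neighbors(self, instance: Tuple[int, ...], s: int) -> List[int]:
        return [j for j in (s - 1, s + 1) if 0 <= j < len(instance)]
```

For the array (5, 3, 4, 1, 2), `improve` moves from index 0 to index 1, and both index 1 and index 3 are local optima.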

An instance [math]\displaystyle{ I \in D_L }[/math] has the structure of an implicit graph (also called transition graph[6]) whose vertices are the solutions: two solutions [math]\displaystyle{ s, s' \in F_L(I) }[/math] are connected by a directed arc iff [math]\displaystyle{ s' \in N(I, s) }[/math] and [math]\displaystyle{ s' }[/math] has strictly better cost than [math]\displaystyle{ s }[/math].

A local optimum is a solution [math]\displaystyle{ s }[/math] that has no neighbor with better cost. In the implicit graph, a local optimum is a sink. A neighborhood in which every local optimum is also a global optimum (a solution with the best possible cost) is called an exact neighborhood.[6][1]

Alternative Definition

The class PLS is the class containing all problems that can be reduced in polynomial time to the problem Sink-of-DAG[7] (also called Local-Opt[8]): Given two integers [math]\displaystyle{ n }[/math] and [math]\displaystyle{ m }[/math] and two Boolean circuits [math]\displaystyle{ S: \{0,1\}^n \rightarrow \{0,1\}^n }[/math] such that [math]\displaystyle{ S(0^n) \neq 0^n }[/math] and [math]\displaystyle{ V: \{0,1\}^n \rightarrow \{0, 1, \ldots, 2^m-1\} }[/math], find a vertex [math]\displaystyle{ x \in \{0,1\}^n }[/math] such that [math]\displaystyle{ S(x)\neq x }[/math] and either [math]\displaystyle{ S(S(x)) = S(x) }[/math] or [math]\displaystyle{ V(S(x)) \leq V(x) }[/math].
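To make the definition concrete, the following Python sketch models the circuits S and V as ordinary Python functions on the integers 0, ..., 2^n - 1 that encode the bit strings (an assumption; real instances are given as circuits). It follows the successor S from 0^n and stops at the first vertex that satisfies the output condition. Because V strictly increases at every step and takes at most 2^m values, the loop terminates, but it may need exponentially many steps, so this illustrates the problem rather than an efficient algorithm for it.

```python
from typing import Callable


def sink_of_dag(n: int, S: Callable[[int], int], V: Callable[[int], int]) -> int:
    """Return a vertex x with S(x) != x and either S(S(x)) == S(x) or V(S(x)) <= V(x).

    Vertices of {0,1}^n are encoded as integers 0 .. 2**n - 1, so 0 stands for 0^n.
    """
    x = 0
    if S(x) == x:
        raise ValueError("the promise S(0^n) != 0^n is violated")
    while True:
        y = S(x)
        assert 0 <= y < 2 ** n, "successor outside {0,1}^n"
        # x is a valid answer if its successor is a sink or does not increase V.
        if S(y) == y or V(y) <= V(x):
            return x
        # Otherwise S(y) != y and V(y) > V(x): continue from y, keeping S(x) != x.
        x = y


if __name__ == "__main__":
    succ = lambda x: min(x + 1, 5)                    # path 0 -> 1 -> ... -> 5; 5 is a sink
    print(sink_of_dag(3, succ, lambda x: x))          # 4, because S(4) = 5 is a sink
    print(sink_of_dag(3, succ, lambda x: min(x, 2)))  # 2, because V(S(2)) = V(3) = 2 <= V(2)
```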

Example neighborhood structures

Example neighborhood structures for problems with Boolean variables (or bit strings) as solutions:

- Flip[2] - The neighbors of a solution [math]\displaystyle{ s = x_1 , ..., x_n }[/math] are obtained by negating (flipping) one arbitrary bit [math]\displaystyle{ x_i }[/math]. Thus a solution [math]\displaystyle{ s }[/math] and each of its neighbors [math]\displaystyle{ r \in N (I, s) }[/math] have Hamming distance one: [math]\displaystyle{ H(s, r) = 1 }[/math].

- Kernighan-Lin[2][6] - A solution [math]\displaystyle{ r }[/math] is a neighbor of solution [math]\displaystyle{ s }[/math] if [math]\displaystyle{ r }[/math] can be obtained from [math]\displaystyle{ s }[/math] by a sequence of greedy flips in which no bit is flipped twice. Starting with [math]\displaystyle{ s }[/math], the Flip neighbor [math]\displaystyle{ s_1 }[/math] of [math]\displaystyle{ s }[/math] with the best cost, or the least loss of cost, is a neighbor of [math]\displaystyle{ s }[/math] in the Kernighan-Lin structure. So is the best (or least bad) Flip neighbor [math]\displaystyle{ s_2 }[/math] of [math]\displaystyle{ s_1 }[/math] that flips a bit not flipped before, and so on, until [math]\displaystyle{ s_n }[/math] is reached, the solution in which every bit of [math]\displaystyle{ s }[/math] is negated. Note that a bit, once flipped, may not be flipped back.

- k-Flip[9] - A solution [math]\displaystyle{ r }[/math] is a neighbor of solution [math]\displaystyle{ s }[/math] if the Hamming distance [math]\displaystyle{ H }[/math] between [math]\displaystyle{ s }[/math] and [math]\displaystyle{ r }[/math] is at most [math]\displaystyle{ k }[/math], so [math]\displaystyle{ H(s, r) \leq k }[/math].
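The three Boolean neighborhood structures can be enumerated directly. In this Python sketch (function names are illustrative, not taken from the cited papers), solutions are tuples of 0/1 values, the k-Flip neighborhood is assumed to exclude s itself, and the Kernighan-Lin neighbors are generated for a cost to be maximized that the caller passes in; ties between equally good flips are broken by taking the lowest index, a choice the definition leaves open.

```python
from itertools import combinations
from typing import Callable, Iterable, List, Tuple

Bits = Tuple[int, ...]


def flip_bits(s: Bits, positions: Iterable[int]) -> Bits:
    """Return a copy of s with the given positions negated."""
    r = list(s)
    for i in positions:
        r[i] = 1 - r[i]
    return tuple(r)


def flip_neighbors(s: Bits) -> List[Bits]:
    """Flip: all solutions at Hamming distance exactly 1."""
    return [flip_bits(s, [i]) for i in range(len(s))]


def k_flip_neighbors(s: Bits, k: int) -> List[Bits]:
    """k-Flip: all solutions at Hamming distance 1..k (s itself excluded)."""
    return [flip_bits(s, idx)
            for d in range(1, k + 1)
            for idx in combinations(range(len(s)), d)]


def kernighan_lin_neighbors(s: Bits, cost: Callable[[Bits], float]) -> List[Bits]:
    """Kernighan-Lin: the solutions s_1, ..., s_n reached by greedy flips.

    Each flip picks the not-yet-flipped bit whose negation gives the highest
    cost (largest gain or smallest loss); ties go to the lowest index.
    """
    current, unflipped, result = s, set(range(len(s))), []
    while unflipped:
        best = max(sorted(unflipped), key=lambda i: cost(flip_bits(current, [i])))
        current = flip_bits(current, [best])
        unflipped.remove(best)
        result.append(current)
    return result


if __name__ == "__main__":
    print(flip_neighbors((0, 0, 0)))                     # [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
    print(len(k_flip_neighbors((0, 0, 0), 2)))           # 6
    print(kernighan_lin_neighbors((0, 0, 0), cost=sum))  # [(1, 0, 0), (1, 1, 0), (1, 1, 1)]
```

The last Kernighan-Lin neighbor is always the complement of s, matching the description above.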

Example neighborhood structures for problems on graphs:

- Swap[10] - A partition [math]\displaystyle{ (P_2, P_3) }[/math] of nodes in a graph is a neighbor of a partition [math]\displaystyle{ (P_0, P_1) }[/math] if [math]\displaystyle{ (P_2, P_3) }[/math] can be obtained from [math]\displaystyle{ (P_0, P_1) }[/math] by swapping one node [math]\displaystyle{ p_0 \in P_0 }[/math] with a node [math]\displaystyle{ p_1 \in P_1 }[/math].

- Kernighan-Lin[1][2] - A partition [math]\displaystyle{ (P_2, P_3) }[/math] is a neighbor of [math]\displaystyle{ (P_0, P_1) }[/math] if [math]\displaystyle{ (P_2, P_3) }[/math] can be obtained by a greedy sequence of swaps of nodes in [math]\displaystyle{ P_0 }[/math] with nodes in [math]\displaystyle{ P_1 }[/math]. At each step, the two nodes [math]\displaystyle{ p_0 \in P_0 }[/math] and [math]\displaystyle{ p_1 \in P_1 }[/math] are swapped for which the resulting partition [math]\displaystyle{ ((P_0 \setminus \{p_0\}) \cup \{p_1\}, (P_1 \setminus \{p_1\}) \cup \{p_0\}) }[/math] gains the highest possible weight, or loses the least possible weight. Note that no node may be swapped twice.

- Fiduccia-Mattheyses[1][11] - This neighborhood is similar to the Kernighan-Lin neighborhood structure: it is a greedy sequence of swaps, except that each swap happens in two steps. First, the node [math]\displaystyle{ p_0 \in P_0 }[/math] with the most gain of cost, or the least loss of cost, is moved to [math]\displaystyle{ P_1 }[/math]; then the node [math]\displaystyle{ p_1 \in P_1 }[/math] with the most gain of cost, or the least loss of cost, is moved to [math]\displaystyle{ P_0 }[/math] to balance the partitions again. Experiments have shown that Fiduccia-Mattheyses has a smaller running time per iteration of the standard algorithm, though it sometimes finds an inferior local optimum.

- FM-Swap[1] - This neighborhood structure is based on the Fiduccia-Mattheyses neighborhood structure. Each solution [math]\displaystyle{ s = (P_0, P_1) }[/math] has only one neighbor: the partition obtained after the first swap of the Fiduccia-Mattheyses sequence.
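The Swap and Kernighan-Lin neighborhoods for graph partitions can be sketched in the same way. In this Python example, a partition is a pair of frozensets, the cost to be maximized is supplied by the caller, and the example cost (the total weight of edges crossing the partition) is only an assumption chosen for illustration; ties are broken by taking the first best pair in sorted order.

```python
from itertools import product
from typing import Callable, FrozenSet, List, Tuple

Partition = Tuple[FrozenSet[int], FrozenSet[int]]


def swap(part: Partition, p0: int, p1: int) -> Partition:
    """Exchange node p0 of the first side with node p1 of the second side."""
    P0, P1 = part
    return (P0 - {p0}) | {p1}, (P1 - {p1}) | {p0}


def swap_neighbors(part: Partition) -> List[Partition]:
    """Swap: every partition reachable by exchanging one pair of nodes."""
    P0, P1 = part
    return [swap(part, a, b) for a, b in product(sorted(P0), sorted(P1))]


def kernighan_lin_partition_neighbors(part: Partition,
                                      cost: Callable[[Partition], float]) -> List[Partition]:
    """Kernighan-Lin: partitions produced by a greedy sequence of swaps, each
    choosing the pair of not-yet-swapped nodes whose exchange yields the
    highest cost (largest gain or smallest loss)."""
    current, locked, result = part, set(), []
    while True:
        pairs = list(product(sorted(current[0] - locked), sorted(current[1] - locked)))
        if not pairs:
            return result
        a, b = max(pairs, key=lambda ab: cost(swap(current, ab[0], ab[1])))
        current = swap(current, a, b)
        locked |= {a, b}
        result.append(current)


def cut_weight(edges: List[Tuple[int, int, float]]) -> Callable[[Partition], float]:
    """Example cost: total weight of edges whose endpoints lie on different sides."""
    def cost(part: Partition) -> float:
        side0 = part[0]
        return sum(w for u, v, w in edges if (u in side0) != (v in side0))
    return cost
```

On the path 0-1-2-3 with unit weights and the partition ({0, 1}, {2, 3}), the first Kernighan-Lin step swaps nodes 0 and 3, raising the cut weight from 1 to 3; the second step swaps 1 and 2, producing the partition with the two sides exchanged.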

The standard Algorithm

Consider the following computational problem: Given some instance [math]\displaystyle{ I }[/math] of a PLS problem [math]\displaystyle{ L }[/math], find a locally optimal solution [math]\displaystyle{ s \in F_L(I) }[/math] such that [math]\displaystyle{ c_L(I, s') \geq c_L(I, s) }[/math] for all [math]\displaystyle{ s' \in N(I, s) }[/math].

Every local search problem can be solved using the following iterative improvement algorithm:[2]

- Use [math]\displaystyle{ A_L }[/math] to find an initial solution [math]\displaystyle{ s }[/math]

- Use algorithm [math]\displaystyle{ C_L }[/math] to find a better solution [math]\displaystyle{ s' \in N(I, s) }[/math]. If such a solution exists, replace [math]\displaystyle{ s }[/math] by [math]\displaystyle{ s' }[/math] and repeat this step; otherwise, return [math]\displaystyle{ s }[/math]
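A minimal Python sketch of the standard algorithm, applied to the weighted Max-2Sat/Flip variant (maximization). The clause encoding (signed variable indices plus a positive integer weight) and the first-improvement rule used for C_L are assumptions for this example; any rule that returns some better neighbor fits the description. With integer weights, each step raises the satisfied weight by at least 1, so the number of steps is at most the total weight, which is pseudo-polynomial.

```python
from typing import Callable, List, Optional, Tuple, TypeVar

S = TypeVar("S")
WClause = Tuple[int, int, int]  # (literal, literal, weight); literal -i means NOT x_i
Bits = Tuple[int, ...]


def standard_algorithm(initial: S, improve: Callable[[S], Optional[S]]) -> Tuple[S, int]:
    """Start from A_L's solution and apply C_L until it reports OPT (None here).
    Returns the local optimum and the number of improvement steps taken."""
    s, steps = initial, 0
    while (better := improve(s)) is not None:
        s, steps = better, steps + 1
    return s, steps


def satisfied_weight(clauses: List[WClause], s: Bits) -> int:
    """Total weight of satisfied clauses; s[i - 1] is the value of x_i."""
    def holds(lit: int) -> bool:
        return s[abs(lit) - 1] == (1 if lit > 0 else 0)
    return sum(w for a, b, w in clauses if holds(a) or holds(b))


def first_improving_flip(clauses: List[WClause]) -> Callable[[Bits], Optional[Bits]]:
    """C_L for Max-2Sat/Flip: the first Flip neighbor with larger weight, else None."""
    def improve(s: Bits) -> Optional[Bits]:
        base = satisfied_weight(clauses, s)
        for i in range(len(s)):
            r = s[:i] + (1 - s[i],) + s[i + 1:]
            if satisfied_weight(clauses, r) > base:
                return r
        return None
    return improve


if __name__ == "__main__":
    # The Max-2Sat example instance, with the first clause given weight 5.
    clauses = [(1, 2, 5), (-1, 3, 1), (-2, 3, 1)]
    print(standard_algorithm((0, 0, 0), first_improving_flip(clauses)))  # ((1, 0, 1), 2)
```

With all weights equal to 1, the start solution 000 is already a local optimum and the algorithm stops after zero steps.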

Unfortunately, the standard algorithm may take an exponential number of improvement steps to find a local optimum, even if the problem [math]\displaystyle{ L }[/math] can be solved exactly in polynomial time.[2] The standard algorithm need not always be used; a different, faster algorithm may exist for a particular problem. For example, the simplex algorithm is a local search algorithm used for linear programming.

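The running time of the standard algorithm is pseudo-polynomial in the number of different costs a solution can take: each improvement step strictly improves the cost, so the number of steps is at most the number of different cost values.[12]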

The standard algorithm needs only polynomial space: it only has to store the current solution [math]\displaystyle{ s }[/math], whose size is polynomially bounded by definition.[1]

Reductions

A reduction of one problem to another may be used to show that the second problem is at least as difficult as the first. In particular, a PLS-reduction is used to prove that a local search problem in PLS is also PLS-complete, by reducing a known PLS-complete problem to it.

PLS-reduction

A local search problem [math]\displaystyle{ L_1 }[/math] is PLS-reducible[2] to a local search problem [math]\displaystyle{ L_2 }[/math] if there are two polynomial time functions [math]\displaystyle{ f : D_1 \rightarrow D_2 }[/math] and [math]\displaystyle{ g: D_1 \times F_2(f(I_1)) \rightarrow F_1(I_1) }[/math] such that:

- if [math]\displaystyle{ I_1 }[/math] is an instance of [math]\displaystyle{ L_1 }[/math] , then [math]\displaystyle{ f (I_1 ) }[/math] is an instance of [math]\displaystyle{ L_2 }[/math]

- if [math]\displaystyle{ s_2 }[/math] is a solution for [math]\displaystyle{ f (I_1 ) }[/math] of [math]\displaystyle{ L_2 }[/math] , then [math]\displaystyle{ g(I_1 , s_2 ) }[/math] is a solution for [math]\displaystyle{ I_1 }[/math] of [math]\displaystyle{ L_1 }[/math]

- if [math]\displaystyle{ s_2 }[/math] is a local optimum for instance [math]\displaystyle{ f (I_1 ) }[/math] of [math]\displaystyle{ L_2 }[/math] , then [math]\displaystyle{ g(I_1 , s_2 ) }[/math] has to be a local optimum for instance [math]\displaystyle{ I_1 }[/math] of [math]\displaystyle{ L_1 }[/math]

It suffices to map the local optima of [math]\displaystyle{ f(I_1) }[/math] to local optima of [math]\displaystyle{ I_1 }[/math]; all other solutions can be mapped to arbitrary solutions of [math]\displaystyle{ I_1 }[/math], for example to the standard solution returned by [math]\displaystyle{ A_{1} }[/math].[6]
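As an illustration of the definition, the following Python sketch checks one simple construction of a PLS-reduction from weighted Max-Cut/Flip to weighted Max-2Sat/Flip; it is offered as an example and is not necessarily the construction used in the cited papers. The function f replaces each edge {u, v} of weight w by the clauses (x_u ∨ x_v) and (¬x_u ∨ ¬x_v), both of weight w: a cut edge satisfies both clauses, and an uncut edge satisfies exactly one. Hence the satisfied weight equals the total edge weight plus the cut weight, the two Flip neighborhoods coincide, and g can simply be the identity on bit strings. The brute-force check is only feasible for tiny instances.

```python
from itertools import product
from typing import Callable, List, Set, Tuple

Bits = Tuple[int, ...]
Edge = Tuple[int, int, int]     # (u, v, weight), vertices numbered 1..n
WClause = Tuple[int, int, int]  # (literal, literal, weight); literal -i means NOT x_i


def f(edges: List[Edge]) -> List[WClause]:
    """Instance map: each weighted edge becomes two weighted 2-clauses."""
    clauses: List[WClause] = []
    for u, v, w in edges:
        clauses.append((u, v, w))    # x_u OR x_v
        clauses.append((-u, -v, w))  # NOT x_u OR NOT x_v
    return clauses


def g(edges: List[Edge], s: Bits) -> Bits:
    """Solution map: an assignment is read back as the cut it describes."""
    return s


def cut_weight(edges: List[Edge], s: Bits) -> int:
    return sum(w for u, v, w in edges if s[u - 1] != s[v - 1])


def sat_weight(clauses: List[WClause], s: Bits) -> int:
    def holds(lit: int) -> bool:
        return s[abs(lit) - 1] == (1 if lit > 0 else 0)
    return sum(w for a, b, w in clauses if holds(a) or holds(b))


def flip_neighbors(s: Bits) -> List[Bits]:
    return [s[:i] + (1 - s[i],) + s[i + 1:] for i in range(len(s))]


def local_maxima(n: int, value: Callable[[Bits], int]) -> Set[Bits]:
    """Brute force: all bit strings with no strictly better Flip neighbor."""
    return {s for s in product((0, 1), repeat=n)
            if all(value(r) <= value(s) for r in flip_neighbors(s))}


if __name__ == "__main__":
    edges = [(1, 2, 3), (2, 3, 1), (1, 3, 2), (3, 4, 4)]
    opt_2sat = local_maxima(4, lambda s: sat_weight(f(edges), s))
    opt_cut = local_maxima(4, lambda s: cut_weight(edges, s))
    print({g(edges, s) for s in opt_2sat} <= opt_cut)  # True
```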

PLS-reductions are transitive.[2]

Tight PLS-reduction

Definition Transition graph

The transition graph[6] [math]\displaystyle{ T_I }[/math] of an instance [math]\displaystyle{ I }[/math] of a problem [math]\displaystyle{ L }[/math] is a directed graph. Its nodes are the elements of the finite set of solutions [math]\displaystyle{ F_L(I) }[/math], and there is an edge from each solution to each of its neighbors with strictly better cost. Because the cost strictly improves along every edge, the graph is acyclic. A sink, which is a node with no outgoing edges, is a local optimum. The height of a vertex [math]\displaystyle{ v }[/math] is the length of the shortest path from [math]\displaystyle{ v }[/math] to the nearest sink. The height of the transition graph is the largest height of any of its vertices.
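For small instances, the transition graph and the heights of its vertices can be computed by brute force. This Python sketch assumes a minimization problem whose solutions are listed explicitly and whose neighbor function returns only listed solutions; the heights come from a breadth-first search that starts at all sinks at once and follows the edges backwards. The toy example (3-bit strings encoded as integers, Flip neighbors, cost equal to the number of 1-bits) is chosen only for illustration.

```python
from collections import deque
from typing import Callable, Dict, Hashable, Iterable, List


def transition_graph(solutions: Iterable[Hashable],
                     cost: Callable[[Hashable], float],
                     neighbors: Callable[[Hashable], Iterable[Hashable]]
                     ) -> Dict[Hashable, List[Hashable]]:
    """Edge s -> r for every neighbor r of s with strictly smaller cost."""
    return {s: [r for r in neighbors(s) if cost(r) < cost(s)] for s in solutions}


def heights(graph: Dict[Hashable, List[Hashable]]) -> Dict[Hashable, int]:
    """Shortest-path distance from every vertex to its nearest sink."""
    reverse: Dict[Hashable, List[Hashable]] = {v: [] for v in graph}
    for v, outs in graph.items():
        for w in outs:
            reverse[w].append(v)
    height = {v: 0 for v, outs in graph.items() if not outs}  # sinks = local optima
    queue = deque(height)
    while queue:
        w = queue.popleft()
        for v in reverse[w]:
            if v not in height:  # first visit in BFS order gives the shortest distance
                height[v] = height[w] + 1
                queue.append(v)
    return height


if __name__ == "__main__":
    g = transition_graph(range(8), lambda x: bin(x).count("1"),
                         lambda x: [x ^ (1 << i) for i in range(3)])
    h = heights(g)
    print([v for v in g if not g[v]], h[7], max(h.values()))  # [0] 3 3
```

In this toy example the only sink is 000, the height of each vertex equals its number of 1-bits, and the height of the transition graph is 3.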

Definition Tight PLS-reduction

A PLS-reduction [math]\displaystyle{ (f, g) }[/math] from a local search problem [math]\displaystyle{ L_1 }[/math] to a local search problem [math]\displaystyle{ L_2 }[/math] is a tight PLS-reduction[10] if, for any instance [math]\displaystyle{ I_1 }[/math] of [math]\displaystyle{ L_1 }[/math], a subset [math]\displaystyle{ R }[/math] of solutions of the instance [math]\displaystyle{ I_2 = f (I_1 ) }[/math] of [math]\displaystyle{ L_2 }[/math] can be chosen so that the following properties are satisfied:

- [math]\displaystyle{ R }[/math] contains, among other solutions, all local optima of [math]\displaystyle{ I_2 }[/math]

- For every solution [math]\displaystyle{ p }[/math] of [math]\displaystyle{ I_1 }[/math] , a solution [math]\displaystyle{ q \in R }[/math] of [math]\displaystyle{ I_2 = f (I_1 ) }[/math] can be constructed in polynomial time, so that [math]\displaystyle{ g(I_1 , q) = p }[/math]

- If the transition graph [math]\displaystyle{ T_{f (I_1 )} }[/math] of [math]\displaystyle{ f (I_1 ) }[/math] contains a directed path from [math]\displaystyle{ q }[/math] to [math]\displaystyle{ q_0 }[/math] with [math]\displaystyle{ q, q_0 \in R }[/math], but all internal vertices of the path lie outside [math]\displaystyle{ R }[/math], then for the corresponding solutions [math]\displaystyle{ p = g(I_1 , q) }[/math] and [math]\displaystyle{ p_0 = g(I_1 , q_0 ) }[/math], either [math]\displaystyle{ p = p_0 }[/math] or [math]\displaystyle{ T_{I_1} }[/math] contains an edge from [math]\displaystyle{ p }[/math] to [math]\displaystyle{ p_0 }[/math]

Relationship to other complexity classes

PLS lies between the functional versions of P and NP: FP ⊆ PLS ⊆ FNP.[2]

PLS is also a subclass of TFNP,[13] which describes computational problems in which a solution is guaranteed to exist and can be recognized in polynomial time. For a problem in PLS, a solution is guaranteed to exist because the minimum-cost vertex of the entire graph is a valid solution, and the validity of a solution can be checked by computing its neighbors and comparing the cost of each neighbor with the cost of the solution.

It has also been proven that if a PLS problem is NP-hard, then NP = co-NP.[2]

PLS-completeness

Definition

A local search problem [math]\displaystyle{ L }[/math] is PLS-complete,[2] if

- [math]\displaystyle{ L }[/math] is in PLS

- every problem in PLS can be PLS-reduced to [math]\displaystyle{ L }[/math]

The optimization version of the circuit problem under the Flip neighborhood structure was the first problem shown to be PLS-complete.[2]

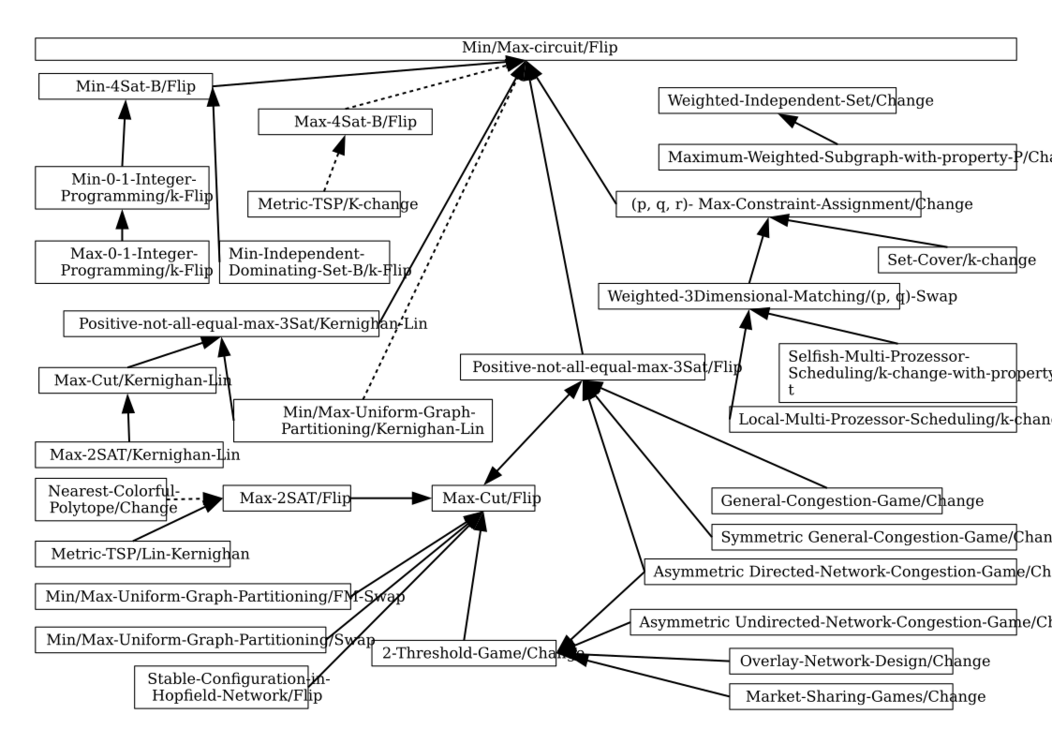

List of PLS-complete Problems

This is an incomplete list of some known problems that are PLS-complete. The problems here are the weighted versions; for example, Max-2SAT/Flip is weighted even though Max-2SAT ordinarily refers to the unweighted version.

Notation: Problem / Neighborhood structure

- Min/Max-circuit/Flip has been proven to be the first PLS-complete problem.[2]

- Sink-of-DAG is complete by definition.

- Positive-not-all-equal-max-3Sat/Flip has been proven to be PLS-complete via a tight PLS-reduction from Min/Max-circuit/Flip to Positive-not-all-equal-max-3Sat/Flip. Note that Positive-not-all-equal-max-3Sat/Flip can be reduced from Max-Cut/Flip too.[10]

- Positive-not-all-equal-max-3Sat/Kernighan-Lin has been proven to be PLS-complete via a tight PLS-reduction from Min/Max-circuit/Flip to Positive-not-all-equal-max-3Sat/Kernighan-Lin.[1]

- Max-2Sat/Flip has been proven to be PLS-complete via a tight PLS-reduction from Max-Cut/Flip to Max-2Sat/Flip.[1][10]

- Min-4Sat-B/Flip has been proven to be PLS-complete via a tight PLS-reduction from Min-circuit/Flip to Min-4Sat-B/Flip.[9]

- Max-4Sat-B/Flip (or CNF-SAT) has been proven to be PLS-complete via a PLS-reduction from Max-circuit/Flip to Max-4Sat-B/Flip.[14]

- Max-4Sat-(B=3)/Flip has been proven to be PLS-complete via a PLS-reduction from Max-circuit/Flip to Max-4Sat-(B=3)/Flip.[15]

- Max-Uniform-Graph-Partitioning/Swap has been proven to be PLS-complete via a tight PLS-reduction from Max-Cut/Flip to Max-Uniform-Graph-Partitioning/Swap.[10]

- Max-Uniform-Graph-Partitioning/Fiduccia-Mattheyses is stated to be PLS-complete without proof.[1]

- Max-Uniform-Graph-Partitioning/FM-Swap has been proven to be PLS-complete via a tight PLS-reduction from Max-Cut/Flip to Max-Uniform-Graph-Partitioning/FM-Swap.[10]

- Max-Uniform-Graph-Partitioning/Kernighan-Lin has been proven to be PLS-complete via a PLS-reduction from Min/Max-circuit/Flip to Max-Uniform-Graph-Partitioning/Kernighan-Lin.[2] There is also a tight PLS-reduction from Positive-not-all-equal-max-3Sat/Kernighan-Lin to Max-Uniform-Graph-Partitioning/Kernighan-Lin.[1]

- Max-Cut/Flip has been proven to be PLS-complete via a tight PLS-reduction from Positive-not-all-equal-max-3Sat/Flip to Max-Cut/Flip.[1][10]

- Max-Cut/Kernighan-Lin is claimed to be PLS-complete without proof.[6]

- Min-Independent-Dominating-Set-B/k-Flip has been proven to be PLS-complete via a tight PLS-reduction from Min-4Sat-B′/Flip to Min-Independent-Dominating-Set-B/k-Flip.[9]

- Weighted-Independent-Set/Change is claimed to be PLS-complete without proof.[2][10][6]

- Maximum-Weighted-Subgraph-with-property-P/Change is PLS-complete if property P = "has no edges", as it then equals Weighted-Independent-Set/Change. It has also been proven to be PLS-complete for a general hereditary, non-trivial property P via a tight PLS-reduction from Weighted-Independent-Set/Change to Maximum-Weighted-Subgraph-with-property-P/Change.[16]

- Set-Cover/k-change has been proven to be PLS-complete for each k ≥ 2 via a tight PLS-reduction from (3, 2, r)-Max-Constraint-Assignment/Change to Set-Cover/k-change.[17]

- Metric-TSP/k-Change has been proven to be PLS-complete via a PLS-reduction from Max-4Sat-B/Flip to Metric-TSP/k-Change.[15]

- Metric-TSP/Lin-Kernighan has been proven to be PLS-complete via a tight PLS-reduction from Max-2Sat/Flip to Metric-TSP/Lin-Kernighan.[18]

- Local-Multi-Processor-Scheduling/k-change has been proven to be PLS-complete via a tight PLS-reduction from Weighted-3Dimensional-Matching/(p, q)-Swap to Local-Multi-Processor-Scheduling/(2p+q)-change, where (2p + q) ≥ 8.[5]

- Selfish-Multi-Processor-Scheduling/k-change-with-property-t has been proven to be PLS-complete via a tight PLS-reduction from Weighted-3Dimensional-Matching/(p, q)-Swap to (2p+q)-Selfish-Multi-Processor-Scheduling/k-change-with-property-t, where (2p + q) ≥ 8.[5]

- Finding a pure Nash Equilibrium in a General-Congestion-Game/Change has been proven PLS-complete via a tight PLS-reduction from Positive-not-all-equal-max-3Sat/Flip to General-Congestion-Game/Change.[19]

- Finding a pure Nash Equilibrium in a Symmetric General-Congestion-Game/Change has been proven to be PLS-complete via a tight PLS-reduction from an asymmetric General-Congestion-Game/Change to symmetric General-Congestion-Game/Change.[19]

- Finding a pure Nash Equilibrium in an Asymmetric Directed-Network-Congestion-Games/Change has been proven to be PLS-complete via a tight reduction from Positive-not-all-equal-max-3Sat/Flip to Directed-Network-Congestion-Games/Change [19] and also via a tight PLS-reduction from 2-Threshold-Games/Change to Directed-Network-Congestion-Games/Change.[20]

- Finding a pure Nash Equilibrium in an Asymmetric Undirected-Network-Congestion-Games/Change has been proven to be PLS-complete via a tight PLS-reduction from 2-Threshold-Games/Change to Asymmetric Undirected-Network-Congestion-Games/Change.[20]

- Finding a pure Nash Equilibrium in a Symmetric Distance-Bounded-Network-Congestion-Games has been proven to be PLS-complete via a tight PLS-reduction from 2-Threshold-Games to Symmetric Distance-Bounded-Network-Congestion-Games.[21]

- Finding a pure Nash Equilibrium in a 2-Threshold-Game/Change has been proven to be PLS-complete via a tight reduction from Max-Cut/Flip to 2-Threshold-Game/Change.[20]

- Finding a pure Nash Equilibrium in Market-Sharing-Game/Change with polynomial bounded costs has been proven to be PLS-complete via a tight PLS-reduction from 2-Threshold-Games/Change to Market-Sharing-Game/Change.[20]

- Finding a pure Nash Equilibrium in an Overlay-Network-Design/Change has been proven to be PLS-complete via a reduction from 2-Threshold-Games/Change to Overlay-Network-Design/Change. Analogously to the proof of asymmetric Directed-Network-Congestion-Game/Change, the reduction is tight.[20]

- Min-0-1-Integer Programming/k-Flip has been proven to be PLS-complete via a tight PLS-reduction from Min-4Sat-B′/Flip to Min-0-1-Integer Programming/k-Flip.[9]

- Max-0-1-Integer Programming/k-Flip is claimed to be PLS-complete via a PLS-reduction to Max-0-1-Integer Programming/k-Flip, but the proof is omitted.[9]

- (p, q, r)-Max-Constraint-Assignment:

  - (3, 2, 3)-Max-Constraint-Assignment-3-partite/Change has been proven to be PLS-complete via a tight PLS-reduction from Circuit/Flip to (3, 2, 3)-Max-Constraint-Assignment-3-partite/Change.[22]

  - (2, 3, 6)-Max-Constraint-Assignment-2-partite/Change has been proven to be PLS-complete via a tight PLS-reduction from Circuit/Flip to (2, 3, 6)-Max-Constraint-Assignment-2-partite/Change.[22]

  - (6, 2, 2)-Max-Constraint-Assignment/Change has been proven to be PLS-complete via a tight reduction from Circuit/Flip to (6, 2, 2)-Max-Constraint-Assignment/Change.[22]

  - (4, 3, 3)-Max-Constraint-Assignment/Change equals Max-4Sat-(B=3)/Flip and has been proven to be PLS-complete via a PLS-reduction from Max-circuit/Flip.[15] It is claimed that the reduction can be extended so that tightness is obtained.[22]

- Nearest-Colorful-Polytope/Change has been proven to be PLS-complete via a PLS-reduction from Max-2Sat/Flip to Nearest-Colorful-Polytope/Change.[3]

- Stable-Configuration/Flip in a Hopfield network has been proven to be PLS-complete, for the case where the thresholds are 0 and the weights are negative, via a tight PLS-reduction from Max-Cut/Flip to Stable-Configuration/Flip.[1][10][18]

- Weighted-3Dimensional-Matching/(p, q)-Swap has been proven to be PLS-complete for p ≥9 and q ≥ 15 via a tight PLS-reduction from (2, 3, r)-Max-Constraint-Assignment-2-partite/Change to Weighted-3Dimensional-Matching/(p, q)-Swap.[5]

- The problem Real-Local-Opt (finding an ε-local optimum of a λ-Lipschitz continuous objective function [math]\displaystyle{ V:[0,1]^3 \rightarrow [0,1] }[/math] with respect to a neighborhood function [math]\displaystyle{ S:[0,1]^3 \rightarrow [0,1]^3 }[/math]) is PLS-complete.[8]

- Finding a local fitness peak in a biological fitness landscape specified by the NK-model/Point-mutation with K ≥ 2 was proven to be PLS-complete via a tight PLS-reduction from Max-2SAT/Flip.[23]

Relations to other complexity classes

Fearnley, Goldberg, Hollender and Savani[24] proved that a complexity class called CLS is equal to the intersection of PPAD and PLS.

Further reading

- Equilibria, fixed points, and complexity classes: a survey.[25]

References


- [1] Yannakakis, Mihalis (2003). Local search in combinatorial optimization - Computational complexity. Princeton University Press. pp. 19–55. ISBN 9780691115221.

- [2] Johnson, David S; Papadimitriou, Christos H; Yannakakis, Mihalis (1988). "How easy is local search?". Journal of Computer and System Sciences 37 (1): 79–100. doi:10.1016/0022-0000(88)90046-3.

- [3] Mulzer, Wolfgang; Stein, Yannik (14 March 2018). "Computational Aspects of the Colorful Caratheodory Theorem". Discrete & Computational Geometry 60 (3): 720–755. doi:10.1007/s00454-018-9979-y. Bibcode: 2014arXiv1412.3347M.

- [4] Borzechowski, Michaela. "The complexity class Polynomial Local Search (PLS) and PLS-complete problems". http://www.mi.fu-berlin.de/inf/groups/ag-ti/theses/download/Borzechowski16.pdf.

- [5] Dumrauf, Dominic; Monien, Burkhard; Tiemann, Karsten (2009). "Multiprocessor Scheduling is PLS-Complete". System Sciences, 2009. HICSS'09. 42nd Hawaii International Conference on: 1–10. https://www.researchgate.net/publication/224373381.

- [6] Michiels, Wil; Aarts, Emile; Korst, Jan (2010). Theoretical aspects of local search. Springer Science & Business Media. ISBN 9783642071485.

- [7] Fearnley, John; Gordon, Spencer; Mehta, Ruta; Savani, Rahul (December 2020). "Unique end of potential line". Journal of Computer and System Sciences 114: 1–35. doi:10.1016/j.jcss.2020.05.007. https://drops.dagstuhl.de/opus/volltexte/2019/10632/.

- [8] Daskalakis, Constantinos; Papadimitriou, Christos (23 January 2011). "Continuous Local Search". Proceedings of the Twenty-Second Annual ACM-SIAM Symposium on Discrete Algorithms: 790–804. doi:10.1137/1.9781611973082.62. ISBN 978-0-89871-993-2.

- [9] Klauck, Hartmut (1996). "On the hardness of global and local approximation". Proceedings of the 5th Scandinavian Workshop on Algorithm Theory: 88–99. https://www.researchgate.net/publication/221209488.

- [10] Schäffer, Alejandro A.; Yannakakis, Mihalis (February 1991). "Simple Local Search Problems that are Hard to Solve". SIAM Journal on Computing 20 (1): 56–87. doi:10.1137/0220004.

- [11] Fiduccia, C. M.; Mattheyses, R. M. (1982). "A Linear-time Heuristic for Improving Network Partitions". Proceedings of the 19th Design Automation Conference: 175–181. ISBN 9780897910200. https://dl.acm.org/citation.cfm?id=809204.

- [12] Angel, Eric; Christopoulos, Petros; Zissimopoulos, Vassilis (2014). Paradigms of Combinatorial Optimization: Problems and New Approaches - Local Search: Complexity and Approximation (2 ed.). John Wiley & Sons, Inc., Hoboken. pp. 435–471. doi:10.1002/9781119005353.ch14. ISBN 9781119005353.

- [13] Megiddo, Nimrod; Papadimitriou, Christos H (1991). "On total functions, existence theorems and computational complexity". Theoretical Computer Science 81 (2): 317–324. doi:10.1016/0304-3975(91)90200-L.

- [14] Krentel, M. (1 August 1990). "On Finding and Verifying Locally Optimal Solutions". SIAM Journal on Computing 19 (4): 742–749. doi:10.1137/0219052. ISSN 0097-5397.

- [15] Krentel, Mark W. (1989). "Structure in locally optimal solutions". 30th Annual Symposium on Foundations of Computer Science. pp. 216–221. doi:10.1109/SFCS.1989.63481. ISBN 0-8186-1982-1. https://www.researchgate.net/publication/3501884.

- [16] Shimozono, Shinichi (1997). "Finding optimal subgraphs by local search". Theoretical Computer Science 172 (1): 265–271. doi:10.1016/S0304-3975(96)00135-1.

- [17] Dumrauf, Dominic; Süß, Tim (2010). "On the Complexity of Local Search for Weighted Standard Set Problems". CiE 2010: Programs, Proofs, Processes. Lecture Notes in Computer Science. 6158. Springer, Berlin, Heidelberg. pp. 132–140. doi:10.1007/978-3-642-13962-8_15. ISBN 978-3-642-13961-1.

- [18] Papadimitriou, C.H.; Schäffer, A. A.; Yannakakis, M. (1990). "On the complexity of local search". Proceedings of the twenty-second annual ACM symposium on Theory of computing - STOC '90. pp. 438–445. doi:10.1145/100216.100274. ISBN 0897913612. http://dl.acm.org/citation.cfm?doid=100216.100274.

- [19] Fabrikant, Alex; Papadimitriou, Christos; Talwar, Kunal (2004). "The complexity of pure Nash equilibria". Proceedings of the thirty-sixth annual ACM symposium on Theory of computing. ACM. pp. 604–612. doi:10.1145/1007352.1007445. ISBN 978-1581138528.

- [20] Ackermann, Heiner; Röglin, Heiko; Vöcking, Berthold (2008). "On the Impact of Combinatorial Structure on Congestion Games". J. ACM 55 (6): 25:1–25:22. doi:10.1145/1455248.1455249. ISSN 0004-5411.

- [21] Yang, Yichen; Jia, Kai; Rinard, Martin (2022). "On the Impact of Player Capability on Congestion Games". Algorithmic Game Theory. Lecture Notes in Computer Science. 13584. pp. 311–328. doi:10.1007/978-3-031-15714-1_18. ISBN 978-3-031-15713-4. https://link.springer.com/chapter/10.1007/978-3-031-15714-1_18.

- [22] Dumrauf, Dominic; Monien, Burkhard (2013). "On the PLS-complexity of Maximum Constraint Assignment". Theor. Comput. Sci. 469: 24–52. doi:10.1016/j.tcs.2012.10.044. ISSN 0304-3975.

- [23] Kaznatcheev, Artem (2019). "Computational Complexity as an Ultimate Constraint on Evolution". Genetics 212 (1): 245–265. doi:10.1534/genetics.119.302000. PMID 30833289.

- [24] Fearnley, John; Goldberg, Paul; Hollender, Alexandros; Savani, Rahul (2022-12-19). "The Complexity of Gradient Descent: CLS = PPAD ∩ PLS". Journal of the ACM 70 (1): 7:1–7:74. doi:10.1145/3568163. ISSN 0004-5411. https://doi.org/10.1145/3568163.

- [25] Yannakakis, Mihalis (2009-05-01). "Equilibria, fixed points, and complexity classes". Computer Science Review 3 (2): 71–85. doi:10.1016/j.cosrev.2009.03.004. ISSN 1574-0137. https://www.sciencedirect.com/science/article/pii/S1574013709000161.
