Motion analysis

Motion analysis is used in computer vision, image processing, high-speed photography and machine vision. It studies methods and applications in which two or more consecutive images from an image sequence, e.g., produced by a video camera or high-speed camera, are processed to produce information based on the apparent motion in the images. In some applications the camera is fixed relative to the scene and objects move around in the scene; in others the scene is more or less fixed and the camera moves; and in some cases both the camera and the scene are moving. In the simplest case, motion analysis processing detects motion, i.e., finds the points in the image where something is moving. More complex types of processing track a specific object in the image over time, group points that belong to the same rigid object moving in the scene, or determine the magnitude and direction of the motion of every point in the image. The information produced is often related to a specific image in the sequence, corresponding to a specific time point, but it also depends on the neighboring images. This means that motion analysis can produce time-dependent information about motion.

Applications of motion analysis can be found in rather diverse areas, such as surveillance, medicine, the film industry, automotive crash safety,[1] ballistic firearm studies,[2] biological science,[3] flame propagation,[4] and navigation of autonomous vehicles, to name a few.

Background

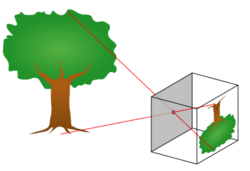

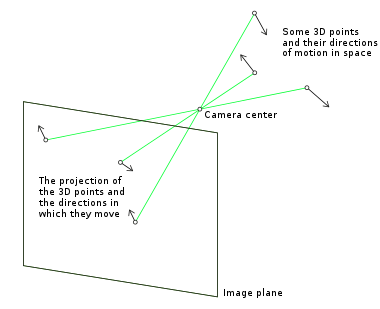

A video camera can be seen as an approximation of a pinhole camera, which means that each point in the image is illuminated by some (normally one) point in the scene in front of the camera, usually by means of light that the scene point reflects from a light source. Each visible point in the scene is projected along a straight line that passes through the camera aperture and intersects the image plane. This means that at a specific point in time, each point in the image refers to a specific point in the scene. This scene point has a position relative to the camera, and if this relative position changes, it corresponds to a relative motion in 3D. The motion is relative because it does not matter whether the scene point, the camera, or both are moving; the camera can detect that some motion has happened only when the relative position changes. Projecting the relative 3D motion of all visible points back into the image gives the motion field, which describes the apparent motion of each image point as the magnitude and direction of its velocity in the image plane. A consequence is that if the relative 3D motion of a scene point is along its projection line, the corresponding apparent motion is zero.
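
As a minimal sketch of the projection argument above, assume an ideal pinhole camera with focal length `f` at the origin, looking along the Z axis (this coordinate convention is an assumption, not stated in the text). A scene point (X, Y, Z) then projects to (fX/Z, fY/Z), and differentiating with respect to time gives the motion field of a point with relative 3D velocity (V_X, V_Y, V_Z). The function names `project` and `motion_field` are hypothetical.

```python
def project(point, f=1.0):
    """Pinhole projection of a 3D point (X, Y, Z), Z > 0, onto the image plane.

    Assumed convention: camera at the origin, optical axis along +Z.
    """
    X, Y, Z = point
    return (f * X / Z, f * Y / Z)


def motion_field(point, velocity, f=1.0):
    """Apparent image velocity (u, v) of a scene point with relative 3D velocity.

    Obtained by differentiating x = f*X/Z and y = f*Y/Z with respect to time
    (quotient rule).
    """
    X, Y, Z = point
    VX, VY, VZ = velocity
    u = f * (VX * Z - X * VZ) / Z**2
    v = f * (VY * Z - Y * VZ) / Z**2
    return (u, v)


point = (1.0, 2.0, 4.0)
# Sideways motion produces nonzero apparent motion.
print(motion_field(point, (1.0, 0.0, 0.0)))  # (0.25, 0.0)
# Motion along the projection line (velocity parallel to the position vector)
# produces zero apparent motion.
print(motion_field(point, (0.5, 1.0, 2.0)))  # (0.0, 0.0)
```

The second call illustrates the consequence stated above: the camera cannot see motion that is directed along a point's own line of sight.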

The camera measures the intensity of light at each image point, i.e., the light field that falls onto the image plane. In practice, a digital camera measures this light field at discrete points, pixels, but provided that the pixels are sufficiently dense, the pixel intensities can represent most characteristics of the light field. A common assumption of motion analysis is that the light reflected from the scene points does not vary over time. As a consequence, if an intensity I has been observed at some point in the image at some point in time, the same intensity I will be observed at a position that is displaced from the first one by the apparent motion. Another common assumption is that there is a fair amount of variation in the detected intensity over the pixels in an image. A consequence of this assumption is that if the scene point corresponding to a certain pixel has a relative 3D motion, then the pixel intensity is likely to change over time.
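
The brightness-constancy assumption can be turned into a displacement estimate. If I(x, t+1) = I(x - d, t), a first-order Taylor expansion gives I_x·d + I_t ≈ 0 at every pixel, and the d that best satisfies this over a window in the least-squares sense is d = -Σ I_x I_t / Σ I_x². The sketch below applies this to a 1D signal. It is a simplified one-dimensional version of gradient-based optical flow (in the spirit of Lucas–Kanade), not a method described in this article, and it only works for small displacements and signals with enough intensity variation, which is exactly the second assumption above. The test pattern and shift are assumed values.

```python
import math


def estimate_shift(frame0, frame1):
    """Least-squares displacement from the gradient constraint I_x * d + I_t = 0."""
    num = 0.0
    den = 0.0
    # Skip the end samples so the central difference stays inside the signal.
    for i in range(1, len(frame0) - 1):
        ix = (frame0[i + 1] - frame0[i - 1]) / 2.0  # spatial gradient
        it = frame1[i] - frame0[i]                  # temporal difference
        num += ix * it
        den += ix * ix
    if den == 0.0:
        # No intensity variation: the displacement cannot be observed.
        raise ValueError("signal has no spatial gradient")
    return -num / den


k = 0.2        # spatial frequency of the test pattern (assumed)
true_d = 0.5   # displacement in pixels between the two frames (assumed)
frame0 = [math.sin(k * x) for x in range(1000)]
frame1 = [math.sin(k * (x - true_d)) for x in range(1000)]
print(estimate_shift(frame0, frame1))  # a value close to true_d
```

A constant (textureless) signal makes the denominator zero, which is why the function refuses to return an estimate in that case.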

Methods

Motion detection

One of the simplest types of motion analysis is to detect image points that refer to moving points in the scene. The typical result of this processing is a binary image in which all image points (pixels) that relate to moving points in the scene are set to 1 and all other points are set to 0. This binary image is then further processed, e.g., to remove noise, group neighboring pixels, and label objects. Motion detection can be done using several methods; the two main groups are differential methods and methods based on background segmentation.
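
A minimal sketch of both families follows, assuming grayscale frames stored as nested lists of intensities and a hand-picked threshold. The function names, the threshold of 25 and the running-average background model are illustrative choices, not taken from the text. The differential method compares consecutive frames, while background segmentation compares each frame with a slowly updated background estimate; both produce the binary motion image described above.

```python
def threshold_difference(a, b, threshold):
    """Binary image: 1 where |a - b| exceeds the threshold, else 0."""
    return [
        [1 if abs(pa - pb) > threshold else 0 for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def frame_difference(prev_frame, frame, threshold=25):
    """Differential method: compare two consecutive frames (threshold assumed)."""
    return threshold_difference(prev_frame, frame, threshold)


def update_background(background, frame, alpha=0.05):
    """Running-average background model (an assumed choice); alpha sets adaptation speed."""
    return [
        [(1.0 - alpha) * b + alpha * p for b, p in zip(row_b, row_p)]
        for row_b, row_p in zip(background, frame)
    ]


def background_subtraction(background, frame, threshold=25):
    """Background segmentation: compare a frame with the background estimate."""
    return threshold_difference(background, frame, threshold)


# A static 3x3 scene in which one bright "object" pixel appears in the centre.
prev_frame = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
frame = [[10, 10, 10], [10, 200, 10], [10, 12, 10]]
print(frame_difference(prev_frame, frame))
# -> [[0, 0, 0], [0, 1, 0], [0, 0, 0]]; the small change at the bottom is treated as noise
```

The threshold trades sensitivity against noise: too low and sensor noise appears as motion, too high and slow or low-contrast objects are missed.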

Applications

Human motion analysis

In the areas of medicine, sports,[5] video surveillance, physical therapy,[6] and kinesiology,[7] human motion analysis has become an investigative and diagnostic tool. See the section on motion capture for more detail on the technologies. Human motion analysis can be divided into three categories: human activity recognition, human motion tracking, and analysis of body and body part movement.

Human activity recognition is most commonly used for video surveillance, specifically automatic motion monitoring for security purposes. Most efforts in this area rely on state-space approaches, in which sequences of static postures are statistically analyzed and compared to modeled movements. Template matching is an alternative method in which static shape patterns are compared to pre-existing prototypes.[8]
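
Template matching, in its simplest form, can be sketched as nearest-prototype classification: a binary silhouette extracted from a frame is compared with each stored prototype, and the prototype with the smallest mismatch wins. The sketch assumes equal-sized binary silhouettes and uses the number of differing pixels as the distance; the prototype names and shapes are hypothetical.

```python
def mismatch(a, b):
    """Number of pixels where two equal-sized binary silhouettes differ."""
    return sum(pa != pb for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b))


def match_posture(silhouette, prototypes):
    """Return the label of the prototype closest to the silhouette."""
    return min(prototypes, key=lambda label: mismatch(silhouette, prototypes[label]))


# Hypothetical 4x3 prototypes for two static postures.
prototypes = {
    "standing": [[0, 1, 0],
                 [0, 1, 0],
                 [0, 1, 0],
                 [0, 1, 0]],
    "arms_out": [[0, 1, 0],
                 [1, 1, 1],
                 [0, 1, 0],
                 [0, 1, 0]],
}

# An observed silhouette: "arms_out" with one noisy pixel.
observed = [[0, 1, 0],
            [1, 1, 1],
            [0, 1, 0],
            [1, 1, 0]]
print(match_posture(observed, prototypes))  # arms_out
```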

Human motion tracking can be performed in two or three dimensions. Depending on the complexity of analysis, representations of the human body range from basic stick figures to volumetric models. Tracking relies on the correspondence of image features between consecutive frames of video, taking into consideration information such as position, color, shape, and texture. Edge detection can be performed by comparing the color and/or contrast of adjacent pixels, looking specifically for discontinuities or rapid changes.[9] Three-dimensional tracking is fundamentally identical to two-dimensional tracking, with the added factor of spatial calibration.[8]
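
Frame-to-frame correspondence can be sketched as a greedy nearest-neighbour assignment: each feature from one frame is paired with the most similar unmatched feature in the next, where similarity combines position and colour. The feature layout (x, y, r, g, b), the weight `color_weight` and the gating cost `max_cost` are illustrative assumptions; more robust trackers add motion prediction or a globally optimal assignment.

```python
def match_features(prev_features, next_features, color_weight=0.1, max_cost=100.0):
    """Greedily pair features (x, y, r, g, b) between two consecutive frames.

    Returns a dict mapping indices in prev_features to indices in next_features.
    color_weight and max_cost are assumed tuning parameters.
    """
    candidates = []
    for i, (x0, y0, *c0) in enumerate(prev_features):
        for j, (x1, y1, *c1) in enumerate(next_features):
            position_cost = (x1 - x0) ** 2 + (y1 - y0) ** 2
            color_cost = sum((a - b) ** 2 for a, b in zip(c0, c1))
            candidates.append((position_cost + color_weight * color_cost, i, j))

    matches = {}
    used_next = set()
    # Accept the cheapest pairs first, skipping features that are already matched.
    for cost, i, j in sorted(candidates):
        if cost > max_cost:
            break
        if i not in matches and j not in used_next:
            matches[i] = j
            used_next.add(j)
    return matches


# Two features: a red one moving right and a blue one moving down.
frame_a = [(10, 10, 255, 0, 0), (12, 10, 0, 0, 255)]
frame_b = [(12, 14, 0, 0, 255), (13, 10, 250, 0, 0)]
print(match_features(frame_a, frame_b))  # {0: 1, 1: 0}
```

Position alone would pair the blue feature at (12, 10) with the red one at (13, 10); including colour resolves the ambiguity, which is why the text lists colour, shape and texture alongside position.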

Motion analysis of body parts is critical in the medical field. In postural and gait analysis, joint angles are used to track the location and orientation of body parts. Gait analysis is also used in sports to optimize athletic performance or to identify motions that may cause injury or strain. Tracking software that does not require the use of optical markers is especially important in these fields, where the use of markers may impede natural movement.[8][10]
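
A joint angle can be computed from the 2D positions of three tracked landmarks, for example hip, knee and ankle for the knee angle in gait analysis. The sketch below assumes image coordinates delivered by some marker-based or markerless tracker (the landmark names are illustrative) and returns the angle at the middle landmark in degrees, so 180° corresponds to a fully extended joint.

```python
import math


def joint_angle(proximal, joint, distal):
    """Angle in degrees at `joint` between the segments to `proximal` and `distal`."""
    ax, ay = proximal[0] - joint[0], proximal[1] - joint[1]
    bx, by = distal[0] - joint[0], distal[1] - joint[1]
    # atan2 of the cross and dot products is numerically robust for all angles.
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    return math.degrees(abs(math.atan2(cross, dot)))


hip, knee, ankle = (0.0, 2.0), (0.0, 1.0), (0.0, 0.0)
print(round(joint_angle(hip, knee, ankle), 6))  # 180.0 (straight leg)
print(round(joint_angle((0.0, 1.0), (0.0, 0.0), (1.0, 0.0)), 6))  # 90.0
```

Evaluating this per frame yields a joint-angle curve over time, which is the kind of signal used in postural and gait analysis.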

Motion analysis in manufacturing

Motion analysis is also applicable in the manufacturing process.[11] Using high-speed video cameras and motion analysis software, one can monitor and analyze assembly lines and production machines to detect inefficiencies or malfunctions. Manufacturers of sports equipment, such as baseball bats and hockey sticks, also use high-speed video analysis to study the impact of projectiles. An experimental setup for this type of study typically uses a triggering device, external sensors (e.g., accelerometers, strain gauges), data acquisition modules, a high-speed camera, and a computer for storing the synchronized video and data. Motion analysis software calculates parameters such as distance, velocity, acceleration, and deformation angles as functions of time. This data is then used to design equipment for optimal performance.[12]
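
Velocities and accelerations of this kind can be derived from tracked positions by numerical differentiation. The sketch below uses central differences and assumes positions already converted to metres through a spatial calibration and a known frame rate; the track and the 1000 frames per second are hypothetical values. Real measurements are usually smoothed first, because differentiation amplifies tracking noise.

```python
def central_differences(values, dt):
    """First derivative at interior samples, using (v[i+1] - v[i-1]) / (2 dt)."""
    return [(values[i + 1] - values[i - 1]) / (2.0 * dt) for i in range(1, len(values) - 1)]


def kinematics(positions, frame_rate):
    """Velocity and acceleration series from positions sampled at frame_rate (Hz)."""
    dt = 1.0 / frame_rate
    velocity = central_differences(positions, dt)
    acceleration = central_differences(velocity, dt)
    return velocity, acceleration


# Hypothetical track: an object accelerating at 9.81 m/s^2 from rest,
# filmed at an assumed 1000 frames per second.
frame_rate = 1000.0
positions = [0.5 * 9.81 * (n / frame_rate) ** 2 for n in range(50)]
velocity, acceleration = kinematics(positions, frame_rate)
print(round(acceleration[10], 3))  # 9.81
```

Each differentiation drops one sample at each end, so the acceleration series is four samples shorter than the position series.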

Additional applications for motion analysis

The object and feature detecting capabilities of motion analysis software can be applied to count and track particles, such as bacteria,[13][14] viruses,[15] "ionic polymer-metal composites",[16][17] micron-sized polystyrene beads,[18] aphids,[19] and projectiles.[20]
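
Counting particles in a segmented frame amounts to labelling connected groups of foreground pixels. The sketch below assumes a binary image (for example produced by thresholding) and 4-connectivity, and returns one centroid per particle; the centroids could then be linked across frames to track the particles. The function name and test frame are illustrative.

```python
from collections import deque


def find_particles(binary):
    """Return the centroid (row, col) of each 4-connected blob of 1-pixels."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill collects every pixel of this particle.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and binary[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            cy = sum(p[0] for p in pixels) / len(pixels)
            cx = sum(p[1] for p in pixels) / len(pixels)
            centroids.append((cy, cx))
    return centroids


frame = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
particles = find_particles(frame)
print(len(particles))  # 2
print(particles)       # [(0.5, 0.5), (1.5, 4.0)]
```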

References

- ↑ Munsch, Marie. "Lateral Glazing Characterization Under Head Impact: Experimental and Numerical Investigation". http://www-nrd.nhtsa.dot.gov/pdf/esv/esv21/09-0184.pdf. Retrieved 20 December 2013.

- ↑ "Handgun Wounding Effects Due to Bullet Rotational Velocity". Archived from the original on 22 December 2013. https://web.archive.org/web/20131222013624/http://www.brassfetcher.com/Wounding%20Theory/Handgun%20Wounding%20Effects%20due%20to%20Rotational%20Velocity.pdf. Retrieved 18 February 2013.

- ↑ Anderson, Christopher V. (2010). "Ballistic tongue projection in chameleons maintains high performance at low temperature". Proceedings of the National Academy of Sciences of the United States of America 107 (12): 5495–9. doi:10.1073/pnas.0910778107. PMID 20212130. PMC 2851764. Bibcode: 2010PNAS..107.5495A. http://www.pnas.org/content/107/12/5495.full.pdf. Retrieved 2 June 2010.

- ↑ Mogi, Toshio. "Self-ignition and flame propagation of high-pressure hydrogen jet during sudden discharge from a pipe". International Journal of Hydrogen Energy 34 (2009): 5810–5816. http://www.me.aoyama.ac.jp/~aerospacelab/Publications/03mogi.pdf. Retrieved 28 April 2009.

- ↑ Payton, Carl J. "Biomechanical Evaluation of Movement in Sport and Exercise". http://8pic.ir/images/gyep7tzyfkryix6vlu.pdf. Retrieved 8 January 2014.

- ↑ "Markerless Motion Capture + Motion Analysis | EuMotus". https://www.eumotus.com.

- ↑ Hedrick, Tyson L. (2011). "Morphological and kinematic basis of the hummingbird flight stroke: scaling of flight muscle transmission ratio". Proceedings. Biological Sciences 279 (1735): 1986–1992. doi:10.1098/rspb.2011.2238. PMID 22171086.

- ↑ Aggarwal, JK and Q Cai. "Human Motion Analysis: A Review." Computer Vision and Image Understanding 73, no. 3 (1999): 428-440.

- ↑ Fan, J, EA El-Kwae, M-S Hacid, and F Liang. "Novel tracking-based moving object extraction algorithm." J Electron Imaging 11, 393 (2002).

- ↑ Green, RD, L Guan, and JA Burne. "Video analysis of gait for diagnosing movement disorders." J Electron Imaging 9, 16 (2000).

- ↑ Longana, M.L. "High-strain rate imaging & full-field optical techniques for material characterization". http://www.southampton.ac.uk/damtol/images/Longana_NCCnov2012.pdf. Retrieved 22 November 2012.

- ↑ Masi, CG. "Vision improves bat performance." Vision Systems Design, June 2006.

- ↑ Borrok, M. J., et al. (2009). Structure-based design of a periplasmic binding protein antagonist that prevents domain closure. ACS Chemical Biology, 4, 447-456.

- ↑ Borrok, M. J., Kolonko, E. M., and Kiessling, L. L. (2008). Chemical probes of bacterial signal transduction reveal that repellents stabilize and attractants destabilize the chemoreceptor array. ACS Chemical Biology, 3, 101-109.

- ↑ Shopov, A. et al. "Improvements in image analysis and fluorescence microscopy to discriminate and enumerate bacteria and viruses in aquatic samples, or cells, and to analyze sprays and fragmenting debris." Aquatic Microbial Ecology 22 (2000): 103-110.

- ↑ Park, J. K., and Moore, R. B. (2009). Influence of ordered morphology on the anisotropic actuation in uniaxially oriented electroactive polymer systems. ACS Applied Materials & Interfaces, 1, 697-702.

- ↑ Phillips, A. K., and Moore, R. B. (2005). Ionic actuators based on novel sulfonated ethylene vinyl alcohol copolymer membranes. Polymer, 46, 7788-7802.

- ↑ Nott, M. (2005). Teaching Brownian motion: demonstrations and role play. School Science Review, 86, 18-28.

- ↑ Kay, S., and Steinkraus, D. C. (2005). Effect of Neozygites fresenii infection on cotton aphid movement. AAES Research Series 543, 245-248. Fayetteville, AR: Arkansas Agricultural Experiment Station. Available from http://arkansasagnews.uark.edu/543-43.pdf

- ↑ Sparks, C. et al. "Comparison and Validation of Smooth Particle Hydrodynamics (SPH) and Coupled Euler Lagrange (CEL) Techniques for Modeling Hydrodynamic Ram." 46th AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference, Austin, Texas, Apr. 18-21, 2005.