Chroma feature

In Western music, the term chroma feature or chromagram closely relates to the twelve different pitch classes. Chroma-based features, which are also referred to as "pitch class profiles", are a powerful tool for analyzing music whose pitches can be meaningfully categorized (often into twelve categories) and whose tuning approximates the equal-tempered scale. One main property of chroma features is that they capture harmonic and melodic characteristics of music while being robust to changes in timbre and instrumentation.

Definition

The underlying observation is that humans perceive two musical pitches as similar in color if they differ by an octave. Based on this observation, a pitch can be separated into two components, which are referred to as tone height and chroma.[1] Assuming the equal-tempered scale, one considers twelve chroma values represented by the set

- {C, C♯, D, D♯, E, F, F♯, G, G♯, A, A♯, B}

that consists of the twelve pitch spelling attributes used in Western music notation. Note that in the equal-tempered scale, different pitch spellings such as C♯ and D♭ refer to the same chroma. Enumerating the chroma values, one can identify the set of chroma values with the set of integers {1, 2, ..., 12}, where 1 refers to chroma C, 2 to C♯, and so on. A pitch class is defined as the set of all pitches that share the same chroma. For example, using scientific pitch notation, the pitch class corresponding to the chroma C is the set

- {..., C−2, C−1, C0, C1, C2, C3, ...}

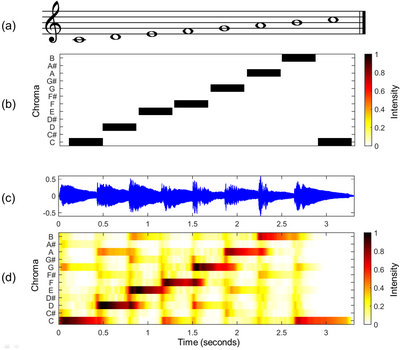

consisting of all pitches separated by an integer number of octaves. Given a music representation (e.g. a musical score or an audio recording), the main idea of chroma features is to aggregate for a given local time window (e.g. specified in beats or in seconds) all information that relates to a given chroma into a single coefficient. Shifting the time window across the music representation results in a sequence of chroma features each expressing how the representation's pitch content within the time window is spread over the twelve chroma bands. The resulting time-chroma representation is also referred to as chromagram. The figure above shows chromagrams for a C-major scale, once obtained from a musical score and once from an audio recording. Because of the close relation between the terms chroma and pitch class, chroma features are also referred to as pitch class profiles.
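For symbolic data, the definition can be made concrete in a few lines. The following Python sketch rests on two assumptions not stated in the article: pitches are given as MIDI note numbers (where 60 denotes C4 in scientific pitch notation), and a score is a list of hypothetical `(onset, duration, pitch)` triples measured in beats. It maps each pitch to its chroma index in {1, ..., 12} and then aggregates, per time window, the sounding duration of each chroma. Weighting by duration is only one possible choice, and the helper names `chroma_of` and `score_chromagram` are illustrative.

```python
import math

# Chroma names in the enumeration order used above (index 0 <-> chroma 1 = C).
CHROMA_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]


def chroma_of(midi_pitch: int) -> int:
    """Return the chroma index in {1, ..., 12} (1 = C) of a MIDI pitch number.

    Pitches that differ by whole octaves (multiples of 12 semitones) share a chroma.
    """
    return midi_pitch % 12 + 1


def score_chromagram(notes, window=1.0):
    """Aggregate (onset, duration, midi_pitch) notes into a chromagram.

    Returns one 12-element list per time window; entry k - 1 holds the total
    time that chroma k sounds within that window (hypothetical weighting).
    """
    end = max(onset + dur for onset, dur, _ in notes)
    num_windows = math.ceil(end / window)
    chromagram = [[0.0] * 12 for _ in range(num_windows)]
    for onset, dur, pitch in notes:
        k = chroma_of(pitch) - 1
        for w in range(num_windows):
            lo, hi = w * window, (w + 1) * window
            # Length of the overlap between the note and the current window.
            overlap = min(hi, onset + dur) - max(lo, onset)
            if overlap > 0:
                chromagram[w][k] += overlap
    return chromagram


# Usage: a C-major scale from C4 to C5, one note per beat.
scale = [60, 62, 64, 65, 67, 69, 71, 72]
notes = [(float(i), 1.0, p) for i, p in enumerate(scale)]
chromagram = score_chromagram(notes, window=1.0)
for frame in chromagram:
    print(CHROMA_NAMES[frame.index(max(frame))])  # C, D, E, F, G, A, B, C
```

Note that the first and last windows both fall into the C band even though C4 and C5 lie an octave apart, which is exactly the octave identification the chroma definition describes.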

Applications

Because they identify pitches that differ by an octave, chroma features show a high degree of robustness to variations in timbre and closely correlate with the musical aspect of harmony. This is why chroma features are a well-established tool for processing and analyzing music data.[2] For example, nearly every chord recognition procedure relies on some kind of chroma representation.[3][4][5][6] Chroma features have also become the de facto standard for tasks such as music alignment and synchronization[7][8] as well as audio structure analysis.[9] Finally, chroma features have turned out to be a powerful mid-level feature representation in content-based audio retrieval tasks such as cover song identification,[10][11] audio matching[12][13][14][15] and audio hashing.[16][17]
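The close link between chroma and harmony can be illustrated with a minimal template-matching chord recognizer in Python with NumPy. This is a simple textbook-style baseline, not the method of any of the cited systems. Each of the 24 major and minor triads is represented by a binary 12-dimensional template, obtained by cyclically shifting a C-major or C-minor template, and a chroma vector is labeled with the template of highest cosine similarity. Here chroma indices run from 0 (C) to 11 (B), and the label format (`"C"`, `"Am"`) and function names are arbitrary choices for this sketch.

```python
import numpy as np

PITCH_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]


def chord_templates():
    """Return labels and a (24, 12) array of binary major/minor triad templates."""
    major = np.zeros(12)
    major[[0, 4, 7]] = 1.0  # root, major third, perfect fifth
    minor = np.zeros(12)
    minor[[0, 3, 7]] = 1.0  # root, minor third, perfect fifth
    labels, templates = [], []
    for base, suffix in ((major, ""), (minor, "m")):
        for root in range(12):
            # np.roll shifts the C-rooted template so that it is rooted at `root`.
            templates.append(np.roll(base, root))
            labels.append(PITCH_NAMES[root] + suffix)
    return labels, np.array(templates)


LABELS, TEMPLATES = chord_templates()


def recognize_chord(chroma):
    """Return the label of the triad template most similar (cosine) to `chroma`."""
    x = np.asarray(chroma, dtype=float)
    norms = np.linalg.norm(TEMPLATES, axis=1) * np.linalg.norm(x)
    sims = TEMPLATES @ x / np.maximum(norms, 1e-12)
    return LABELS[int(np.argmax(sims))]


# Usage: energy in C, E and G, plus a little noise in D.
c_major = np.zeros(12)
c_major[[0, 4, 7]] = [1.0, 0.8, 0.9]
c_major[2] = 0.1
print(recognize_chord(c_major))              # C
print(recognize_chord(np.roll(c_major, 2)))  # D
```

Because transposing music by a number of semitones corresponds to a cyclic shift of its chroma vectors, the same `np.roll` operation also offers a simple way to compare material played in different keys, which is one reason chroma is attractive for cover song identification.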

Computation of audio chromagrams

There are many ways of converting an audio recording into a chromagram. For example, the conversion may be performed either by using short-time Fourier transforms in combination with binning strategies[18][19][20] or by employing suitable multirate filter banks.[12] Furthermore, the properties of chroma features can be significantly changed by introducing suitable pre- and post-processing steps that modify spectral, temporal, and dynamical aspects. This leads to a large number of chroma variants, which may behave quite differently in the context of a specific music analysis scenario.[21]
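The first of these approaches, a short-time Fourier transform followed by binning, can be sketched in Python with NumPy. This is a simplified illustration under stated assumptions, not the procedure of any cited reference. Each STFT frequency bin is assigned to the chroma of its nearest equal-tempered pitch, assuming A4 = 440 Hz and the MIDI convention that 69 denotes A4; the power of all bins sharing a chroma is summed; and two example post-processing steps are applied, logarithmic compression and per-frame Euclidean normalization. The function name and the parameters `n_fft`, `hop`, `f_min`, `f_max` and `gamma`, with their defaults, are illustrative assumptions.

```python
import numpy as np


def stft_chromagram(x, sr, n_fft=4096, hop=2048, f_min=30.0, f_max=5000.0,
                    f_ref=440.0, gamma=100.0):
    """Return a (12, n_frames) chromagram of signal `x` sampled at `sr` Hz.

    Row 0 corresponds to C, row 9 to A. Assumes len(x) >= n_fft.
    """
    x = np.asarray(x, dtype=float)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # Windowed frames and their power spectra, shape (n_frames, n_fft // 2 + 1).
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)

    # Keep bins inside [f_min, f_max]; this also drops the 0 Hz bin.
    keep = (freqs >= f_min) & (freqs <= f_max)
    # Nearest MIDI pitch of each kept bin (69 = A4 = f_ref), then its chroma 0..11.
    midi = np.round(69 + 12 * np.log2(freqs[keep] / f_ref)).astype(int)
    chroma_of_bin = midi % 12
    power = power[:, keep]

    # Binning: sum the power of all bins that share a chroma.
    chroma = np.zeros((12, n_frames))
    for c in range(12):
        chroma[c] = power[:, chroma_of_bin == c].sum(axis=1)

    # Post-processing: logarithmic compression, then unit Euclidean norm per frame.
    chroma = np.log1p(gamma * chroma)
    norms = np.linalg.norm(chroma, axis=0, keepdims=True)
    return chroma / np.maximum(norms, 1e-12)


# Usage: one second of a 440 Hz sine tone (A4).
sr = 22050
t = np.arange(sr) / sr
C = stft_chromagram(np.sin(2 * np.pi * 440.0 * t), sr)
print(C.shape)               # (12, 9)
print(np.argmax(C, axis=0))  # every frame peaks at index 9 (A)
```

Swapping the compression, the normalization, or the way bins are mapped to pitches changes the resulting features, which is the kind of design freedom that produces the many chroma variants described above.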

See also

- Time-frequency analysis

- Time-frequency analysis for music signal

- Pitch (music)

- Music theory

References

- [1] Shepard, Roger N. (1964). "Circularity in judgments of relative pitch". Journal of the Acoustical Society of America 36 (12): 2346–2353. doi:10.1121/1.1919362. Bibcode: 1964ASAJ...36.2346S.

- [2] Müller, Meinard (2015). Fundamentals of Music Processing. Springer. doi:10.1007/978-3-319-21945-5. ISBN 978-3-319-21944-8. http://www.music-processing.de.

- [3] Cho, Taemin; Bello, Juan Pablo (2014). "On the Relative Importance of Individual Components of Chord Recognition Systems". IEEE/ACM Transactions on Audio, Speech, and Language Processing 22 (2): 477–492. doi:10.1109/TASLP.2013.2295926.

- [4] Mauch, Matthias; Dixon, Simon (2010). "Simultaneous estimation of chords and musical context from audio". IEEE Transactions on Audio, Speech, and Language Processing 18 (6): 1280–1289. doi:10.1109/TASL.2009.2032947.

- [5] Fujishima, Takuya (1999). "Realtime Chord Recognition of Musical Sound: a System Using Common Lisp Music". Proceedings of the International Computer Music Conference: 464–467.

- [6] Jiang, Nanzhu; Grosche, Peter; Konz, Verena; Müller, Meinard (2011). "Analyzing Chroma Feature Types for Automated Chord Recognition". Proceedings of the AES Conference on Semantic Audio. https://www.audiolabs-erlangen.de/content/05-fau/professor/00-mueller/03-publications/2011_JiangGroscheKonzMueller_ChordRecognitionEvaluation_AES42-Ilmenau.pdf.

- [7] Hu, Ning; Dannenberg, Roger B.; Tzanetakis, George (2003). "Polyphonic Audio Matching and Alignment for Music Retrieval". Proceedings of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics.

- [8] Ewert, Sebastian; Müller, Meinard; Grosche, Peter (2009). "High resolution audio synchronization using chroma onset features". 2009 IEEE International Conference on Acoustics, Speech and Signal Processing. pp. 1869–1872. doi:10.1109/ICASSP.2009.4959972. ISBN 978-1-4244-2353-8. https://www.audiolabs-erlangen.de/content/05-fau/professor/00-mueller/03-publications/2009_EwertMuellerGrosche_HighResAudioSync_ICASSP.pdf.

- [9] Paulus, Jouni; Müller, Meinard; Klapuri, Anssi (2010). "Audio-based Music Structure Analysis". Proceedings of the International Conference on Music Information Retrieval: 625–636. http://ismir2010.ismir.net/proceedings/ismir2010-107.pdf.

- [10] Ellis, Daniel P.W.; Poliner, Graham (2007). "Identifying 'Cover Songs' with Chroma Features and Dynamic Programming Beat Tracking". Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing.

- [11] Serrà, Joan; Gómez, Emilia; Herrera, Perfecto; Serra, Xavier (2008). "Chroma Binary Similarity and Local Alignment Applied to Cover Song Identification". IEEE Transactions on Audio, Speech, and Language Processing 16 (6): 1138–1151. doi:10.1109/TASL.2008.924595.

- [12] Müller, Meinard; Kurth, Frank; Clausen, Michael (2005). "Audio Matching via Chroma-Based Statistical Features". Proceedings of the International Conference on Music Information Retrieval: 288–295. http://ismir2005.ismir.net/proceedings/1019.pdf.

- [13] Kurth, Frank; Müller, Meinard (2008). "Efficient Index-Based Audio Matching". IEEE Transactions on Audio, Speech, and Language Processing 16 (2): 382–395. doi:10.1109/TASL.2007.911552.

- [14] Müller, Meinard (2015). "Music Synchronization". In Fundamentals of Music Processing, chapter 3, pp. 115–166. Springer. ISBN 978-3-319-21944-8. http://www.music-processing.de.

- [15] Kurth, Frank; Müller, Meinard (2008). "Efficient Index-Based Audio Matching". IEEE Transactions on Audio, Speech, and Language Processing 16 (2): 382–395. doi:10.1109/TASL.2007.911552.

- [16] Yu, Yi; Crucianu, Michel; Oria, Vincent; Damiani, Ernesto (2010). "Combining multi-probe histogram and order-statistics based LSH for scalable audio content retrieval". Proceedings of the 18th ACM International Conference on Multimedia (MM '10). pp. 381–390. doi:10.1145/1873951.1874004. ISBN 9781605589336.

- [17] Yu, Yi; Crucianu, Michel; Oria, Vincent; Chen, Lei (2009). "Local summarization and multi-level LSH for retrieving multi-variant audio tracks". Proceedings of the 17th ACM International Conference on Multimedia (MM '09). pp. 341–350. doi:10.1145/1631272.1631320. ISBN 9781605586083.

- [18] Bartsch, Mark A.; Wakefield, Gregory H. (2005). "Audio thumbnailing of popular music using chroma-based representations". IEEE Transactions on Multimedia 7 (1): 96–104. doi:10.1109/TMM.2004.840597.

- [19] Gómez, Emilia (2006). "Tonal Description of Music Audio Signals". PhD thesis, UPF, Barcelona, Spain.

- [20] Müller, Meinard (2015). "Music Synchronization". In Fundamentals of Music Processing, chapter 3, pp. 115–166. Springer. ISBN 978-3-319-21944-8. http://www.music-processing.de.

- [21] Müller, Meinard; Ewert, Sebastian (2011). "Chroma Toolbox: MATLAB Implementations For Extracting Variants of Chroma-Based Audio Features". Proceedings of the International Society for Music Information Retrieval Conference: 215–220. https://www.audiolabs-erlangen.de/content/05-fau/professor/00-mueller/03-publications/2011_MuellerEwert_ChromaToolbox_ISMIR.pdf.

External links

- Chroma Toolbox: free MATLAB implementations of various types of pitch-based and chroma-based audio features

- Harmonic Pitch Class Profile plugin
