Dragon protocol

The Dragon Protocol[1] is an update-based cache coherence protocol used in multiprocessor systems. Write propagation is performed by directly updating the cached copies held by the other processors. Update-based protocols such as Dragon perform efficiently when a write to a cache block is followed by several reads by other processors, since the updated block is already available in the caches of those processors.

States

Each cache block resides in one of four states: exclusive-clean, shared-clean, shared-modified and modified.

- Exclusive-clean (E): This means that the cache block was first fetched by the current processor and has not been accessed by any other processor since.

- Shared clean (Sc): This means that the cache block may be present in the caches of multiple processors, and that the current processor is not the last one to have written the block. States E and Sc are kept separate so that reads and writes to blocks that are not shared do not induce bus transactions and slow down execution. Unshared blocks are common in single-threaded programs.

- Shared modified (Sm): This means that the block may be present in the caches of multiple processors, and the current processor is the last one to have modified it. Consequently, the current processor is called the owner of the block. Unlike in invalidation protocols, the block does not need to be up to date in main memory, only in the owner's cache. It is the owner's responsibility to update main memory when the cache block is evicted.

- Modified (M): This means that only one processor has the block in its cache, and that it has modified the value since the block was brought in from memory.

For any given pair of caches, the permitted combinations of states for the same cache block are as follows (states abbreviated in the order above; ✓ marks a pair that may coexist, ✗ a pair that may not):

| | E | Sc | Sm | M |
|---|---|---|---|---|
| E | ✗ | ✗ | ✗ | ✗ |
| Sc | ✗ | ✓ | ✓ | ✗ |
| Sm | ✗ | ✓ | ✗ | ✗ |
| M | ✗ | ✗ | ✗ | ✗ |
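The state definitions above imply which pairs of states two different caches may hold for the same block: E and M require a sole copy, and there can be only one owner in Sm. A minimal sketch of that check follows; the names `State`, `COMPATIBLE` and `compatible` are illustrative and not part of the protocol.

```python
from enum import Enum


class State(Enum):
    E = "exclusive-clean"
    SC = "shared-clean"
    SM = "shared-modified"
    M = "modified"


# Unordered pairs of states that two different caches may hold
# for the same block (derived from the state definitions).
COMPATIBLE = {
    frozenset({State.SC}),            # clean sharers alongside each other
    frozenset({State.SC, State.SM}),  # one owner plus clean sharers
}


def compatible(a: State, b: State) -> bool:
    """Return True if two distinct caches may hold the block in states a and b."""
    return frozenset({a, b}) in COMPATIBLE
```

For example, `compatible(State.SM, State.SC)` holds, while `compatible(State.SM, State.SM)` does not, since only one cache can be the owner.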

Transactions

There are four processor transactions and three bus transactions.

Processor Read (PrRd): This happens when a processor read hits a block that is present in its cache.

Processor Write (PrWr): This happens when a processor write hits a block that is present in its cache. The processor thereby becomes the latest one to have updated the block.

Processor Read Miss (PrRdMiss): This happens when the processor fails to read a cache block from its cache, and needs to fetch the block from either the memory or another cache.

Processor Write Miss (PrWrMiss): This happens when the processor tries to write to a block that is not in its cache, and needs to fetch the block from memory or another cache and then write to it. The processor again becomes the latest one to have updated the block.

Bus Read (BusRd): This happens when a processor requests the bus to fetch the latest value of the cache block, whether it be from the main memory or another processor’s cache.

Flush: This happens when a processor places an entire cache block on the bus, so that main memory reflects the changes the processor has made to the cached block.

Bus Update (BusUpd): This happens when a processor modifies a cache block and the other processors holding it need their copies updated. It is unique to write-update protocols. BusUpd takes less time than a Flush, since writes to caches are faster than writes to memory. Note that a cache cannot update its local copy of a block first and only then request the bus to send out the update. If that were allowed, two caches could each update their local copy independently and then request the bus; each would then observe the two writes in a different order, which would violate sequential consistency.

A shared line is also required to indicate whether a given cache block is present in multiple caches. It is needed because a cache can evict the block without notifying the other caches. The shared line helps reduce memory and bus transactions in cases where the block is present in only one cache, so that a bus update is not required. Such a dedicated line to detect sharing is found in other write-update protocols, such as the Firefly protocol, and can be implemented on bus standards such as Futurebus (IEEE standard P896.1).[2]
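The role of the shared line can be illustrated with a small sketch. It assumes a hypothetical model in which each cache is a Python set of block addresses and the shared line is the logical OR of the snoop responses of all caches other than the requester; the function name `shared_line` is illustrative only.

```python
def shared_line(caches, requester, block):
    """Model the shared line as a wired-OR of snoop responses.

    caches    -- list of sets; caches[i] holds the block addresses cached by processor i
    requester -- index of the cache that issued the bus transaction
    block     -- address of the block on the bus
    Returns True if any cache other than the requester holds the block.
    """
    return any(block in cache
               for i, cache in enumerate(caches)
               if i != requester)


caches = [{0x40}, {0x40, 0x80}, set()]
before = shared_line(caches, 0, 0x40)   # cache 1 also holds block 0x40
caches[1].discard(0x40)                 # cache 1 evicts the block silently
after = shared_line(caches, 0, 0x40)    # nobody else holds it any more
```

Because the eviction is not announced, cache 0 only learns that it is the sole holder the next time it samples the line during a bus transaction.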

Transitions

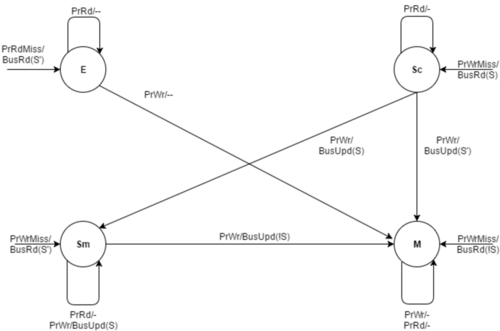

Processor-initiated transitions

Based on the current state of the block and the transaction initiated by the processor, the cache block undergoes one of the following state transitions:

- When a processor read-miss (PrRdMiss) occurs and the cache block is not shared, the state transitions to Exclusive.

- When a processor read-miss (PrRdMiss) occurs and the cache block is shared, the state transitions to Shared Clean.

- When a processor write-miss (PrWrMiss) occurs and the cache block is shared, the state transitions to Shared Modified and the processor becomes the owner.

- When a processor write-miss (PrWrMiss) occurs and the cache block is not shared, the state transitions to Modified.

- When there is a processor read (PrRd) hit, the cache block keeps both its state and its value, because a read hit does not generate any bus transactions.

- If the cache block is in the Modified state and the processor writes (PrWr) the block, there is no transition, as the block is not being shared.

- When the cache block is in Shared Modified state, and a processor writes (PrWr), but the shared line is not asserted, the state transitions to Modified.

- If the cache block is in the Shared Modified state when a write (PrWr) occurs and the shared line is asserted, a bus update (BusUpd) is generated to update the copies in the other caches, and the state remains Shared Modified.

- If the cache block is in the Shared Clean state when a write (PrWr) occurs and the shared line is asserted, a bus update (BusUpd) is generated to update the copies in the other caches, and the state changes to Shared Modified.

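- If the cache block is in the Shared Clean state when a write (PrWr) occurs but the shared line is not asserted, the state transitions to Modified. The bus update issued for the write is what samples the shared line; since no other cache responds, no copies are updated and later writes generate no bus transactions.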

- When the block is in Exclusive state, and the processor writes (PrWr) to it, it will be changed to the Modified state.
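The processor-initiated transitions listed above can be condensed into a single next-state function. This is a minimal sketch rather than a reference implementation: states are plain strings, `None` stands for a block that is not cached, and the function name and signature are assumptions made for illustration.

```python
def next_state_on_processor_op(state, op, shared):
    """Return the next state of a block after a processor-side event.

    state  -- "E", "Sc", "Sm", "M", or None if the block is not in the cache
    op     -- "PrRd", "PrWr", "PrRdMiss" or "PrWrMiss"
    shared -- True if the shared line is asserted for this block
    """
    if op == "PrRdMiss":
        return "Sc" if shared else "E"
    if op == "PrWrMiss":
        return "Sm" if shared else "M"
    if op == "PrRd":
        return state                      # a read hit never changes the state
    if op == "PrWr":
        if state in ("E", "M"):
            return "M"                    # sole copy: write locally
        if state in ("Sc", "Sm"):
            return "Sm" if shared else "M"
    raise ValueError(f"unsupported combination: {state!r}, {op!r}")
```

For instance, a write hit in Sc with the shared line asserted makes this cache the owner (`"Sm"`), while the same write with the line deasserted yields `"M"`.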

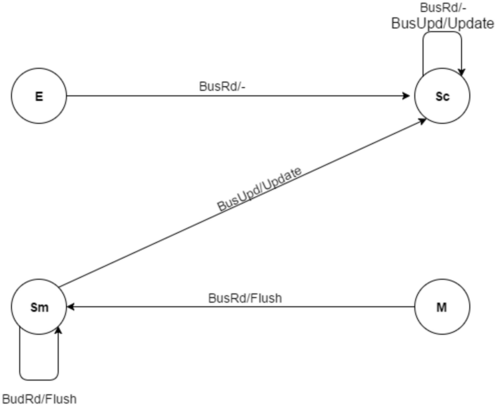

Bus-initiated transitions

Based on the current state of the block and the transaction initiated by the bus, the cache block undergoes one of the following state transitions:

- If the cache block is in Modified, and a Bus Read (BusRd) is issued, a Flush is issued to update the main memory and the state transitions to Shared Modified, as the block is now in multiple caches.

- If the cache block is in the Shared Modified state and a bus read (BusRd) is issued, a Flush is issued to update the main memory, and the state remains the same.

- If the cache block is in the Shared Modified state and a bus update (BusUpd) transaction is issued by another cache, the local copy is updated and the state transitions to Shared Clean, since the cache that issued the update becomes the new owner.

- If the cache block is in Shared Clean state, and it receives a bus read (BusRd) or a bus update (BusUpd) it continues to retain its state as the value is still shared. However, in the case of an Update, it will update the value in the cache block.

- If the cache block is in the Exclusive state and a bus read (BusRd) is observed, the state transitions to Shared Clean, as the block no longer resides in only one cache.
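The bus-initiated transitions above fit naturally into a lookup table keyed by the current state and the snooped transaction. The sketch below is illustrative: the table name `SNOOP_TABLE`, the function `snoop`, and the action labels `"Flush"` and `"Update"` are naming assumptions, and combinations not listed above are treated as errors.

```python
# (current state, snooped bus transaction) -> (next state, action taken by this cache)
SNOOP_TABLE = {
    ("M",  "BusRd"):  ("Sm", "Flush"),   # supply the block, become owner of a shared block
    ("Sm", "BusRd"):  ("Sm", "Flush"),   # owner supplies the block, stays owner
    ("Sm", "BusUpd"): ("Sc", "Update"),  # another cache wrote: take the value, give up ownership
    ("Sc", "BusRd"):  ("Sc", None),      # still shared, nothing to do
    ("Sc", "BusUpd"): ("Sc", "Update"),  # refresh the local copy
    ("E",  "BusRd"):  ("Sc", None),      # no longer the only holder
}


def snoop(state, bus_op):
    """Return (next_state, action) for a snooped bus transaction."""
    try:
        return SNOOP_TABLE[(state, bus_op)]
    except KeyError:
        raise ValueError(f"transition not defined for {state!r} on {bus_op!r}") from None
```

A table keeps the sketch close to the state diagram: each bullet above corresponds to one or two entries.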

Low Level Design Choices

The processor with the cache block in Sm state is responsible for updating main memory when the cache block is replaced. But if the main memory is updated whenever a bus update transaction occurs, there is no need for separate Sm and Sc states, and the protocol can afford a single shared state. However, this causes a lot more memory transactions[3] which can slow down the system, especially when multiple processors are writing to the same cache block.
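The trade-off can be made concrete with a deliberately simplified count of main-memory writes. The sketch assumes a run of writes to one shared block with no intervening read misses from other caches (which would trigger a Flush), followed by eviction of the block; the function `memory_writes` and its parameters are hypothetical.

```python
def memory_writes(num_shared_writes, update_memory_on_busupd):
    """Count main-memory writes for a run of writes to one shared block,
    followed by eviction of the block from every cache.

    Simplifying assumption: no other cache misses on the block in between.
    """
    if update_memory_on_busupd:
        # Single shared state: memory absorbs every bus update,
        # so the eventual eviction needs no write-back.
        return num_shared_writes
    # Separate Sm state: memory is written once, when the final owner evicts.
    return 1 if num_shared_writes > 0 else 0


with_single_shared_state = memory_writes(100, update_memory_on_busupd=True)
with_sm_state = memory_writes(100, update_memory_on_busupd=False)
```

Under these assumptions the single-shared-state variant performs one memory write per bus update, whereas the design with an Sm state defers everything to a single write-back.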

Broadcasting the replacement of Sc block

The protocol allows cache blocks in the Sc state to be replaced silently, without any bus activity. If a broadcast were made to let other caches know that an Sc block is being replaced, they could test the shared line and move to state E if there were no other sharers. The advantage of having a block in state E is that if the block is later written, it goes to the M state without generating a bus update transaction. So at the cost of broadcasting the replacement of Sc blocks, bus update transactions can be avoided. And since broadcasting replacements is not time critical, a cache that does not process the replacement right away incurs no penalty. On the other hand, if a cache does not process an update right away, it may lead to out-of-order updates. In such cases a three-state update protocol, like the Firefly protocol, may have performance advantages.

Comparisons

Dragon vs Write invalidate protocols

Write-invalidate protocols are another family of cache coherence protocols in which, once a cache block is modified, the copies of that block in other caches are not updated but invalidated.[4] Write-invalidate protocols are more efficient when there are many successive writes to the same cache block, as the invalidation happens once and further bus transactions are avoided. However, a write-update protocol is more efficient when a write to a block is followed by multiple reads of the same block by other processors: since the other cached copies are updated as soon as the block is written, those processors have access to the data immediately. In such a case, a write-invalidate protocol is at a clear disadvantage, because every time a cache block is modified in one cache, the other caches encounter a coherence miss and must initiate a bus transaction to read the new value. On the other hand, a write-update protocol tends at times to keep blocks updated for longer than necessary, which can increase other types of misses, i.e. conflict and capacity misses.
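The two access patterns discussed above can be compared with a rough bus-transaction count. The following sketch is a simplified model, not a simulation of either protocol: it tracks a single block, assumes every cache starts with a valid copy, ignores evictions, and treats a write miss under invalidation as one combined fetch-and-invalidate transaction. The function name `bus_transactions` and the trace format are assumptions.

```python
def bus_transactions(trace, num_caches, protocol):
    """Count bus transactions for accesses to a single block.

    trace    -- list of (cache_id, op) with op "R" or "W"
    protocol -- "update" or "invalidate"
    """
    valid = [True] * num_caches          # which caches hold a valid copy
    count = 0
    for cache, op in trace:
        others = [i for i in range(num_caches) if i != cache and valid[i]]
        if op == "R":
            if not valid[cache]:
                count += 1               # coherence miss: fetch the block again
                valid[cache] = True
        elif protocol == "update":
            if others:
                count += 1               # BusUpd keeps all other copies current
        else:
            if others or not valid[cache]:
                count += 1               # one transaction invalidates the other copies
            for i in others:
                valid[i] = False
            valid[cache] = True
    return count


# Pattern 1: cache 0 writes, then caches 1..3 read; repeated twice.
produce_consume = [(0, "W"), (1, "R"), (2, "R"), (3, "R")] * 2
# Pattern 2: cache 0 writes five times in a row.
repeated_writes = [(0, "W")] * 5
```

In this model the write-then-read pattern costs 2 transactions under update and 8 under invalidate, while the repeated-write pattern costs 5 under update and 1 under invalidate, matching the qualitative argument above.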

Dragon vs Firefly protocol

In the Firefly protocol, cache-to-cache transfers of modified blocks are also written back to main memory at the same time. But since accesses to main memory are slower by orders of magnitude than accesses to caches, this either slows the transfer or adds the complexity of performing the write-back as a separate bus operation. In either case, it results in lower performance.[5] This problem is avoided altogether in the Dragon protocol, since shared blocks are not written back to memory on such transfers. However, this comes at the cost of one added state (Shared-modified).

References

- [1] Atkinson, Russell R.; McCreight, Edward M. (1987-01-01). "The dragon processor". Proceedings of the second international conference on Architectural support for programming languages and operating systems. ASPLOS II. Los Alamitos, CA, USA: IEEE Computer Society Press. pp. 65–69. doi:10.1145/36206.36185. ISBN 978-0818608056.

- [2] Stenström, Per (1990-06-01). "A Survey of Cache Coherence Schemes for Multiprocessors". Computer 23 (6): 12–24. doi:10.1109/2.55497. ISSN 0018-9162.

- [3] Culler, David; Singh, Jaswinder Pal; Gupta, Anoop (1999). Parallel Computer Architecture. Gulf Professional. ISBN 9781558603431. http://store.elsevier.com/Parallel-Computer-Architecture/David-Culler/isbn-9781558603431/. Retrieved 2016-10-24.

- [4] Solihin, Yan (2015). Fundamentals of Parallel Multicore Architecture. Chapman and Hall/CRC. pp. 205–206, 231–232. ISBN 9781482211184.

- [5] Archibald, James; Baer, Jean-Loup (1986-09-01). "Cache Coherence Protocols: Evaluation Using a Multiprocessor Simulation Model". ACM Trans. Comput. Syst. 4 (4): 273–298. doi:10.1145/6513.6514. ISSN 0734-2071.

See also

- Coherence protocol

- Firefly protocol

- MESI protocol

- MOSI protocol

- MOESI protocol

- MESIF protocol
