Residual time

In the theory of renewal processes, a part of the mathematical theory of probability, the residual time or forward recurrence time is the time between any given time [math]\displaystyle{ t }[/math] and the next epoch of the renewal process under consideration. In the context of random walks, it is also known as overshoot. Informally, the residual time answers the question "how much longer is there to wait?" The residual time plays a central role in many practical applications of renewal processes:

- In queueing theory, it determines the remaining time that a customer newly arriving at a non-empty queue has to wait until being served.[1]

- In wireless networking, it determines, for example, the remaining lifetime of a wireless link on arrival of a new packet.

- In dependability studies, it models the remaining lifetime of a component.

- etc.

Formal definition

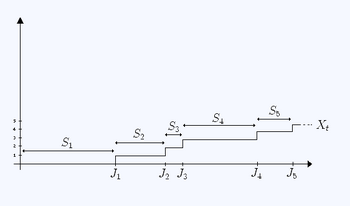

Consider a renewal process [math]\displaystyle{ \{N(t),t\geq0\} }[/math], with holding times [math]\displaystyle{ S_{i} }[/math] and jump times (or renewal epochs) [math]\displaystyle{ J_{i} }[/math], where [math]\displaystyle{ i\in\mathbb{N} }[/math] and [math]\displaystyle{ J_{n} = \sum_{i=1}^{n} S_{i} }[/math] (so that [math]\displaystyle{ J_{0} = 0 }[/math]). The holding times [math]\displaystyle{ S_{i} }[/math] are non-negative, independent, identically distributed random variables and the renewal process is defined as [math]\displaystyle{ N(t) = \sup\{n: J_{n} \leq t\} }[/math]. Then, to any given time [math]\displaystyle{ t }[/math], there corresponds a unique [math]\displaystyle{ N(t) }[/math] such that:

- [math]\displaystyle{ J_{N(t)} \leq t \lt J_{N(t)+1}. \, }[/math]

The residual time (or excess time) is given by the time [math]\displaystyle{ Y(t) }[/math] from [math]\displaystyle{ t }[/math] to the next renewal epoch.

- [math]\displaystyle{ Y(t) = J_{N(t)+1} - t. \, }[/math]
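As an illustration of the definition, the following minimal sketch computes [math]\displaystyle{ N(t) }[/math] and [math]\displaystyle{ Y(t) }[/math] for a single sample path. It assumes the usual convention that the jump times are the partial sums of the holding times, with [math]\displaystyle{ J_{0} = 0 }[/math]; the function name `residual_time` and the sample values are hypothetical.

```python
from bisect import bisect_right
from itertools import accumulate


def residual_time(holding_times, t):
    """Return (N(t), Y(t)) for one sample path with holding times S_1, S_2, ..."""
    # Assumption: jump times are partial sums of the holding times, with J_0 = 0.
    jumps = [0.0] + list(accumulate(holding_times))
    if not 0 <= t < jumps[-1]:
        raise ValueError("t must lie in [0, J_n) for the supplied holding times")
    # bisect_right counts the jump times <= t; subtracting 1 for J_0 gives N(t).
    n = bisect_right(jumps, t) - 1
    # Y(t) = J_{N(t)+1} - t
    return n, jumps[n + 1] - t


# Hypothetical path: S = (2, 3, 1.5), so J = (0, 2, 5, 6.5).
print(residual_time([2.0, 3.0, 1.5], 4.0))  # N(4) = 1, Y(4) = 5 - 4 = 1
```

At [math]\displaystyle{ t = 4 }[/math] the last renewal was at [math]\displaystyle{ J_{1} = 2 }[/math] and the next is at [math]\displaystyle{ J_{2} = 5 }[/math], so the residual time is 1.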

Probability distribution of the residual time

Let the cumulative distribution function of the holding times [math]\displaystyle{ S_{i} }[/math] be [math]\displaystyle{ F(t) = \Pr[S_{i} \leq t] }[/math] and recall that the renewal function of a process is [math]\displaystyle{ m(t) = \mathbb{E}[N(t)] }[/math]. Then, for a given time [math]\displaystyle{ t }[/math], the cumulative distribution function of [math]\displaystyle{ Y(t) }[/math] is calculated as:[2]

- [math]\displaystyle{ \Phi(x,t) = \Pr[Y(t) \leq x] = F(t+x) - \int_0^t \left[1 - F(t+x-y)\right]dm(y) }[/math]

Differentiating with respect to [math]\displaystyle{ x }[/math], the probability density function can be written as

- [math]\displaystyle{ \phi(x, t) = f(t+x) + \int_0^t f(u+x) m'(t-u) du, }[/math]

where we have substituted [math]\displaystyle{ u = t-y. }[/math] From elementary renewal theory, [math]\displaystyle{ m'(t) \rightarrow 1/\mu }[/math] as [math]\displaystyle{ t \rightarrow \infty }[/math], where [math]\displaystyle{ \mu }[/math] is the mean of the distribution [math]\displaystyle{ F }[/math]. Taking the limit [math]\displaystyle{ t \rightarrow \infty }[/math] and assuming that [math]\displaystyle{ f(t) \rightarrow 0 }[/math] as [math]\displaystyle{ t \rightarrow \infty }[/math], the limiting pdf is

- [math]\displaystyle{ \phi(x) = \frac{1}{\mu} \int_0^\infty f(u+x) du = \frac{1}{\mu} \int_x^\infty f(v) dv = \frac{1 - F(x)}{\mu}. }[/math]

Likewise, the cumulative distribution of the residual time is

- [math]\displaystyle{ \Phi(x) = \frac{1}{\mu} \int_0^x [1 - F(u)] du. }[/math]

For large [math]\displaystyle{ t }[/math], the distribution is independent of [math]\displaystyle{ t }[/math], making it a stationary distribution. The limiting distribution of the forward recurrence time (or residual time) has the same form as the limiting distribution of the backward recurrence time (or age). Because its density [math]\displaystyle{ \frac{1 - F(x)}{\mu} }[/math] is non-increasing in [math]\displaystyle{ x }[/math], this distribution is always J-shaped, with mode at zero.
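The limiting cumulative distribution can be evaluated numerically for any holding-time distribution whose survival function [math]\displaystyle{ 1 - F }[/math] is known. The sketch below uses a simple midpoint rule. The function names and the choice of holding times uniform on [0, 2] (so that [math]\displaystyle{ \mu = 1 }[/math]) are illustrative assumptions, not part of the original text.

```python
def limiting_residual_cdf(x, survival, mean, steps=10_000):
    """Approximate Phi(x) = (1/mu) * integral_0^x [1 - F(u)] du by the midpoint rule."""
    h = x / steps
    total = sum(survival((k + 0.5) * h) for k in range(steps))
    return total * h / mean


def uniform_survival(u):
    """1 - F(u) for holding times uniform on [0, 2] (hypothetical example)."""
    return max(0.0, 1.0 - u / 2.0)


# For this example the closed form is Phi(x) = x - x**2 / 4 on [0, 2].
for x in (0.5, 1.0, 2.0):
    print(x, limiting_residual_cdf(x, uniform_survival, mean=1.0), x - x**2 / 4)
```

For this example the density [math]\displaystyle{ 1 - x/2 }[/math] is largest at zero and decreases to zero at [math]\displaystyle{ x = 2 }[/math], consistent with the J-shape noted above.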

The first two moments of this limiting distribution [math]\displaystyle{ \Phi }[/math] are:

- [math]\displaystyle{ E[Y] = \frac{\mu_2}{2\mu} = \frac{\mu^2 + \sigma^2}{2\mu}, }[/math]

- [math]\displaystyle{ E[Y^2] = \frac{\mu_3}{3\mu}, }[/math]

where [math]\displaystyle{ \sigma^2 }[/math] is the variance of [math]\displaystyle{ F }[/math] and [math]\displaystyle{ \mu_2 }[/math] and [math]\displaystyle{ \mu_3 }[/math] are its second and third moments.
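These moment formulas can be checked against a simulated sample path. The sketch below is a rough check under stated assumptions: the holding times are taken to be uniform on [0, 2] (so [math]\displaystyle{ \mu = 1 }[/math], [math]\displaystyle{ \mu_2 = 4/3 }[/math], [math]\displaystyle{ \mu_3 = 2 }[/math]), and the moments of the limiting distribution are estimated by time averages of [math]\displaystyle{ Y(t) }[/math] and [math]\displaystyle{ Y(t)^2 }[/math] over a long path. The function name is hypothetical.

```python
import random


def time_averaged_moments(holding_times):
    """Time averages of Y(t) and Y(t)^2 over [0, J_n] for one sample path."""
    total = sum(holding_times)
    # Within a holding interval of length s, Y(t) falls linearly from s to 0,
    # so the interval contributes s^2/2 to the integral of Y and s^3/3 to that of Y^2.
    first = sum(s**2 / 2.0 for s in holding_times) / total
    second = sum(s**3 / 3.0 for s in holding_times) / total
    return first, second


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [rng.uniform(0.0, 2.0) for _ in range(200_000)]
est_first, est_second = time_averaged_moments(samples)

# Formula values for this example: mu_2 / (2 mu) = 2/3 and mu_3 / (3 mu) = 2/3.
print(est_first, est_second)
```

Both estimates should be close to 2/3 for this example, up to sampling error.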

Waiting time paradox

The fact that [math]\displaystyle{ E[Y] = \frac{\mu^2 + \sigma^2}{2\mu} \gt \frac{\mu}{2} }[/math] (for [math]\displaystyle{ \sigma \gt 0 }[/math]) is known variously as the waiting time paradox, inspection paradox, or the paradox of renewal theory. The paradox arises because the average waiting time until the next renewal, when the reference time point [math]\displaystyle{ t }[/math] is selected uniformly at random, is larger than half the average inter-renewal interval, [math]\displaystyle{ \frac{\mu}{2} }[/math], which is the value one might naively expect. The reason is that a randomly chosen time point is more likely to fall within a long inter-renewal interval than within a short one. The average waiting time is [math]\displaystyle{ E[Y] = \frac{\mu}{2} }[/math] only when [math]\displaystyle{ \sigma^2 = 0 }[/math], that is, when the renewals are always punctual or deterministic.
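A small numerical comparison makes the effect concrete. The sketch below evaluates [math]\displaystyle{ E[Y] = \frac{\mu^2 + \sigma^2}{2\mu} }[/math] for three hypothetical holding-time distributions that share the mean [math]\displaystyle{ \mu = 10 }[/math] (for instance, minutes between buses) but differ in variance. The distributions and the function name are illustrative assumptions.

```python
def mean_residual(mean, variance):
    """Limiting mean residual time E[Y] = (mu^2 + sigma^2) / (2 * mu)."""
    return (mean**2 + variance) / (2.0 * mean)


# (mean, variance) pairs for three hypothetical holding-time distributions.
examples = {
    "deterministic, S = 10": (10.0, 0.0),
    "uniform on [0, 20]": (10.0, 20.0**2 / 12.0),
    "exponential, mean 10": (10.0, 10.0**2),
}

for name, (mu, var) in examples.items():
    print(f"{name}: E[Y] = {mean_residual(mu, var):.3f}, mu/2 = {mu / 2:.3f}")
```

Only the deterministic case gives [math]\displaystyle{ E[Y] = \mu/2 = 5 }[/math]. In the exponential case the mean wait equals the full mean interval, 10.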

Special case: Markovian holding times

When the holding times [math]\displaystyle{ S_{i} }[/math] are exponentially distributed with [math]\displaystyle{ F(t) = 1 - e^{-\lambda t} }[/math], the residual times are also exponentially distributed. That is because [math]\displaystyle{ m(t) = \lambda t }[/math] and:

- [math]\displaystyle{ \Pr[Y(t) \leq x] = \left[1-e^{-\lambda(t+x)}\right] - \int_0^t \left[1 - 1+e^{-\lambda(t+x-y)}\right]d(\lambda y) = 1 - e^{-\lambda x}. }[/math]

This is a known characteristic of the exponential distribution, namely its memoryless property. Intuitively, this means that no matter how long it has been since the last renewal epoch, the remaining time is still probabilistically the same as at the beginning of the holding time interval.
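The cancellation in the formula above can also be verified numerically. The sketch below evaluates [math]\displaystyle{ F(t+x) - \int_0^t [1 - F(t+x-y)]\,dm(y) }[/math] with [math]\displaystyle{ m(y) = \lambda y }[/math] by a midpoint rule and compares it with [math]\displaystyle{ 1 - e^{-\lambda x} }[/math] for several values of [math]\displaystyle{ t }[/math]. The function name and the parameter values are arbitrary choices for illustration.

```python
import math


def residual_cdf_exponential(x, t, lam, steps=20_000):
    """Evaluate F(t+x) - integral_0^t [1 - F(t+x-y)] dm(y) for m(y) = lam * y."""
    h = t / steps
    # Midpoint rule for integral_0^t exp(-lam * (t + x - y)) * lam dy.
    integral = lam * h * sum(
        math.exp(-lam * (t + x - (k + 0.5) * h)) for k in range(steps)
    )
    return (1.0 - math.exp(-lam * (t + x))) - integral


lam, x = 0.7, 1.0  # arbitrary illustrative values
for t in (0.5, 3.0, 10.0):
    print(t, residual_cdf_exponential(x, t, lam), 1.0 - math.exp(-lam * x))
```

Up to discretization error, the value is the same for every [math]\displaystyle{ t }[/math], which is the memoryless property in numerical form.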

Related notions

Renewal theory texts usually also define the spent time or the backward recurrence time (or the current lifetime) as [math]\displaystyle{ Z(t) = t - J_{N(t)} }[/math]. Its distribution can be calculated in a similar way to that of the residual time. Likewise, the total life time is the sum of backward recurrence time and forward recurrence time.
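The three quantities can be computed together for a single sample path. The sketch below assumes, as is conventional, that the jump times are the partial sums of the holding times with [math]\displaystyle{ J_{0} = 0 }[/math]; the function name and sample values are hypothetical.

```python
from bisect import bisect_right
from itertools import accumulate


def age_residual_total(holding_times, t):
    """Return (Z(t), Y(t), Z(t) + Y(t)) for one sample path."""
    # Assumption: jump times are partial sums of the holding times, with J_0 = 0.
    jumps = [0.0] + list(accumulate(holding_times))
    if not 0 <= t < jumps[-1]:
        raise ValueError("t must lie in [0, J_n) for the supplied holding times")
    n = bisect_right(jumps, t) - 1   # N(t)
    age = t - jumps[n]               # Z(t) = t - J_{N(t)}
    residual = jumps[n + 1] - t      # Y(t) = J_{N(t)+1} - t
    return age, residual, age + residual


# Hypothetical path: S = (2, 3, 1.5); t = 4 lies in the interval [2, 5).
print(age_residual_total([2.0, 3.0, 1.5], 4.0))  # (2.0, 1.0, 3.0)
```

In this sketch the total lifetime equals the length of the holding interval that contains [math]\displaystyle{ t }[/math], here 3.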

References
