Szemerédi regularity lemma

In extremal graph theory, Szemerédi’s regularity lemma states that a graph can be partitioned into a bounded number of parts so that the edges between parts behave regularly. This lemma shows properties of random graphs can be applied to arbitrary graphs like the number of embedded copies for a given subgraph. Endre Szemerédi proved a weak version of the lemma over bipartite graphs for his theorem on arithmetic progressions in 1975 and later generalized it to arbitrary graphs in 1978. Related results have been proven for different notions of regularity on partitions and over hypergraphs.

Statement

To state Szemerédi's regularity lemma formally, we must formalize what the edge distribution between parts behaving 'almost randomly' really means. By 'almost random', we're referring to a notion called ε-regularity. To understand what this means, we first state some definitions. In what follows G is a graph with vertex set V.

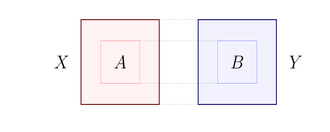

Definition 1. Let X, Y be disjoint subsets of V. The edge density of the pair (X, Y) is defined as:

- [math]\displaystyle{ d(X,Y) := \frac{\left| E(X,Y) \right|}{|X||Y|} }[/math]

where E(X, Y) denotes the set of edges having one end vertex in X and one in Y.

We call a pair of parts ε-regular if, whenever you take a large subset of each part, their edge density isn't too far off the edge density of the pair of parts. Formally,

Definition 2. For ε > 0, a pair of vertex sets X and Y is called ε-regular, if for all subsets A ⊆ X, B ⊆ Y satisfying |A| ≥ ε|X|, |B| ≥ ε|Y|, we have

- [math]\displaystyle{ \left| d(X,Y) - d(A,B) \right| \le \varepsilon. }[/math]

The natural way to define an ε-regular partition should be one where each pair of parts is ε-regular. However, some graphs, such as the half graphs, require many pairs of partitions (but a small fraction of all pairs) to be irregular.[1] So we shall define ε-regular partitions to be one where most pairs of parts are ε-regular.

Definition 3. A partition of [math]\displaystyle{ V }[/math] into [math]\displaystyle{ k }[/math] sets [math]\displaystyle{ \mathcal{P}=\{V_1,\ldots,V_k\} }[/math] is called an [math]\displaystyle{ \varepsilon }[/math]-regular partition if

- [math]\displaystyle{ \sum_{(V_i,V_j)\text{ not }\varepsilon\text{-regular}} |V_i||V_j|\leq\varepsilon|V(G)|^2 }[/math]

Now we can state the lemma:

Szemerédi's Regularity Lemma. For every ε > 0 and positive integer m there exists an integer M such that if G is a graph with at least M vertices, there exists an integer k in the range m ≤ k ≤ M and an ε-regular partition of the vertex set of G into k sets.

The bound M for the number of parts in the partition of the graph given by the proofs of Szemeredi's regularity lemma is very large, given by a O(ε−5)-level iterated exponential of m. At one time it was hoped that the true bound was much smaller, which would have had several useful applications. However (Gowers 1997) found examples of graphs for which M does indeed grow very fast and is at least as large as a ε−1/16-level iterated exponential of m.

Proof

We shall find an ε-regular partition for a given graph following an algorithm. A rough outline:

- Start with an arbitrary partition (could just be 1 part)

- While the partition isn't ε-regular:

- Find the subsets which witness ε-irregularity for each irregular pair.

- Simultaneously refine the partition using all the witnessing subsets.

We apply a technique called the energy increment argument to show that this process terminates after a bounded number of steps. Basically, we define a monovariant which must increase by a certain amount in each step, but it's bounded above and thus cannot increase indefinitely. This monovariant is called 'energy' as it's an [math]\displaystyle{ L^2 }[/math] quantity.

Definition 4. Let U, W be subsets of V. Set [math]\displaystyle{ |V| = n }[/math]. The energy of the pair (U, W) is defined as:

- [math]\displaystyle{ q(U,W) := \frac{|U||W|}{n^2}d(U,W)^2 }[/math]

For partitions [math]\displaystyle{ \mathcal{P}_U=\{U_1,\ldots,U_k\} }[/math] of U and [math]\displaystyle{ \mathcal{P}_W = \{W_1,\ldots,W_l\} }[/math] of W, we define the energy to be the sum of the energies between each pair of parts:

- [math]\displaystyle{ q(\mathcal{P}_U,\mathcal{P}_W) := \sum_{i=1}^k\sum_{j=1}^lq(U_i,W_j) }[/math]

Finally, for a partition [math]\displaystyle{ \mathcal{P}=\{V_1,\ldots,V_k\} }[/math] of V, define the energy of [math]\displaystyle{ \mathcal{P} }[/math] to be [math]\displaystyle{ q(\mathcal{P},\mathcal{P}) }[/math]. Specifically,

- [math]\displaystyle{ q(\mathcal{P})=\sum_{i=1}^k\sum_{j=1}^kq(V_i,V_j)=\sum_{i=1}^k\sum_{j=1}^k\frac{|V_i||V_j|}{n^2}d(V_i,V_j)^2 }[/math]

Observe that energy is between 0 and 1 because edge density is bounded above by 1:

- [math]\displaystyle{ q(\mathcal{P})=\sum_{i=1}^k\sum_{j=1}^k\frac{|V_i||V_j|}{n^2}d(V_i,V_j)^2\leq\sum_{i=1}^k\sum_{j=1}^k\frac{|V_i||V_j|}{n^2}=1 }[/math]

Now, we start by proving that energy does not decrease upon refinement.

Lemma 1. (Energy is nondecreasing under partitioning) For any partitions [math]\displaystyle{ \mathcal{P}_U }[/math] and [math]\displaystyle{ \mathcal{P}_W }[/math] of vertex sets [math]\displaystyle{ U }[/math] and [math]\displaystyle{ W }[/math], [math]\displaystyle{ q(\mathcal{P}_U,\mathcal{P}_W)\geq q(U,W) }[/math].

Proof: Let [math]\displaystyle{ \mathcal{P}_U=\{U_1,\ldots,U_k\} }[/math] and [math]\displaystyle{ \mathcal{P}_W=\{W_1,\ldots,W_l\} }[/math]. Choose vertices [math]\displaystyle{ x }[/math] uniformly from [math]\displaystyle{ U }[/math] and [math]\displaystyle{ y }[/math] uniformly from [math]\displaystyle{ W }[/math], with [math]\displaystyle{ x }[/math] in part [math]\displaystyle{ U_i }[/math] and [math]\displaystyle{ y }[/math] in part [math]\displaystyle{ W_j }[/math]. Then define the random variable [math]\displaystyle{ Z=d(U_i,W_j) }[/math]. Let us look at properties of [math]\displaystyle{ Z }[/math]. The expectation is

- [math]\displaystyle{ \mathbb{E}[Z]=\sum_{i=1}^k\sum_{j=1}^l\frac{|U_i|}{|U|}\frac{|W_j|}{|W|}d(U_i,W_j)=\frac{e(U,W)}{|U||W|}=d(U,W) }[/math]

The second moment is

- [math]\displaystyle{ \mathbb{E}[Z^2]=\sum_{i=1}^k\sum_{j=1}^l\frac{|U_i|}{|U|}\frac{|W_j|}{|W|}d(U_i,W_j)^2=\frac{n^2}{|U||W|}q(\mathcal{P}_U,\mathcal{P}_W) }[/math]

By convexity, [math]\displaystyle{ \mathbb{E}[Z^2]\geq\mathbb{E}[Z]^2 }[/math]. Rearranging, we get that [math]\displaystyle{ q(\mathcal{P}_U,\mathcal{P}_W) \ge q(U,W) }[/math] for all [math]\displaystyle{ U,W }[/math].[math]\displaystyle{ \square }[/math]

If each part of [math]\displaystyle{ \mathcal{P} }[/math] is further partitioned, the new partition is called a refinement of [math]\displaystyle{ \mathcal{P} }[/math]. Now, if [math]\displaystyle{ \mathcal{P}=\{V_1,\ldots,V_m\} }[/math], applying Lemma 1 to each pair [math]\displaystyle{ (V_i,V_j) }[/math] proves that for every refinement [math]\displaystyle{ \mathcal{P'} }[/math] of [math]\displaystyle{ \mathcal{P} }[/math], [math]\displaystyle{ q(\mathcal{P'}) \ge q(\mathcal{P}) }[/math]. Thus the refinement step in the algorithm doesn't lose any energy.

Lemma 2. (Energy boost lemma) If [math]\displaystyle{ (U,W) }[/math] is not [math]\displaystyle{ \varepsilon }[/math]-regular as witnessed by [math]\displaystyle{ U_1\subset U,W_1\subset W }[/math], then,

- [math]\displaystyle{ q\left(\{U_1,U\backslash U_1\},\{W_1,W\backslash W_1\}\right)\gt q(U,W)+\varepsilon^4\frac{|U||W|}{n^2} }[/math]

Proof: Define [math]\displaystyle{ Z }[/math] as above. Then,

- [math]\displaystyle{ Var(Z) = \mathbb{E}[Z^2]-\mathbb{E}[Z]^2 = \frac{n^2}{|U||W|}\left(q\left(\{U_1,U\backslash U_1\},\{W_1,W\backslash W_1\}\right)-q(U,W)\right) }[/math]

But observe that [math]\displaystyle{ |Z-\mathbb{E}[Z]|=|d(U_1,W_1)-d(U,W)| }[/math] with probability [math]\displaystyle{ \frac{|U_1|}{|U|}\frac{|W_1|}{|W|} }[/math](corresponding to [math]\displaystyle{ x\in U_1 }[/math] and [math]\displaystyle{ y\in W_1 }[/math]), so

- [math]\displaystyle{ Var(Z) = \mathbb{E}[(Z-\mathbb{E}[Z])^2] \geq \frac{|U_1|}{|U|}\frac{|W_1|}{|W|}(d(U_1,W_1)-d(U,W))^2 \gt \varepsilon\cdot\varepsilon\cdot\varepsilon^2 }[/math] [math]\displaystyle{ \square }[/math]

Now we can prove the energy increment argument, which shows that energy increases substantially in each iteration of the algorithm.

Lemma 3 (Energy increment lemma) If a partition [math]\displaystyle{ \mathcal{P}=\{V_1,\ldots,V_k\} }[/math] of [math]\displaystyle{ V(G) }[/math] is not [math]\displaystyle{ \varepsilon }[/math]-regular, then there exists a refinement [math]\displaystyle{ \mathcal{Q} }[/math] of [math]\displaystyle{ \mathcal{P} }[/math] where every [math]\displaystyle{ V_i }[/math] is partitioned into at most [math]\displaystyle{ 2^k }[/math] parts such that

- [math]\displaystyle{ q(\mathcal{Q})\geq q(\mathcal{P})+\varepsilon^5. }[/math]

Proof: For all [math]\displaystyle{ (i,j) }[/math] such that [math]\displaystyle{ (V_i,V_j) }[/math] is not [math]\displaystyle{ \varepsilon }[/math]-regular, find [math]\displaystyle{ A^{i,j}\subset V_i }[/math] and [math]\displaystyle{ A^{j,i}\subset V_j }[/math] that witness irregularity (do this simultaneously for all irregular pairs). Let [math]\displaystyle{ \mathcal{Q} }[/math] be a common refinement of [math]\displaystyle{ \mathcal{P} }[/math] by [math]\displaystyle{ A^{i,j} }[/math]'s. Each [math]\displaystyle{ V_i }[/math] is partitioned into at most [math]\displaystyle{ 2^k }[/math] parts as desired. Then,

- [math]\displaystyle{ q(\mathcal{Q}) = \sum_{(i,j)\in[k]^2} q(\mathcal{Q}_{V_i},\mathcal{Q}_{V_j}) = \sum_{(V_i,V_j)\text{ }\varepsilon\text{-regular}}q(\mathcal{Q}_{V_i},\mathcal{Q}_{V_j})+\sum_{(V_i,V_j)\text{ not }\varepsilon\text{-regular}}q(\mathcal{Q}_{V_i},\mathcal{Q}_{V_j}) }[/math]

Where [math]\displaystyle{ \mathcal{Q}_{V_i} }[/math] is the partition of [math]\displaystyle{ V_i }[/math] given by [math]\displaystyle{ \mathcal{Q} }[/math]. By Lemma 1, the above quantity is at least

- [math]\displaystyle{ \sum_{(V_i,V_j)\text{ }\varepsilon\text{-regular}}q(V_i,V_j)+\sum_{(V_i,V_j)\text{ not }\varepsilon\text{-regular}}q(\{A^{i,j},V_i\backslash A^{i,j}\},\{A^{j,i},V_j\backslash A^{j,i}\}) }[/math]

Since [math]\displaystyle{ V_i }[/math] is cut by [math]\displaystyle{ A^{i,j} }[/math] when creating [math]\displaystyle{ \mathcal{Q} }[/math], so [math]\displaystyle{ \mathcal{Q}_{V_i} }[/math] is a refinement of [math]\displaystyle{ \{A^{i,j},V_i\backslash A^{i,j}\} }[/math]. By lemma 2, the above sum is at least

- [math]\displaystyle{ \sum_{(i,j)\in[k]^2}q(V_i,V_j)+\sum_{(V_i,V_j)\text{ not }\varepsilon\text{-regular}}\varepsilon^4\frac{|V_i||V_j|}{n^2} }[/math]

But the second sum is at least [math]\displaystyle{ \varepsilon^5 }[/math] since [math]\displaystyle{ \mathcal{P} }[/math] is not [math]\displaystyle{ \varepsilon }[/math]-regular, so we deduce the desired inequality. [math]\displaystyle{ \square }[/math]

Now, starting from any partition, we can keep applying Lemma 3 as long as the resulting partition isn't [math]\displaystyle{ \varepsilon }[/math]-regular. But in each step energy increases by [math]\displaystyle{ \varepsilon^5 }[/math], and it's bounded above by 1. Then this process can be repeated at most [math]\displaystyle{ \varepsilon^{-5} }[/math] times, before it terminates and we must have an [math]\displaystyle{ \varepsilon }[/math]-regular partition.

Applications

Graph counting lemma

If we have enough information about the regularity of a graph, we can count the number of copies of a specific subgraph within the graph up to small error.

Graph Counting Lemma. Let [math]\displaystyle{ H }[/math] be a graph with [math]\displaystyle{ V(H)=[k] }[/math], and let [math]\displaystyle{ \varepsilon\gt 0 }[/math]. Let [math]\displaystyle{ G }[/math] be an [math]\displaystyle{ n }[/math]-vertex graph with vertex sets [math]\displaystyle{ V_1,\dots,V_k\subseteq V(G) }[/math] such that [math]\displaystyle{ (V_i,V_j) }[/math] is [math]\displaystyle{ \varepsilon }[/math]-regular whenever [math]\displaystyle{ \{i,j\}\in E(H) }[/math]. Then, the number of labeled copies of [math]\displaystyle{ H }[/math] in [math]\displaystyle{ G }[/math] is within [math]\displaystyle{ e(H)\varepsilon|V_1|\cdots|V_k| }[/math] of

- [math]\displaystyle{ \left(\prod_{\{i,j\}\in E(H)}d(V_i,V_j)\right)\left(\prod_{i=1}^k|V_i|\right). }[/math]

This can be combined with Szemerédi's regularity lemma to prove the Graph removal lemma. The graph removal lemma can be used to prove Roth's Theorem on Arithmetic Progressions,[2] and a generalization of it, the hypergraph removal lemma, can be used to prove Szemerédi's theorem.[3]

The graph removal lemma generalizes to induced subgraphs, by considering edge edits instead of only edge deletions. This was proved by Alon, Fischer, Krivelevich, and Szegedy in 2000.[4] However, this required a stronger variation of the regularity lemma.

Szemerédi's regularity lemma does not provide meaningful results in sparse graphs. Since sparse graphs have subconstant edge densities, [math]\displaystyle{ \varepsilon }[/math]-regularity is trivially satisfied. Even though the result seems purely theoretical, some attempts [5][6] have been made to use the regularity method as compression technique for large graphs.

Frieze-Kannan regularity

A different notion of regularity was introduced by Frieze and Kannan, known as the weak regularity lemma.[7] This lemma defines a weaker notion of regularity than that of Szemerédi which uses better bounds and can be used in efficient algorithms.

Given a graph [math]\displaystyle{ G=(V,E) }[/math], a partition of its vertices [math]\displaystyle{ \mathcal{P} = \{ V_1, \ldots, V_k\} }[/math] is said to be Frieze-Kannan [math]\displaystyle{ \epsilon }[/math]-regular if for any pair of sets [math]\displaystyle{ S,T \subseteq V }[/math]:

- [math]\displaystyle{ \left| e(S,T) - \sum_{i,j=1}^k d(V_i, V_j) |S\cap V_i| |T\cap V_j|\right| \leq \epsilon |V|^2 }[/math]

The weak regularity lemma for graphs states that every graph has a weak [math]\displaystyle{ \epsilon }[/math]-regular partition into at most [math]\displaystyle{ 4^{\epsilon^{-2}} }[/math] parts.

This notion can be extended to graphons by defining a stepping operator. Given a graphon [math]\displaystyle{ W }[/math] and a partition [math]\displaystyle{ \mathcal{P} }[/math] of [math]\displaystyle{ [0,1] }[/math], we can define [math]\displaystyle{ W_{\mathcal{P}} }[/math] as a step-graphon with steps given by [math]\displaystyle{ \mathcal{P} }[/math] and values given by averaging [math]\displaystyle{ W }[/math] over each step.

A partition [math]\displaystyle{ \mathcal{P} }[/math] is weak [math]\displaystyle{ \epsilon }[/math]-regular if:

- [math]\displaystyle{ \| W - W_{\mathcal{P}} \|_{\square} \leq \epsilon }[/math]

The weak regularity lemma for graphons states that every graphon has a weak [math]\displaystyle{ \epsilon }[/math]-regular partition into at most [math]\displaystyle{ 4^{\epsilon^{-2}} }[/math] parts. As with Szemerédi's regularity lemma, the weak regularity also induces a counting lemma.

Algorithmic Applications

One of the initial motivations for the development of the weak regularity lemma was the search for an efficient algorithm for estimating the maximum cut in a dense graph.[7] It has been shown that approximating the max-cut problem beyond 16/17 is NP-hard,[8] however an algorithmic version of the weak regularity lemma gives an efficient algorithm for approximating the max-cut for dense graphs within an [math]\displaystyle{ \epsilon n^2 }[/math] additive error.[7] These ideas have been further developed into efficient sampling algorithms for estimating max-cut in dense graphs.[9]

The smaller bounds of the weak regularity lemma allow for efficient algorithms to find an [math]\displaystyle{ \epsilon }[/math]-regular partition.[10] Graph regularity has further been used in various area of theoretical computer science, such as matrix multiplication[11] and communication complexity.[12]

Strong regularity lemma

The strong regularity lemma is a stronger variation of the regularity lemma proven by Alon, Fischer, Krivelevich, and Szegedy in 2000.[4] Intuitively, it provides information between non-regular pairs and could be applied to prove the induced graph removal lemma.

Statement

For any infinite sequence of constants [math]\displaystyle{ \epsilon_0\ge \epsilon_1 \ge ...\gt 0 }[/math], there exists an integer [math]\displaystyle{ M }[/math] such that for any graph [math]\displaystyle{ G }[/math], we can obtain two (equitable) partitions [math]\displaystyle{ \mathcal{P} }[/math] and [math]\displaystyle{ \mathcal{Q} }[/math] such that the following properties are satisfied:

- [math]\displaystyle{ \mathcal{Q} }[/math] refines [math]\displaystyle{ \mathcal{P} }[/math], that is every part of [math]\displaystyle{ \mathcal{P} }[/math] is the union of some collection of parts in [math]\displaystyle{ \mathcal{Q} }[/math].

- [math]\displaystyle{ \mathcal{P} }[/math] is [math]\displaystyle{ \epsilon_0 }[/math]-regular and [math]\displaystyle{ \mathcal{Q} }[/math] is [math]\displaystyle{ \epsilon_{|\mathcal{P}|} }[/math]-regular.

- [math]\displaystyle{ q(\mathcal{Q})\lt q(\mathcal{P})+\epsilon_0 }[/math]

- [math]\displaystyle{ |\mathcal{Q}|\le M }[/math]

Proof

We apply the regularity lemma repeatedly to prove the stronger version. A rough outline:

- Start with [math]\displaystyle{ \mathcal P_0 }[/math] be an [math]\displaystyle{ \epsilon_0 }[/math] regular partition

- Repeatedly find its refinement [math]\displaystyle{ \mathcal Q }[/math] that is [math]\displaystyle{ \epsilon_{|\mathcal P|} }[/math] regular. If the energy increment of [math]\displaystyle{ \mathcal Q \le \epsilon_0 }[/math], we simply return [math]\displaystyle{ (\mathcal P, \mathcal Q) }[/math]. Otherwise, we replace [math]\displaystyle{ \mathcal P }[/math] with [math]\displaystyle{ \mathcal Q }[/math] and continue.

We start with [math]\displaystyle{ \mathcal P_0 }[/math] be an [math]\displaystyle{ \epsilon_0 }[/math] regular partition of [math]\displaystyle{ G }[/math] with [math]\displaystyle{ \le M(\epsilon_0) }[/math] parts. Here [math]\displaystyle{ M(t) }[/math] corresponds to the bound of parts in regularity lemma when [math]\displaystyle{ \epsilon=t }[/math].

Now for [math]\displaystyle{ i=0, 1, \cdots }[/math], we set [math]\displaystyle{ \mathcal{P_{i+1}} }[/math] to be an [math]\displaystyle{ \epsilon_{|P_i|} }[/math]regular refinement of [math]\displaystyle{ \mathcal{P_i} }[/math] with [math]\displaystyle{ \le M(\epsilon_{|P_i|})|\mathcal P_i| }[/math] parts. By the energy increment argument, [math]\displaystyle{ q(\mathcal P_{i+1}) \ge q(\mathcal P_{i}) }[/math]. Since the energy is bounded in [math]\displaystyle{ [0, 1] }[/math], there must be some [math]\displaystyle{ i \le 1/\epsilon_0+1 }[/math] such that [math]\displaystyle{ q(\mathcal P_{i+1})- q(\mathcal P_{i}) \lt \epsilon_0 }[/math]. We return [math]\displaystyle{ (\mathcal P_i, \mathcal P_{i+1}) }[/math] as [math]\displaystyle{ (\mathcal P, \mathcal Q) }[/math].

By our choice of [math]\displaystyle{ \mathcal P_{i+1}, }[/math] the regular and refinement conditions hold. The energy condition holds trivially. Now we argue for the number of parts. We use induction to show that [math]\displaystyle{ \forall i }[/math], there exists [math]\displaystyle{ M_i }[/math] such that [math]\displaystyle{ |\mathcal P_i| \le M_i }[/math]. By setting [math]\displaystyle{ M_0=M(\epsilon_0) }[/math], we have [math]\displaystyle{ |\mathcal P_0|\le M_0 }[/math]. Note that when [math]\displaystyle{ |P_i|\le M_i }[/math], [math]\displaystyle{ |P_{i+1}| \le M(\epsilon_{|P_i|})|\mathcal P_i| \le M(\epsilon_{|M_i|}) M_i }[/math], so we could set [math]\displaystyle{ M_{i+1}=M(\epsilon_{|M_i|}) M_i }[/math] and the statement is true for [math]\displaystyle{ i+1 }[/math]. By setting [math]\displaystyle{ M=\max_{i\le 1/\epsilon_0+2} M_i }[/math], we have [math]\displaystyle{ |P|, |Q| \le M. }[/math]

Remarks on equitable

A partition is equitable if the sizes of any two sets differ by at most [math]\displaystyle{ 1 }[/math]. By equitizing in each round of iteration, the proof of regularity lemma could be accustomed to prove the equitable version of regularity lemma. And by replacing the regularity lemma with its equitable version, the proof above could prove the equitable version of strong regularity lemma where [math]\displaystyle{ \mathcal{P} }[/math] and [math]\displaystyle{ \mathcal{Q} }[/math] are equitable partitions.

A useful Corollary

Statement

For any infinite sequence of constants [math]\displaystyle{ \epsilon_0\ge \epsilon_1 \ge ...\gt 0 }[/math], there exists [math]\displaystyle{ \delta\gt 0 }[/math] such that there exists a partition [math]\displaystyle{ \mathcal{P}={V_1,...,V_k} }[/math] and subsets [math]\displaystyle{ W_i \subset V_i }[/math] for each [math]\displaystyle{ i }[/math] where the following properties are satisfied:

- [math]\displaystyle{ |W_i|\gt \delta n }[/math]

- [math]\displaystyle{ (W_i,W_j) }[/math] is [math]\displaystyle{ \epsilon_{|\mathcal{P}|} }[/math]-regular for each pair [math]\displaystyle{ 1\le i\le j\le k }[/math]

- [math]\displaystyle{ |d(W_i,W_j)-d(V_i,V_j)|\le \epsilon_0 }[/math] for all but [math]\displaystyle{ \epsilon_0 |\mathcal{P}|^2 }[/math] pairs [math]\displaystyle{ 1\le i\le j\le k }[/math]

Motivation

The corollary explores deeper the small energy increment. It gives us a partition together with subsets with large sizes from each part, which are pairwise regular. In addition, the density between the corresponding subset pairs differs "not much" from the density between the corresponding parts.

Proof of corollary

We'll only prove the weaker result where the second condition only requires [math]\displaystyle{ (W_i,W_j) }[/math] to be [math]\displaystyle{ \epsilon_{|\mathcal{P}|} }[/math]-regular for [math]\displaystyle{ 1\le i\lt j\le k }[/math]. The full version can be proved by picking more subsets from each part that are mostly pairwise regular and combine them together.

Let [math]\displaystyle{ r=\epsilon_0^3/20 }[/math]. We apply the strong regularity lemma to find equitable [math]\displaystyle{ \mathcal P }[/math] that is a [math]\displaystyle{ r }[/math] regular partition and equitable [math]\displaystyle{ \mathcal Q }[/math] that is a [math]\displaystyle{ r/|P|^4 }[/math] regular refinement of [math]\displaystyle{ \mathcal P }[/math] , such that [math]\displaystyle{ q(\mathcal Q)-q(\mathcal P) \le r }[/math] and [math]\displaystyle{ |\mathcal Q|\le M }[/math].

Now assume that [math]\displaystyle{ P=\{V_1, \cdots, V_k\} }[/math], we randomly pick a vertex [math]\displaystyle{ v_i }[/math] from each [math]\displaystyle{ V_i }[/math] and let [math]\displaystyle{ W_i }[/math] to be the set that contains [math]\displaystyle{ v_i }[/math] in [math]\displaystyle{ \mathcal Q }[/math]. We argue that the subsets [math]\displaystyle{ W_i }[/math] satisfy all the conditions with probability [math]\displaystyle{ \gt 0 }[/math].

By setting [math]\displaystyle{ \delta = \frac{1}{2M} }[/math] the first condition is trivially true since [math]\displaystyle{ \mathcal Q }[/math] is an equitable partition. Since at most [math]\displaystyle{ \frac{r}{|P|^4}\binom{n}{2}\le \epsilon_0\frac{|V_i||V_j|}{3|P|^2} }[/math] vertex pairs live between irregular pairs in [math]\displaystyle{ \mathcal Q }[/math] , the probability that the pair [math]\displaystyle{ W_i }[/math] and [math]\displaystyle{ W_j }[/math] is irregular [math]\displaystyle{ \le \frac{1}{3|P|^2} }[/math], by union bound, the probability that at least one pair [math]\displaystyle{ W_i }[/math], [math]\displaystyle{ W_i }[/math] is irregular [math]\displaystyle{ \le 1/3 }[/math]. Note that

[math]\displaystyle{ \begin{align}r&\ge q(\mathcal Q)-q(\mathcal P)\\&=\sum_{i, j}\frac{|V_i||V_j|}{n^2}\mathbb E|d(W_i, W_j)-d(V_i, V_j)|^2\\ &\ge \sum_{i, j}\frac{1}{4|P|^2}\mathbb E|d(W_i, W_j)-d(V_i, V_j)|^2\\&=\frac{1}{4|P|^2}\mathbb E\sum_{i,j} |d(W_i, W_j)-d(V_i, V_j)|^2 \end{align} }[/math]

So by Markov's inequality [math]\displaystyle{ P(\sum_{i,j} |d(W_i, W_j)-d(V_i, V_j)|^2\ge 8|P|^2r)\le 1/2 }[/math], so with probability [math]\displaystyle{ \ge 1/2 }[/math], at most [math]\displaystyle{ \epsilon_0 |P|^2 }[/math] pairs could have [math]\displaystyle{ d(W_i, W_j)-d(V_i, V_j) \ge \epsilon_0 }[/math]. By union bound, the probability that all conditions hold [math]\displaystyle{ \ge 1-1/2-1/3 \gt 0 }[/math].

History and Extensions

(Szemerédi 1975) first introduced a weaker version of this lemma, restricted to bipartite graphs, in order to prove Szemerédi's theorem,[13] and in (Szemerédi 1978) he proved the full lemma.[14] Extensions of the regularity method to hypergraphs were obtained by Rödl and his collaborators[15][16][17] and Gowers.[18][19]

János Komlós, Gábor Sárközy and Endre Szemerédi later (in 1997) proved in the blow-up lemma[20][21] that the regular pairs in Szemerédi regularity lemma behave like complete bipartite graphs under the correct conditions. The lemma allowed for deeper exploration into the nature of embeddings of large sparse graphs into dense graphs.

The first constructive version was provided by Alon, Duke, Lefmann, Rödl and Yuster.[22] Subsequently, Frieze and Kannan gave a different version and extended it to hypergraphs.[23] They later produced a different construction due to Alan Frieze and Ravi Kannan that uses singular values of matrices.[24] One can find more efficient non-deterministic algorithms, as formally detailed in Terence Tao's blog[25] and implicitly mentioned in various papers.[26][27][28]

An inequality of Terence Tao extends the Szemerédi regularity lemma, by revisiting it from the perspective of probability theory and information theory instead of graph theory.[29] Terence Tao has also provided a proof of the lemma based on spectral theory, using the adjacency matrices of graphs.[30]

It is not possible to prove a variant of the regularity lemma in which all pairs of partition sets are regular. Some graphs, such as the half graphs, require many pairs of partitions (but a small fraction of all pairs) to be irregular.[1]

It is a common variant in the definition of an [math]\displaystyle{ \varepsilon }[/math]-regular partition to require that the vertex sets all have the same size, while collecting the leftover vertices in an "error"-set [math]\displaystyle{ V_0 }[/math] whose size is at most an [math]\displaystyle{ \varepsilon }[/math]-fraction of the size of the vertex set of [math]\displaystyle{ G }[/math].

A stronger variation of the regularity lemma was proven by Alon, Fischer, Krivelevich, and Szegedy while proving the induced graph removal lemma. This works with a sequence of [math]\displaystyle{ \varepsilon }[/math] instead of just one, and shows that there exists a partition with an extremely regular refinement, where the refinement doesn't have too large of an energy increment.

Szemerédi's regularity lemma can be interpreted as saying that the space of all graphs is totally bounded (and hence precompact) in a suitable metric (the cut distance). Limits in this metric can be represented by graphons; another version of the regularity lemma simply states that the space of graphons is compact.[31]

References

- ↑ 1.0 1.1 "Bounds for graph regularity and removal lemmas", Geometric and Functional Analysis 22 (5): 1191–1256, 2012, doi:10.1007/s00039-012-0171-x

- ↑ "On certain sets of integers", Journal of the London Mathematical Society 28 (1): 104–109, 1953, doi:10.1112/jlms/s1-28.1.104

- ↑ "A variant of the hypergraph removal lemma", Journal of Combinatorial Theory, Series A 113 (7): 1257–1280, 2006, doi:10.1016/j.jcta.2005.11.006

- ↑ 4.0 4.1 "Efficient testing of large graphs", Combinatorica 20 (4): 451–476, 2000, doi:10.1007/s004930070001

- ↑ Pelosin, Francesco (2018). Graph Compression Using The Regularity Method (MSc thesis). Ca' Foscari University of Venice. arXiv:1810.07275.

- ↑ Fiorucci, Marco; Pelosin, Francesco; Pelillo, Marcello (February 2020). "Separating structure from noise in large graphs using the regularity lemma". Pattern Recognition 98: 107070. doi:10.1016/j.patcog.2019.107070. Bibcode: 2020PatRe..9807070F.

- ↑ 7.0 7.1 7.2 "Quick Approximation to Matrices and Applications", Combinatorica 19 (2): 175–220, 1999, doi:10.1007/s004930050052

- ↑ "Some Optimal Inapproximability Results", Journal of the ACM 48 (4): 798–859, 2001, doi:10.1145/502090.502098

- ↑ "Random sampling and approximation of MAX-CSPs", Journal of Computer and System Sciences 67 (2): 212–243, 2003, doi:10.1016/S0022-0000(03)00008-4

- ↑ Dellamonica, Domingos; Kalyanasundaram, Subrahmanyam; Martin, Daniel (2012), "Random sampling and approximation of MAX-CSPs", SIAM Journal on Discrete Mathematics 26 (1): 15–29, doi:10.1137/110846373

- ↑ Bansal, Nikhil; Williams, Ryan (2009), "Regularity Lemmas and Combinatorial Algorithms", 2009 50th Annual IEEE Symposium on Foundations of Computer Science, pp. 745–754, doi:10.1109/FOCS.2009.76, ISBN 978-1-4244-5116-6

- ↑ "On the Communication Complexity of Graph Properties", Proceedings of the twentieth annual ACM symposium on Theory of computing - STOC '88, 26, Association for Computing Machinery, 1988, pp. 186–191, doi:10.1145/62212.62228, ISBN 0897912640

- ↑ Szemerédi, Endre (1975), "On sets of integers containing no k elements in arithmetic progression", Polska Akademia Nauk. Instytut Matematyczny. Acta Arithmetica 27: 199–245, doi:10.4064/aa-27-1-199-245, http://matwbn.icm.edu.pl/tresc.php?wyd=6&tom=27.

- ↑ Szemerédi, Endre (1978), "Regular partitions of graphs", Problèmes combinatoires et théorie des graphes (Colloq. Internat. CNRS, Univ. Orsay, Orsay, 1976), 260, Paris: CNRS, pp. 399–401.

- ↑ Frankl, Peter; Rödl, Vojtěch (2002), "Extremal problems on set systems", Random Structures & Algorithms 20 (2): 131–164, doi:10.1002/rsa.10017.abs.

- ↑ Rödl, Vojtěch; Skokan, Jozef (2004), "Regularity lemma for k-uniform hypergraphs", Random Structures & Algorithms 25 (1): 1–42, doi:10.1002/rsa.20017.

- ↑ Nagle, Brendan; Rödl, Vojtěch; Schacht, Mathias (2006), "The counting lemma for regular k-uniform hypergraphs", Random Structures & Algorithms 28 (2): 113–179, doi:10.1002/rsa.20117.

- ↑ Gowers, W. T. (2006), "Quasirandomness, counting and regularity for 3-uniform hypergraphs", Combinatorics, Probability and Computing 15 (1–2): 143–184, doi:10.1017/S0963548305007236.

- ↑ Gowers, W. T. (2007), "Hypergraph regularity and the multidimensional Szemerédi theorem", Annals of Mathematics, Second Series 166 (3): 897–946, doi:10.4007/annals.2007.166.897, Bibcode: 2007arXiv0710.3032G.

- ↑ "Blow-up lemma", Combinatorica 17 (1): 109–123, 1997, doi:10.1007/BF01196135

- ↑ "An algorithmic version of the blow-up lemma", Random Structures & Algorithms 12 (3): 297–312, 1998, doi:10.1002/(SICI)1098-2418(199805)12:3<297::AID-RSA5>3.3.CO;2-W

- ↑ N. Alon and R. A. Duke and H. Lefmann and V. Rödl and R. Yuster (1994). "The Algorithmic Aspects of the Regularity Lemma". Journal of Algorithms 16: 80–109. doi:10.1006/jagm.1994.1005.

- ↑ A. Frieze and R. Kannan (1996). "The regularity lemma and approximation schemes for dense problems". FOCS '96: Proceedings of the 37th Annual Symposium on Foundations of Computer Science: pp. 12–20. doi:10.1109/SFCS.1996.548459. ISBN 0-8186-7594-2.

- ↑ A. Frieze and R. Kannan (1999). "A Simple Algorithm for Constructing Szemerédi's Regularity Partition". Electronic Journal of Combinatorics 6: R17. doi:10.37236/1449. http://www.math.cmu.edu/~af1p/Texfiles/svreg.pdf.

- ↑ Szemeredi's regularity lemma via random partitions, 2009, https://terrytao.wordpress.com/2009/04/26/szemeredis-regularity-lemma-via-random-partitions

- ↑ "Every monotone graph property is testable", SIAM J. Comput. 38 (2): 505–522, 2008, doi:10.1137/050633445, ISSN 0097-5397

- ↑ A Simple Regularization of Hypergraphs, 2006, Bibcode: 2006math.....12838I

- ↑ Austin, Tim (2008), "On exchangeable random variables and the statistics of large graphs and hypergraphs", Probability Surveys 5: 80–145, doi:10.1214/08-PS124, Bibcode: 2008arXiv0801.1698A

- ↑ "Szemerédi's regularity lemma revisited", Contributions to Discrete Mathematics 1 (1): 8–28, 2006, doi:10.11575/cdm.v1i1.61900, Bibcode: 2005math......4472T.

- ↑ The spectral proof of the Szemeredi regularity lemma, 2012, https://terrytao.wordpress.com/2012/12/03/the-spectral-proof-of-the-szemeredi-regularity-lemma/

- ↑ Lovász, László; Szegedy, Balázs (2007), "Szemerédi's lemma for the analyst", Geometric and Functional Analysis 17: 252–270, doi:10.1007/s00039-007-0599-6, ISSN 1016-443X

Further reading

- "Szemerédi's regularity lemma and its applications in graph theory", Combinatorics, Paul Erdős is eighty, Vol. 2 (Keszthely, 1993), Bolyai Soc. Math. Stud., 2, János Bolyai Math. Soc., Budapest, 1996, pp. 295–352.

- "The regularity lemma and its applications in graph theory", Theoretical aspects of computer science (Tehran, 2000), Lecture Notes in Computer Science, 2292, Springer, Berlin, 2002, pp. 84–112, doi:10.1007/3-540-45878-6_3, ISBN 978-3-540-43328-6.

External links

- Edmonds, Chelsea; Koutsoukou-Argyraki, Angeliki; Paulson, Lawrence C. Szemerédi's regularity lemma (Formal proof development in Isabelle/HOL, Archive of Formal Proofs)

|