In-situ processing

In-situ processing, also known as in-storage processing (ISP), is a computer science term for processing data where it resides. In-situ means "situated in the original, natural, or existing place or position." An in-situ process operates on data where it is stored, for example in solid-state drives (SSDs) or in memory devices such as NVDIMMs, rather than sending the data to the computer's central processing unit (CPU). The technology uses processing engines embedded in the storage devices to run user applications in place, so the data does not need to leave the device to be processed. The idea is not new, but modern SSD architectures and the availability of powerful embedded processors make running user applications in place more appealing.[1] SSDs deliver higher data throughput than hard disk drives (HDDs), and, unlike HDDs, they can handle multiple I/O commands concurrently.

SSDs contain a considerable amount of processing power for managing the flash memory array and providing a high-speed interface to host machines. This processing capability can provide an environment for running user applications in place. The term computational storage device (CSD) refers to an SSD that is capable of running user applications in place. In an efficient CSD architecture, the embedded in-storage processing subsystem accesses the data stored in the flash memory array through a low-power, high-speed link. Deploying such CSDs in clusters can increase the overall performance and efficiency of big data and high-performance computing (HPC) applications.[1]
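The difference between sending data to the CPU and running the application in place can be sketched with a toy model. This is a minimal illustration under stated assumptions, not an interface of any real CSD: the `StorageDevice` class, its `read_all` and `run_in_place` methods, and the log-filtering workload are all hypothetical. The model only counts how many bytes would cross the host interface in each case.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StorageDevice:
    """Hypothetical model of an SSD; counts bytes sent over its host interface."""
    records: List[bytes]
    bytes_sent_to_host: int = 0

    def read_all(self) -> List[bytes]:
        # Conventional path: every stored record crosses the host interface.
        self.bytes_sent_to_host += sum(len(r) for r in self.records)
        return list(self.records)

    def run_in_place(self, predicate: Callable[[bytes], bool]) -> List[bytes]:
        # In-storage path: the embedded engine applies the predicate locally,
        # so only the matching records cross the host interface.
        matches = [r for r in self.records if predicate(r)]
        self.bytes_sent_to_host += sum(len(r) for r in matches)
        return matches


def is_error(record: bytes) -> bool:
    return record.startswith(b"error")


# 10,000 records of 11 bytes each; 1 in 100 is an error record.
records = [b"error: disk" if i % 100 == 0 else b"ok: nominal" for i in range(10_000)]

# Host-side processing: pull everything, then filter on the CPU.
ssd = StorageDevice(records)
host_result = [r for r in ssd.read_all() if is_error(r)]

# In-storage processing: push the filter to the device.
csd = StorageDevice(records)
csd_result = csd.run_in_place(is_error)

print(ssd.bytes_sent_to_host, csd.bytes_sent_to_host)  # 110000 1100
```

Both paths return the same records, but in this toy case the in-place path moves 100 times fewer bytes across the interface. Real savings depend on how selective the workload is.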

Reducing data transfer bottlenecks

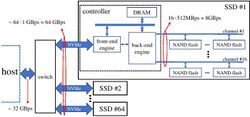

Webscale data center designers have been trying to develop storage architectures that favor high-capacity hosts. The figure above (from [1]) shows such a storage system, in which 64 SSDs are attached to a host; for simplicity, only the internals of one SSD are shown. Modern SSDs usually contain 16 or more flash memory channels that can be used concurrently for flash memory array I/O operations. Assuming a bandwidth of 512 MB/s per channel, the internal bandwidth of an SSD with 16 flash memory channels is about 8 GB/s. This bandwidth drops to about 1 GB/s at the SSD's external interface because of the complexity of the host interface software and hardware architecture. Across the whole system, the accumulated internal bandwidth of the 64 SSDs is the product of the number of SSDs, the number of channels per SSD, and the per-channel bandwidth: 64 × 16 × 512 MB/s = 512 GB/s. The accumulated bandwidth of the SSDs' external interfaces, by contrast, is 64 × 1 GB/s = 64 GB/s. Moreover, to communicate with the host, all SSDs must be connected through a PCIe switch, which limits the bandwidth available to the host to 32 GB/s.
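The bandwidth arithmetic in the paragraph above can be reproduced in a few lines. The parameters are the figures quoted in the text; treating 1 GB as 1024 MB is an assumption made so that 16 × 512 MB/s comes out to exactly 8 GB/s. The key step is that the bandwidth reaching the host is bounded by the narrowest stage on the path, here the PCIe switch.

```python
# Parameters of the example system described above (from [1]).
NUM_SSDS = 64
CHANNELS_PER_SSD = 16
CHANNEL_MB_S = 512      # bandwidth of one flash channel, MB/s
HOST_IF_GB_S = 1        # effective host-interface bandwidth of one SSD, GB/s
PCIE_SWITCH_GB_S = 32   # bandwidth the PCIe switch makes available to the host, GB/s


def system_bandwidths():
    """Return (per-SSD internal, total internal, total external, host-available) in GB/s."""
    per_ssd_internal = CHANNELS_PER_SSD * CHANNEL_MB_S / 1024  # 1 GB = 1024 MB here
    internal_total = NUM_SSDS * per_ssd_internal
    external_total = NUM_SSDS * HOST_IF_GB_S
    # Data reaching the host cannot exceed the narrowest stage on its path.
    host_available = min(external_total, PCIE_SWITCH_GB_S)
    return per_ssd_internal, internal_total, external_total, host_available


per_ssd, internal, external, host = system_bandwidths()
print(per_ssd, internal, external, host)  # 8.0 512.0 64 32
print(internal / host)                     # 16.0
```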

Overall, there is a 16-fold gap between the accumulated internal bandwidth of all SSDs and the bandwidth available to the host. For example, reading 32 TB of data takes the host roughly 1,000 seconds (about 17 minutes), while the internal components of the SSDs could read the same amount of data in about 1 minute. In addition, in such storage systems data must continuously move through the complex hardware and software stack between hosts and storage units, which consumes a considerable amount of energy and dramatically decreases the energy efficiency of large data centers. Storage architects therefore need techniques that reduce data movement, and ISP technology was introduced to address these challenges by moving the processing to the data.
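The read-time comparison follows directly from dividing the data volume by each bandwidth. This sketch assumes binary units (1 TB = 1024 GB); with decimal units the host time is 1,000 seconds instead of 1,024, and the 16-fold ratio is the same either way.

```python
def read_time_seconds(data_tb: float, bandwidth_gb_s: float) -> float:
    """Time to stream data_tb terabytes at bandwidth_gb_s (binary units: 1 TB = 1024 GB)."""
    return data_tb * 1024 / bandwidth_gb_s


DATA_TB = 32
HOST_GB_S = 32        # bandwidth available to the host
INTERNAL_GB_S = 512   # accumulated internal bandwidth of all 64 SSDs

host_s = read_time_seconds(DATA_TB, HOST_GB_S)          # 1024.0 s
internal_s = read_time_seconds(DATA_TB, INTERNAL_GB_S)  # 64.0 s
print(f"host: {host_s / 60:.1f} min, internal: {internal_s / 60:.1f} min, "
      f"gap: {host_s / internal_s:.0f}x")
# host: 17.1 min, internal: 1.1 min, gap: 16x
```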

Efficiency and utilization

Computational storage technology minimizes data movement in a cluster and also increases the cluster's processing power by adding power-efficient processing engines to the system. The technology can in principle be applied to both HDDs and SSDs; however, modern SSD architecture provides better tools for developing it. SSDs that can run user applications in place are called computational storage devices (CSDs). These devices are supplementary processing resources: they are not designed to replace the high-end processors of modern servers. Instead, they collaborate with the host's CPU and contribute their power-efficient processing capacity to the system. The scientific article “Computational storage: an efficient and scalable platform for big data and HPC applications”,[1] published open access in Springer's Journal of Big Data, shows the benefits of using CSDs in clusters.
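One way to picture CSDs augmenting, rather than replacing, the host CPU is a scheduler that splits a batch of work between the host and several CSD engines in proportion to their throughput, so that all of them finish at about the same time. This is a minimal sketch; the proportional-split policy, the function names, and the throughput figures are hypothetical assumptions for illustration, not measurements or methods from [1].

```python
from typing import Dict


def split_work(total_items: int, rates: Dict[str, float]) -> Dict[str, int]:
    """Assign items to engines in proportion to their rates (items/s)
    so that all engines finish at roughly the same time."""
    total_rate = sum(rates.values())
    shares = {name: int(total_items * rate / total_rate) for name, rate in rates.items()}
    # Hand any rounding remainder to the fastest engine.
    fastest = max(rates, key=lambda name: rates[name])
    shares[fastest] += total_items - sum(shares.values())
    return shares


def makespan(shares: Dict[str, int], rates: Dict[str, float]) -> float:
    """Time until the slowest engine finishes its share."""
    return max(shares[name] / rates[name] for name in shares)


# Hypothetical rates: one host CPU plus four slower, power-efficient CSD engines.
rates = {"host": 400.0, "csd0": 100.0, "csd1": 100.0, "csd2": 100.0, "csd3": 100.0}
plan = split_work(1_000_000, rates)

host_only = 1_000_000 / rates["host"]   # 2500.0 s with the host CPU alone
combined = makespan(plan, rates)        # 1250.0 s with host and CSDs together
print(plan, host_only, combined)
```

Even though each simulated CSD is four times slower than the host, adding four of them halves the total time in this example, which is the sense in which CSDs augment the host.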

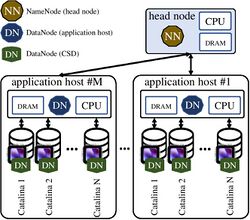

Examples of in-situ processing can be seen in fields such as visualization,[2] biology[3] and chemistry. In these fields, processing data where it resides yields results more efficiently than first moving the data elsewhere, whatever kind of data is involved. The figure above (from [1]) shows how CSDs can be used in an Apache Hadoop cluster and in a Message Passing Interface (MPI)-based distributed environment.
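The Hadoop and MPI deployments described in [1] can be summarized, in simplified form, as a map step that each CSD runs over the data stored on it, followed by a reduce step on the host over the much smaller partial results. The sketch below uses a word count as a stand-in workload; the shard contents and the `map_on_csd` and `reduce_on_host` functions are hypothetical, and the code does not use Hadoop's or MPI's actual APIs.

```python
from collections import Counter
from typing import Iterable, List


def map_on_csd(local_lines: Iterable[str]) -> Counter:
    # Conceptually runs inside the storage device: scans its own shard and
    # returns a compact summary (word counts) instead of the raw text.
    counts: Counter = Counter()
    for line in local_lines:
        counts.update(line.split())
    return counts


def reduce_on_host(partials: List[Counter]) -> Counter:
    # Runs on the host CPU: merges the small partial results from every CSD.
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


# Hypothetical data: each inner list is the shard stored on one CSD.
shards = [
    ["flash channel flash", "host interface"],
    ["flash array", "host host"],
]
partials = [map_on_csd(shard) for shard in shards]
result = reduce_on_host(partials)
print(result["flash"], result["host"])  # 3 3
```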

Industry

In the storage industry, implementations from several companies are now available, including NGD Systems,[4] ScaleFlux[5] and Eideticom.[6] Other companies, including Micron Technology[7] and Samsung, have attempted similar work in the past. All of these approaches share the same direction: managing or processing data where it resides.

NGD Systems was the first company to create in-situ processing storage and has produced two versions of its device since 2017. The Catalina-1 was a standalone SSD that offered 24 TB of flash along with processing.[4] A second product, Newport, was released in 2018 and offered up to 32 TB of flash memory.[8][9]

ScaleFlux's CSS-1000 is an NVMe device that relies on host resources and kernel changes to address the device and to manage up to 6.4 TB of flash on the device (the base SSD).[10] Eideticom's No-Load is a DRAM-only NVMe device that acts as an accelerator and has no flash storage for persistent data.[11] Micron presented its version, called ‘Scale In’, at the 2013 Flash Memory Summit (FMS); it was based on a production SATA SSD, but Micron never turned it into a product.[7] Samsung has worked on various such devices, including KV Store devices.[12]

References

- [1] Torabzadehkashi, Mahdi; Rezaei, Siavash; HeydariGorji, Ali; Bobarshad, Hossein; Alves, Vladimir; Bagherzadeh, Nader (15 November 2019). "Computational storage: an efficient and scalable platform for big data and HPC applications". Journal of Big Data 6 (100). doi:10.1186/s40537-019-0265-5.

- [2] Raffin, Bruno (December 2014). "In-Situ_2014". ftp://ftp.inf.ufrgs.br/pub/geyer/PDP-Pos/slidesAlunos/Slides2015-2/AulasExtras/in-situ-Bruno-RAFFIN-INRIA.pdf.

- [3] "In situ Structural Biology". Utrecht University. 2016-03-17. https://www.uu.nl/en/research/cryo-em/research.

- [4] "Computational storage takes spotlight in new NGD Systems SSD". Tech Target. 2020-02-13. https://searchstorage.techtarget.com/news/252459062/Computational-storage-takes-spotlight-in-new-NGD-Systems-SSD.

- [5] "What if I told you that flash drives could do their own processing?". The Register. 2020-02-13. https://www.theregister.co.uk/2018/02/13/bringing_compute_to_flash_drives_to_unleash_in_situ_processing/.

- [6] "IDC Innovators: Computational Storage, 2019". IDC. 2020-02-13. https://www.idc.com/getdoc.jsp?containerId=US45416319.

- [7] Doller, Ed (14 August 2013). "Micron Scale In Keynote - 2013 FMS". https://www.flashmemorysummit.com/English/Collaterals/Proceedings/2013/20130814_Keynote5_Doller.pdf.

- [8] "NGD Systems Releases First 16TB NVMe Computational U.2 SSD". Storage Review. 2020-02-13. https://www.storagereview.com/node/6973.

- [9] "$20M for Upstart Storage Device Firm NGD". Orange County Business Journal. 2020-02-13. https://www.ocbj.com/news/2020/feb/10/20m-upstart-storage-device-firm-ngd/.

- [10] "Data-Driven Computational Storage Server Solution (Compute and Storage Acceleration Solution)". Inspur. http://xeonscalable.inspursystems.com/solution/data-driven-computational-storage-server-solution/.

- [11] "Modern Storage Technologies in 2020: What You Need to Know". 2020-02-13. https://bigstep.com/blog/modern-storage-technologies-in-2020.

- [12] Do, Jaeyoung; Kee, Yang-Suk; Patel, Jignesh M.; Park, Chanik; Park, Kwanghyun; DeWitt, David J. (2013-06-22). "Query processing on smart SSDs: opportunities and challenges". ACM. pp. 1221–1230. doi:10.1145/2463676.2465295. ISBN 9781450320375.
