CUSUM

| CUSUM chart | |
|---|---|
| Originally proposed by | E. S. Page |
| Process observations | |
| Rational subgroup size | n = 1 |
| Measurement type | Cumulative sum of a quality characteristic |
| Quality characteristic type | Variables data |
| Underlying distribution | Normal distribution |
| Performance | |
| Size of shift to detect | ≤ 1.5σ |
| Process variation chart | |
| Not applicable | |
| Process mean chart | |
| Center line | The target value, T, of the quality characteristic |
| Upper control limit | |
| Lower control limit | |
| Plotted statistic | |

In statistical quality control, the CUSUM (or cumulative sum control chart) is a sequential analysis technique developed by E. S. Page of the University of Cambridge. It is typically used for monitoring change detection.[1] CUSUM was announced in Biometrika, in 1954, a few years after the publication of Wald's sequential probability ratio test (SPRT).[2]

E. S. Page referred to a "quality number" $\theta$, by which he meant a parameter of the probability distribution; for example, the mean. He devised CUSUM as a method to determine changes in it, and proposed a criterion for deciding when to take corrective action. When the CUSUM method is applied to changes in mean, it can be used for step detection of a time series.

A few years later, George Alfred Barnard developed a visualization method, the V-mask chart, to detect both increases and decreases in $\theta$.[3]

Method

As its name implies, CUSUM involves the calculation of a cumulative sum (which is what makes it "sequential"). Samples $x_n$ from a process are assigned weights $\omega_n$ and summed as follows:

$$
\begin{aligned}
S_0 &= 0 \\
S_{n+1} &= \max(0,\ S_n + x_n - \omega_n)
\end{aligned}
$$

When the value of $S$ exceeds a chosen threshold value, a change has been detected. The above formula only detects changes in the positive direction. When negative changes need to be found as well, the min operation should be used instead of the max operation, and a change is then detected when the value of $S$ falls below the negative of the threshold value.

Page did not explicitly say that $\omega$ represents the likelihood function, but this is common usage.

Note that this differs from the SPRT in that zero always serves as a lower "holding barrier", at which the sum is held, rather than as a lower decision boundary.[1] Also, CUSUM does not require the use of the likelihood function.
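
The one-sided recursion described in this section can be sketched in a few lines of Python. This is a minimal illustration, not Page's original procedure: the function name `cusum_first_alarm` is hypothetical, and the weight is assumed to be a single constant rather than a per-sample value.

```python
from typing import Iterable, Optional


def cusum_first_alarm(samples: Iterable[float],
                      weight: float,
                      threshold: float) -> Optional[int]:
    """Return the 1-based count of samples seen when S first exceeds
    the threshold, or None if it never does.

    Assumption: a constant weight is subtracted from every sample.
    """
    s = 0.0  # S_0 = 0
    for n, x in enumerate(samples, start=1):
        # Accumulate, but never let the sum drop below zero
        # (zero acts as the lower holding barrier).
        s = max(0.0, s + x - weight)
        if s > threshold:
            return n
    return None


# Hypothetical data: the level rises from about 0 to about 1 at the 4th sample.
data = [0.25, -0.25, 0.0, 1.25, 1.0, 1.5, 1.0]
print(cusum_first_alarm(data, weight=0.5, threshold=2.0))
```

With these made-up values the running sum stays at zero for the first three samples and then grows to 0.75, 1.25, and 2.25, so the alarm is raised at the sixth sample. Replacing `max` with `min`, adding the weight, and testing against the negative threshold gives the corresponding detector for downward changes.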

As a means of assessing CUSUM's performance, Page defined the average run length (A.R.L.) metric; "the expected number of articles sampled before action is taken." He further wrote:[2]

> When the quality of the output is satisfactory the A.R.L. is a measure of the expense incurred by the scheme when it gives false alarms, i.e., Type I errors (Neyman & Pearson, 1936[4]). On the other hand, for constant poor quality the A.R.L. measures the delay and thus the amount of scrap produced before the rectifying action is taken, i.e., Type II errors.
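
The average run length can be approximated by simulation. The sketch below is illustrative only: it assumes unit-variance normal observations, a constant weight of 0.5, and a threshold of 4, none of which come from Page's paper, and the names `run_length` and `estimate_arl` are hypothetical.

```python
import random


def run_length(mean: float, weight: float, threshold: float,
               rng: random.Random, max_steps: int = 100_000) -> int:
    """Number of samples drawn before the one-sided CUSUM raises an alarm.

    Samples are assumed to be normal with the given mean and unit variance.
    The run is cut off at max_steps to guarantee termination.
    """
    s = 0.0
    for n in range(1, max_steps + 1):
        s = max(0.0, s + rng.gauss(mean, 1.0) - weight)
        if s > threshold:
            return n
    return max_steps


def estimate_arl(mean: float, weight: float = 0.5, threshold: float = 4.0,
                 trials: int = 200, seed: int = 0) -> float:
    """Monte Carlo estimate of the average run length for a given process mean."""
    rng = random.Random(seed)
    total = sum(run_length(mean, weight, threshold, rng) for _ in range(trials))
    return total / trials


print("in-control ARL (mean 0):", estimate_arl(0.0))
print("shifted ARL (mean 1):   ", estimate_arl(1.0))
```

Under these assumptions the in-control estimate should come out far larger than the shifted one, which mirrors the two roles Page describes: a long run length is desirable when quality is satisfactory, and a short one is desirable when it is not.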

Example

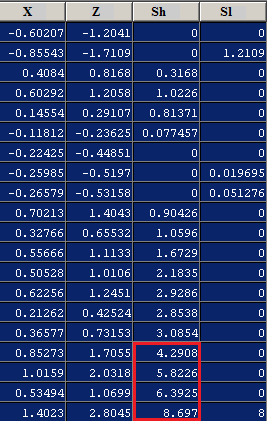

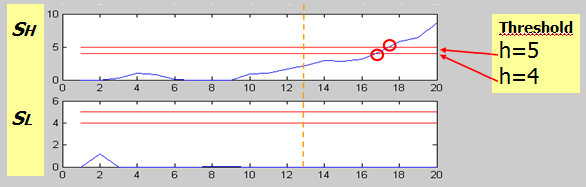

The following example shows 20 observations of a process with a mean of 0 and a standard deviation of 0.5.

From the $Z$ column, it can be seen that $X$ never deviates by 3 standard deviations ($3\sigma$), so simply alerting on a high deviation will not detect a failure, whereas CUSUM shows that the $S_H$ value exceeds 4 at the 17th observation.

| Column | Description |
|---|---|
| $X$ | The observations of the process with an expected mean of 0 and an expected standard deviation of 0.5 |
| $Z$ | The normalized observations, i.e. centered around the mean and scaled by the standard deviation |
| $S_H$ | The high CUSUM value, detecting a positive anomaly, $S_{H,n+1} = \max(0,\ S_{H,n} + Z_n - \omega)$ |
| $S_L$ | The low CUSUM value, detecting a negative anomaly, $S_{L,n+1} = \min(0,\ S_{L,n} + Z_n + \omega)$ |

where $\omega$ is a critical level parameter (tunable, like the threshold T) that is used to adjust the sensitivity of change detection: a larger $\omega$ makes CUSUM less sensitive to the change, and vice versa.
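
The four columns can be computed together as in the following sketch. The observations used here are made-up values chosen for easy arithmetic, not the 20 observations of the example, and the function name `cusum_table` and the choice of 0.5 for the critical level are assumptions.

```python
from typing import List, Sequence, Tuple


def cusum_table(observations: Sequence[float], mean: float, std: float,
                omega: float) -> List[Tuple[float, float, float, float]]:
    """Return one (X, Z, S_H, S_L) row per observation.

    Each row holds the CUSUM values after that observation has been added.
    """
    rows = []
    s_hi = 0.0
    s_lo = 0.0
    for x in observations:
        z = (x - mean) / std                  # normalized observation
        s_hi = max(0.0, s_hi + z - omega)     # accumulates upward drift
        s_lo = min(0.0, s_lo + z + omega)     # accumulates downward drift
        rows.append((x, z, s_hi, s_lo))
    return rows


# Hypothetical observations: expected mean 0, expected standard deviation 0.5.
obs = [0.0, 0.5, -0.5, 0.25, 1.0, 1.0, 1.25, 1.0, 1.25]
for x, z, s_hi, s_lo in cusum_table(obs, mean=0.0, std=0.5, omega=0.5):
    print(f"{x:6.2f} {z:6.2f} {s_hi:6.2f} {s_lo:6.2f}")
```

In this made-up series no normalized value reaches 3 in magnitude, yet the high CUSUM climbs through 1.5 and 3.0 to 5.0 and passes a threshold of 4 at the seventh observation, which is the same effect the example describes.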

Variants

Cumulative observed-minus-expected plots[1] are a related method.

References

1. Grigg et al. (2003). "The Use of Risk-Adjusted CUSUM and RSPRT Charts for Monitoring in Medical Contexts". Statistical Methods in Medical Research 12 (2): 147–170. doi:10.1177/096228020301200205. PMID 12665208.

2. Page, E. S. (June 1954). "Continuous Inspection Scheme". Biometrika 41 (1/2): 100–115. doi:10.1093/biomet/41.1-2.100.

3. Barnard, G. A. (1959). "Control charts and stochastic processes". Journal of the Royal Statistical Society B (Methodological) 21 (2): 239–271.

4. Neyman, J.; Pearson, E. S. (1936). "Sufficient statistics and uniformly most powerful tests of statistical hypotheses". Statistical Research Memoirs I: 113–137.

Further reading

- Michèle Basseville and Igor V. Nikiforov (April 1993). Detection of Abrupt Changes: Theory and Application. Englewood Cliffs, NJ: Prentice-Hall. ISBN 0-13-126780-9. http://www.irisa.fr/sisthem/kniga/.

- Mishra, S., Vanli, O. A., & Park, C (2015). "A Multivariate Cumulative Sum Method for Continuous Damage Monitoring with Lamb-wave Sensors", International Journal of Prognostics and Health Management, ISSN 2153-2648
