Color Cell Compression

Color Cell Compression is a lossy image compression algorithm developed by Campbell et al.[1][2][3] in 1986. It can be considered an early forerunner of modern texture compression algorithms such as S3 Texture Compression and Adaptive Scalable Texture Compression. It is closely related to Block Truncation Coding,[4] an earlier lossy image compression algorithm: both use the dominant luminance of a block of pixels to partition those pixels into two groups, each represented by a single value. The primary difference is that Block Truncation Coding was designed to compress grayscale images, whereas Color Cell Compression was designed to compress color images. In addition, Block Truncation Coding requires computing the standard deviation of the pixel values in a block, which Color Cell Compression does not use. Both algorithms, though, can compress an image down to effectively 2 bits per pixel.

Algorithm

Compression

The Color Cell Compression algorithm processes an image in eight steps, of which the sixth is optional. The input is assumed here to be a 24 bits/pixel image, as in the original paper, although other bit depths could be used.

- For each triple of 8-bit R, G, and B values making up a 24-bit color value in the input image, the NTSC luminance is computed using the following formula: $Y = 0.30R + 0.59G + 0.11B$.[1][2][3]

- The image is then subdivided into blocks of 4 × 4 pixels, and the arithmetic mean of the luminances of the pixels in each block is used as that block's representative luminance value.[1][2][3]

- Each block of pixels is then divided into two groups. The first group consists of the pixels whose luminance is greater than or equal to the block's representative luminance; the second consists of the pixels whose luminance is less than it. Group membership is recorded for each pixel as a "1" (first group) or a "0" (second group) in a separate 16-entry bitmap, referred to below as the luminance bitmap.[1][2][3]

- Two representative 24-bit colors are then computed for each block. The first is the arithmetic mean of the colors of all pixels in the first group, that is, those marked "1" in the luminance bitmap. The second is computed in the same way from the pixels in the second group, those marked "0" in the luminance bitmap.[1][2][3]

- The luminance bitmap is stored in a temporary location, and the two 24-bit representative colors for the current block are appended to it. At this stage, each block has been compressed into a 16-entry bitmap followed by two 24-bit values, for a total of 16 + 24 + 24 = 64 bits. Divided by 16 (the number of pixels in the block), this yields 4 bits per pixel.[1][2][3]

- Each compressed block of pixels is modified by truncating each of the two 24-bit representative colors to 15 bits. This step is optional. The algorithm can terminate at this point if desired, since each compressed block then has a total size of 16 + 15 + 15 = 46 bits, which, when divided by 16, yields 2.875 bits per pixel. If this step is performed, the 15-bit truncated color values can be used in the next step to create a smaller histogram. Also, since each 15-bit color vector is presumably stored in a 16-bit word, the 16th bit can be used to improve image quality by specifying which of two lookup tables should be used.[1][2][3]

- A histogram of all the 24-bit colors in the original 24-bit color image, or the truncated 15-bit color vectors, is created. In a naïve implementation, the histogram is consulted to choose 256 of the most frequently used colors which are then put into a 256-entry array, where each entry consists of three octets of a 24-bit per pixel color value. The histogram method of selecting the most appropriate colors for the original 24-bit per pixel color image can instead be replaced by a vector quantization class algorithm such as the median cut algorithm or K-means clustering[citation needed] which usually yields better results.[1][2][3]

- The final step consists of determining, for each block, which 24-bit colors in the 256-entry lookup table most closely match the block's two representative colors. The two 8-bit indices pointing to those colors in the lookup table are then appended to the 16-bit luminance bitmap. This yields a total compressed size of 16 + 8 + 8 = 32 bits, which, when divided by 16, yields 2 bits per pixel.[1][2][3]
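The per-block steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function names, the row-major pixel order, the bit order of the bitmap, the 32-bit packing layout, and the fallback for a block with an empty second group are all assumptions made for the example. The luminance weights are the 0.30/0.59/0.11 approximation, scaled to integers so that the comparison with the block mean is exact. The lookup table is taken as given; building it (histogram, median cut, or similar) is not shown.

```python
def luminance_x100(rgb):
    """NTSC-style luminance of an (r, g, b) triple, scaled by 100.

    Integer weights 30/59/11 stand in for 0.30/0.59/0.11 (assumed
    approximation) so that no floating-point rounding is involved.
    """
    r, g, b = rgb
    return 30 * r + 59 * g + 11 * b


def mean_color(pixels):
    """Component-wise arithmetic mean of a non-empty list of (r, g, b)
    triples, rounded to the nearest integer."""
    n = len(pixels)
    return tuple((sum(p[c] for p in pixels) + n // 2) // n for c in range(3))


def compress_block(block):
    """Encode one 4x4 block, given as 16 (r, g, b) triples in row-major
    order, as (bitmap, color_for_ones, color_for_zeros)."""
    if len(block) != 16:
        raise ValueError("a block must contain exactly 16 pixels")
    lum = [luminance_x100(p) for p in block]
    total = sum(lum)
    bitmap = 0
    ones, zeros = [], []
    for i, y in enumerate(lum):
        if 16 * y >= total:          # same test as y >= mean luminance
            bitmap |= 1 << i         # assumed layout: bit i <-> pixel i
            ones.append(block[i])
        else:
            zeros.append(block[i])
    # 'ones' is never empty: the brightest pixel is always >= the mean.
    color_one = mean_color(ones)
    # Assumption: a uniform block has no "0" pixels, so reuse color_one.
    color_zero = mean_color(zeros) if zeros else color_one
    return bitmap, color_one, color_zero


def truncate_to_15_bits(color):
    """Optional step: keep the top 5 bits of each 8-bit component and
    pack them into one 15-bit integer."""
    r, g, b = color
    return ((r >> 3) << 10) | ((g >> 3) << 5) | (b >> 3)


def nearest_palette_index(color, palette):
    """Index of the palette entry closest to 'color' by squared
    Euclidean distance in RGB (one possible closeness measure)."""
    return min(
        range(len(palette)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(color, palette[i])),
    )


def pack_block(bitmap, index_one, index_zero):
    """Pack a 16-bit bitmap and two 8-bit palette indices into 32 bits
    (hypothetical layout: bitmap in the low 16 bits)."""
    return bitmap | (index_one << 16) | (index_zero << 24)
```

A block made of eight light gray pixels followed by eight dark pixels, for example, produces a bitmap whose low eight bits are set, and the two group means become the representative colors that are then matched against the lookup table.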

Decompression

Decompression is straightforward. To reconstruct a compressed 4 × 4 block, the block's 16-bit luminance bitmap is consulted pixel by pixel. Depending on whether a pixel's entry in the bitmap is 1 or 0, one of the block's two 8-bit lookup table indices is selected and dereferenced to retrieve the corresponding 24-bit color value.[1][2][3]
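As a rough sketch of this lookup, the following Python functions rebuild the 16 pixels of one block. The function names, the row-major pixel order, the convention that bit i of the bitmap belongs to pixel i, and the 32-bit word layout in `unpack_block` are assumptions made for the illustration, not part of the published format.

```python
def unpack_block(word):
    """Split a 32-bit compressed block into (bitmap, index_one, index_zero).

    Hypothetical layout: bitmap in bits 0-15, the index used for "1"
    pixels in bits 16-23, the index used for "0" pixels in bits 24-31.
    """
    bitmap = word & 0xFFFF
    index_one = (word >> 16) & 0xFF
    index_zero = (word >> 24) & 0xFF
    return bitmap, index_one, index_zero


def decompress_block(bitmap, index_one, index_zero, palette):
    """Return the 16 (r, g, b) pixels of a 4x4 block in row-major order.

    Each bitmap bit selects one of the two palette indices, which is
    then dereferenced in the lookup table.
    """
    return [
        palette[index_one] if (bitmap >> i) & 1 else palette[index_zero]
        for i in range(16)
    ]
```

Because every block occupies the same number of bits and is decoded independently of its neighbors, any block can be located and decoded directly without touching the rest of the image.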

Performance and image quality

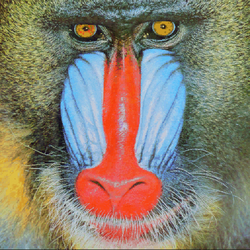

Despite its very simple mechanism, the algorithm yields surprisingly good results on photographic images,[1][2][3] and it is very fast to decode on limited hardware. Although later block-transform coding methods such as JPEG far surpass it in compression ratio, it retains the advantages of very simple decompression and fast random access into the compressed image.

Apple Video (RPZA) and S3 Texture Compression employ the same principle of encoding 4×4-pixel blocks based on two representative colors. They refine CCC by expanding each entry in the luminance bitmap to two bits, where the additional two values represent a weighted average: one-third of one color and two-thirds of the other. To work around S3's patent, the Super Simple Texture Compression (S2TC) library was created to encode CCC data in a format compatible with S3TC decoders and to reinterpret S3TC as CCC with minor quality loss.
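The two-bit refinement can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions: the function names, the order of the four palette entries, the bit layout of the index field, and the use of integer division are choices made for the example, and real formats additionally specify how the two endpoint colors are stored and rounded.

```python
def four_color_palette(c0, c1):
    """Build four per-block colors from two representative colors:
    the two endpoints plus two weighted averages (2/3 of one color
    with 1/3 of the other)."""
    mostly_c0 = tuple((2 * a + b) // 3 for a, b in zip(c0, c1))
    mostly_c1 = tuple((a + 2 * b) // 3 for a, b in zip(c0, c1))
    return [c0, c1, mostly_c0, mostly_c1]


def decode_two_bit_block(indices, c0, c1):
    """Decode a 4x4 block whose 32-bit 'indices' field holds one 2-bit
    selector per pixel (assumed: pixel i uses bits 2i and 2i+1)."""
    palette = four_color_palette(c0, c1)
    return [palette[(indices >> (2 * i)) & 3] for i in range(16)]
```

With black and white as the two representative colors, the two extra entries come out as a dark gray and a light gray, so smooth gradients inside a block no longer have to be flattened to two colors.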

References

- [1] Campbell, G.; DeFanti, T. A.; Frederiksen, J.; Joyce, S. A.; Leske, L. A. (1986). "Two bit/pixel full color encoding". Proceedings of the 13th annual conference on Computer graphics and interactive techniques - SIGGRAPH '86. p. 215. doi:10.1145/15922.15910. ISBN 978-0-89791-196-2.

- [2] Pins, Markus (1991). "Extensions of the Color-Cell-Compression-Algorithm". Computer Animation '91. pp. 241–251. doi:10.1007/978-4-431-66890-9_17. ISBN 978-4-431-66892-3.

- [3] Lamparter, Bernd; Effelsberg, Wolfgang (June 2005). "EXtended color cell compression: A runtime-efficient compression scheme for software video". Multimedia: Advanced Teleservices and High-Speed Communication Architectures. Lecture Notes in Computer Science. 868. pp. 181–190. doi:10.1007/3-540-58494-3_16. ISBN 978-3-540-58494-0. http://madoc.bib.uni-mannheim.de/madoc/volltexte/2004/836/ [permanent dead link]

- [4] Wennersten, P.; Ström, J. (2009). "Table-based Alpha Compression". Computer Graphics Forum 28 (2): 687. doi:10.1111/j.1467-8659.2009.01409.x. http://www.jacobstrom.com/publications/alphalineWennerstenStrom09.pdf.
