Data organization for low power

Power consumption relative to the physical size of electronic hardware has increased as components have become smaller and more densely packed. Coupled with high operating frequencies, this has led to unacceptable levels of power dissipation. Memory accounts for a high proportion of the power consumed, and this contribution may be reduced by optimizing data organization, that is, the way data is stored.[1]

Motivation

Power optimization in high-memory-density electronic systems has become one of the major challenges for devices such as mobile phones, embedded systems, and wireless devices. As the number of cores on a single chip grows, the power consumed by the device also increases. Studies of the power consumption distribution in smartphones and data centers have shown that the memory subsystem consumes around 40% of the total power. In server systems, memory has been found to consume around 1.5 times as much power as the cores.[2]

Memory data organization for a low-energy address bus

System-level buses, such as off-chip buses or long on-chip buses between IP blocks, are often major sources of energy consumption because of their large load capacitance. Experimental results have shown that the bus activity for memory access can be reduced to 50% by organizing the data. Consider the following code written in the C programming language:

    int A[4][4], B[4][4];
    int i, j;

    for (i = 0; i < 4; i++) {
        for (j = 0; j < 4; j++) {
            B[i][j] = A[j][i];   /* read A column by column, write B row by row */
        }
    }

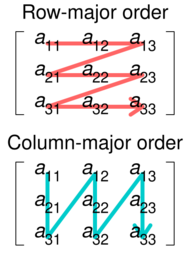

C compilers place a multidimensional array in row-major order, that is, row by row: this is shown in the "unoptimized" column in the adjoining table. With this layout, no two consecutive memory accesses made by the code are to adjacent addresses, because A is read column by column and each read of A is followed by a write to B, which lies in a different region of memory. It is possible, however, to change the way the data is placed in memory so as to maximize the number of sequential accesses. This can be achieved by ordering the data as shown in the "optimized" column of the table. Such a redistribution of data by the compiler can significantly reduce the energy consumed by memory accesses.[3]

| unoptimized | optimized |
|---|---|
| A[0][0] | A[0][0] |
| A[0][1] | B[0][0] |
| A[0][2] | A[1][0] |
| A[0][3] | B[0][1] |
| A[1][0] | A[2][0] |
| A[1][1] | B[0][2] |
| A[1][2] | A[3][0] |
| . | B[0][3] |
| . | A[0][1] |
| B[0][0] | B[1][0] |
| B[0][1] | A[1][1] |
| B[0][2] | B[1][1] |
| B[0][3] | . |
| B[1][0] | . |
| . | . |
| . | A[3][3] |
| B[3][3] | B[3][3] |
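The effect of the two layouts on the address bus can be illustrated with a small simulation. The following is only a sketch under stated assumptions: addresses are counted in array elements (words) starting at 0, the row-major layout places B directly after A, and the helper names (`trace_transpose`, `addr_a_interleaved`, and so on) are hypothetical. It replays the accesses of the transpose loop and counts how many consecutive accesses are sequential and how many address lines toggle.

```c
#define N 4

/* Row-major ("unoptimized") layout, assumed here: A in words 0..15, B in words 16..31. */
static unsigned addr_a_rowmajor(int r, int c) { return (unsigned)(r * N + c); }
static unsigned addr_b_rowmajor(int r, int c) { return (unsigned)(N * N + r * N + c); }

/* Interleaved ("optimized") layout from the table: loop iteration k reads
   A[j][i] from word 2k and writes B[i][j] to word 2k + 1. */
static unsigned addr_a_interleaved(int r, int c) { return (unsigned)(2 * (c * N + r)); }
static unsigned addr_b_interleaved(int r, int c) { return (unsigned)(2 * (r * N + c) + 1); }

typedef unsigned (*addr_fn)(int r, int c);

struct bus_stats {
    unsigned sequential;  /* transitions where address == previous address + 1 */
    unsigned toggles;     /* total address bits that changed between accesses */
};

/* Count the set bits in x. */
static unsigned count_bits(unsigned x)
{
    unsigned n = 0;
    while (x) {
        n += x & 1u;
        x >>= 1;
    }
    return n;
}

/* Replay the accesses of B[i][j] = A[j][i] and collect address-bus statistics. */
static struct bus_stats trace_transpose(addr_fn addr_a, addr_fn addr_b)
{
    struct bus_stats s = {0, 0};
    unsigned prev = 0;
    int first = 1;

    for (int i = 0; i < N; i++) {
        for (int j = 0; j < N; j++) {
            /* Each iteration reads A[j][i], then writes B[i][j]. */
            unsigned seq[2] = { addr_a(j, i), addr_b(i, j) };
            for (int k = 0; k < 2; k++) {
                if (!first) {
                    if (seq[k] == prev + 1)
                        s.sequential++;
                    s.toggles += count_bits(prev ^ seq[k]);
                }
                prev = seq[k];
                first = 0;
            }
        }
    }
    return s;
}
```

In this toy model, calling `trace_transpose(addr_a_rowmajor, addr_b_rowmajor)` reports that none of the 31 address transitions is sequential, while `trace_transpose(addr_a_interleaved, addr_b_interleaved)` reports that all 31 are, with fewer address-line toggles overall. The model ignores instruction fetches and the actual bus encoding, so the figures are illustrative rather than a measurement.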

Data structure transformations

This method involves source code transformations that modify the data structures in the source code, introduce new data structures, or modify the access mode and the access paths, with the aim of lowering power consumption. Several techniques are used to perform such transformations.

Array declaration sorting

The basic idea is to reorder the local array declarations so that the most frequently accessed arrays are placed at the top of the stack, where their memory locations can be accessed directly. To achieve this, the declarations are reorganized so that the more frequently accessed arrays come first, which requires either a static estimate or a dynamic analysis of how often each local array is accessed.
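As an illustration, the reordering might look like the sketch below. The function and array names are hypothetical, and the access frequencies are assumed to come from profiling. Note that the C language does not define how locals are laid out on the stack, so whether declaration order actually controls placement depends on the compiler and target.

```c
#define TAPS 4
#define LOG_LEN 64

/* Before: the rarely used buffer is declared first. */
int fir_before(const int *x, int n)
{
    int log_buf[LOG_LEN];             /* written once per call */
    int coeff[TAPS] = {1, 2, 2, 1};   /* read in every inner-loop iteration */
    int acc = 0;

    for (int i = 0; i + TAPS <= n; i++)
        for (int t = 0; t < TAPS; t++)
            acc += coeff[t] * x[i + t];

    log_buf[0] = acc;
    return log_buf[0];
}

/* After: the most frequently accessed array is declared first. */
int fir_after(const int *x, int n)
{
    int coeff[TAPS] = {1, 2, 2, 1};   /* hot array moved to the front */
    int log_buf[LOG_LEN];             /* cold array declared last */
    int acc = 0;

    for (int i = 0; i + TAPS <= n; i++)
        for (int t = 0; t < TAPS; t++)
            acc += coeff[t] * x[i + t];

    log_buf[0] = acc;
    return log_buf[0];
}
```

Both functions compute the same result; only the declaration order differs.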

Array scope modification (local to global)

In a program, local variables are stored on the stack and global variables are stored in data memory. This method converts local arrays into global arrays so that they are stored in data memory instead of on the stack. The location of a global array can be determined at compile time, whereas the location of a local array can only be determined when the subprogram is called and depends on the value of the stack pointer. As a consequence, global arrays are accessed in offset addressing mode with a constant offset of 0, while local arrays, excluding the first, are accessed with a non-zero constant offset. Replacing the latter with the former achieves an energy reduction.
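A minimal sketch of the transformation follows, with hypothetical function and array names. The trade-off, not discussed above, is that the global version keeps its storage for the lifetime of the program and is no longer reentrant.

```c
#define LEN 16

/* Before: the array lives on the stack, so its address depends on the
   stack pointer at the time of the call. */
int sum_squares_local(int n)
{
    int scratch[LEN];
    int total = 0;

    if (n > LEN)
        n = LEN;
    for (int i = 0; i < n; i++)
        scratch[i] = i * i;
    for (int i = 0; i < n; i++)
        total += scratch[i];
    return total;
}

/* After: the array is moved to file scope, so its address is fixed
   when the program is built. */
static int scratch_global[LEN];

int sum_squares_global(int n)
{
    int total = 0;

    if (n > LEN)
        n = LEN;
    for (int i = 0; i < n; i++)
        scratch_global[i] = i * i;
    for (int i = 0; i < n; i++)
        total += scratch_global[i];
    return total;
}
```

For example, `sum_squares_local(5)` and `sum_squares_global(5)` both return 30.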

Array resizing (temporary array insertion)

In this method, elements that are accessed more frequently are identified via profiling or static considerations. A copy of these elements is then stored in a temporary array which can be accessed without any data cache miss. This results in a significant system energy reduction, but it can also reduce performance.[1]
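The idea can be sketched as follows. The table size, the set of hot indices (assumed to be a profiling result), and the function names are all hypothetical; the sketch also assumes the table is not modified while the temporary copy is in use.

```c
#define TABLE_LEN 1024
#define HOT_LEN 4

/* Hypothetical profiling result: the indices the loop reads most often. */
static const int hot_index[HOT_LEN] = {3, 257, 512, 1000};

/* Before: every iteration reads scattered elements of the large table. */
long weighted_sum_direct(const int *table, int rounds)
{
    long acc = 0;

    for (int r = 0; r < rounds; r++)
        for (int h = 0; h < HOT_LEN; h++)
            acc += (long)table[hot_index[h]] * (r + 1);
    return acc;
}

/* After: the hot elements are copied once into a small temporary array,
   and the loop works on the compact copy. */
long weighted_sum_temp(const int *table, int rounds)
{
    int hot[HOT_LEN];
    long acc = 0;

    for (int h = 0; h < HOT_LEN; h++)
        hot[h] = table[hot_index[h]];

    for (int r = 0; r < rounds; r++)
        for (int h = 0; h < HOT_LEN; h++)
            acc += (long)hot[h] * (r + 1);
    return acc;
}
```

The copy adds work before the loop starts, which is one way the transformation can cost performance when the hot elements are reused only a few times.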

Using scratchpad memory

On-chip caches use static RAM that consumes between 25% and 50% of the total chip power and occupies about 50% of the total chip area. Scratchpad memory occupies less area than on-chip caches. This typically reduces the energy consumption of the memory unit, because less area implies a reduction in the total switched capacitance. Current embedded processors, particularly those used in multimedia applications and graphics controllers, have on-chip scratchpad memories. In cache memory systems, program elements are mapped at run time, whereas in scratchpad memory systems the mapping is done either by the user or automatically by the compiler using a suitable algorithm.[4]
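The text does not specify which allocation algorithm is used. As one plausible illustration only, the sketch below applies a greedy heuristic that places the objects with the most accesses per byte into the scratchpad until it is full. The `struct object` type, its fields, and `allocate_scratchpad` are hypothetical names, and the access counts are assumed to come from profiling or static analysis.

```c
#include <stdlib.h>

/* A program element (array or variable) that is a candidate for the scratchpad. */
struct object {
    const char *name;
    unsigned size_bytes;
    unsigned accesses;     /* estimated number of accesses */
    int in_scratchpad;     /* set to 1 if the object is placed in the scratchpad */
};

/* Order objects by accesses per byte, highest first, without using division. */
static int by_density_desc(const void *pa, const void *pb)
{
    const struct object *a = pa;
    const struct object *b = pb;
    unsigned long long lhs = (unsigned long long)a->accesses * b->size_bytes;
    unsigned long long rhs = (unsigned long long)b->accesses * a->size_bytes;

    return (lhs < rhs) - (lhs > rhs);
}

/* Greedily mark objects for the scratchpad; returns the number of bytes used.
   The array is reordered by access density as a side effect. */
unsigned allocate_scratchpad(struct object *objs, size_t count,
                             unsigned capacity_bytes)
{
    unsigned used = 0;

    qsort(objs, count, sizeof objs[0], by_density_desc);
    for (size_t i = 0; i < count; i++) {
        objs[i].in_scratchpad = 0;
        if (objs[i].size_bytes <= capacity_bytes - used) {
            objs[i].in_scratchpad = 1;
            used += objs[i].size_bytes;
        }
    }
    return used;
}
```

A greedy choice is simple but not always optimal. The underlying problem resembles a knapsack problem, so an exact formulation may give a better placement for the same capacity.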

References

- Brandolese, Carlo; Fornaciari, William; Salice, Fabio; Sciuto, Donatella (October 2002). "The Impact of Source Code Transformations on Software Power and Energy Consumption". Journal of Circuits, Systems and Computers 11 (5): 477–502. doi:10.1142/S0218126602000586. https://www.researchgate.net/publication/220337903_The_Impact_of_Source_Code_Transformations_on_Software_Power_and_Energy_Consumption.

- Panda, P.R.; Patel, V.; Shah, P.; Sharma, N.; Srinivasan, V.; Sarma, D. (3–7 January 2015). "Power Optimization Techniques for DDR3 SDRAM". 28th International Conference on VLSI Design (VLSID), 2015. IEEE. pp. 310–315. doi:10.1109/VLSID.2015.59.

- "Power Optimization Techniques for DDR3 SDRAM"

- "Scratchpad Memory: A Design Alternative for Cache On-chip memory in Embedded Systems". http://robertdick.org/aeos/reading/banakar-scratchpad.pdf.
