Quasi-Monte Carlo methods in finance

High-dimensional integrals in hundreds or thousands of variables occur commonly in finance. These integrals have to be computed numerically to within a threshold [math]\displaystyle{ \epsilon }[/math]. If the integral is of dimension [math]\displaystyle{ d }[/math] then in the worst case, where one has a guarantee of error at most [math]\displaystyle{ \epsilon }[/math], the computational complexity is typically of order [math]\displaystyle{ \epsilon^{-d} }[/math]. That is, the problem suffers from the curse of dimensionality. In 1977 P. Boyle, University of Waterloo, proposed using Monte Carlo (MC) to evaluate options.[1] Starting in early 1992, J. F. Traub, Columbia University, and a graduate student at the time, S. Paskov, used quasi-Monte Carlo (QMC) to price a collateralized mortgage obligation (CMO) with parameters specified by Goldman Sachs. Even though the world's leading experts believed that QMC should not be used for high-dimensional integration, Paskov and Traub found that QMC beat MC by one to three orders of magnitude and also enjoyed other desirable attributes. Their results were first published[2] in 1995. Today QMC is widely used in the financial sector to value financial derivatives; see the list of books below. QMC is not a panacea for all high-dimensional integrals. A number of explanations have been proposed for why QMC is so good for financial derivatives. This continues to be a very fruitful research area.

Monte Carlo and quasi-Monte Carlo methods

Integrals in hundreds or thousands of variables are common in computational finance. These have to be approximated numerically to within an error threshold [math]\displaystyle{ \epsilon }[/math]. It is well known that if a worst case guarantee of error at most [math]\displaystyle{ \epsilon }[/math] is required then the computational complexity of integration may be exponential in [math]\displaystyle{ d }[/math], the dimension of the integrand; see [3], Ch. 3, for details. To break this curse of dimensionality one can use the Monte Carlo (MC) method defined by

- [math]\displaystyle{ \varphi^{\mathop{\rm MC}}(f)=\frac 1n \sum_{i=1}^nf(x_i), }[/math]

where the evaluation points [math]\displaystyle{ x_i }[/math] are randomly chosen. It is well known that the expected error of Monte Carlo is of order [math]\displaystyle{ n^{-1/2} }[/math]. Thus, the cost of the algorithm that has error [math]\displaystyle{ \epsilon }[/math] is of order [math]\displaystyle{ \epsilon^{-2} }[/math], independent of the dimension, which breaks the curse of dimensionality.
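The MC estimator above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the test integrand `sum_of_coordinates` and the seed handling are assumptions introduced here, chosen because the exact integral over the unit cube is known to be [math]\displaystyle{ d/2 }[/math].

```python
import random


def mc_estimate(f, d, n, seed=0):
    """Average f over n pseudo-random points in the unit cube [0,1]^d."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = [rng.random() for _ in range(d)]
        total += f(x)
    return total / n


def sum_of_coordinates(x):
    # Hypothetical test integrand: its exact integral over [0,1]^d is d/2.
    return sum(x)


if __name__ == "__main__":
    d = 10
    exact = d / 2
    # Each 16-fold increase in n should shrink the typical error about 4-fold,
    # in line with the n^(-1/2) rate; a single run fluctuates around that trend.
    for n in (100, 1_600, 25_600):
        err = abs(mc_estimate(sum_of_coordinates, d, n, seed=1) - exact)
        print(f"n={n:6d}  error={err:.5f}  n^(-1/2)={n ** -0.5:.5f}")
```

Re-running with a different `seed` changes the error at each `n`, which is the seed sensitivity of MC discussed later in the article.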

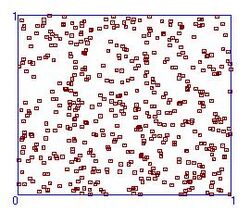

Of course in computational practice pseudo-random points are used. Figure 1 shows the distribution of 500 pseudo-random points on the unit square.

Note that there are regions with no points and other regions with clusters of points. It would be desirable to sample the integrand at uniformly distributed points. A rectangular grid would be uniform, but even if there were only 2 grid points in each Cartesian direction there would be [math]\displaystyle{ 2^d }[/math] points. So the desideratum should be as few points as possible, chosen as uniformly as possible.

It turns out there is a well-developed part of number theory which deals exactly with this desideratum. Discrepancy is a measure of deviation from uniformity, so what one wants are low discrepancy sequences (LDS).[4] Numerous LDS have been constructed and are named after their inventors, e.g.

- Halton

- Hammersley

- Sobol

- Faure

- Niederreiter

Figure 2 gives the distribution of 500 LDS points.
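As an illustration of how one of the sequences listed above can be generated, the following sketch builds Halton points from the radical inverse function, using the first [math]\displaystyle{ d }[/math] primes as bases. The helper names (`first_primes`, `radical_inverse`, `halton`) are chosen here for illustration, and the plain, unscrambled Halton construction is an assumption; it is not necessarily the variant used in the experiments described in this article.

```python
def first_primes(count):
    """Return the first `count` primes by trial division (fine for small counts)."""
    primes = []
    candidate = 2
    while len(primes) < count:
        if all(candidate % p for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes


def radical_inverse(i, base):
    """Mirror the base-`base` digits of the integer i about the radix point."""
    inv, f = 0.0, 1.0 / base
    while i > 0:
        i, digit = divmod(i, base)
        inv += digit * f
        f /= base
    return inv


def halton(n, d):
    """First n points of the d-dimensional Halton sequence (index starts at 1)."""
    bases = first_primes(d)
    return [[radical_inverse(i, b) for b in bases] for i in range(1, n + 1)]


if __name__ == "__main__":
    pts = halton(500, 2)
    print(pts[:3])
    # Fraction of points in the lower-left quarter of the unit square;
    # a uniformly spread point set should give a value close to 0.25.
    in_quarter = sum(1 for x, y in pts if x < 0.5 and y < 0.5)
    print(in_quarter / len(pts))
```

Unlike pseudo-random points, each new Halton point falls into a gap left by the earlier ones, which is what keeps the discrepancy low.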

The quasi-Monte Carlo (QMC) method is defined by

- [math]\displaystyle{ \varphi^{\mathop{\rm QMC}}(f)=\frac 1n \sum_{i=1}^nf(x_i), }[/math]

where the [math]\displaystyle{ x_i }[/math] belong to an LDS. The standard terminology quasi-Monte Carlo is somewhat unfortunate since MC is a randomized method whereas QMC is purely deterministic.

The uniform distribution of LDS is desirable. But the worst case error of QMC is of order

- [math]\displaystyle{ \frac{(\log n)^d}{n}, }[/math]

where [math]\displaystyle{ n }[/math] is the number of sample points. See [4] for the theory of LDS and references to the literature. The rate of convergence of LDS may be contrasted with the expected rate of convergence of MC which is [math]\displaystyle{ n^{-1/2} }[/math]. For [math]\displaystyle{ d }[/math] small the rate of convergence of QMC is faster than MC but for [math]\displaystyle{ d }[/math] large the factor [math]\displaystyle{ (\log n)^d }[/math] is devastating. For example, if [math]\displaystyle{ d=360 }[/math], then even with [math]\displaystyle{ \log n=2 }[/math] the QMC error is proportional to [math]\displaystyle{ 2^{360} }[/math]. Thus, it was widely believed by the world's leading experts that QMC should not be used for high-dimensional integration. For example, in 1992 Bratley, Fox and Niederreiter[5] performed extensive testing on certain mathematical problems. They conclude "in high-dimensional problems (say [math]\displaystyle{ d \gt 12 }[/math]), QMC seems to offer no practical advantage over MC". In 1993, Rensburg and Torrie[6] compared QMC with MC for the numerical estimation of high-dimensional integrals which occur in computing virial coefficients for the hard-sphere fluid. They conclude QMC is more effective than MC only if [math]\displaystyle{ d\lt 10 }[/math]. As we shall see, tests on 360-dimensional integrals arising from a collateralized mortgage obligation (CMO) lead to very different conclusions.
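To see how pessimistic the worst case bound [math]\displaystyle{ (\log n)^d/n }[/math] is for [math]\displaystyle{ d=360 }[/math], the following sketch compares it with the MC rate [math]\displaystyle{ n^{-1/2} }[/math] on a base-10 logarithmic scale. It assumes natural logarithms and omits all constant factors, so the numbers indicate orders of magnitude only.

```python
import math


def log10_qmc_bound(n, d):
    """log10 of (ln n)^d / n, computed in logarithms to avoid overflow."""
    return d * math.log10(math.log(n)) - math.log10(n)


def log10_mc_rate(n):
    """log10 of the expected MC rate n^(-1/2)."""
    return -0.5 * math.log10(n)


if __name__ == "__main__":
    for d in (1, 5, 360):
        for n in (10**3, 10**6, 10**9):
            print(
                f"d={d:3d}  n=1e{round(math.log10(n))}  "
                f"log10(QMC bound)={log10_qmc_bound(n, d):9.2f}  "
                f"log10(MC rate)={log10_mc_rate(n):6.2f}"
            )
```

For small [math]\displaystyle{ d }[/math] the bound falls below the MC rate once [math]\displaystyle{ n }[/math] is moderately large, whereas for [math]\displaystyle{ d=360 }[/math] it is astronomically large at any practical [math]\displaystyle{ n }[/math]. The bound is a worst case statement and says nothing about how QMC behaves on a particular integrand.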

Woźniakowski's 1991 paper[7] showing the connection between average case complexity of integration and QMC led to new interest in QMC. Woźniakowski's result received considerable coverage in the scientific press.[8][9] In early 1992, I. T. Vanderhoof, New York University, became aware of Woźniakowski's result and gave Woźniakowski's colleague J. F. Traub, Columbia University, a CMO with parameters set by Goldman Sachs. This CMO had 10 tranches, each requiring the computation of a 360-dimensional integral. Traub asked a Ph.D. student, Spassimir Paskov, to compare QMC with MC for the CMO. In 1992 Paskov built a software system called FinDer and ran extensive tests. To the Columbia research group's surprise and initial disbelief, Paskov reported that QMC was always superior to MC in a number of ways. Details are given below. Preliminary results were presented by Paskov and Traub to a number of Wall Street firms in Fall 1993 and Spring 1994. The firms were initially skeptical of the claim that QMC was superior to MC for pricing financial derivatives. A January 1994 article in Scientific American by Traub and Woźniakowski[9] discussed the theoretical issues and reported that "Preliminary results obtained by testing certain finance problems suggests the superiority of the deterministic methods in practice". In Fall 1994 Paskov wrote a Columbia University Computer Science Report which appeared in slightly modified form in 1997.[10]

In Fall 1995 Paskov and Traub published a paper in the "Journal of Portfolio Management".[2] They compared MC and two QMC methods. The two deterministic methods used Sobol and Halton points. Since better LDS were created later, no comparison will be made between Sobol and Halton sequences. The experiments drew the following conclusions regarding the performance of MC and QMC on the 10 tranche CMO:

- QMC methods converge significantly faster than MC

- MC is sensitive to the initial seed

- The convergence of QMC is smoother than the convergence of MC. This makes automatic termination easier for QMC.

To summarize, QMC beats MC for the CMO on accuracy, confidence level, and speed.

This paper was followed by reports on tests by a number of researchers which also led to the conclusion that QMC is superior to MC for a variety of high-dimensional finance problems. These include papers by Caflisch and Morokoff (1996),[11] Joy, Boyle and Tan (1996),[12] Ninomiya and Tezuka (1996),[13] Papageorgiou and Traub (1996),[14] Ackworth, Broadie and Glasserman (1997),[15] and Kucherenko and co-authors.[16][17]

Further testing of the CMO[14] was carried out by Anargyros Papageorgiou, who developed an improved version of the FinDer software system. The new results include the following:

- Small number of sample points: For the hardest CMO tranche QMC using the generalized Faure LDS due to S. Tezuka[18] achieves accuracy [math]\displaystyle{ 10^{-2} }[/math] with just 170 points. MC requires 2700 points for the same accuracy. The significance of this is that due to future interest rates and prepayment rates being unknown, financial firms are content with accuracy of [math]\displaystyle{ 10^{-2} }[/math].

- Large number of sample points: The advantage of QMC over MC is further amplified as the sample size and accuracy demands grow. In particular, QMC is 20 to 50 times faster than MC with moderate sample sizes, and can be up to 1000 times faster than MC[14] when high accuracy is desired.

Currently the highest reported dimension for which QMC outperforms MC is 65536.[19] The software is the Sobol' sequence generator SobolSeq65536, which generates Sobol' sequences satisfying Property A for all dimensions and Property A' for the adjacent dimensions. SobolSeq generators are reported[20] to outperform all other known generators in both speed and accuracy.

Theoretical explanations

The results reported so far in this article are empirical. A number of possible theoretical explanations have been advanced. This has been a very rich research area leading to powerful new concepts, but a definitive answer has not been obtained.

A possible explanation of why QMC is good for finance is the following. Consider a tranche of the CMO mentioned earlier. The integral gives expected future cash flows from a basket of 30-year mortgages at 360 monthly intervals. Because of the discounted value of money, variables representing future times are increasingly less important. In a seminal paper I. Sloan and H. Woźniakowski[21] introduced the idea of weighted spaces. In these spaces the dependence on the successive variables can be moderated by weights. If the weights decrease sufficiently rapidly the curse of dimensionality is broken even with a worst case guarantee. This paper led to a great amount of work on the tractability of integration and other problems.[22] A problem is tractable when its complexity is of order [math]\displaystyle{ \epsilon^{-p} }[/math] and [math]\displaystyle{ p }[/math] is independent of the dimension.
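The intuition that later variables matter less can be made concrete with a toy calculation of monthly discount factors over 360 months. The flat 6% annual rate and monthly compounding are assumptions made purely for illustration, and these discount factors are not the weights of the Sloan and Woźniakowski construction; they only show how quickly the influence of later months can decay.

```python
def discount_factors(annual_rate, months):
    """Present value of one unit of cash received after k = 1..months months."""
    monthly = annual_rate / 12
    return [(1 + monthly) ** -k for k in range(1, months + 1)]


if __name__ == "__main__":
    # Assumed flat rate of 6% per year, compounded monthly (illustrative only).
    w = discount_factors(0.06, 360)
    for k in (1, 60, 120, 240, 360):
        print(f"month {k:3d}: discount factor {w[k - 1]:.4f}")
```

Under these assumptions a cash flow in the final month counts for roughly one sixth of a cash flow in the first month, so an error in the corresponding variable is damped accordingly.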

On the other hand, effective dimension was proposed by Caflisch, Morokoff and Owen[23] as an indicator of the difficulty of high-dimensional integration. The purpose was to explain the remarkable success of quasi-Monte Carlo (QMC) in approximating the very-high-dimensional integrals in finance. They argued that the integrands are of low effective dimension and that is why QMC is much faster than Monte Carlo (MC). The impact of the arguments of Caflisch et al.[23] was great. A number of papers deal with the relationship between the error of QMC and the effective dimension.[24][16][17][25]

It is known that QMC fails for certain functions that have high effective dimension.[5] However, low effective dimension is not a necessary condition for QMC to beat MC and for high-dimensional integration to be tractable. In 2005, Tezuka[26] exhibited a class of functions of [math]\displaystyle{ d }[/math] variables, all with maximum effective dimension equal to [math]\displaystyle{ d }[/math]. For these functions QMC is very fast since its convergence rate is of order [math]\displaystyle{ n^{-1} }[/math], where [math]\displaystyle{ n }[/math] is the number of function evaluations.

Isotropic integrals

QMC can also be superior to MC and to other methods for isotropic problems, that is, problems where all variables are equally important. For example, Papageorgiou and Traub[27] reported test results on the model integration problems suggested by the physicist B. D. Keister,[28]

- [math]\displaystyle{ \left(\frac 1{2\pi}\right)^{d/2} \int_{\mathbb R^d}\cos(\|x\| )e^{-\| x\|^2}\,dx, }[/math]

where [math]\displaystyle{ \|\cdot\| }[/math] denotes the Euclidean norm and [math]\displaystyle{ d=25 }[/math]. Keister reports that using a standard numerical method some 220,000 points were needed to obtain a relative error on the order of [math]\displaystyle{ 10^{-2} }[/math]. A QMC calculation using the generalized Faure low discrepancy sequence[18] (QMC-GF) used only 500 points to obtain the same relative error. The same integral was tested for a range of values of [math]\displaystyle{ d }[/math] up to [math]\displaystyle{ d=100 }[/math]. Its error was

- [math]\displaystyle{ c\cdot n^{-1}, }[/math]

with [math]\displaystyle{ c\lt 110 }[/math], where [math]\displaystyle{ n }[/math] is the number of evaluations of [math]\displaystyle{ f }[/math]. This may be compared with the MC method whose error was proportional to [math]\displaystyle{ n^{-1/2} }[/math].
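The Keister integral above can be evaluated with QMC after mapping it to the unit cube. Substituting [math]\displaystyle{ x=z/\sqrt 2 }[/math] and then [math]\displaystyle{ z_j=\Phi^{-1}(u_j) }[/math], where [math]\displaystyle{ \Phi }[/math] is the standard normal distribution function, turns the integral into the average of [math]\displaystyle{ 2^{-d/2}\cos\big(\sqrt{\textstyle\sum_j \Phi^{-1}(u_j)^2/2}\,\big) }[/math] over [math]\displaystyle{ [0,1]^d }[/math]. The sketch below applies this with plain Halton points, which are swapped in here for the generalized Faure sequence used in the reported tests, so it should not be expected to reproduce the published figures. All function names are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist

INV_CDF = NormalDist().inv_cdf  # standard normal quantile function


def first_primes(count):
    """Return the first `count` primes by trial division."""
    primes, candidate = [], 2
    while len(primes) < count:
        if all(candidate % p for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes


def radical_inverse(i, base):
    """Mirror the base-`base` digits of i about the radix point."""
    inv, f = 0.0, 1.0 / base
    while i > 0:
        i, digit = divmod(i, base)
        inv += digit * f
        f /= base
    return inv


def keister_integrand(u):
    """Keister integrand after the change of variables to the unit cube."""
    d = len(u)
    r2 = sum(INV_CDF(t) ** 2 for t in u)
    return 2.0 ** (-d / 2) * math.cos(math.sqrt(r2 / 2))


def qmc_keister(d, n):
    """Average the integrand over the first n Halton points (index from 1)."""
    bases = first_primes(d)
    total = 0.0
    for i in range(1, n + 1):
        total += keister_integrand([radical_inverse(i, b) for b in bases])
    return total / n


def mc_keister(d, n, seed=0):
    """Average the integrand over n pseudo-random points."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        # inv_cdf rejects 0.0, which random() can return (very rarely);
        # replace that value by 0.5 so the call never fails.
        u = [rng.random() or 0.5 for _ in range(d)]
        total += keister_integrand(u)
    return total / n


if __name__ == "__main__":
    # For d = 1 the integral has the closed form exp(-1/4) / sqrt(2).
    exact_1d = math.exp(-0.25) / math.sqrt(2)
    print("d=1 exact:", exact_1d)
    print("d=1 QMC  :", qmc_keister(1, 2048))
    print("d=1 MC   :", mc_keister(1, 2048, seed=1))
    # d = 25 as in the text; no reference value is asserted here.
    print("d=25 QMC :", qmc_keister(25, 500))
    print("d=25 MC  :", mc_keister(25, 500, seed=1))
```

The one-dimensional case serves only as a sanity check of the change of variables, since its exact value is known.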

These are empirical results. In a theoretical investigation Papageorgiou[29] proved that the convergence rate of QMC for a class of [math]\displaystyle{ d }[/math]-dimensional isotropic integrals which includes the integral defined above is of the order

- [math]\displaystyle{ \sqrt{\log n}/n. }[/math]

This is with a worst case guarantee compared to the expected convergence rate of [math]\displaystyle{ n^{-1/2} }[/math] of Monte Carlo and shows the superiority of QMC for this type of integral.

In another theoretical investigation Papageorgiou[30] presented sufficient conditions for fast QMC convergence. The conditions apply to isotropic and non-isotropic problems and, in particular, to a number of problems in computational finance. He presented classes of functions where even in the worst case the convergence rate of QMC is of order

- [math]\displaystyle{ n^{-1+p(\log n)^{-1/2}}, }[/math]

where [math]\displaystyle{ p\ge 0 }[/math] is a constant that depends on the class of functions.

But this is only a sufficient condition and leaves open the major question we pose in the next section.
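The rate [math]\displaystyle{ n^{-1+p(\log n)^{-1/2}} }[/math] above can be rewritten, for the natural logarithm, as [math]\displaystyle{ e^{p\sqrt{\log n}}/n }[/math], since [math]\displaystyle{ n^{p/\sqrt{\log n}}=e^{p\sqrt{\log n}} }[/math]. The numerator grows more slowly than any positive power of [math]\displaystyle{ n }[/math], so the rate approaches [math]\displaystyle{ n^{-1} }[/math] as [math]\displaystyle{ n }[/math] grows. The following short sketch checks the identity numerically; the value [math]\displaystyle{ p=2 }[/math] is an arbitrary assumption, and the natural logarithm is assumed throughout.

```python
import math


def rate_direct(n, p):
    """n^(-1 + p / sqrt(ln n)), evaluated as written (requires n > 1)."""
    return n ** (-1.0 + p / math.sqrt(math.log(n)))


def rate_factored(n, p):
    """The same quantity written as exp(p * sqrt(ln n)) / n."""
    return math.exp(p * math.sqrt(math.log(n))) / n


if __name__ == "__main__":
    p = 2.0  # arbitrary illustrative constant
    for n in (1e3, 1e6, 1e12):
        exponent = -1.0 + p / math.sqrt(math.log(n))
        print(f"n={n:.0e}  exponent={exponent:+.3f}  rate={rate_direct(n, p):.3e}")
```

The printed exponent drifts toward -1 as [math]\displaystyle{ n }[/math] increases, although for small [math]\displaystyle{ n }[/math] and large [math]\displaystyle{ p }[/math] it can still be far from it.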

Open questions

- Characterize for which high-dimensional integration problems QMC is superior to MC.

- Characterize types of financial instruments for which QMC is superior to MC.

Resources

Books

- Bruno Dupire (1998). Monte Carlo: methodologies and applications for pricing and risk management. Risk. ISBN 1-899332-91-X.

- Paul Glasserman (2003). Monte Carlo methods in financial engineering. Springer-Verlag. ISBN 0-387-00451-3.

- Peter Jaeckel (2002). Monte Carlo methods in finance. John Wiley and Sons. ISBN 0-471-49741-X.

- Don L. McLeish (2005). Monte Carlo Simulation & Finance. ISBN 0-471-67778-7.

- Christian P. Robert, George Casella (2004). Monte Carlo Statistical Methods. ISBN 0-387-21239-6. https://archive.org/details/springer_10.1007-978-1-4757-4145-2.

Models

- Spreadsheets available for download, Prof. Marco Dias, PUC-Rio

References

- ↑ Boyle, P. (1977), Options: a Monte Carlo approach, J. Financial Economics, 4, 323-338.

- ↑ 2.0 2.1 Paskov, S. H. and Traub, J. F. (1995), Faster evaluation of financial derivatives, J. Portfolio Management, 22(1), 113-120.

- ↑ Traub, J. F and Werschulz, A. G. (1998), Complexity and Information, Cambridge University Press, Cambridge, UK.

- ↑ 4.0 4.1 Niederreiter, H. (1992), Random Number Generation and Quasi-Monte Carlo Methods, CBMS-NSF Regional Conference Series in Applied Mathematics, SIAM, Philadelphia.

- ↑ 5.0 5.1 Bratley, P., Fox, B. L. and Niederreiter, H. (1992), Implementation and tests of low-discrepancy sequences, ACM Transactions on Modelling and Computer Simulation, Vol. 2, No. 3, 195-213.

- ↑ van Rensburg, E. J. J. and Torrie, G. M. (1993), Estimation of multidimensional integrals: is Monte Carlo the best method? J. Phys. A: Math. Gen., 26(4), 943-953.

- ↑ Woźniakowski, H. (1991), Average case complexity of multivariate integration, Bull. Amer. Math. Soc. (New Ser.), 24(1), 185-194.

- ↑ Cipra, Barry Arthur (1991), Multivariate Integration: It ain't so tough (on average), SIAM NEWS, 28 March.

- ↑ 9.0 9.1 Traub, J. F. and Woźniakowski, H. (1994), Breaking intractability, Scientific American, 270(1), January, 102-107.

- ↑ Paskov, S. H., New methodologies for valuing derivatives, 545-582, in Mathematics of Derivative Securities, S. Pliska and M. Dempster eds., Cambridge University Press, Cambridge.

- ↑ Caflisch, R. E. and Morokoff, W. (1996), Quasi-Monte Carlo computation of a finance problem, 15-30, in Proceedings Workshop on Quasi-Monte Carlo Methods and their Applications, 11 December 1995, K.-T. Fang and F. Hickernell eds., Hong Kong Baptist University.

- ↑ Joy, C., Boyle, P. P. and Tan, K. S. (1996), Quasi-Monte Carlo methods in numerical finance, Management Science, 42(6), 926-938.

- ↑ Ninomiya, S. and Tezuka, S. (1996), Toward real-time pricing of complex financial derivatives, Appl. Math. Finance, 3, 1-20.

- ↑ 14.0 14.1 14.2 Papageorgiou, A. and Traub, J. F. (1996), Beating Monte Carlo, Risk, 9(6), 63-65.

- ↑ Ackworth, P., Broadie, M. and Glasserman, P. (1997), A comparison of some Monte Carlo techniques for option pricing, 1-18, in Monte Carlo and Quasi-Monte Carlo Methods '96, H. Hellekalek, P. Larcher and G. Zinterhof eds., Springer Verlag, New York.

- ↑ 16.0 16.1 Kucherenko S., Shah N. The Importance of being Global. Application of Global Sensitivity Analysis in Monte Carlo option Pricing, Wilmott, 82-91, July 2007. http://www.broda.co.uk/gsa/wilmott_GSA_SK.pdf

- ↑ 17.0 17.1 Bianchetti M., Kucherenko S., Scoleri S., Pricing and Risk Management with High-Dimensional Quasi Monte Carlo and Global Sensitivity Analysis, Wilmott, July, pp. 46-70, 2015, http://www.broda.co.uk/doc/PricingRiskManagement_Sobol.pdf

- ↑ 18.0 18.1 Tezuka, S., Uniform Random Numbers: Theory and Practice, Kluwer, Netherlands.

- ↑ BRODA Ltd. http://www.broda.co.uk

- ↑ Sobol’ I., Asotsky D., Kreinin A., Kucherenko S. (2012), Construction and Comparison of High-Dimensional Sobol’ Generators, Wilmott, Nov, 64-79.

- ↑ Sloan, I. and Woźniakowski, H. (1998), When are quasi-Monte Carlo algorithms efficient for high dimensional integrals?, J. Complexity, 14(1), 1-33.

- ↑ Novak, E. and Woźniakowski, H. (2008), Tractability of multivariate problems, European Mathematical Society, Zurich (forthcoming).

- ↑ 23.0 23.1 Caflisch, R. E., Morokoff, W. and Owen, A. B. (1997), Valuation of mortgage backed securities using Brownian bridges to reduce effective dimension, Journal of Computational Finance, 1, 27-46.

- ↑ Hickernell, F. J. (1998), Lattice rules: how well do they measure up?, in P. Hellekalek and G. Larcher (Eds.), Random and Quasi-Random Point Sets, Springer, 109-166.

- ↑ Wang, X. and Sloan, I. H. (2005), Why are high-dimensional finance problems often of low effective dimension?, SIAM Journal on Scientific Computing, 27(1), 159-183.

- ↑ Tezuka, S. (2005), On the necessity of low-effective dimension, Journal of Complexity, 21, 710-721.

- ↑ Papageorgiou, A. and Traub, J. F. (1997), Faster evaluation of multidimensional integrals, Computers in Physics, 11(6), 574-578.

- ↑ Keister, B. D. (1996), Multidimensional quadrature algorithms, Computers in Physics, 10(20), 119-122.

- ↑ Papageorgiou, A. (2001), Fast convergence of quasi-Monte Carlo for a class of isotropic integrals, Math. Comp., 70, 297-306.

- ↑ Papageorgiou, A. (2003), Sufficient conditions for fast quasi-Monte Carlo convergence, J. Complexity, 19(3), 332-351.
