Generalized distributive law

The generalized distributive law (GDL) is a generalization of the distributive property which gives rise to a general message passing algorithm.[1] It is a synthesis of the work of many authors in the information theory, digital communications, signal processing, statistics, and artificial intelligence communities. The law and algorithm were introduced in a semi-tutorial by Srinivas M. Aji and Robert J. McEliece with the same title.[1]

Introduction

"The distributive law in mathematics is the law relating the operations of multiplication and addition, stated symbolically, ; that is, the monomial factor is distributed, or separately applied, to each term of the binomial factor , resulting in the product " - Britannica[2]

As it can be observed from the definition, application of distributive law to an arithmetic expression reduces the number of operations in it. In the previous example the total number of operations reduced from three (two multiplications and an addition in ) to two (one multiplication and one addition in ). Generalization of distributive law leads to a large family of fast algorithms. This includes the FFT and Viterbi algorithm.

This is explained in a more formal way in the example below:

where and are real-valued functions, and (say)

Here we are "marginalizing out" the independent variables (, , and ) to obtain the result. When we are calculating the computational complexity, we can see that for each pairs of , there are terms due to the triplet which needs to take part in the evaluation of with each step having one addition and one multiplication. Therefore, the total number of computations needed is . Hence the asymptotic complexity of the above function is .

If we apply the distributive law to the RHS of the equation, we get the following:

This implies that can be described as a product where and

Now, when we are calculating the computational complexity, we can see that there are additions in and each and there are multiplications when we are using the product to evaluate . Therefore, the total number of computations needed is . Hence the asymptotic complexity of calculating reduces to from . This shows by an example that applying distributive law reduces the computational complexity which is one of the good features of a "fast algorithm".

History

Some of the problems that used distributive law to solve can be grouped as follows

1. Decoding algorithms

A GDL like algorithm was used by Gallager's for decoding low density parity-check codes. Based on Gallager's work Tanner introduced the Tanner graph and expressed Gallagers work in message passing form. The tanners graph also helped explain the Viterbi algorithm.

It is observed by Forney that Viterbi's maximum likelihood decoding of convolutional codes also used algorithms of GDL-like generality.

2. Forward-backward algorithm

The forward backward algorithm helped as an algorithm for tracking the states in the markov chain. And this also was used the algorithm of GDL like generality

3. Artificial intelligence

The notion of junction trees has been used to solve many problems in AI. Also the concept of bucket elimination used many of the concepts.

The MPF problem

MPF or marginalize a product function is a general computational problem which as special case includes many classical problems such as computation of discrete Hadamard transform, maximum likelihood decoding of a linear code over a memory-less channel, and matrix chain multiplication. The power of the GDL lies in the fact that it applies to situations in which additions and multiplications are generalized. A commutative semiring is a good framework for explaining this behavior. It is defined over a set with operators "" and "" where and are a commutative monoids and the distributive law holds.

Let be variables such that where is a finite set and . Here . If and , let , , , , and

Let where . Suppose a function is defined as , where is a commutative semiring. Also, are named the local domains and as the local kernels.

Now the global kernel is defined as :

Definition of MPF problem: For one or more indices , compute a table of the values of -marginalization of the global kernel , which is the function defined as

Here is the complement of with respect to and the is called the objective function, or the objective function at . It can observed that the computation of the objective function in the obvious way needs operations. This is because there are additions and multiplications needed in the computation of the objective function. The GDL algorithm which is explained in the next section can reduce this computational complexity.

The following is an example of the MPF problem. Let and be variables such that and . Here and . The given functions using these variables are and and we need to calculate and defined as:

Here local domains and local kernels are defined as follows:

| local domains | local kernels |

|---|---|

where is the objective function and is the objective function.

Consider another example where and is a real valued function. Now, we shall consider the MPF problem where the commutative semiring is defined as the set of real numbers with ordinary addition and multiplication and the local domains and local kernels are defined as follows:

| local domains | local kernels |

|---|---|

Now since the global kernel is defined as the product of the local kernels, it is

and the objective function at the local domain is

This is the Hadamard transform of the function . Hence we can see that the computation of Hadamard transform is a special case of the MPF problem. More examples can be demonstrated to prove that the MPF problem forms special cases of many classical problem as explained above whose details can be found at[1]

GDL: an algorithm for solving the MPF problem

If one can find a relationship among the elements of a given set , then one can solve the MPF problem basing on the notion of belief propagation which is a special use of "message passing" technique. The required relationship is that the given set of local domains can be organised into a junction tree. In other words, we create a graph theoretic tree with the elements of as the vertices of the tree , such that for any two arbitrary vertices say and where and there exists an edge between these two vertices, then the intersection of corresponding labels, viz , is a subset of the label on each vertex on the unique path from to .

For example,

Example 1: Consider the following nine local domains:

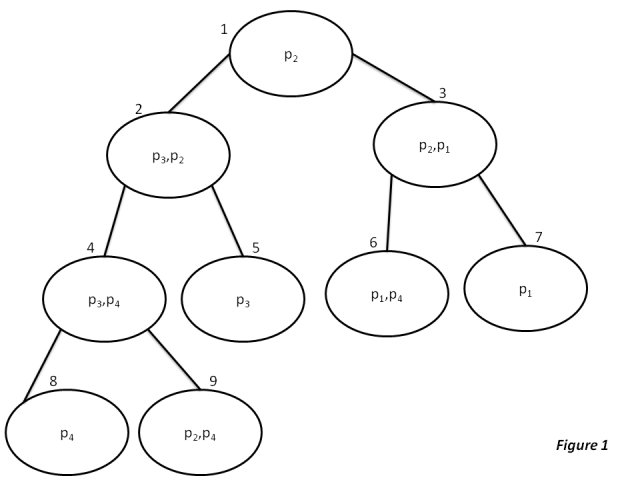

For the above given set of local domains, one can organize them into a junction tree as shown below:

Similarly If another set like the following is given

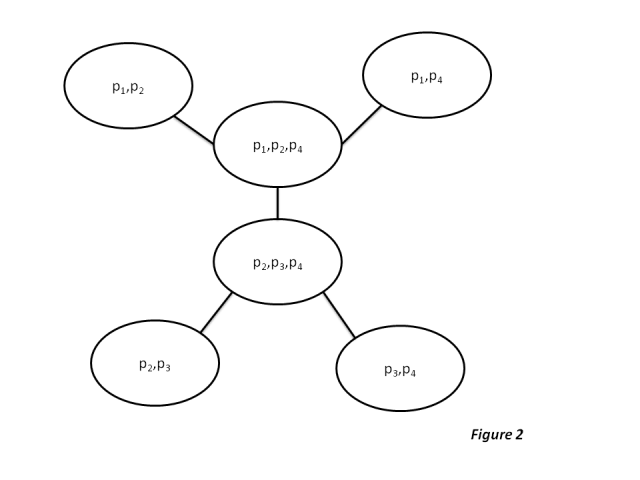

Example 2: Consider the following four local domains:

Then constructing the tree only with these local domains is not possible since this set of values has no common domains which can be placed between any two values of the above set. But however, if add the two dummy domains as shown below then organizing the updated set into a junction tree would be possible and easy too.

5.,

6.,

Similarly for these set of domains, the junction tree looks like shown below:

Generalized distributive law (GDL) algorithm

Input: A set of local domains.

Output: For the given set of domains, possible minimum number of operations that is required to solve the problem is computed.

So, if and are connected by an edge in the junction tree, then a message from to is a set/table of values given by a function: :. To begin with all the functions i.e. for all combinations of and in the given tree, is defined to be identically and when a particular message is update, it follows the equation given below.

- =

where means that is an adjacent vertex to in tree.

Similarly each vertex has a state which is defined as a table containing the values from the function , Just like how messages initialize to 1 identically, state of is defined to be local kernel , but whenever gets updated, it follows the following equation:

Basic working of the algorithm

For the given set of local domains as input, we find out if we can create a junction tree, either by using the set directly or by adding dummy domains to the set first and then creating the junction tree, if construction junction is not possible then algorithm output that there is no way to reduce the number of steps to compute the given equation problem, but once we have junction tree, algorithm will have to schedule messages and compute states, by doing these we can know where steps can be reduced, hence will be discusses this below.

Scheduling of the message passing and the state computation

There are two special cases we are going to talk about here namely Single Vertex Problem in which the objective function is computed at only one vertex and the second one is All Vertices Problem where the goal is to compute the objective function at all vertices.

Lets begin with the single-vertex problem, GDL will start by directing each edge towards the targeted vertex . Here messages are sent only in the direction towards the targeted vertex. Note that all the directed messages are sent only once. The messages are started from the leaf nodes(where the degree is 1) go up towards the target vertex . The message travels from the leaves to its parents and then from there to their parents and so on until it reaches the target vertex . The target vertex will compute its state only when it receives all messages from all its neighbors. Once we have the state, We have got the answer and hence the algorithm terminates.

For Example, let us consider a junction tree constructed from the set of local domains given above i.e. the set from example 1,

Now the Scheduling table for these domains is (where the target vertex is ).

Thus the complexity for Single Vertex GDL can be shown as

arithmetic operations

Where (Note: The explanation for the above equation is explained later in the article )

is the label of .

is the degree of (i.e. number of vertices adjacent to v).

To solve the All-Vertices problem, we can schedule GDL in several ways, some of them are parallel implementation where in each round, every state is updated and every message is computed and transmitted at the same time. In this type of implementation the states and messages will stabilizes after number of rounds that is at most equal to the diameter of the tree. At this point all the all states of the vertices will be equal to the desired objective function.

Another way to schedule GDL for this problem is serial implementation where its similar to the Single vertex problem except that we don't stop the algorithm until all the vertices of a required set have not got all the messages from all their neighbors and have compute their state.

Thus the number of arithmetic this implementation requires is at most arithmetic operations.

Constructing a junction tree

The key to constructing a junction tree lies in the local domain graph , which is a weighted complete graph with vertices i.e. one for each local domain, having the weight of the edge defined by

.

if , then we say is contained in. Denoted by (the weight of a maximal-weight spanning tree of ), which is defined by

where n is the number of elements in that set. For more clarity and details, please refer to these.[3][4]

Scheduling theorem

Let be a junction tree with vertex set and edge set . In this algorithm, the messages are sent in both the direction on any edge, so we can say/regard the edge set E as set of ordered pairs of vertices. For example, from Figure 1 can be defined as follows

NOTE: above gives you all the possible directions that a message can travel in the tree.

The schedule for the GDL is defined as a finite sequence of subsets of. Which is generally represented by {}, Where is the set of messages updated during the round of running the algorithm.

Having defined/seen some notations, we will see want the theorem says, When we are given a schedule , the corresponding message trellis as a finite directed graph with Vertex set of , in which a typical element is denoted by for , Then after completion of the message passing, state at vertex will be the objective defined in

and iff there is a path from to

Computational complexity

Here we try to explain the complexity of solving the MPF problem in terms of the number of mathematical operations required for the calculation. i.e. We compare the number of operations required when calculated using the normal method (Here by normal method we mean by methods that do not use message passing or junction trees in short methods that do not use the concepts of GDL)and the number of operations using the generalized distributive law.

Example: Consider the simplest case where we need to compute the following expression .

To evaluate this expression naively requires two multiplications and one addition. The expression when expressed using the distributive law can be written as a simple optimization that reduces the number of operations to one addition and one multiplication.

Similar to the above explained example we will be expressing the equations in different forms to perform as few operation as possible by applying the GDL.

As explained in the previous sections we solve the problem by using the concept of the junction trees. The optimization obtained by the use of these trees is comparable to the optimization obtained by solving a semi group problem on trees. For example, to find the minimum of a group of numbers we can observe that if we have a tree and the elements are all at the bottom of the tree, then we can compare the minimum of two items in parallel and the resultant minimum will be written to the parent. When this process is propagated up the tree the minimum of the group of elements will be found at the root.

The following is the complexity for solving the junction tree using message passing

We rewrite the formula used earlier to the following form. This is the eqn for a message to be sent from vertex v to w

- ----message equation

Similarly we rewrite the equation for calculating the state of vertex v as follows

We first will analyze for the single-vertex problem and assume the target vertex is and hence we have one edge from to . Suppose we have an edge we calculate the message using the message equation. To calculate requires

additions and

multiplications.

(We represent the as .)

But there will be many possibilities for hence

possibilities for .

Thus the entire message will need

additions and

multiplications

The total number of arithmetic operations required to send a message towards along the edges of tree will be

additions and

multiplications.

Once all the messages have been transmitted the algorithm terminates with the computation of state at The state computation requires more multiplications. Thus number of calculations required to calculate the state is given as below

additions and

multiplications

Thus the grand total of the number of calculations is

- ----

where is an edge and its size is defined by

The formula above gives us the upper bound.

If we define the complexity of the edge as

Therefore, can be written as

We now calculate the edge complexity for the problem defined in Figure 1 as follows

The total complexity will be which is considerably low compared to the direct method. (Here by direct method we mean by methods that do not use message passing. The time taken using the direct method will be the equivalent to calculating message at each node and time to calculate the state of each of the nodes.)

Now we consider the all-vertex problem where the message will have to be sent in both the directions and state must be computed at both the vertexes. This would take but by precomputing we can reduce the number of multiplications to . Here is the degree of the vertex. Ex : If there is a set with numbers. It is possible to compute all the d products of of the with at most multiplications rather than the obvious . We do this by precomputing the quantities and this takes multiplications. Then if denotes the product of all except for we have and so on will need another multiplications making the total

There is not much we can do when it comes to the construction of the junction tree except that we may have many maximal weight spanning tree and we should choose the spanning tree with the least and sometimes this might mean adding a local domain to lower the junction tree complexity.

It may seem that GDL is correct only when the local domains can be expressed as a junction tree. But even in cases where there are cycles and a number of iterations the messages will approximately be equal to the objective function. The experiments on Gallager–Tanner–Wiberg algorithm for low density parity-check codes were supportive of this claim.

References

- ↑ 1.0 1.1 1.2 Aji, S.M.; McEliece, R.J. (Mar 2000). "The generalized distributive law". IEEE Transactions on Information Theory 46 (2): 325–343. doi:10.1109/18.825794. https://authors.library.caltech.edu/1541/1/AJIieeetit00.pdf.

- ↑ "distributive law". Encyclopædia Britannica. Encyclopædia Britannica Online. Encyclopædia Britannica Inc. http://www.britannica.com/EBchecked/topic/166204/distributive-law. Retrieved 1 May 2012.

- ↑ "Archived copy". Archived from the original on 2015-03-19. https://web.archive.org/web/20150319085443/https://ai.stanford.edu/~paskin/gm-short-course/lec3.pdf. Retrieved 2015-03-19. The Junction Tree Algorithms

- ↑ http://www-anw.cs.umass.edu/~cs691t/SS02/lectures/week7.PDF The Junction Tree Algorithm

|