Hodges' estimator

In statistics, Hodges' estimator[1] (or the Hodges–Le Cam estimator[2]), named for Joseph Hodges, is a famous counterexample: an estimator that is "superefficient",[3] i.e. one that attains a smaller asymptotic variance than regular efficient estimators. The existence of such a counterexample motivated the introduction of the notion of regular estimators. Hodges' estimator improves upon a regular estimator at a single point. In general, any superefficient estimator may surpass a regular estimator at most on a set of Lebesgue measure zero.[4]

Although Hodges discovered the estimator, he never published it; the first publication was in the doctoral thesis of Lucien Le Cam.[5]

Construction

Suppose [math]\displaystyle{ \hat{\theta}_n }[/math] is a "common" estimator for some parameter [math]\displaystyle{ \theta }[/math]: it is consistent, and converges to some asymptotic distribution [math]\displaystyle{ L_\theta }[/math] (usually this is a normal distribution with mean zero and variance which may depend on [math]\displaystyle{ \theta }[/math]) at the [math]\displaystyle{ \sqrt{n} }[/math]-rate:

- [math]\displaystyle{ \sqrt{n}(\hat\theta_n - \theta)\ \xrightarrow{d}\ L_\theta\ . }[/math]

Then the Hodges' estimator [math]\displaystyle{ \hat{\theta}_n^H }[/math] is defined as[6]

- [math]\displaystyle{ \hat\theta_n^H = \begin{cases}\hat\theta_n, & \text{if } |\hat\theta_n| \geq n^{-1/4}, \text{ and} \\ 0, & \text{if } |\hat\theta_n| \lt n^{-1/4}.\end{cases} }[/math]
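The thresholding rule can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation; the function name `hodges_estimate` is hypothetical, and it assumes the common estimate and the sample size are already available as plain numbers.

```python
def hodges_estimate(theta_hat: float, n: int) -> float:
    """Hodges' estimator built from a common estimate theta_hat and sample size n.

    Returns theta_hat unchanged when |theta_hat| >= n**(-1/4), and 0.0 when
    theta_hat lies strictly inside (-n**(-1/4), n**(-1/4)).
    """
    if n < 1:
        raise ValueError("sample size n must be a positive integer")
    # The threshold shrinks to 0, but more slowly than the 1/sqrt(n) sampling error.
    threshold = n ** -0.25
    return theta_hat if abs(theta_hat) >= threshold else 0.0


# With n = 16 the threshold is 16**(-1/4) = 0.5.
print(hodges_estimate(0.4, 16))   # 0.0
print(hodges_estimate(0.6, 16))   # 0.6
print(hodges_estimate(-0.7, 16))  # -0.7
```

Only the magnitude of the common estimate matters, so the rule is symmetric around zero, matching the definition above.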

This estimator is equal to [math]\displaystyle{ \hat{\theta}_n }[/math] except when [math]\displaystyle{ \hat{\theta}_n }[/math] falls in the small interval [math]\displaystyle{ (-n^{-1/4},n^{-1/4}) }[/math], in which case it is equal to zero. It is not difficult to see that this estimator is consistent for [math]\displaystyle{ \theta }[/math], and its asymptotic distribution is[7]

- [math]\displaystyle{ \begin{align} & n^\alpha(\hat\theta_n^H - \theta) \ \xrightarrow{d}\ 0, \qquad\text{when } \theta = 0, \\ &\sqrt{n}(\hat\theta_n^H - \theta)\ \xrightarrow{d}\ L_\theta, \quad \text{when } \theta\neq 0, \end{align} }[/math]

for any [math]\displaystyle{ \alpha\in\mathbb{R} }[/math]. Thus this estimator has the same asymptotic distribution as [math]\displaystyle{ \hat{\theta}_n }[/math] for all [math]\displaystyle{ \theta\neq 0 }[/math], whereas for [math]\displaystyle{ \theta=0 }[/math] the rate of convergence becomes arbitrarily fast. This estimator is superefficient, as it surpasses the asymptotic behavior of the efficient estimator [math]\displaystyle{ \hat{\theta}_n }[/math] at the point [math]\displaystyle{ \theta=0 }[/math].
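The key step behind these limits can be written out as a short derivation. It is a sketch that uses only the standing assumptions above: [math]\displaystyle{ \hat\theta_n }[/math] is consistent, and [math]\displaystyle{ \sqrt{n}(\hat\theta_n - \theta) }[/math] converges in distribution, so it is bounded in probability (tight).

```latex
% Case theta = 0: the estimate is set to zero with probability tending to one,
% because sqrt(n) * theta_hat_n is tight while n^{1/4} -> infinity.
\begin{align*}
\Pr_0\big(\hat\theta_n^H \neq 0\big)
  &= \Pr_0\big(|\hat\theta_n| \geq n^{-1/4}\big)
   = \Pr_0\big(|\sqrt{n}\,\hat\theta_n| \geq n^{1/4}\big) \longrightarrow 0 .
\end{align*}
% Hence n^alpha (theta_hat^H_n - 0) equals 0 with probability tending to one, for every alpha.

% Case theta != 0: the truncation is eventually never triggered, by consistency,
% because |theta| - n^{-1/4} -> |theta| > 0.
\begin{align*}
\Pr_\theta\big(\hat\theta_n^H \neq \hat\theta_n\big)
  &\leq \Pr_\theta\big(|\hat\theta_n| < n^{-1/4}\big)
   \leq \Pr_\theta\big(|\hat\theta_n - \theta| > |\theta| - n^{-1/4}\big) \longrightarrow 0 .
\end{align*}
% So sqrt(n)(theta_hat^H_n - theta) has the same limit L_theta as sqrt(n)(theta_hat_n - theta).
```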

It would be wrong to conclude that the Hodges estimator is equivalent to the sample mean except that it is much better when the true mean is 0. For finite [math]\displaystyle{ n }[/math], the truncation can lead to a larger mean squared error than that of the sample mean when [math]\displaystyle{ E[X] }[/math] is close to, but not equal to, 0, as the example in the following section shows.[8]

Le Cam shows that this behaviour is typical: superefficiency at the point θ implies the existence of a sequence [math]\displaystyle{ \theta_n \rightarrow \theta }[/math] such that [math]\displaystyle{ \liminf_{n\to\infty} E_{\theta_n} \ell\big(\sqrt{n}(\hat \theta_n - \theta_n)\big) }[/math] is strictly larger than the Cramér–Rao bound, where [math]\displaystyle{ \ell }[/math] is a loss function and the expectation is taken under the parameter value [math]\displaystyle{ \theta_n }[/math]. In the extreme case where the asymptotic risk at θ is zero, the [math]\displaystyle{ \liminf }[/math] is even infinite for some sequence [math]\displaystyle{ \theta_n \rightarrow \theta }[/math].[9]
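The divergence along a moving sequence can be seen numerically in the normal-mean setting of the example below, where the asymptotic risk of Hodges' estimator at θ = 0 is zero. The following Monte Carlo sketch rests on assumptions chosen for convenience, not taken from the sources: squared-error loss ℓ(u) = u², the hypothetical sequence θ_n = n^(-1/4) (sitting exactly at the truncation threshold), and the sample mean drawn directly from its exact distribution N(θ_n, 1/n) rather than by averaging n simulated observations. The function name `scaled_risk_mc` is hypothetical.

```python
import math
import random


def scaled_risk_mc(n: int, theta: float, reps: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of n * E_theta[(hodges - theta)^2] in the N(theta, 1) model.

    Assumes squared-error loss; the sample mean is drawn from N(theta, 1/n).
    """
    rng = random.Random(seed)
    threshold = n ** -0.25
    total = 0.0
    for _ in range(reps):
        xbar = rng.gauss(theta, 1.0 / math.sqrt(n))
        hodges = xbar if abs(xbar) >= threshold else 0.0
        total += n * (hodges - theta) ** 2
    return total / reps


for n in (100, 10_000, 1_000_000):
    theta_n = n ** -0.25  # hypothetical sequence theta_n -> 0
    print(n, round(scaled_risk_mc(n, theta_n), 1))
```

With θ_n at the threshold, the estimate is zeroed with probability about 1/2, and each zeroing costs n·θ_n² = √n, so the printed values should grow roughly like 0.5 + √n/2 (about 5.5, 50 and 500), consistent with an infinite liminf. The exact figures depend on the random seed.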

In general, superefficiency can be attained only on a subset of the parameter space [math]\displaystyle{ \Theta }[/math] of Lebesgue measure zero.[10]

Example

Suppose [math]\displaystyle{ x_1, \ldots, x_n }[/math] is an independent and identically distributed (IID) random sample from the normal distribution N(θ, 1) with unknown mean but known variance. Then the common estimator for the population mean θ is the arithmetic mean of all observations, [math]\displaystyle{ \bar{x} }[/math]. The corresponding Hodges' estimator is [math]\displaystyle{ \hat\theta^H_n = \bar{x}\cdot\mathbf{1}\{|\bar x| \geq n^{-1/4}\} }[/math], where 1{...} denotes the indicator function.
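In this normal model, the probability that Hodges' estimator returns exactly zero has a closed form, because the sample mean is distributed as N(θ, 1/n): P_θ(|x̄| < n^(-1/4)) = Φ(√n(n^(-1/4) − θ)) − Φ(√n(−n^(-1/4) − θ)), where Φ is the standard normal CDF. The Python sketch below evaluates this expression using only the standard library (`math.erf`); the function names are hypothetical.

```python
import math


def std_normal_cdf(x: float) -> float:
    """Standard normal CDF Phi(x), written via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_hodges_zero(theta: float, n: int) -> float:
    """P_theta(|xbar| < n**(-1/4)) when xbar ~ N(theta, 1/n)."""
    c = n ** -0.25
    root_n = math.sqrt(n)
    upper = root_n * (c - theta)   # standardized upper edge of the zeroing interval
    lower = root_n * (-c - theta)  # standardized lower edge of the zeroing interval
    return std_normal_cdf(upper) - std_normal_cdf(lower)


for theta in (0.0, 0.05, 0.5):
    print(theta, [round(prob_hodges_zero(theta, n), 3) for n in (10, 1_000, 100_000)])
```

For θ = 0 the probability tends to 1. For θ = 0.05 it is still above 0.97 at n = 100,000, because the threshold n^(-1/4) drops below 0.05 only once n exceeds 20⁴ = 160,000. This slow, non-uniform convergence near zero is the source of the finite-sample penalty discussed above.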

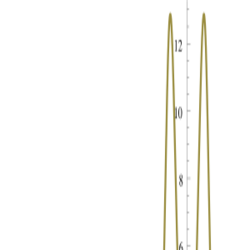

The mean squared error (scaled by n) of the regular estimator [math]\displaystyle{ \bar{x} }[/math] is constant and equal to 1 for all θ. By contrast, the scaled mean squared error of Hodges' estimator [math]\displaystyle{ \hat\theta_n^H }[/math] behaves erratically in the vicinity of zero, and its maximum near zero grows without bound as n → ∞. This demonstrates that Hodges' estimator is not regular, and that its asymptotic properties are not adequately described by limits of the form (θ fixed, n → ∞).
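The behaviour described above can be checked against a closed-form expression, derived here for illustration rather than taken from the cited sources. Write Z = √n(x̄ − θ) ~ N(0, 1), c = n^(-1/4), a = √n(−c − θ) and b = √n(c − θ). The estimator is zero exactly when a < Z < b, and ∫ₐᵇ z²φ(z) dz = Φ(b) − Φ(a) − (bφ(b) − aφ(a)), so

n·E_θ[(θ̂ᴴ_n − θ)²] = 1 − (Φ(b) − Φ(a)) + (bφ(b) − aφ(a)) + nθ²(Φ(b) − Φ(a)).

The Python sketch below evaluates this formula on a grid of θ values; the function names and the sample sizes are hypothetical choices for illustration.

```python
import math


def std_normal_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def std_normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def scaled_mse_hodges(theta: float, n: int) -> float:
    """Exact n * E_theta[(hodges - theta)^2] when xbar ~ N(theta, 1/n)."""
    c = n ** -0.25
    root_n = math.sqrt(n)
    a = root_n * (-c - theta)  # standardized lower edge of the zeroing interval
    b = root_n * (c - theta)   # standardized upper edge of the zeroing interval
    p_zero = std_normal_cdf(b) - std_normal_cdf(a)  # P(estimate == 0)
    edge = b * std_normal_pdf(b) - a * std_normal_pdf(a)
    # E[Z^2; estimate kept] + n * theta^2 * P(estimate == 0)
    return (1.0 - p_zero + edge) + n * theta ** 2 * p_zero


# For comparison, the sample mean has n * Var(xbar) = 1 at every theta.
grid = [i / 100 for i in range(0, 151)]  # theta in [0, 1.5]
for n in (5, 50, 500):
    risks = [scaled_mse_hodges(t, n) for t in grid]
    worst = max(risks)
    print(f"n={n:4d}  risk at 0: {risks[0]:.4f}  "
          f"max {worst:.2f} at theta={grid[risks.index(worst)]:.2f}")
```

The risk at θ = 0 shrinks toward zero while the peak, located near θ ≈ n^(-1/4), rises with n, which is the pattern the paragraph above describes.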


Notes

- (Vaart 1998)

- (Kale 1985)

- (Bickel 1998)

- (Vaart 1998)

- Le Cam, Lucien M. (1953). On some asymptotic properties of maximum likelihood estimates and related Bayes' estimates. University of California Publications in Statistics, vol. 1, no. 11. Berkeley: University of California Press. https://catalog.hathitrust.org/Record/005761137.

- (Stoica & Ottersten 1996)

- (Vaart 1998)

- (Vaart 1998)

- van der Vaart, A. W.; Wellner, J. A. (1996). Weak Convergence and Empirical Processes. Springer Series in Statistics. Springer New York. https://doi.org/10.1007/978-1-4757-2545-2

- (Vaart 1998)

- (Vaart 1998)

References

- Bickel, Peter J.; Klaassen, Chris A.J.; Ritov, Ya’acov; Wellner, Jon A. (1998). Efficient and adaptive estimation for semiparametric models. Springer: New York. ISBN 0-387-98473-9.

- Kale, B.K. (1985). "A note on the super efficient estimator". Journal of Statistical Planning and Inference 12: 259–263. doi:10.1016/0378-3758(85)90074-6.

- Stoica, P.; Ottersten, B. (1996). "The evil of superefficiency". Signal Processing 55: 133–136. doi:10.1016/S0165-1684(96)00159-4.

- Vaart, A. W. van der (1998). Asymptotic statistics. Cambridge University Press. ISBN 978-0-521-78450-4.
