Look-ahead (backtracking)

In backtracking algorithms, look-ahead is the generic term for a subprocedure that attempts to foresee the effects of choosing a branching variable to evaluate, or of choosing one of its values. The two main aims of look-ahead are to choose the variable to evaluate next and to choose the order of the values to assign to it.

Constraint satisfaction

In a general constraint satisfaction problem, every variable can take a value in a domain. A backtracking algorithm therefore iteratively chooses a variable and tests each of its possible values; for each value, the algorithm is run recursively. Look-ahead is used to check the effects of choosing a given variable to evaluate, or to decide the order of the values to give to it.
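The basic scheme described above can be sketched as follows. This is only an illustrative sketch without any look-ahead: the function name `backtrack`, the dictionary representation of domains, and the `consistent` callback are assumptions made for the example, not part of any particular solver.

```python
from typing import Callable, Dict, List, Optional

Assignment = Dict[str, int]


def backtrack(
    domains: Dict[str, List[int]],
    consistent: Callable[[Assignment], bool],
    assignment: Optional[Assignment] = None,
) -> Optional[Assignment]:
    """Return one complete consistent assignment, or None if none exists."""
    assignment = {} if assignment is None else assignment
    if len(assignment) == len(domains):
        return dict(assignment)
    # Choose the next variable (here simply the first unassigned one).
    var = next(v for v in domains if v not in assignment)
    for value in domains[var]:  # test each possible value in turn
        assignment[var] = value
        if consistent(assignment):
            result = backtrack(domains, consistent, assignment)
            if result is not None:
                return result
        del assignment[var]  # undo the choice and try the next value
    return None


def all_different(a: Assignment) -> bool:
    """Example constraint (assumed): no two variables share a value."""
    return len(set(a.values())) == len(a)


solution = backtrack({"x": [1, 2], "y": [1, 2], "z": [1, 2, 3]}, all_different)
```

Look-ahead would replace the two naive choices in this sketch: which unassigned variable to pick, and in which order to try its values.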

Look ahead techniques

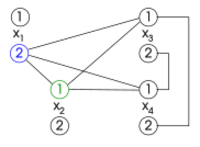

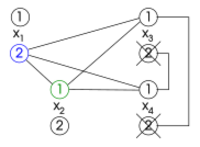

The simplest technique for evaluating the effect of a specific assignment to a variable is called forward checking.[1] Given the current partial solution and a candidate assignment to evaluate, it checks whether every other unassigned variable can still take a consistent value. In other words, it first extends the current partial solution with the tentative value for the considered variable; it then considers every other variable [math]\displaystyle{ x_k }[/math] that is still unassigned, and checks whether there exists a value of [math]\displaystyle{ x_k }[/math] that is consistent with the extended partial solution. More generally, forward checking determines the values of [math]\displaystyle{ x_k }[/math] that are consistent with the extended assignment.
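A minimal sketch of forward checking for binary constraints is given below. The representation is an assumption made for illustration: domains are sets of integers, and the hypothetical callback `compatible(x, a, y, b)` reports whether `x = a` and `y = b` may hold together (returning `True` when the two variables are unconstrained).

```python
from typing import Callable, Dict, Optional, Set

Domains = Dict[str, Set[int]]
# Hypothetical binary-constraint check: is x=a compatible with y=b?
Compatible = Callable[[str, int, str, int], bool]


def forward_check(
    domains: Domains,
    assigned: Dict[str, int],
    var: str,
    value: int,
    compatible: Compatible,
) -> Optional[Domains]:
    """Tentatively set var=value and return the pruned domains of the
    unassigned variables, or None if one of them has no consistent value."""
    extended = {**assigned, var: value}
    pruned: Domains = {}
    for other, dom in domains.items():
        if other in extended:
            continue
        # Keep the values of `other` consistent with the extended partial solution.
        keep = {
            b for b in dom
            if all(compatible(x, a, other, b) for x, a in extended.items())
        }
        if not keep:
            return None  # dead end: `other` cannot take any value
        pruned[other] = keep
    return pruned


def different(x: str, a: int, y: str, b: int) -> bool:
    """Example constraint (assumed): all variables must differ."""
    return a != b


doms = {"x": {1, 2}, "y": {1, 2}, "z": {1}}
dead_end = forward_check(doms, {}, "x", 1, different)   # z loses its only value
survives = forward_check(doms, {}, "x", 2, different)   # y and z both keep {1}
```

In the second call, `y` and `z` are each left with the single value 1, which they cannot both take. Forward checking does not notice this, because it never compares two unassigned variables with each other.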

A look-ahead technique that may be more time-consuming but may produce better results is based on arc consistency. Namely, given a partial solution extended with a value for a new variable, it enforces arc consistency for all unassigned variables. In other words, for every unassigned variable, the values that cannot be consistently extended to another variable are removed. The difference between forward checking and arc consistency is that the former checks only a single unassigned variable at a time for consistency, while the latter also checks pairs of unassigned variables for mutual consistency. The most common way of using look-ahead for solving constraint satisfaction problems is the maintaining arc consistency (MAC) algorithm.[2]
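The pruning step can be sketched with a queue-based procedure in the style of the well-known AC-3 algorithm. This is an illustrative sketch, not the MAC algorithm of the cited paper: the names are hypothetical, a constraint is assumed between every pair of variables (with `compatible` returning `True` for unconstrained pairs), and an assigned variable is modelled as one whose domain holds only its assigned value.

```python
from collections import deque
from typing import Callable, Dict, Set

Domains = Dict[str, Set[int]]
Compatible = Callable[[str, int, str, int], bool]


def enforce_arc_consistency(domains: Domains, compatible: Compatible) -> bool:
    """Prune `domains` in place until every arc is consistent.
    Returns False as soon as some domain becomes empty."""
    queue = deque((x, y) for x in domains for y in domains if x != y)
    while queue:
        x, y = queue.popleft()
        # Values of x that have no supporting value in the domain of y.
        unsupported = {
            a for a in domains[x]
            if not any(compatible(x, a, y, b) for b in domains[y])
        }
        if unsupported:
            domains[x] -= unsupported
            if not domains[x]:
                return False
            # The domain of x shrank, so arcs pointing at x must be rechecked.
            queue.extend((z, x) for z in domains if z != x and z != y)
    return True


def different(x: str, a: int, y: str, b: int) -> bool:
    """Example constraint (assumed): all variables must differ."""
    return a != b


# x has tentatively been set to 2; y and z are still unassigned.
after_choice = {"x": {2}, "y": {1, 2}, "z": {1}}
still_possible = enforce_arc_consistency(after_choice, different)
```

Here `still_possible` is `False`: once 2 is removed from the domain of `y`, the pair `y`, `z` has no mutually consistent values, which is exactly the kind of conflict between two unassigned variables that arc consistency detects.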

Two other methods involving arc consistency are full and partial look ahead. They enforce arc consistency, but not for every pair of variables. In particular, full look ahead considers every pair of unassigned variables [math]\displaystyle{ x_i,x_j }[/math], and enforces arc consistency between them. This is different from enforcing global arc consistency, which may require a pair of variables to be reconsidered more than once. Instead, once full look ahead has enforced arc consistency between a pair of variables, the pair is not considered again. Partial look ahead is similar, but a given order of variables is considered, and arc consistency is only enforced once for every pair [math]\displaystyle{ x_i,x_j }[/math] with [math]\displaystyle{ i \lt j }[/math].
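One possible reading of the single-pass behaviour described above is sketched below. The function `look_ahead_pass`, its `partial` flag, and the direction in which each pair is revised are assumptions made for illustration; published formulations differ in such details.

```python
from typing import Callable, Dict, List, Set

Domains = Dict[str, Set[int]]
Compatible = Callable[[str, int, str, int], bool]


def look_ahead_pass(
    domains: Domains,
    order: List[str],
    compatible: Compatible,
    partial: bool = False,
) -> bool:
    """Revise each pair of unassigned variables exactly once, in place.
    Full look ahead visits every ordered pair (x_i, x_j) with i != j;
    partial look ahead visits only the pairs with i < j.
    Returns False if some domain becomes empty."""
    for i, x in enumerate(order):
        for j, y in enumerate(order):
            if i == j or (partial and j < i):
                continue
            # Keep only the values of x supported by some value of y.
            domains[x] = {
                a for a in domains[x]
                if any(compatible(x, a, y, b) for b in domains[y])
            }
            if not domains[x]:
                return False
    return True


def different(x: str, a: int, y: str, b: int) -> bool:
    """Example constraint (assumed): all variables must differ."""
    return a != b


full = {"x": {1, 2}, "y": {1, 2}, "z": {1}}
full_ok = look_ahead_pass(full, ["x", "y", "z"], different)

part = {"x": {1, 2}, "y": {1, 2}, "z": {1}}
part_ok = look_ahead_pass(part, ["x", "y", "z"], different, partial=True)
```

On this unsatisfiable example the full pass empties the domain of `y` and reports failure, while the partial pass stops with `x` and `y` both reduced to the value 2 and does not notice the conflict. This illustrates the trade-off between pruning power and the number of pairs examined.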

Look ahead based on arc consistency can also be extended to work with path consistency and general i-consistency or relational arc consistency.

Use of look ahead

The results of look ahead are used to decide the next variable to evaluate and the order of values to give to this variable. In particular, for any unassigned variable and value, look-ahead estimates the effects of setting that variable to that value.

The choice of the next variable and the choice of the next value to give it are complementary, in that the value is typically chosen in such a way that a solution (if any) is found as quickly as possible, while the next variable is typically chosen in such a way that unsatisfiability (if the current partial solution is unsatisfiable) is proven as quickly as possible.

The choice of the next variable to evaluate is particularly important, as it may produce exponential differences in running time. In order to prove unsatisfiability as quickly as possible, variables leaving few alternatives after being assigned are preferred. This idea can be implemented by checking only satisfiability or unsatisfiability of variable/value pairs. In particular, the next variable chosen is the one with the fewest values consistent with the current partial solution. In turn, consistency can be evaluated by simply checking partial consistency, or by using any of the look-ahead techniques discussed above.
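The selection rule can be sketched as follows, using the simplest option of checking each value against the current partial solution only. The function name `select_variable` and the `compatible` callback are hypothetical, and ties are resolved by whichever variable `min` encounters first.

```python
from typing import Callable, Dict, Set

Domains = Dict[str, Set[int]]
Compatible = Callable[[str, int, str, int], bool]


def select_variable(
    domains: Domains,
    assigned: Dict[str, int],
    compatible: Compatible,
) -> str:
    """Return the unassigned variable with the fewest values that are
    consistent with the current partial solution."""
    def remaining(var: str) -> int:
        return sum(
            1 for b in domains[var]
            if all(compatible(x, a, var, b) for x, a in assigned.items())
        )

    unassigned = [v for v in domains if v not in assigned]
    return min(unassigned, key=remaining)


def different(x: str, a: int, y: str, b: int) -> bool:
    """Example constraint (assumed): all variables must differ."""
    return a != b


doms = {"x": {1, 2, 3}, "y": {1, 2}, "z": {1, 2, 3}}
chosen = select_variable(doms, {"x": 1}, different)
```

With `x = 1` already assigned, `y` has one consistent value left and `z` has two, so `y` is selected.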

The following are three methods for ordering the values to tentatively assign to a variable:

- min-conflicts: the preferred values are those removing the fewest values in total from the domains of unassigned variables, as evaluated by look ahead;

- max-domain-size: the preferred values are those maximising the number of values in the smallest domain they produce for the unassigned variables, as evaluated by look ahead;

- estimate solutions: the preferred values are those producing the maximal number of solutions, as evaluated by look ahead making the assumption that all values left in the domains of unassigned variables are consistent with each other; in other words, the preference for a value is obtained by multiplying the size of all domains resulting from look ahead.
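The three orderings above can be sketched as scoring functions applied to the result of a look-ahead step. In this sketch the look ahead is a simple forward-checking style pruning, `domains` is assumed to contain only the variables that are still unassigned, and all names are hypothetical.

```python
import math
from typing import Callable, Dict, List, Set

Domains = Dict[str, Set[int]]
Compatible = Callable[[str, int, str, int], bool]
Score = Callable[[Domains, Domains], int]


def look_ahead(domains: Domains, var: str, value: int, compatible: Compatible) -> Domains:
    """Domains of the other variables after tentatively setting var=value."""
    return {
        y: {b for b in dom if compatible(var, value, y, b)}
        for y, dom in domains.items()
        if y != var
    }


def min_conflicts(domains: Domains, pruned: Domains) -> int:
    # Fewer removed values is better, so the count is negated.
    return -sum(len(domains[y]) - len(pruned[y]) for y in pruned)


def max_domain_size(domains: Domains, pruned: Domains) -> int:
    # Size of the smallest domain left after look ahead.
    return min((len(dom) for dom in pruned.values()), default=0)


def estimate_solutions(domains: Domains, pruned: Domains) -> int:
    # Product of the remaining domain sizes.
    return math.prod(len(dom) for dom in pruned.values())


def order_values(domains: Domains, var: str, compatible: Compatible, score: Score) -> List[int]:
    """Values of `var`, most preferred first; ties are broken by value."""
    def key(value: int):
        pruned = look_ahead(domains, var, value, compatible)
        return (-score(domains, pruned), value)

    return sorted(domains[var], key=key)


def different(x: str, a: int, y: str, b: int) -> bool:
    """Example constraint (assumed): all variables must differ."""
    return a != b


doms = {"x": {1, 2, 3}, "y": {1, 2}, "z": {1, 2, 3}}
by_conflicts = order_values(doms, "x", different, min_conflicts)
```

In this small example all three scores prefer the value 3 for `x`, since it removes only one value from the other domains and leaves both of them with two values. On larger problems the three orderings can disagree.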

Experiments have shown these techniques to be useful for large problems, especially min-conflicts.[citation needed]

Randomization is also sometimes used for choosing a variable or value. For example, if two variables are equally preferred according to some measure, the choice between them can be made at random.
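Random tie-breaking can be sketched as below. The helper `choose_with_random_ties` and its parameters are hypothetical, and a lower score is assumed to mean a more preferred candidate.

```python
import random
from typing import Callable, List, Optional


def choose_with_random_ties(
    candidates: List[str],
    score: Callable[[str], int],
    rng: Optional[random.Random] = None,
) -> str:
    """Return a candidate with the lowest score, picking uniformly at
    random among the candidates that share that score."""
    rng = rng or random.Random()
    best = min(score(c) for c in candidates)
    tied = [c for c in candidates if score(c) == best]
    return rng.choice(tied)


# Example: x and y both have two values left, z has three.
sizes = {"x": 2, "y": 2, "z": 3}
picked = choose_with_random_ties(list(sizes), sizes.__getitem__, random.Random(0))
```

Passing a seeded `random.Random` instance keeps a run reproducible while still avoiding a fixed bias toward the first candidate.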

References

- [1] R.M. Haralick and G.L. Elliott (1980), "Increasing tree search efficiency for constraint satisfaction problems". Artificial Intelligence, 14, pp. 263–313.

- [2] Sabin, Daniel and Eugene C. Freuder (1994), "Contradicting Conventional Wisdom in Constraint Satisfaction". Principles and Practice of Constraint Programming, pp. 10–20.

- Dechter, Rina (2003). Constraint Processing. Morgan Kaufmann. http://www.ics.uci.edu/~dechter/books/index.html. ISBN:1-55860-890-7

- Ouyang, Ming (1998). "How Good Are Branching Rules in DPLL?". Discrete Applied Mathematics 89 (1–3): 281–286. doi:10.1016/S0166-218X(98)00045-6.
