Master theorem (analysis of algorithms)

In the analysis of algorithms, the master theorem for divide-and-conquer recurrences provides an asymptotic analysis for many recurrence relations that occur in the analysis of divide-and-conquer algorithms. The approach was first presented by Jon Bentley, Dorothea Blostein (née Haken), and James B. Saxe in 1980, where it was described as a "unifying method" for solving such recurrences.[1] The name "master theorem" was popularized by the widely used algorithms textbook Introduction to Algorithms by Cormen, Leiserson, Rivest, and Stein.

Not all recurrence relations can be solved by this theorem; its generalizations include the Akra–Bazzi method.

Introduction

Consider a problem that can be solved using a recursive algorithm such as the following:

    procedure p(input x of size n):
        if n < some constant k:
            Solve x directly without recursion
        else:
            Create a subproblems of x, each having size n/b
            Call procedure p recursively on each subproblem
            Combine the results from the subproblems

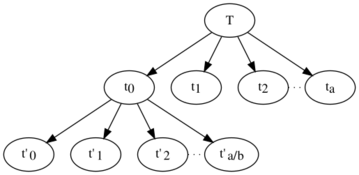

The above algorithm divides the problem into a number of subproblems recursively, each subproblem being of size n/b. Its solution tree has a node for each recursive call, with the children of that node being the calls made from that call. The leaves of the tree are the base cases of the recursion, the subproblems (of size less than k) that do not recurse. In the above example, each non-leaf node has [math]\displaystyle{ a }[/math] child nodes. Each node does an amount of work that depends on the size n of the subproblem passed to that instance of the recursive call and is given by [math]\displaystyle{ f(n) }[/math]. The total amount of work done by the entire algorithm is the sum of the work performed by all the nodes in the tree.

The runtime of an algorithm such as the p above on an input of size n, usually denoted [math]\displaystyle{ T(n) }[/math], can be expressed by the recurrence relation

- [math]\displaystyle{ T(n) = a \; T\left(\frac{n}{b}\right) + f(n), }[/math]

where [math]\displaystyle{ f(n) }[/math] is the time to create the subproblems and combine their results in the above procedure. This equation can be successively substituted into itself and expanded to obtain an expression for the total amount of work done.[2] The master theorem allows many recurrence relations of this form to be converted to Θ-notation directly, without expanding the recurrence relation.
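The substitution described above can be sketched numerically by summing the work level by level in the solution tree. This is only an illustrative sketch: the function name `level_work`, the restriction to n being an exact power of b, and the unit-cost base case at size 1 are assumptions made here, not part of the theorem.

```python
def level_work(n, a, b, f, base_cost=1):
    """Work per level of the recursion tree for T(n) = a*T(n/b) + f(n).

    Assumes n is an exact power of b and that recursion stops at
    subproblems of size 1, each costing base_cost (illustrative choices).
    """
    levels = []
    size, nodes = n, 1
    while size > 1:
        levels.append(nodes * f(size))  # a^i nodes, each doing f(n / b^i) work
        size //= b
        nodes *= a
    leaves = nodes * base_cost          # a^(log_b n) leaves
    return levels, leaves


# T(n) = 2 T(n/2) + 10 n on an input of size 8
levels, leaves = level_work(8, a=2, b=2, f=lambda m: 10 * m)
print(levels, leaves, sum(levels) + leaves)  # [80, 80, 80] 8 248
```

Comparing the entries of `levels` with `leaves` shows at a glance whether the work is concentrated at the root, at the leaves, or spread evenly, which is the distinction the three cases of the theorem formalize.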

Generic form

When it applies, the master theorem yields asymptotically tight bounds for recurrences arising from divide-and-conquer algorithms that partition an input into smaller subproblems of equal sizes, solve the subproblems recursively, and then combine the subproblem solutions to give a solution to the original problem. The time for such an algorithm can be expressed by adding the work that it performs at the top level of its recursion (to divide the problem into subproblems and then combine the subproblem solutions) to the time spent in the recursive calls of the algorithm. If [math]\displaystyle{ T(n) }[/math] denotes the total time for the algorithm on an input of size [math]\displaystyle{ n }[/math], and [math]\displaystyle{ f(n) }[/math] denotes the amount of time taken at the top level of the recurrence, then the time can be expressed by a recurrence relation that takes the form:

- [math]\displaystyle{ T(n) = a \; T\!\left(\frac{n}{b}\right) + f(n) }[/math]

Here [math]\displaystyle{ n }[/math] is the size of an input problem, [math]\displaystyle{ a }[/math] is the number of subproblems in the recursion, and [math]\displaystyle{ b }[/math] is the factor by which the subproblem size is reduced in each recursive call (b>1). Crucially, [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] must not depend on [math]\displaystyle{ n }[/math]. The theorem below also assumes that, as a base case for the recurrence, [math]\displaystyle{ T(n)=\Theta(1) }[/math] when [math]\displaystyle{ n }[/math] is less than some bound [math]\displaystyle{ \kappa \gt 0 }[/math], the smallest input size that will lead to a recursive call.

Recurrences of this form often satisfy one of the three following regimes, based on how the work to split/recombine the problem [math]\displaystyle{ f(n) }[/math] relates to the critical exponent [math]\displaystyle{ c_{\operatorname{crit}}=\log_b a }[/math]. (The table below uses standard big O notation).

- [math]\displaystyle{ c_{\operatorname{crit}} = \log_b a = \log(\#\text{subproblems})/\log(\text{relative subproblem size}) }[/math]
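As a small illustration, the critical exponent can be computed directly from a and b. The helper name `critical_exponent` is hypothetical, and the argument check simply mirrors the assumptions stated in the text (at least one subproblem, b greater than 1).

```python
import math


def critical_exponent(a, b):
    """Return c_crit = log_b(a) for a subproblems, each of relative size 1/b."""
    if a < 1 or b <= 1:
        raise ValueError("expected a >= 1 and b > 1")
    return math.log(a) / math.log(b)


print(critical_exponent(8, 2))  # eight half-size subproblems: about 3
print(critical_exponent(3, 9))  # b = a**2, as in the table's examples: about 0.5
```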

| Case | Description | Condition on [math]\displaystyle{ f(n) }[/math] in relation to [math]\displaystyle{ c_{\operatorname{crit}} }[/math], i.e. [math]\displaystyle{ \log_b a }[/math] | Master Theorem bound | Notational examples |
|---|---|---|---|---|
| 1 | Work to split/recombine a problem is dwarfed by subproblems, i.e. the recursion tree is leaf-heavy. | When [math]\displaystyle{ f(n) = O(n^{c}) }[/math] where [math]\displaystyle{ c\lt c_{\operatorname{crit}} }[/math] (upper-bounded by a lesser-exponent polynomial) | ... then [math]\displaystyle{ T(n)= \Theta\left( n^{c_{\operatorname{crit}}} \right) }[/math] (The splitting term does not appear; the recursive tree structure dominates.) | If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = O(n^{1/2-\epsilon}) }[/math], then [math]\displaystyle{ T(n) = \Theta(n^{1/2}) }[/math]. |
| 2 | Work to split/recombine a problem is comparable to subproblems. | When [math]\displaystyle{ f(n) = \Theta(n^{c_{\operatorname{crit}}}\log^{k} n) }[/math] for a [math]\displaystyle{ k \ge 0 }[/math] (rangebound by the critical-exponent polynomial, times zero or more optional [math]\displaystyle{ \log }[/math]s) | ... then [math]\displaystyle{ T(n)= \Theta\left( n^{c_{\operatorname{crit}}} \log^{k+1} n \right) }[/math] (The bound is the splitting term, where the log is augmented by a single power.) | If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = \Theta(n^{1/2}) }[/math], then [math]\displaystyle{ T(n) = \Theta(n^{1/2} \log n) }[/math]. If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = \Theta(n^{1/2} \log n) }[/math], then [math]\displaystyle{ T(n) = \Theta(n^{1/2} \log^{2} n) }[/math]. |
| 3 | Work to split/recombine a problem dominates subproblems, i.e. the recursion tree is root-heavy. | When [math]\displaystyle{ f(n) = \Omega(n^{c}) }[/math] where [math]\displaystyle{ c\gt c_{\operatorname{crit}} }[/math] (lower-bounded by a greater-exponent polynomial) | ... this alone does not necessarily yield anything. If, in addition, [math]\displaystyle{ a f\left(\frac{n}{b}\right) \le k f(n) }[/math] for some constant [math]\displaystyle{ k \lt 1 }[/math] and all sufficiently large [math]\displaystyle{ n }[/math] (often called the regularity condition), then the total is dominated by the splitting term [math]\displaystyle{ f(n) }[/math]: [math]\displaystyle{ T(n) = \Theta\left(f(n)\right) }[/math] | If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = \Omega(n^{1/2+\epsilon}) }[/math] and the regularity condition holds, then [math]\displaystyle{ T(n) = \Theta(f(n)) }[/math]. |

A useful extension of Case 2 handles all values of [math]\displaystyle{ k }[/math]:[3]

| Case | Condition on [math]\displaystyle{ f(n) }[/math] in relation to [math]\displaystyle{ c_{\operatorname{crit}} }[/math], i.e. [math]\displaystyle{ \log_b a }[/math] | Master Theorem bound | Notational examples |
|---|---|---|---|
| 2a | When [math]\displaystyle{ f(n) = \Theta(n^{c_{\operatorname{crit}}}\log^{k} n) }[/math] for any [math]\displaystyle{ k \gt -1 }[/math] | ... then [math]\displaystyle{ T(n)= \Theta\left( n^{c_{\operatorname{crit}}} \log^{k+1} n \right) }[/math] (The bound is the splitting term, where the log is augmented by a single power.) | If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = \Theta(n^{1/2}/\log^{1/2} n) }[/math], then [math]\displaystyle{ T(n) = \Theta(n^{1/2} \log^{1/2} n) }[/math]. |
| 2b | When [math]\displaystyle{ f(n) = \Theta(n^{c_{\operatorname{crit}}}\log^{k} n) }[/math] for [math]\displaystyle{ k = -1 }[/math] | ... then [math]\displaystyle{ T(n)= \Theta\left( n^{c_{\operatorname{crit}}} \log \log n \right) }[/math] (The bound is the splitting term, where the log reciprocal is replaced by an iterated log.) | If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = \Theta(n^{1/2}/\log n) }[/math], then [math]\displaystyle{ T(n) = \Theta(n^{1/2} \log \log n) }[/math]. |
| 2c | When [math]\displaystyle{ f(n) = \Theta(n^{c_{\operatorname{crit}}}\log^{k} n) }[/math] for any [math]\displaystyle{ k \lt -1 }[/math] | ... then [math]\displaystyle{ T(n)= \Theta\left( n^{c_{\operatorname{crit}}} \right) }[/math] (The bound is the splitting term, where the log disappears.) | If [math]\displaystyle{ b=a^2 }[/math] and [math]\displaystyle{ f(n) = \Theta(n^{1/2}/\log^2 n) }[/math], then [math]\displaystyle{ T(n) = \Theta(n^{1/2}) }[/math]. |
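The case analysis in the tables above can be sketched as a small classifier. This is a minimal illustration, not a general solver: it assumes the splitting term has exactly the form Θ(n^c log^k n), for which the regularity condition of case 3 holds whenever c exceeds the critical exponent. The names `master_bound` and `_theta` and the plain-text output format are hypothetical.

```python
import math


def _theta(poly, log_pow=0.0, loglog=False):
    """Render Theta(n^poly log^log_pow n) as a plain string."""
    parts = [f"n^{poly:g}"]
    if loglog:
        parts.append("log log n")
    elif log_pow != 0:
        parts.append(f"log^{log_pow:g} n")
    return "Theta(" + " ".join(parts) + ")"


def master_bound(a, b, c, k=0.0):
    """Classify T(n) = a*T(n/b) + Theta(n^c log^k n); return (case, bound)."""
    c_crit = math.log(a) / math.log(b)
    if math.isclose(c, c_crit):          # splitting work comparable to subproblems
        if k > -1:
            return "2a", _theta(c_crit, k + 1)
        if k == -1:
            return "2b", _theta(c_crit, loglog=True)
        return "2c", _theta(c_crit)
    if c < c_crit:                       # leaf-heavy recursion tree
        return "1", _theta(c_crit)
    return "3", _theta(c, k)             # root-heavy: the bound is f(n) itself


print(master_bound(8, 2, 2))      # ('1', 'Theta(n^3)')
print(master_bound(2, 2, 1))      # ('2a', 'Theta(n^1 log^1 n)')
print(master_bound(2, 2, 1, -1))  # ('2b', 'Theta(n^1 log log n)')
print(master_bound(2, 2, 2))      # ('3', 'Theta(n^2)')
```

The floating-point comparison with `math.isclose` is a pragmatic choice for this sketch; an exact treatment would compare a with b^c symbolically.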

Examples

Case 1 example

- [math]\displaystyle{ T(n) = 8 T\left(\frac{n}{2}\right) + 1000n^2 }[/math]

As one can see from the formula above:

- [math]\displaystyle{ a = 8, \, b = 2, \, f(n) = 1000n^2 }[/math], so

- [math]\displaystyle{ f(n) = O\left(n^c\right) }[/math], where [math]\displaystyle{ c = 2 }[/math]

Next, we see if we satisfy the case 1 condition:

- [math]\displaystyle{ \log_b a = \log_2 8 = 3\gt c }[/math].

It follows from the first case of the master theorem that

- [math]\displaystyle{ T(n) = \Theta\left( n^{\log_b a} \right) = \Theta\left( n^{3} \right) }[/math]

(This result is confirmed by the exact solution of the recurrence relation, which is [math]\displaystyle{ T(n) = 1001 n^3 - 1000 n^2 }[/math], assuming [math]\displaystyle{ T(1) = 1 }[/math]).
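The closed form quoted above can be spot-checked by evaluating the recurrence directly. The sketch below assumes n is a power of 2 and takes T(1) = 1 as the base case, as in the text; the function name `T` is chosen here for illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def T(n):
    """T(n) = 8*T(n/2) + 1000*n^2 with T(1) = 1, for n a power of 2."""
    if n == 1:
        return 1
    return 8 * T(n // 2) + 1000 * n**2


# Compare the recurrence with the closed form 1001 n^3 - 1000 n^2.
for n in (1, 2, 4, 8, 1024):
    assert T(n) == 1001 * n**3 - 1000 * n**2
```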

Case 2 example

[math]\displaystyle{ T(n) = 2 T\left(\frac{n}{2}\right) + 10n }[/math]

As we can see in the formula above the variables get the following values:

- [math]\displaystyle{ a = 2, \, b = 2, \, c = 1, \, f(n) = 10n }[/math]

- [math]\displaystyle{ f(n) = \Theta\left(n^{c} \log^{k} n\right) }[/math] where [math]\displaystyle{ c = 1, k = 0 }[/math]

Next, we see if we satisfy the case 2 condition:

- [math]\displaystyle{ \log_b a = \log_2 2 = 1 }[/math], and therefore, c and [math]\displaystyle{ \log_b a }[/math] are equal

So it follows from the second case of the master theorem:

- [math]\displaystyle{ T(n) = \Theta\left( n^{\log_b a} \log^{k+1} n\right) = \Theta\left( n^{1} \log^{1} n\right) = \Theta\left(n \log n\right) }[/math]

Thus the given recurrence relation [math]\displaystyle{ T(n) }[/math] is in [math]\displaystyle{ \Theta(n \log n) }[/math].

(This result is confirmed by the exact solution of the recurrence relation, which is [math]\displaystyle{ T(n) = n + 10 n\log_2 n }[/math], assuming [math]\displaystyle{ T(1) = 1 }[/math]).
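The exact solution quoted above can be recovered by unrolling the recurrence. As a sketch, assume [math]\displaystyle{ n = 2^m }[/math] is a power of two and [math]\displaystyle{ T(1) = 1 }[/math]; the symbol [math]\displaystyle{ m }[/math] is introduced here only for illustration:

- [math]\displaystyle{ T(n) = 2 T\left(\frac{n}{2}\right) + 10n }[/math]

- [math]\displaystyle{ \phantom{T(n)} = 4 T\left(\frac{n}{4}\right) + 10n + 10n }[/math]

- [math]\displaystyle{ \phantom{T(n)} = 8 T\left(\frac{n}{8}\right) + 10n + 10n + 10n }[/math]

- [math]\displaystyle{ \phantom{T(n)} = 2^m T\left(\frac{n}{2^m}\right) + 10 n m = n \, T(1) + 10 n \log_2 n = n + 10 n \log_2 n }[/math]

Each of the [math]\displaystyle{ m = \log_2 n }[/math] levels of the recursion contributes the same amount [math]\displaystyle{ 10n }[/math], which is where the extra logarithmic factor in case 2 comes from.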

Case 3 example

- [math]\displaystyle{ T(n) = 2 T\left(\frac{n}{2}\right) + n^2 }[/math]

As we can see in the formula above the variables get the following values:

- [math]\displaystyle{ a = 2, \, b = 2, \, f(n) = n^2 }[/math]

- [math]\displaystyle{ f(n) = \Omega\left(n^c\right) }[/math], where [math]\displaystyle{ c = 2 }[/math]

Next, we see if we satisfy the case 3 condition:

- [math]\displaystyle{ \log_b a = \log_2 2 = 1 }[/math], and therefore, yes, [math]\displaystyle{ c \gt \log_b a }[/math]

The regularity condition also holds:

- [math]\displaystyle{ 2 \left(\frac{n^2}{4}\right) \le k n^2 }[/math], choosing [math]\displaystyle{ k = 1/2 }[/math]

So it follows from the third case of the master theorem:

- [math]\displaystyle{ T \left(n \right) = \Theta\left(f(n)\right) = \Theta \left(n^2 \right). }[/math]

Thus the given recurrence relation [math]\displaystyle{ T(n) }[/math] is in [math]\displaystyle{ \Theta(n^2) }[/math], which agrees with the [math]\displaystyle{ f(n) }[/math] of the original formula.

(This result is confirmed by the exact solution of the recurrence relation, which is [math]\displaystyle{ T(n) = 2 n^2 - n }[/math], assuming [math]\displaystyle{ T(1) = 1 }[/math].)
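The exact solution quoted above also follows from summing the work level by level. As a sketch, assume [math]\displaystyle{ n = 2^m }[/math] and [math]\displaystyle{ T(1) = 1 }[/math] (the symbols [math]\displaystyle{ m }[/math] and [math]\displaystyle{ i }[/math] are introduced here for illustration). Level [math]\displaystyle{ i }[/math] of the recursion has [math]\displaystyle{ 2^i }[/math] subproblems of size [math]\displaystyle{ n/2^i }[/math], so

- [math]\displaystyle{ T(n) = \sum_{i=0}^{m-1} 2^i \left(\frac{n}{2^i}\right)^2 + 2^m \, T(1) = n^2 \sum_{i=0}^{m-1} 2^{-i} + n = n^2 \left(2 - \frac{2}{n}\right) + n = 2n^2 - n }[/math]

The per-level work decreases geometrically from the root, so the top-level term [math]\displaystyle{ n^2 }[/math] accounts for a constant fraction of the total, which is the root-heavy behaviour of case 3.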

Inadmissible equations

The following equations cannot be solved using the master theorem:[4]

- [math]\displaystyle{ T(n) = 2^nT\left (\frac{n}{2}\right )+n^n }[/math]

- a is not a constant; the number of subproblems should be fixed

- [math]\displaystyle{ T(n) = 2T\left (\frac{n}{2}\right )+\frac{n}{\log n} }[/math]

- non-polynomial difference between [math]\displaystyle{ f(n) }[/math] and [math]\displaystyle{ n^{\log_b a} }[/math] (see below; extended version applies)

- [math]\displaystyle{ T(n) = 0.5T\left (\frac{n}{2}\right )+n }[/math]

- [math]\displaystyle{ a\lt 1 }[/math]: there cannot be fewer than one subproblem

- [math]\displaystyle{ T(n) = 64T\left (\frac{n}{8}\right )-n^2\log n }[/math]

- [math]\displaystyle{ f(n) }[/math], which is the combination time, is not positive

- [math]\displaystyle{ T(n) = T\left (\frac{n}{2}\right )+n(2-\cos n) }[/math]

- falls under case 3, but the regularity condition is violated

In the second inadmissible example above, the difference between [math]\displaystyle{ f(n) }[/math] and [math]\displaystyle{ n^{\log_b a} }[/math] can be expressed with the ratio [math]\displaystyle{ \frac{f(n)}{n^{\log_b a}} = \frac{n / \log n}{n^{\log_2 2}} = \frac{n}{n \log n} = \frac{1}{\log n} }[/math]. This ratio tends to zero, but more slowly than [math]\displaystyle{ n^{-\epsilon} }[/math] for any constant [math]\displaystyle{ \epsilon \gt 0 }[/math], so [math]\displaystyle{ f(n) }[/math] is not [math]\displaystyle{ O(n^{1-\epsilon}) }[/math] and case 1 does not apply; nor is [math]\displaystyle{ f(n) }[/math] of the form [math]\displaystyle{ \Theta(n \log^{k} n) }[/math] with [math]\displaystyle{ k \ge 0 }[/math] required by the basic case 2. Therefore, the difference is not polynomial and the basic form of the Master Theorem does not apply. The extended form (case 2b) does apply, giving the solution [math]\displaystyle{ T(n) = \Theta(n\log\log n) }[/math].
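The regularity violation in the last inadmissible example can be observed numerically. The sketch below evaluates the ratio a·f(n/b)/f(n), which the regularity condition requires to stay below some constant less than 1 for all sufficiently large n. The helper names are hypothetical, and a finite scan like this can only illustrate a violation, not prove that the condition holds.

```python
import math


def regularity_ratio(f, a, b, n):
    """Return a*f(n/b) / f(n); regularity needs this <= k for some k < 1."""
    return a * f(n / b) / f(n)


def square(n):
    """f(n) from the Case 3 example (used with a = 2, b = 2)."""
    return n**2


def wobbly(n):
    """f(n) from the last inadmissible example (used with a = 1, b = 2)."""
    return n * (2 - math.cos(n))


# For f(n) = n^2 the ratio stays at 1/2, matching the choice k = 1/2 in the text.
print(max(regularity_ratio(square, 2, 2, n) for n in range(2, 1000)))

# For f(n) = n(2 - cos n) the ratio exceeds 1 again and again.
violations = [n for n in range(2, 1000) if regularity_ratio(wobbly, 1, 2, n) > 1]
print(len(violations), violations[:3])
```

The ratio for the oscillating term exceeds 1 whenever cos(n/2) is close to -1, which keeps happening as n grows, so no constant below 1 bounds it for all large n.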

Application to common algorithms

| Algorithm | Recurrence relationship | Run time | Comment |

|---|---|---|---|

| Binary search | [math]\displaystyle{ T(n) = T\left(\frac{n}{2}\right) + O(1) }[/math] | [math]\displaystyle{ O(\log n) }[/math] | Apply Master theorem case [math]\displaystyle{ c = \log_b a }[/math], where [math]\displaystyle{ a = 1, b = 2, c = 0, k = 0 }[/math][5] |

| Binary tree traversal | [math]\displaystyle{ T(n) = 2 T\left(\frac{n}{2}\right) + O(1) }[/math] | [math]\displaystyle{ O(n) }[/math] | Apply Master theorem case [math]\displaystyle{ c \lt \log_b a }[/math] where [math]\displaystyle{ a = 2, b = 2, c = 0 }[/math][5] |

| Optimal sorted matrix search | [math]\displaystyle{ T(n) = 2 T\left(\frac{n}{2}\right) + O(\log n) }[/math] | [math]\displaystyle{ O(n) }[/math] | Apply the Akra–Bazzi theorem for [math]\displaystyle{ p=1 }[/math] and [math]\displaystyle{ g(u)=\log(u) }[/math] to get [math]\displaystyle{ \Theta(2n - \log n) }[/math] |

| Merge sort | [math]\displaystyle{ T(n) = 2 T\left(\frac{n}{2}\right) + O(n) }[/math] | [math]\displaystyle{ O(n \log n) }[/math] | Apply Master theorem case [math]\displaystyle{ c = \log_b a }[/math], where [math]\displaystyle{ a = 2, b = 2, c = 1, k = 0 }[/math] |
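The merge sort row of the table above can be illustrated by instrumenting a simple implementation. This is a sketch written for this purpose rather than a reference implementation: it counts the elements written during merges, a quantity that satisfies W(n) = 2W(n/2) + n with W(1) = 0 and therefore equals n log₂ n when n is a power of two. The counter and the function name `merge_sort` are assumptions of this example.

```python
def merge_sort(items):
    """Return (sorted list, number of elements written during merges)."""
    if len(items) <= 1:
        return list(items), 0
    mid = len(items) // 2
    left, left_writes = merge_sort(items[:mid])
    right, right_writes = merge_sort(items[mid:])

    # Merge the two sorted halves; every element is written exactly once.
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, left_writes + right_writes + len(merged)


result, writes = merge_sort([5, 2, 7, 1, 8, 3, 6, 4])
print(result, writes)  # [1, 2, 3, 4, 5, 6, 7, 8] 24, i.e. 8 * log2(8)
```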

See also

- Akra–Bazzi method

- Asymptotic complexity

Notes

- ↑ Bentley, Jon Louis; Haken, Dorothea; Saxe, James B. (September 1980), "A general method for solving divide-and-conquer recurrences", ACM SIGACT News 12 (3): 36–44, doi:10.1145/1008861.1008865, http://www.dtic.mil/get-tr-doc/pdf?AD=ADA064294

- ↑ Duke University, "Big-Oh for Recursive Functions: Recurrence Relations", http://www.cs.duke.edu/~ola/ap/recurrence.html

- ↑ Chee Yap, A real elementary approach to the master recurrence and generalizations, Proceedings of the 8th annual conference on Theory and applications of models of computation (TAMC'11), pages 14–26, 2011.

- ↑ Massachusetts Institute of Technology (MIT), "Master Theorem: Practice Problems and Solutions", https://people.csail.mit.edu/thies/6.046-web/master.pdf

- ↑ 5.0 5.1 Dartmouth College, http://www.math.dartmouth.edu/archive/m19w03/public_html/Section5-2.pdf

References

- Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. Introduction to Algorithms, Second Edition. MIT Press and McGraw–Hill, 2001. ISBN:0-262-03293-7. Sections 4.3 (The master method) and 4.4 (Proof of the master theorem), pp. 73–90.

- Michael T. Goodrich and Roberto Tamassia. Algorithm Design: Foundation, Analysis, and Internet Examples. Wiley, 2002. ISBN:0-471-38365-1. The master theorem (including the version of Case 2 included here, which is stronger than the one from CLRS) is on pp. 268–270.
