Multiple factor analysis

Multiple factor analysis (MFA) is a factorial method[1] devoted to the study of tables in which a group of individuals is described by a set of variables (quantitative and/or qualitative) structured in groups. It is a multivariate method from the field of ordination, used to simplify multidimensional data structures. MFA treats all the tables involved in the same way (symmetrical analysis). It may be seen as an extension of:

- Principal component analysis (PCA) when variables are quantitative,

- Multiple correspondence analysis (MCA) when variables are qualitative,

- Factor analysis of mixed data (FAMD) when the active variables belong to the two types.

Introductory example

Why introduce several active groups of variables in the same factorial analysis?

Data

Consider the case of quantitative variables, that is, the framework of PCA. Data from ecological research provide a useful illustration. For 72 stations, two types of measurements are available:

- The abundance-dominance coefficient of 50 plant species (coefficient ranging from 0 = the plant is absent, to 9 = the species covers more than three-quarters of the surface). The whole set of the 50 coefficients defines the floristic profile of a station.

- Eleven pedological measurements (pedology = soil science): particle size, physical and chemical properties, etc. The set of these eleven measurements defines the pedological profile of a station.

Three analyses are possible:

- PCA of flora (pedology as supplementary): this analysis focuses on the variability of the floristic profiles. Two stations are close to one another if they have similar floristic profiles. In a second step, the main dimensions of this variability (i.e. the principal components) are related to the pedological variables introduced as supplementary.

- PCA of pedology (flora as supplementary): this analysis focuses on the variability of soil profiles. Two stations are close if they have the same soil profile. The main dimensions of this variability (i.e. the principal components) are then related to the abundance of plants.

- PCA of the two groups of variables as active: one may want to study the variability of stations from the point of view of both flora and soil. In this approach, two stations should be close if they have both similar flora and similar soils.

Balance between groups of variables

Methodology

The third analysis of the introductory example implicitly assumes a balance between flora and soil. However, in this example, the mere fact that the flora is represented by 50 variables and the soil by 11 variables implies that the PCA with 61 active variables will be influenced mainly by the flora (at least on the first axis). This is not desirable: there is no reason to want one group to play a more important role in the analysis.

The core of MFA is based on a factorial analysis (PCA in the case of quantitative variables, MCA in the case of qualitative variables) in which the variables are weighted. These weights are identical for the variables of the same group (and vary from one group to another). They are such that the maximum axial inertia of a group is equal to 1: in other words, by applying the PCA (or, where applicable, the MCA) to one group with this weighting, we obtain a first eigenvalue equal to 1. To get this property, MFA assigns to each variable of group $j$ a weight equal to the inverse of the first eigenvalue of the analysis (PCA or MCA according to the type of variable) of group $j$.

Formally, noting $\lambda_1^j$ the first eigenvalue of the factorial analysis of group $j$, the MFA assigns weight $1/\lambda_1^j$ to each variable of group $j$.

Balancing maximum axial inertia rather than the total inertia (= the number of variables in standard PCA) gives the MFA several important properties for the user. More directly, its interest appears in the following example.
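The effect of this weighting on the inertia of a single group can be written out as follows. This is a sketch for standardized quantitative variables only; the symbols $\lambda_s^j$ (eigenvalue of rank $s$ of the separate PCA of group $j$), $K_j$ (number of variables of group $j$) and $m_k$ (weight of variable $k$) are notation assumed here for illustration.

```latex
% Assumed notation: \lambda_s^j = eigenvalue of rank s of the separate PCA of group j,
% K_j = number of variables in group j, m_k = MFA weight of variable k.
\begin{align*}
m_k &= \frac{1}{\lambda_1^j} \quad \text{for every variable } k \text{ of group } j, \\
\text{maximum axial inertia of group } j &= \frac{\lambda_1^j}{\lambda_1^j} = 1, \\
\text{total inertia of group } j &= \sum_{s} \frac{\lambda_s^j}{\lambda_1^j} \in [1, K_j].
\end{align*}
```

The total inertia is therefore not equalized: a group of two uncorrelated variables keeps a total inertia of 2, whereas a group of two identical variables is reduced to a total inertia of 1. Only the largest inertia in any single direction is made the same for all groups.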

Example

Consider two groups of variables defined on the same set of individuals.

- Group 1 is composed of two uncorrelated variables A and B.

- Group 2 is composed of two variables {C1, C2} identical to the same variable C uncorrelated with the first two.

This example is not completely unrealistic. It is often necessary to simultaneously analyse multi-dimensional and (quite) one-dimensional groups.

Since both groups have the same number of variables, they have the same total inertia.

In this example the first axis of the PCA is almost coincident with C. Indeed, in the space of variables, there are two variables in the direction of C: group 2, with all its inertia concentrated in one direction, influences predominantly the first axis. For its part, group 1, consisting of two orthogonal variables (= uncorrelated), has its inertia uniformly distributed in a plane (the plane generated by the two variables) and hardly weighs on the first axis.
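The dominance of group 2 in an unweighted PCA can be checked with a small sketch. The data below are hypothetical and idealised: A, B and C are built as exactly uncorrelated, standardized variables on four individuals, which is an assumption made only for illustration.

```python
import numpy as np

# Hypothetical data: three exactly uncorrelated, standardized variables
# observed on four individuals (orthogonal +1/-1 contrasts).
A = np.array([1.0, -1.0, 1.0, -1.0])
B = np.array([1.0, 1.0, -1.0, -1.0])
C = np.array([1.0, -1.0, -1.0, 1.0])

# Group 1 = {A, B}; group 2 = {C1, C2}, two copies of C.
X = np.column_stack([A, B, C, C])

# Standardized PCA = eigendecomposition of the correlation matrix.
R = np.corrcoef(X, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(R)      # eigh returns ascending order
order = np.argsort(eigvals)[::-1]         # reorder to descending
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Share of each group in the inertia of axis 1 (sum of squared loadings).
first_axis = eigvecs[:, 0]
contrib_group1 = float(np.sum(first_axis[:2] ** 2))
contrib_group2 = float(np.sum(first_axis[2:] ** 2))

print(np.round(eigvals, 6))
print(contrib_group1, contrib_group2)
```

With these idealised data the eigenvalues are 2, 1, 1 and 0, and group 2 accounts for all of the inertia of the first axis. With real data, where the variables are only approximately uncorrelated, the first axis would be almost rather than exactly coincident with C.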

Numerical Example

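[Table 1: data of the numerical example]

[Table 2: inertia of the first two axes of the PCA and of the MFA]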

Table 2 summarizes the inertia of the first two axes of the PCA and of the MFA applied to Table 1.

Group 2 variables contribute 88.95% of the inertia of axis 1 of the PCA. The first axis ($F_1$) is almost coincident with C: the correlation between C and $F_1$ is .976.

The first axis of the MFA (on Table 1 data) shows the balance between the two groups of variables: the contribution of each group to the inertia of this axis is strictly equal to 50%.

The second axis, meanwhile, depends only on group 1. This is natural since this group is two-dimensional while the second group, being one-dimensional, can be highly related to only one axis (here the first axis).
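The same behaviour can be reproduced with a minimal sketch of the MFA weighting. The data are hypothetical (they are not the data of Table 1): A and B are uncorrelated, and C is built so that its correlation with each of them is 0.4. The helper names `standardize` and `first_eigenvalue` are illustrative, and the weighted PCA is carried out by scaling each column by the square root of its weight, which is one standard way to implement it.

```python
import numpy as np

def standardize(X):
    """Center each column and scale it to unit (population) variance."""
    return (X - X.mean(axis=0)) / X.std(axis=0)

def first_eigenvalue(Z):
    """Largest eigenvalue of the correlation matrix of a standardized block."""
    n = Z.shape[0]
    return float(np.linalg.eigvalsh(Z.T @ Z / n)[-1])

# Hypothetical data: orthogonal contrasts on four individuals.
u1 = np.array([1.0, -1.0, 1.0, -1.0])
u2 = np.array([1.0, 1.0, -1.0, -1.0])
u3 = np.array([1.0, -1.0, -1.0, 1.0])
A, B = u1, u2
C = 0.4 * u1 + 0.4 * u2 + np.sqrt(0.68) * u3   # r(A, C) = r(B, C) = 0.4

groups = [np.column_stack([A, B]), np.column_stack([C, C])]
blocks = [standardize(G) for G in groups]

# MFA weight of every variable of a group: 1 / first eigenvalue of that group.
lambdas = [first_eigenvalue(Z) for Z in blocks]
# Scaling columns by sqrt(weight) turns the weighted PCA into a plain one.
Zw = np.hstack([Z / np.sqrt(lam) for Z, lam in zip(blocks, lambdas)])

n = Zw.shape[0]
eigvals, eigvecs = np.linalg.eigh(Zw.T @ Zw / n)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Relative contribution of each group to each axis (rows: groups, columns: axes).
bounds = np.cumsum([0] + [Z.shape[1] for Z in blocks])
contrib = np.array([
    (eigvecs[lo:hi, :] ** 2).sum(axis=0)
    for lo, hi in zip(bounds[:-1], bounds[1:])
])

print(np.round(lambdas, 4))
print(np.round(eigvals, 4))
print(np.round(contrib[:, :2], 4))
```

On these hypothetical data each group contributes half of the inertia of the first axis, and the second axis is due to group 1 alone, which mirrors the pattern described above.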

Conclusion about the balance between groups

Introducing several active groups of variables in a factorial analysis implicitly assumes a balance between these groups.

This balance must take into account that a multidimensional group naturally influences more axes than a one-dimensional group does (the latter can be closely related to only one axis).

The weighting of the MFA, which makes the maximum axial inertia of each group equal to 1, plays this role.

Application examples

Survey. Questionnaires are generally structured according to different themes. Each theme is a group of variables, for example, questions about opinions and questions about behaviour. Thus, in this example, we may want to perform a factorial analysis in which two individuals are close if they have expressed both the same opinions and the same behaviour.

Sensory analysis. The same set of products has been evaluated by a panel of experts and a panel of consumers. For its evaluation, each jury uses a list of descriptors (sour, bitter, etc.). Each judge scores each descriptor for each product on a scale of intensity ranging, for example, from 0 = null or very low to 10 = very strong. In the table associated with a jury, at the intersection of row $i$ and column $k$, is the average score assigned to product $i$ for descriptor $k$.

Individuals are the products. Each jury is a group of variables. We want to perform a factorial analysis in which two products are similar if they were evaluated in the same way by both juries.

Multidimensional time series. $K$ variables are measured on $I$ individuals. These measurements are made at $T$ dates. There are many ways to analyse such a data set. One way suggested by MFA is to consider each date as a group of variables in the analysis of the $T$ tables (each table corresponds to one date) juxtaposed side by side (the table analysed thus has $I$ rows and $T \times K$ columns).
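The juxtaposition of the tables for the time-series case can be sketched as follows. The sizes and the random values are hypothetical, and the names `I`, `K`, `T` and `group_slices` are assumptions made for illustration (number of individuals, of variables, of dates, and the column range of each group).

```python
import numpy as np

# Hypothetical sizes: I individuals, K variables, T dates.
I, K, T = 5, 3, 4
rng = np.random.default_rng(0)

# One I x K table per date (random values stand in for real measurements).
tables = [rng.normal(size=(I, K)) for _ in range(T)]

# Juxtapose the T tables side by side: one row per individual.
X = np.hstack(tables)

# Column range of each group (one group per date), for the group weighting.
group_slices = [slice(t * K, (t + 1) * K) for t in range(T)]

print(X.shape)
```

Keeping the column range of each date alongside the juxtaposed table makes it possible to weight each date as one group afterwards.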

Conclusion: These examples show that in practice, variables are very often organized into groups.

Graphics from MFA

Beyond the weighting of variables, interest in MFA lies in a series of graphics and indicators valuable in the analysis of a table whose columns are organized into groups.

Graphics common to all the simple factorial analyses (PCA, MCA)

The core of MFA is a weighted factorial analysis: MFA first provides the classical results of factorial analyses.

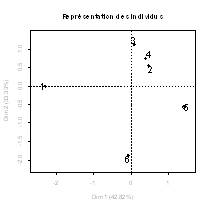

1. Representations of individuals in which two individuals are close to each other if they exhibit similar values for many variables in the different variable groups; in practice the user particularly studies the first factorial plane.

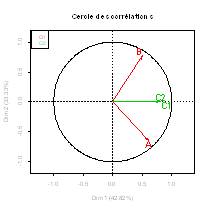

2. Representations of quantitative variables as in PCA (correlation circle).


In the example:

- The first axis mainly opposes individuals 1 and 5 (Figure 1).

- The four variables have a positive coordinate (Figure 2): the first axis is a size effect. Thus, individual 1 has low values for all the variables and individual 5 has high values for all the variables.

3. Indicators aiding interpretation: projected inertia, contributions and quality of representation. In the example, the contribution of individuals 1 and 5 to the inertia of the first axis is 45.7% + 31.5% = 77.2% which justifies the interpretation focussed on these two points.

4. Representations of categories of qualitative variables as in MCA (a category lies at the centroid of the individuals who possess it). There are no qualitative variables in the example.

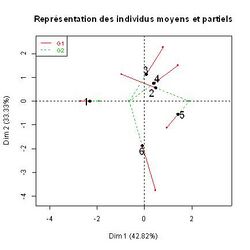

Graphics specific to this kind of multiple table

5. Superimposed representations of individuals "seen" by each group. An individual considered from the point of view of a single group is called a partial individual (in parallel, an individual considered from the point of view of all the variables is called a mean individual because it lies at the center of gravity of its partial points). The partial cloud $N_I^j$ gathers the $I$ individuals from the perspective of the single group $j$ (i.e. $\{i^j, i = 1, \dots, I\}$): this is the cloud analysed in the separate factorial analysis (PCA or MCA) of group $j$. The superimposed representation of the $N_I^j$ provided by MFA is similar in purpose to that provided by Procrustes analysis.

In the example (Figure 3), individual 1 is characterized by a small size (i.e. small values) in terms of both group 1 and group 2 (the partial points of individual 1 have a negative coordinate and are close to one another). By contrast, individual 5 is characterized more by high values for the variables of group 2 than for the variables of group 1 (for individual 5, the group 2 partial point lies further from the origin than the group 1 partial point). This reading of the graph can be checked directly in the data.
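The construction of partial points can be sketched as follows. The data are hypothetical (not those of the example), and the sketch assumes the usual construction in which the data of a single group, with the columns of the other groups set to zero, are projected onto the MFA axes and dilated by the number of groups, so that each mean individual lies at the centroid of its partial points.

```python
import numpy as np

# Hypothetical data: two groups of two variables on four individuals.
u1 = np.array([1.0, -1.0, 1.0, -1.0])
u2 = np.array([1.0, 1.0, -1.0, -1.0])
u3 = np.array([1.0, -1.0, -1.0, 1.0])
C = 0.4 * u1 + 0.4 * u2 + np.sqrt(0.68) * u3
groups = [np.column_stack([u1, u2]), np.column_stack([C, C])]

n = groups[0].shape[0]   # number of individuals
J = len(groups)          # number of groups

# Standardize each block, then divide it by the square root of its
# first eigenvalue (MFA weighting applied as a column scaling).
blocks = []
for G in groups:
    Z = (G - G.mean(axis=0)) / G.std(axis=0)
    lam1 = np.linalg.eigvalsh(Z.T @ Z / n)[-1]
    blocks.append(Z / np.sqrt(lam1))
Zw = np.hstack(blocks)

# Axes of the global analysis, sorted by decreasing eigenvalue.
eigvals, V = np.linalg.eigh(Zw.T @ Zw / n)
V = V[:, np.argsort(eigvals)[::-1]]

# Coordinates of the mean individuals.
F = Zw @ V

# Partial individuals: keep only the columns of group j, project, dilate by J.
partial = []
start = 0
for Zj in blocks:
    masked = np.zeros_like(Zw)
    masked[:, start:start + Zj.shape[1]] = Zj
    partial.append(J * (masked @ V))
    start += Zj.shape[1]
partial = np.array(partial)      # shape: (groups, individuals, axes)

# Each mean individual is the centroid of its partial points.
centroid = partial.mean(axis=0)
print(np.allclose(centroid, F))
```

The final check confirms the centroid property on these data; the distance between the partial points of one individual then shows how much the groups disagree about that individual.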

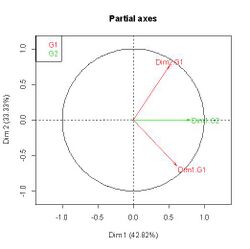

6. Representations of groups of variables as such. In these graphs, each group of variables is represented by a single point. Two groups of variables are close to one another when they define the same structure on the individuals. Extreme case: two groups of variables that define homothetic clouds of individuals coincide. The coordinate of group $j$ along axis $s$ is equal to the contribution of group $j$ to the inertia of the MFA dimension of rank $s$. This contribution can be interpreted as an indicator of relationship (between group $j$ and axis $s$, hence the name relationship square given to this type of representation). This representation also exists in other factorial methods (MCA and FAMD in particular), in which case the groups of variables are each reduced to a single variable.

In the example (Figure 4), this representation shows that the first axis is related to the two groups of variables, while the second axis is related to the first group. This agrees with the representation of the variables (Figure 2). In practice, this representation is especially valuable when the groups are numerous and include many variables.

Another reading: the two groups of variables have the size effect in common (first axis) and differ along axis 2, since this axis is specific to group 1 (it opposes the variables A and B).
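For standardized quantitative variables, the coordinate of a group can be written explicitly. The following is a sketch under assumed notation: $F_s$ is the MFA factor of rank $s$, $\lambda_s$ its eigenvalue, $\lambda_1^j$ the first eigenvalue of the separate PCA of group $j$, and $r$ the correlation coefficient. The contribution is taken here as an absolute inertia, not as a percentage.

```latex
% Assumed notation: F_s = MFA factor of rank s, \lambda_s = its eigenvalue,
% \lambda_1^j = first eigenvalue of the separate PCA of group j.
\begin{align*}
\mathrm{coord}_s(j) &= \frac{1}{\lambda_1^j} \sum_{k \in \text{group } j} r^2(k, F_s), \\
0 \le \mathrm{coord}_s(j) &\le 1, \\
\sum_{j} \mathrm{coord}_s(j) &= \lambda_s .
\end{align*}
```

The upper bound of 1 follows from the weighting, which sets the maximum axial inertia of each group to 1. This is why the groups are plotted in a square of side 1.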

7. Representations of factors of separate analyses of the different groups. These factors are represented as supplementary quantitative variables (correlation circle).

In the example (Figure 5), the first axis of the MFA is relatively strongly correlated (r = .80) with the first component of group 2. This group, consisting of two identical variables, possesses only one principal component (which coincides with the variable C). Group 1 consists of two orthogonal variables: any direction of the subspace generated by these two variables has the same inertia (equal to 1). So the choice of principal components is indeterminate and there is no reason to be interested in one of them in particular. However, the two components provided by the program are well represented: the plane of the MFA is close to the plane spanned by the two variables of group 1.

Conclusion

The numerical example illustrates the output of the MFA. Besides balancing the groups of variables, and besides the usual graphics of PCA (or of MCA in the case of qualitative variables), MFA provides results specific to the group structure of the set of variables, in particular:

- A superimposed representation of partial individuals for a detailed analysis of the data;

- A representation of groups of variables providing a synthetic image that is all the more valuable as the data include many groups;

- A representation of factors from separate analyses.

The small size and simplicity of the example allow simple validation of the rules of interpretation, but the method is more valuable when the data set is large and complex. Other methods suitable for this type of data are available. Procrustes analysis is compared to MFA in Pagès (2014).[2]

History

MFA was developed by Brigitte Escofier and Jérôme Pagès in the 1980s. It is at the heart of two books written by these authors.[3][4] MFA and its extensions (hierarchical MFA, MFA on contingency tables, etc.) are a research topic of the applied mathematics laboratory of Agrocampus (LMA²), which published a book presenting basic methods of exploratory multivariate analysis.[5]

Software

MFA is available in two R packages (FactoMineR and ADE4) and in many software packages, including SPAD, Uniwin, XLSTAT, etc. There is also a SAS function. The graphs in this article come from the R package FactoMineR.

References

- [1] Greenacre, Michael; Blasius, Jorg (2006-06-23). Multiple Correspondence Analysis and Related Methods. CRC Press. pp. 352–. ISBN 9781420011319. https://books.google.com/books?id=ZvYV1lfU5zIC&pg=PA352. Retrieved 11 June 2014.

- [2] Pagès, Jérôme (2014). Multiple Factor Analysis by Example Using R. Chapman & Hall/CRC The R Series, London. 272 p.

- [3] Ibidem.

- [4] Escofier, Brigitte & Pagès, Jérôme (2008). Analyses factorielles simples et multiples; objectifs, méthodes et interprétation. Dunod, Paris. 318 p. ISBN 978-2-10-051932-3

- [5] Husson, F., Lê, S. & Pagès, J. (2009). Exploratory Multivariate Analysis by Example Using R. Chapman & Hall/CRC The R Series, London. ISBN 978-2-7535-0938-2

External links

- FactoMineR: R software devoted to exploratory data analysis.
