Skinput

Skinput is an input technology that uses bio-acoustic sensing to localize finger taps on the skin. When augmented with a pico-projector, the device can provide a direct-manipulation graphical user interface on the body. The technology was developed by Chris Harrison, Desney Tan and Dan Morris at Microsoft Research's Computational User Experiences Group.[1] Skinput represents one way to decouple input from electronic devices, with the aim of allowing devices to become smaller without simultaneously shrinking the surface area on which input can be performed. While other systems, such as SixthSense, have attempted this with computer vision, Skinput employs acoustics, taking advantage of the human body's natural sound-conductive properties (e.g., bone conduction).[2] This allows the body to be appropriated as an input surface without the need to instrument the skin invasively with sensors, tracking markers, or other items.

Microsoft has not commented on the future of the project, other than to say that it is under active development. In 2010, it was reported that Skinput would not appear in commercial devices for at least two years.[3]

Operation

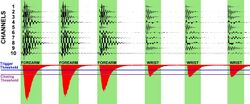

Skinput has been publicly demonstrated as an armband that sits on the biceps. This prototype contains ten small, cantilevered piezo elements configured to be highly resonant, sensitive to frequencies between 25 and 78 Hz.[4] This configuration acts like a mechanical fast Fourier transform and provides extreme out-of-band noise suppression, allowing the system to function even while the user is in motion. From the upper arm, the sensors can localize finger taps applied to any part of the arm, all the way down to the fingertips, with accuracies in excess of 90% (as high as 96% for five input locations).[5] Classification is driven by a support vector machine using a series of time-independent acoustic features that act like a fingerprint. As with speech recognition systems, the Skinput recognition engine must be trained on the "sound" of each input location before use. After training, locations can be bound to interactive functions, such as pausing or playing a song, raising or lowering the music volume, speed dialing, and menu navigation.
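The pipeline described above (resonant sensors acting as a frequency analyzer, a per-location fingerprint learned during training, and a binding from locations to functions) can be illustrated with a small software sketch. It is not Skinput's implementation. Several assumptions are made: the ten sensor frequencies are spaced evenly across 25 to 78 Hz, the Goertzel algorithm stands in for the mechanical resonators, a simple nearest-centroid classifier is swapped in for the support vector machine, and the names `SAMPLE_RATE_HZ`, `synthetic_tap`, `LOCATION_FREQS` and `ACTIONS` are all hypothetical.

```python
import math

# Assumption: ten resonant frequencies spaced evenly across the 25-78 Hz range
# reported for the prototype; the real element tunings are not given here.
SENSOR_FREQS_HZ = [25 + i * (78 - 25) / 9 for i in range(10)]
SAMPLE_RATE_HZ = 1000  # hypothetical sampling rate


def goertzel_power(samples, freq_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """Power of `samples` at a single frequency, using the Goertzel algorithm."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq_hz / sample_rate_hz)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s_prev, s_prev2 = x + coeff * s_prev - s_prev2, s_prev
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def fingerprint(samples):
    """One energy per simulated sensor, scaled to unit length so tap force cancels out."""
    energies = [goertzel_power(samples, f) for f in SENSOR_FREQS_HZ]
    norm = math.sqrt(sum(e * e for e in energies)) or 1.0
    return [e / norm for e in energies]


class NearestCentroidTapClassifier:
    """Stand-in for the SVM: each location is represented by its mean fingerprint."""

    def __init__(self):
        self.centroids = {}

    def train(self, examples):
        """`examples` is a list of (location_label, samples) pairs recorded per location."""
        grouped = {}
        for label, samples in examples:
            grouped.setdefault(label, []).append(fingerprint(samples))
        self.centroids = {
            label: [sum(col) / len(fps) for col in zip(*fps)]
            for label, fps in grouped.items()
        }

    def classify(self, samples):
        """Return the trained location whose centroid is closest to this tap."""
        fp = fingerprint(samples)
        return min(
            self.centroids,
            key=lambda label: sum((a - b) ** 2 for a, b in zip(fp, self.centroids[label])),
        )


def synthetic_tap(freq_hz, amplitude, n=200):
    """Hypothetical test signal: a pure tone standing in for a real bio-acoustic tap."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE_HZ) for t in range(n)]


# Hypothetical locations, each "sounding" loudest at a different sensor frequency.
LOCATION_FREQS = {"wrist": SENSOR_FREQS_HZ[0], "forearm": SENSOR_FREQS_HZ[4], "palm": SENSOR_FREQS_HZ[9]}

# After training, each location is bound to an interactive function.
ACTIONS = {"wrist": "pause/play", "forearm": "volume up", "palm": "volume down"}

clf = NearestCentroidTapClassifier()
clf.train([(loc, synthetic_tap(f, a)) for loc, f in LOCATION_FREQS.items() for a in (1.0, 0.8)])

tap = synthetic_tap(LOCATION_FREQS["forearm"], 0.5)  # a softer tap on the forearm
print(ACTIONS[clf.classify(tap)])  # prints "volume up"
```

Normalizing each fingerprint to unit length is a design choice made for the sketch, so that a soft tap and a hard tap on the same location map to the same feature vector; a real system would face far noisier, overlapping signals, which is one reason a discriminative classifier such as an SVM is preferable to nearest-centroid matching.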

With the addition of a pico-projector to the armband, Skinput allows users to interact with a graphical user interface displayed directly on the skin. This enables several interaction modalities, including button-based hierarchical navigation, list-based sliding navigation (similar to an iPod, smartphone, or MID), text and number entry (e.g., a telephone number keypad), and gaming (e.g., Tetris, Frogger).[6][7]
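Button-based hierarchical navigation of the kind described above can be sketched as a small state machine in which each projected button is drawn on a fixed tap location, and the classified location of a tap selects that button. This is an illustrative assumption, not Skinput's actual interface code: the menu tree, the location names and the `ProjectedMenu` class are all hypothetical, and the menu labels only loosely echo the demo modes mentioned in this article.

```python
# Hypothetical menu tree loosely modelled on the demo modes; not Skinput's actual UI.
MENU = {
    "root": ["music player", "email inbox", "games"],
    "music player": ["play/pause", "next track", "back"],
    "email inbox": ["open newest", "back"],
    "games": ["tetris", "frogger", "back"],
}


class ProjectedMenu:
    """Button-based hierarchical navigation driven by classified tap locations."""

    def __init__(self, menu, button_locations):
        self.menu = menu
        # Ordered tap locations on which the projector draws one button each.
        self.button_locations = button_locations
        self.stack = ["root"]

    @property
    def current(self):
        return self.stack[-1]

    def buttons(self):
        """Map each tap location to the button label projected onto it."""
        return dict(zip(self.button_locations, self.menu[self.current]))

    def tap(self, location):
        """Handle one tap; return a command string for leaf items, else None."""
        label = self.buttons().get(location)
        if label is None:
            return None  # no button is drawn at this location on the current screen
        if label == "back":
            if len(self.stack) > 1:
                self.stack.pop()
            return None
        if label in self.menu:
            self.stack.append(label)  # descend into a submenu
            return None
        return label  # leaf item: hand the command to the application


nav = ProjectedMenu(MENU, ["wrist", "forearm", "elbow"])
nav.tap("wrist")           # opens "music player"
print(nav.tap("wrist"))    # prints "play/pause"
nav.tap("elbow")           # "back" returns to the root menu
print(nav.current)         # prints "root"
```

Keeping the navigation history on a stack makes a "back" button trivial to support at any depth, and screens with fewer buttons than tap locations simply leave the extra locations unbound.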

Demonstrations

Although Skinput is an internal Microsoft Research project, it has been demonstrated publicly several times. Its first public appearance was at Microsoft's TechFest 2010, where the recognition model was trained live on stage during the presentation, followed by an interactive walkthrough of a simple mobile application with four modes: music player, email inbox, Tetris, and voice mail.[8] A similar live demo was given at the ACM CHI 2010 conference, where the academic paper received a "Best Paper" award and attendees were allowed to try the system. Numerous media outlets have covered the technology,[9][10][11][12][13] with several featuring live demos.[14]

References

- ↑ "Skinput: Appropriating the Body as an Input Surface". Microsoft Research Computational User Experiences Group. http://research.microsoft.com/en-us/um/redmond/groups/cue/skinput. Retrieved 26 May 2010.

- ↑ Harrison, Chris; Tan, Desney; Morris, Dan (10–15 April 2010). "Skinput: Appropriating the Body as an Input Surface". Proceedings of the ACM CHI Conference 2010. http://research.microsoft.com/en-us/um/redmond/groups/cue/publications/mkskinputchi2010.pdf.

- ↑ Goode, Lauren (26 April 2010). "The Skinny on Touch Technology". Wall Street Journal. https://blogs.wsj.com/digits/2010/04/26/the-skinny-on-touch-technology.

- ↑ Sutter, John (19 April 2010). "Microsoft's Skinput turns hands, arms into buttons". CNN. http://www.cnn.com/2010/TECH/04/19/microsoft.skinput/index.html.

- ↑ Ward, Mark (26 March 2010). "Sensors turn skin into gadget control pad". BBC News. http://news.bbc.co.uk/2/hi/8587486.stm.

- ↑ "Skinput: Appropriating the Body as an Input Surface" (blog). Chrisharrison.net. http://www.chrisharrison.net/projects/skinput.

- ↑ "Skinput: Appropriating the Body as an Input Surface". Youtube (from CHI 2010 conference). https://www.youtube.com/watch?v=g3XPUdW9Ryg.

- ↑ Dudley, Brier (1 March 2010). "A peek at where Microsoft thinks we're going tomorrow". Seattle Times. http://seattletimes.nwsource.com/html/businesstechnology/2011227913_techfest02.html.

- ↑ Hope, Dan (4 March 2010). "'Skinput' turns body into touchscreen interface". NBC News. http://www.nbcnews.com/id/35708587.

- ↑ Hornyak, Tom (2 March 2010). "Turn your arm into a phone with Skinput". CNET. http://news.cnet.com/8301-17938_105-10462255-1.html.

- ↑ Marks, Paul (1 March 2010). "Body acoustics can turn your arm into a touchscreen". New Scientist. https://www.newscientist.com/article/dn18591-body-acoustics-can-turn-your-arm-into-a-touchscreen.html.

- ↑ Dillow, Clay (3 March 2010). "Skinput Turns Any Bodily Surface Into a Touch Interface". Popular Science. http://www.popsci.com/gadgets/article/2010-03/skinput-turns-your-skin-peripheral-input-device-youll-never-misplace.

- ↑ "Technology: Skin Used As An Input Device" (interview transcript). National Public Radio. 4 March 2010. https://www.npr.org/templates/story/story.php?storyId=124303315&sc=emaf.

- ↑ Savov, Vladislav (2 March 2010). "Skinput: because touchscreens never felt right anyway" (video). Engadget. https://www.engadget.com/2010/03/02/skinput-because-touchscreens-never-felt-right-anyway-video/.
