Apache Hop

| | |
|---|---|
| Original author(s) | Bart Maertens |
| Developer(s) | Apache Software Foundation |
| Initial release | September 21, 2021[1] |
| Stable release | 1.1.0 / January 21, 2022 |
| Repository | github.com/apache/hop |
| Written in | Java |
| Operating system | Microsoft Windows, macOS, Linux, Web |
| Type | Data orchestration, data engineering, ETL |
| License | Apache License 2.0 |
| Website | hop.apache.org |

Apache Hop is an open-source[3] data orchestration and data integration platform,[2] written in Java and developed under the Apache Software Foundation. The project provides a visual development environment (IDE) for building workflows and pipelines. Hop describes data processing as metadata rather than code, and provides tools and functionality to manage a data project throughout its entire life cycle.[4] Hop can process data in batch, streaming and hybrid modes, and can run in the cloud, on-premises or in a hybrid setup.

History

Apache Hop started as Project Hop in the summer of 2019 as a fork of Kettle (or Pentaho Data Integration) 8.2.0.7.[5] Hop was redesigned and re-architected from the ground up, and was intended to be a separate and incompatible project from the start. After entering the Apache Incubator in October 2020[6], the project was renamed to Apache Hop. Hop graduated as an Apache Top-Level Project in December 2021.

Architecture

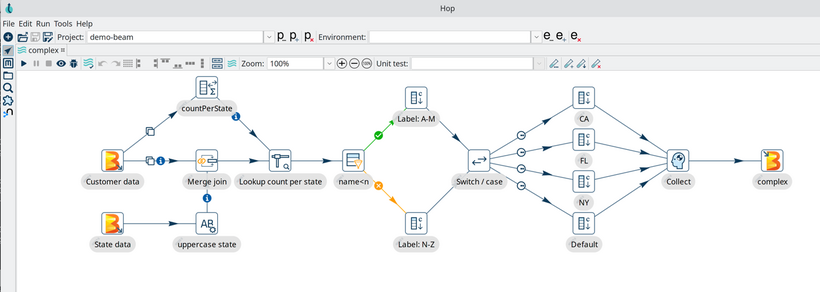

Hop's main file types are pipelines and workflows. Pipelines operate on the data: they read, modify, enrich, combine and otherwise process data before writing it to one or more target platforms. Workflows don't act on the data directly; they perform orchestration tasks such as environment checks and error handling, and they execute pipelines and child workflows. A pipeline is a collection of transforms, and a workflow is a collection of actions. Both the transforms in a pipeline and the actions in a workflow are connected through hops. The transforms in a pipeline run in parallel, whereas the actions in a workflow run sequentially.
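
The difference between the two execution models can be illustrated with a toy model. The sketch below is not Hop's API: `run_pipeline`, `run_workflow` and the queue-based "hops" are hypothetical names chosen for illustration, and the assumption is simply that each transform runs concurrently and passes rows downstream, while actions run one after another and stop on the first failure.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of a row stream


def run_pipeline(rows, transforms):
    """Toy pipeline: every transform runs in its own thread.

    The queues between threads stand in for hops. Error handling is
    deliberately left out to keep the sketch short.
    """
    hops = [queue.Queue() for _ in range(len(transforms) + 1)]

    def worker(transform, inbox, outbox):
        while True:
            row = inbox.get()
            if row is _DONE:
                outbox.put(_DONE)  # pass end-of-stream to the next transform
                return
            outbox.put(transform(row))

    threads = [
        threading.Thread(target=worker, args=(t, hops[i], hops[i + 1]))
        for i, t in enumerate(transforms)
    ]
    for thread in threads:
        thread.start()

    # Feed the input rows into the first hop, then signal end-of-stream.
    for row in rows:
        hops[0].put(row)
    hops[0].put(_DONE)

    # Collect whatever arrives on the last hop.
    results = []
    while True:
        row = hops[-1].get()
        if row is _DONE:
            break
        results.append(row)
    for thread in threads:
        thread.join()
    return results


def run_workflow(actions):
    """Toy workflow: actions run one at a time, stopping at the first failure.

    `actions` is a list of (name, callable) pairs; each callable returns
    True on success. Returns the names that completed and an overall flag.
    """
    completed = []
    for name, action in actions:
        if not action():
            return completed, False
        completed.append(name)
    return completed, True
```

In this model, `run_pipeline([" a ", " b "], [str.strip, str.upper])` streams each row through both transforms while they run side by side, whereas `run_workflow` never starts an action before the previous one has finished.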

Hop consists of a small, robust engine (kernel); all non-core functionality is added through plugins. The runtime engines that run pipelines and workflows are themselves plugins. Workflows can run locally or remotely in Hop's native run configuration. Pipelines can run in the same native local and remote run configurations, and can also run on Apache Spark, Apache Flink or Google Cloud Dataflow through Apache Beam.
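
The idea of pluggable runtime engines can be sketched as a small registry in which the kernel looks up an engine by the name of a run configuration. This is a minimal illustration under stated assumptions, not Hop's plugin system: `register_engine`, `run` and the `"local"` engine name are hypothetical.

```python
from typing import Callable, Dict, Iterable, List

Row = dict
Pipeline = List[Callable[[Row], Row]]
Engine = Callable[[Pipeline, Iterable[Row]], List[Row]]

# Hypothetical registry: the kernel knows engine names, not implementations.
_ENGINES: Dict[str, Engine] = {}


def register_engine(name: str) -> Callable[[Engine], Engine]:
    """Decorator that registers an engine plugin under a run configuration name."""
    def decorator(engine: Engine) -> Engine:
        _ENGINES[name] = engine
        return engine
    return decorator


@register_engine("local")
def local_engine(pipeline: Pipeline, rows: Iterable[Row]) -> List[Row]:
    """Simplest possible engine: apply every transform to every row in-process."""
    out = []
    for row in rows:
        for transform in pipeline:
            row = transform(row)
        out.append(row)
    return out


def run(pipeline: Pipeline, rows: Iterable[Row], run_configuration: str) -> List[Row]:
    """Run the same pipeline definition on whichever engine the configuration names."""
    try:
        engine = _ENGINES[run_configuration]
    except KeyError:
        raise ValueError(
            f"no engine plugin registered for {run_configuration!r}"
        ) from None
    return engine(pipeline, rows)
```

The point of the design is that the pipeline definition stays the same while the engine changes: adding, say, a Beam-backed engine in this sketch would mean registering one more function, not modifying `run`.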

Hop considers data projects to be more than just pipelines and workflows. The platform offers functionality not only to develop pipelines and workflows, but also to support data teams throughout a project's entire life cycle. This includes functionality to organize work in projects and environments, build and run unit tests, and integrate with CI/CD platforms and version control (Git), among others.[7]

Tools

The Apache Hop platform comes with a variety of tools:

- Hop Gui - the visual IDE where Hop developers build, run and test data pipelines and workflows

- Hop Conf - command line tool to manage all aspects of a Hop installation and configuration (e.g. projects and environments, cloud configuration)

- Hop Import - command line tool to import Pentaho Data Integration (Kettle) jobs and transformations into workflows and pipelines and upgrade them to Hop projects

- Hop Run - command line tool to run workflows and pipelines

- Hop Search - command line tool to search in a project's (or all of Hop's) metadata

- Hop Server - lightweight web server to run workflows and pipelines remotely

- Hop Translator - GUI tool to assist non-developers in translating the Hop tools
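
As an illustration of how the command line tools fit into automation, the sketch below builds an argument list for Hop Run. It rests on assumptions that should be checked against the installed version: the launcher is assumed to be a `hop-run.sh` script in the Hop installation directory, and the `--file`, `--runconfig` and `--project` options are assumed to match that version's Hop Run options. The helper name `hop_run_command` is hypothetical.

```python
from typing import List, Optional


def hop_run_command(
    file: str,
    project: Optional[str] = None,
    run_config: str = "local",
    hop_home: str = ".",
) -> List[str]:
    """Build the argument list for a Hop Run invocation.

    Assumption: the script name and option names below match the installed
    Hop version; verify them with the tool's help output before relying on them.
    """
    cmd = [f"{hop_home}/hop-run.sh", "--file", file, "--runconfig", run_config]
    if project is not None:
        cmd += ["--project", project]
    return cmd
```

The resulting list can be passed to `subprocess.run(cmd, check=True)`, for example from a CI job, so that a failing workflow or pipeline fails the build.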


References

1. "Hop 1.0.0". https://hop.apache.org/blog/2021/10/hop-1.0.0/.

2. "ETL Tool Apache Hop Graduates Incubator". 2022-01-19. https://www.datanami.com/2022/01/18/etl-tool-apache-hop-graduates-incubator/.

3. "Hop Repository". https://github.com/apache/hop.

4. "Apache Hop Harnesses Metadata to Create Visual Data Pipelines". 2022-02-02. https://thenewstack.io/apache-hop-harnesses-metadata-to-create-visual-data-pipelines/.

5. "Apache Hop data orchestration hits open source milestone". https://www.techtarget.com/searchdatamanagement/news/252512339/Apache-Hop-data-orchestration-hits-open-source-milestone.

6. "Apache Hop joins the Apache Software Foundation". https://hop.apache.org/blog/2020/10/hop-joins-the-asf/.

7. "Data Science: Apache Hop Orchestration Platform 1.0 works without code". 2021-10-13. https://marketresearchtelecast.com/data-science-apache-hop-orchestration-platform-1-0-works-without-code/177381/.
