Azure Event Hubs

| | |
|---|---|
| Owner | Microsoft |
| Website | azure |
| Commercial | Yes |
| Registration | Required (included in free tier layer) |
| Launched | 2014 |
| Current status | Active |

Azure Event Hubs is a real-time data streaming message broker offered as a Platform as a Service (PaaS) that aims to support large-scale event ingestion and streaming. Azure Event Hubs supports streaming data using open standards such as Advanced Message Queuing Protocol (AMQP), HTTPS, and Apache Kafka.

History

Azure Event Hubs was developed by Microsoft and launched[1] in 2014 as a PaaS to support event streaming using open standards such as AMQP and HTTPS. Support for Apache Kafka was added[2] in 2018.

Overview

Modern software applications often need to analyze streams of events or data that are continuously generated by different sources in real time, and to capture actionable insights from them. Event streaming analytics is widely used to:

- Perform web clickstream, anomaly, and fraud detection

- Generate insights from application logs

- Derive business insights from market data feeds

- Process IoT sensor data

- Process game telemetry

- Perform real-time ETL

- Process change data capture feeds and more.

Azure Event Hubs aims to provide an event streaming Platform as a Service that enables client applications to ingest streams of events and process them with multiple downstream applications.

Architecture

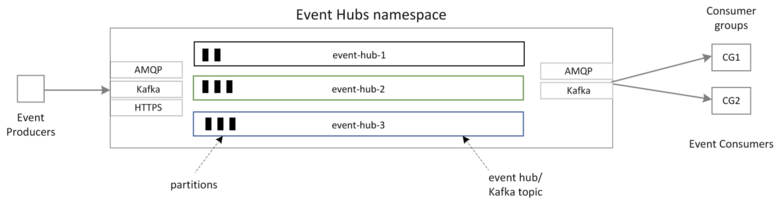

Event Hubs represents the "front door" for an event pipeline: it sits between event producers and event consumers to decouple the production of an event stream from the consumption of those events. Event producers can send data to Event Hubs using the AMQP, Apache Kafka, and HTTPS protocols. Event consumers can consume event streams using AMQP or Apache Kafka.

Events are organized into event hubs (or topics, in Kafka parlance), and each event hub can have one or more partitions. The Event Hubs architecture is based on the partitioned consumer model, in which users rely on topic partitions to parallelize consumption and stream large volumes of data.
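The partitioned model can be illustrated with a small sketch. It assumes that each event carries a partition key and that a stable hash of the key selects the partition; the SHA-256 hash and the names `partition_for` and `route` are illustrative stand-ins, not the hashing scheme Event Hubs uses internally.

```python
import hashlib


def partition_for(partition_key: str, partition_count: int) -> int:
    """Map a partition key to a partition index with a stable hash.

    Assumption: SHA-256 is only a stand-in for the service's internal hash.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def route(events, partition_count):
    """Distribute (key, body) pairs across partitions, keeping per-key order."""
    partitions = [[] for _ in range(partition_count)]
    for key, body in events:
        partitions[partition_for(key, partition_count)].append(body)
    return partitions
```

Because events with the same key always land in the same partition, their relative order is preserved there, while different partitions can be read in parallel by separate consumers.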

On the event consumer side, consumer groups are used for event consumption. A consumer group is a view of an entire event hub. Consumer groups therefore enable multiple consuming applications to each have a separate view of the event stream, and to read the stream independently at their own pace and with their own offsets. All event hubs or Kafka topics are grouped into a management component known as a "namespace". An Event Hubs namespace is used to configure scaling units, security, and availability for its event streams.
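The idea of consumer groups reading the same stream at independent offsets can be sketched with a toy in-memory model. The class `ToyEventHub` and its methods are hypothetical and model a single partition only; they are not part of any Event Hubs SDK.

```python
class ToyEventHub:
    """Toy single-partition event log with one offset per consumer group."""

    def __init__(self):
        self._log = []       # append-only list of events
        self._offsets = {}   # consumer group name -> next index to read

    def send(self, event):
        self._log.append(event)

    def receive(self, consumer_group, max_events=1):
        """Return up to max_events for this group and advance only its offset."""
        start = self._offsets.get(consumer_group, 0)
        batch = self._log[start:start + max_events]
        self._offsets[consumer_group] = start + len(batch)
        return batch


hub = ToyEventHub()
for event in ("a", "b", "c"):
    hub.send(event)

fast = hub.receive("analytics", max_events=2)  # reads the first two events
slow = hub.receive("archive", max_events=1)    # still starts from the beginning
```

Reading from one group never removes events or moves the position of another group, which is what lets several downstream applications process the same stream at their own pace.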

Event Hubs for Apache Kafka

Event Hubs supports[3] most Apache Kafka workloads, so existing Kafka clients and applications can integrate with Event Hubs.

Event Hubs does not run or host Apache Kafka brokers. Instead, the Event Hubs broker implementation supports Apache Kafka as a protocol head. As a result, several Kafka features[4] are not compatible with Event Hubs.

Known limitations

Azure Event Hubs is available only as a Platform as a Service (PaaS), so users cannot run it on-premises or in hybrid deployments. In addition, Event Hubs is not 100% compatible with Apache Kafka features. The known feature differences are described in the Event Hubs documentation[4].

Building event streaming pipelines

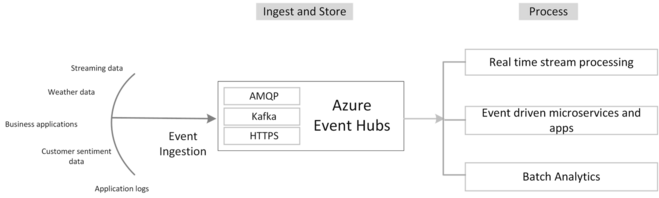

Using Azure Event Hubs as the data ingestion layer, users can build event streaming pipelines that consist of real-time streaming applications, event-driven microservices, or applications that perform batch analytics.

Users can consume data using any generic client application that is based on either Apache Kafka clients or the Azure Event Hubs SDK. Data ingested into Event Hubs can also be consumed by other frameworks, such as Apache Spark, and by other Azure services.

Schema Registry for schema validation

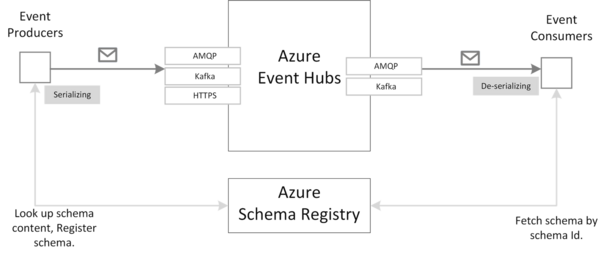

Client applications of Event Hubs can use the built-in schema registry to serialize and deserialize events using schema formats such as Apache Avro.

It also provides a simple governance framework for reusable schemas and defines the relationships between schemas through a grouping construct. It’s also compatible with Apache Kafka applications that use schema-driven serialization.
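As a conceptual illustration only, the sketch below models a registry that stores schemas under a group and a serializer that validates records against a registered schema before encoding them. Everything in it is hypothetical: the `ToySchemaRegistry` class, the `group/name` identifier format, and the use of JSON in place of Avro. It does not reflect the wire format or API of the actual Event Hubs schema registry.

```python
import json


class ToySchemaRegistry:
    """In-memory stand-in: schemas are stored under (group, name)."""

    def __init__(self):
        self._schemas = {}

    def register(self, group, name, fields):
        """Store a schema (field name -> Python type) and return its id."""
        self._schemas[(group, name)] = dict(fields)
        return f"{group}/{name}"

    def get(self, schema_id):
        group, name = schema_id.split("/", 1)
        return self._schemas[(group, name)]  # KeyError if not registered


def serialize(registry, schema_id, record):
    """Validate the record against the schema, then encode it as JSON bytes."""
    fields = registry.get(schema_id)
    if set(record) != set(fields):
        raise ValueError("record fields do not match schema")
    for key, expected_type in fields.items():
        if not isinstance(record[key], expected_type):
            raise ValueError(f"field {key!r} has the wrong type")
    return json.dumps({"schema": schema_id, "data": record}).encode("utf-8")


def deserialize(registry, payload):
    """Decode a payload after checking that its schema is registered."""
    envelope = json.loads(payload)
    registry.get(envelope["schema"])
    return envelope["data"]
```

Producers and consumers that share the registry agree on the shape of each event without embedding the full schema in every message, which is the main benefit a schema registry is meant to provide.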

Client SDKs

To send and receive events from Azure Event Hubs, users can either use the native client SDK or any Kafka client SDK which is compatible with Apache Kafka API version 1.0 and above.

Using Event Hubs SDK/AMQP

Azure Event Hubs offers runtime client libraries for a wide range of programming languages.
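As one possible illustration, the sketch below sends a batch of JSON events with the `azure-eventhub` Python package. The connection string, the event hub name, and the helper names `encode_events` and `send_events` are placeholders chosen for this example; running `send_events` requires the package to be installed and a reachable Event Hubs namespace.

```python
import json


def encode_events(readings):
    """Serialize each reading (a dict) to a UTF-8 JSON payload."""
    return [json.dumps(r, sort_keys=True).encode("utf-8") for r in readings]


def send_events(connection_string, event_hub_name, payloads):
    """Send the payloads as a single batch to the given event hub."""
    # Imported lazily so encode_events can be used without the SDK installed.
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        connection_string, eventhub_name=event_hub_name
    )
    with producer:
        batch = producer.create_batch()
        for payload in payloads:
            # add() raises ValueError when the batch has reached its size limit.
            batch.add(EventData(payload))
        producer.send_batch(batch)
```

A caller would pass its own namespace connection string and event hub name, for example `send_events(conn_str, "telemetry", encode_events([{"device": "d1", "temperature": 21.5}]))`, where both arguments are assumed values.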

Using Apache Kafka APIs

Azure Event Hubs is compatible with any Kafka SDK or client library. Apache Kafka producer and consumer samples are available for a wide range of programming languages and frameworks.
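Pointing an existing Kafka client at Event Hubs is mostly a matter of configuration. The sketch below builds librdkafka-style settings, assuming the commonly documented Kafka endpoint on port 9093 and SASL PLAIN authentication with a namespace connection string; the function name and its parameters are hypothetical.

```python
def kafka_config_for_event_hubs(namespace, connection_string, group_id=None):
    """Build Kafka client settings that target an Event Hubs namespace.

    Assumption: the namespace exposes its Kafka endpoint on port 9093 and
    accepts the connection string as the SASL PLAIN password.
    """
    config = {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal value, not a variable
        "sasl.password": connection_string,
    }
    if group_id is not None:
        config["group.id"] = group_id  # only consumers need a group id
    return config
```

The resulting dictionary could then be handed to a Kafka client that accepts this style of configuration, such as the `Producer` or `Consumer` classes of the `confluent-kafka` package, with the event hub name used as the topic.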

See also

- Apache Kafka

- Apache Pulsar

- AWS Kinesis

- Event-driven SOA

- Enterprise Integration Patterns

- Enterprise messaging system

- Streaming analytics

References

- [1] "Announcing Azure Event Hubs General Availability". https://azure.microsoft.com/en-us/blog/announcing-azure-event-hubs-general-availability/.

- [2] "Announcing the general availability of Azure Event Hubs for Apache Kafka®". https://azure.microsoft.com/en-us/blog/announcing-the-general-availability-of-azure-event-hubs-for-apache-kafka/.

- [3] "Event-Driven Architecture with Apache Kafka for .NET Developers Part 3: Azure Event Hubs - DZone". https://thecloudblog.net/post/event-driven-architecture-with-apache-kafka-for-net-developers-part-3-azure-event-hubs/.

- [4] spelluru. "Use event hub from Apache Kafka app - Azure Event Hubs". https://learn.microsoft.com/en-us/azure/event-hubs/event-hubs-for-kafka-ecosystem-overview.