Software:OpenSAF

| | |
|---|---|
| Original author(s) | Motorola |
| Developer(s) | OpenSAF Foundation |
| Initial release | 31 June 2007 |
| Stable release | 5.21.03 / 1 March 2021 |
| Written in | C++ |
| Type | Cluster management software |

OpenSAF (commonly styled SAF, the Service Availability Framework[1]) is an open-source service-orchestration system for automating computer application deployment, scaling, and management. OpenSAF is consistent with, and expands upon, Service Availability Forum (SAF) and SCOPE Alliance standards.[2]

It was originally designed by Motorola ECC, and is maintained by the OpenSAF Project.[3] OpenSAF is the most complete implementation of the SAF AIS specifications, providing a platform for automating deployment, scaling, and operations of application services across clusters of hosts.[4] It works across a range of virtualization tools and runs services in a cluster, often integrating with JVM, Vagrant, and/or Docker runtimes. OpenSAF originally exposed standard C application programming interfaces (APIs), but has since added Java and Python bindings.[2]

OpenSAF is focused on Service Availability beyond High Availability (HA) requirements. Although little formal research has been published on improving high availability and fault tolerance techniques for containers and cloud,[5] research groups are actively exploring these challenges with OpenSAF.

History

OpenSAF was founded by an industry consortium, including Ericsson, HP, and Nokia Siemens Networks, and was first announced on February 28, 2007 by Motorola ECC, which was later acquired by Emerson Network Power.[6] The OpenSAF Foundation was officially launched on January 22, 2008. Membership evolved to include Emerson Network Power, Sun Microsystems, ENEA, Wind River, Huawei, IP Infusion, Tail-f, Aricent, GoAhead Software, and Rancore Technologies.[2][7] GoAhead Software joined OpenSAF in 2010 before being acquired by Oracle.[8] OpenSAF's development and design are heavily influenced by mission-critical system requirements, including Carrier Grade Linux, SAF, ATCA, and Hardware Platform Interface. OpenSAF was a milestone in accelerating adoption of Linux in telecommunications and embedded systems.[9]

The goal of the Foundation was to accelerate the adoption of OpenSAF in commercial products. The OpenSAF community held conferences between 2008 and 2010: the first was hosted by Nokia Siemens Networks in Munich (Germany), the second by Huawei in Shenzhen (China), and the third by HP in Palo Alto (USA). In February 2010, the first commercial deployment of OpenSAF in carrier networks was announced.[10] Academic and industry groups have independently published books describing OpenSAF-based solutions.[2][11] A growing body of research in service availability is accelerating the development of OpenSAF features supporting mission-critical cloud and microservices deployments, and service orchestration.[12][13]

OpenSAF 1.0 was released on January 22, 2008. It comprised the NetPlane Core Service (NCS) codebase contributed by Motorola ECC.[14] The OpenSAF Foundation was established alongside the OpenSAF 1.0 release.[6] OpenSAF 2.0, released on August 12, 2008, was the first release developed by the OpenSAF community. This release included the Log service and 64-bit support.[14] OpenSAF 3.0, released on June 17, 2009, included platform management, usability improvements, and Java API support.[15]

OpenSAF 4.0 was a milestone release in July 2010.[2] Nicknamed the "Architecture release", it introduced significant changes, including closing functional gaps, settling the internal architecture, enabling in-service upgrade, clarifying APIs, and improving modularity.[16] Receiving significant interest from industry and academia, OpenSAF held two community conferences in 2011, one hosted by MIT in Boston, MA, and a second hosted by Ericsson in Stockholm.

| Version | Release date | Notes |
|---|---|---|
| 1.0 | 22 January 2008 | Original NetPlane Core Service (NCS) codebase contributed by Motorola ECC to the OpenSAF project. |
| 2.0 | 12 August 2008 | |
| 3.0 | 17 June 2009 | The second release (counting from v2.0 onwards); took about 1.5 years, with contributions from Wind River Systems.[17] |
| 4.0 | 1 July 2010 | The "Architecture" release. First viable carrier-grade deployment candidate.[18] |
| 4.2 | 16 March 2012 | Improved manageability, enhanced availability modelling. |
| 5.0 | 5 May 2016 | A significant release. Support for spare system controllers (2N + spares), headless cluster (cloud resilience), enhanced Python bindings, node name logging.[19] |
| 5.20 | 1 June 2021 | |

Concepts

OpenSAF defines a set of building blocks that collectively provide a mechanism to manage Service Availability (SA) of applications based on resource-capability models.[20] SA and High Availability (HA) are both expressed as the probability of a service being available at a random point in time; mission-critical systems require at least 99.999% (five nines) availability. HA and SA are essentially the same, but SA goes further (e.g. in-service upgrades of hardware and software).[21] OpenSAF is designed for loosely coupled systems with fast interconnections between nodes (e.g. using TIPC/TCP),[22] and is extensible to meet different workloads; components can communicate among themselves using any protocol. This extensibility is provided in large part by the IMM API, used by internal components and core services. The platform can exert control over compute and storage resources by defining them as objects, to be managed as (component service) instances and/or node constraints.[2][20][23]
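As a worked example of the five-nines figure above, the following sketch converts an availability target into the downtime it permits per year. The function name and the 365-day year are assumptions made for illustration; nothing here is part of OpenSAF.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: a non-leap year


def allowed_downtime_minutes(availability: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a probability between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for label, target in [("three nines", 0.999), ("five nines", 0.99999)]:
        print(f"{label}: {allowed_downtime_minutes(target):.2f} minutes/year")
```

Under these assumptions, five nines leaves a budget of roughly 5.26 minutes of downtime per year, compared with about 525.6 minutes for three nines.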

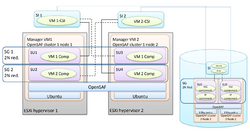

OpenSAF software is distributed in nature, following the primary/replica architecture. An OpenSAF cluster has two types of nodes: system controllers, which make up the control plane, and payload nodes, which run the workload. One system controller runs in "active" mode, another in "standby" mode, and any remaining system controllers are spares, ready to take over the active or standby role in case of a fault. Nodes can run headless, without a control plane, adding cloud resilience.[16][24]
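The active/standby/spare arrangement described above can be sketched as a small state model. This is an illustrative toy, not OpenSAF code: the class name, the node names, and the promotion order (standby becomes active, the first spare becomes standby) are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ControllerPool:
    """Hypothetical model: one active controller, one standby, the rest spares."""
    active: Optional[str]
    standby: Optional[str]
    spares: List[str] = field(default_factory=list)

    def fail(self, node: str) -> None:
        """Remove a faulty controller and promote the others to fill the gap."""
        if node == self.active:
            self.active = None
        elif node == self.standby:
            self.standby = None
        elif node in self.spares:
            self.spares.remove(node)
        else:
            raise ValueError(f"unknown controller: {node}")
        # Assumed promotion order: standby -> active, then spares fill the gaps.
        if self.active is None:
            self.active, self.standby = self.standby, None
        if self.active is None and self.spares:
            self.active = self.spares.pop(0)
        if self.standby is None and self.spares:
            self.standby = self.spares.pop(0)

    @property
    def headless(self) -> bool:
        """True when no controller is left and the cluster runs headless."""
        return self.active is None
```

For instance, a pool created as `ControllerPool("SC-1", "SC-2", ["SC-3"])` ends up with `SC-2` active and `SC-3` standby after `fail("SC-1")`, and reports `headless` once every controller has failed.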

System Model

The OpenSAF System Model is the key enabler API, allowing OpenSAF to process and validate requests and to update the state of objects in the AMF model, which in turn allows directors to schedule workloads and service groups across worker/payload nodes. AMF behavior is changed via a configuration object.[24] Services can use the ‘No Redundancy’, 2N, N+M, N-way, and N-way Active redundancy models.[20] OpenSAF lacks obvious modeling toolchains to simplify the design and generation of AMF configuration models. Ongoing research to address this gap[25][26] still needs to deliver ecosystem tools that better support modeling and automation of carrier-grade and Cloud Native Computing Foundation use cases.

Control Plane

The OpenSAF System Controller (SC) is the main controlling unit of the cluster, managing its workload and directing communication across the system. The OpenSAF control plane consists of various components, each its own process, that can run either on a single SC node or on multiple SC nodes, supporting high-availability clusters and service availability.[2][24] The various components of the OpenSAF control plane are as follows:

- Information Model Manager (IMM) is a persistent data store that reliably stores the configuration data of the cluster, representing the overall state of the cluster at any given time. It provides a means to define and manage middleware and application configuration and state information in the form of managed objects and their corresponding attributes.[23] IMM is implemented as an in-memory database that replicates its data on all nodes, and it can use SQLite as a persistent backend. Like Apache ZooKeeper, IMM prioritizes transaction-level consistency of configuration data over availability/performance (see CAP theorem).[2][23][27] The IMM service follows the three-tier OpenSAF "Service Director" framework, comprising the IMM Director (IMMD), IMM Node Director (IMMND), and IMM Agent library (IMMA). IMMD is implemented as a daemon on controllers using a 2N redundancy model: the active controller instance is the "primary replica", and the standby controller instance is kept up to date by a message-based checkpointing service. IMMD tracks cluster membership (using MDS) and provides data store access control and an administrative interface for all OpenSAF services.[28][2]

- Availability Management Framework (AMF) serves as the high-availability and workload-management framework, with robust support (in conjunction with other AIS services) for the full fault management lifecycle (detection, isolation, recovery, repair, and notification). AMF follows the three-tier OpenSAF "Service Director" framework, comprising a director (AmfD), node directors (AmfND), and agents (AmfA), plus an internal watchdog for AmfND protection. The active AmfD service is responsible for realizing service configuration, persisted in IMM, across system/cluster scope. Node directors perform the same function for any component within their scope.[2] AMF ensures state models are in agreement by acting as the main information and API bridge across all components. It monitors the IMM state, applying configuration changes or restoring any divergence back to the "wanted configuration", and uses fault management escalation policies to schedule the creation of the wanted deployment.[16]

- AMF Directors (AmfD) are schedulers that decide which nodes an unscheduled Service Group (a redundant service instance) runs on. This decision is based on current vs. "desired" availability and capability models, service redundancy models, and constraints such as quality of service and affinity/anti-affinity. AMF directors match resource "supply" to workload "demand", and their behavior can be manipulated through an IMM system object.[2][16]
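The IMM item in the list above describes managed objects with attributes and transaction-level consistency. The following is a minimal sketch of that idea only: an in-memory object store that applies a batch of changes entirely or not at all. It does not use or reproduce the IMM API; `ConfigStore`, `create`, `modify`, and the object names are hypothetical.

```python
import copy
from typing import Callable, Dict, List

ObjectStore = Dict[str, Dict[str, object]]
Change = Callable[[ObjectStore], None]


class ConfigStore:
    """Hypothetical in-memory store of managed objects keyed by name."""

    def __init__(self) -> None:
        self._objects: ObjectStore = {}

    def get(self, name: str) -> Dict[str, object]:
        """Return a copy of an object's attributes."""
        return copy.deepcopy(self._objects[name])

    def apply(self, changes: List[Change]) -> bool:
        """Apply every change in the batch, or none of them."""
        draft = copy.deepcopy(self._objects)
        try:
            for change in changes:
                change(draft)
        except (KeyError, ValueError):
            return False  # abort: the committed state is left untouched
        self._objects = draft
        return True


def create(name: str, attrs: Dict[str, object]) -> Change:
    """Build a change that creates a new managed object."""
    def change(store: ObjectStore) -> None:
        if name in store:
            raise ValueError(f"{name} already exists")
        store[name] = dict(attrs)
    return change


def modify(name: str, attr: str, value: object) -> Change:
    """Build a change that sets one attribute of an existing object."""
    def change(store: ObjectStore) -> None:
        store[name][attr] = value  # raises KeyError if the object is missing
    return change
```

A batch such as `[modify("su1", "rank", 2), modify("missing", "rank", 1)]` is rejected as a whole, so `su1` keeps its previous `rank`. Working on a copy and swapping it in on success is one simple way to get all-or-nothing behavior; a replicated store would need considerably more machinery.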

Component

The Component is a logical entity of the AMF system model and represents a normalized view of a computing resource such as processes, drivers, or storage. Components are grouped into logical Service Units (SU), according to fault inter-dependencies, and associated with a Node. The SU is an instantiable unit of workload controlled by an AMF redundancy model, and is in either the active, standby, or failed state. SUs of the same type are grouped into Service Groups (SG), which exhibit particular redundancy modeling characteristics. SUs within an SG are assigned to Service Instances (SI) and given an availability state of active or standby. SIs are scalable, redundant logical services protected by AMF.[2][16]
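The containment relationships described above (Components inside Service Units, Service Units inside a Service Group, and per-Service-Instance active/standby assignments) can be sketched with plain data classes. This is an illustrative data model under assumed names, not the AMF information model or any OpenSAF binding.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Component:
    name: str  # e.g. a process, driver, or storage resource


@dataclass
class ServiceUnit:
    name: str
    node: str  # the node this SU is associated with
    components: List[Component] = field(default_factory=list)


@dataclass
class ServiceGroup:
    name: str
    redundancy_model: str  # e.g. "2N" or "N+M"
    service_units: List[ServiceUnit] = field(default_factory=list)
    # Service Instance name -> {SU name: "active" or "standby"}
    assignments: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def assign(self, si_name: str, su: ServiceUnit, ha_state: str) -> None:
        """Record that an SU of this group serves an SI in the given state."""
        if su not in self.service_units:
            raise ValueError(f"{su.name} is not a member of {self.name}")
        if ha_state not in ("active", "standby"):
            raise ValueError(f"unsupported state: {ha_state}")
        self.assignments.setdefault(si_name, {})[su.name] = ha_state

    def units_in_state(self, si_name: str, ha_state: str) -> List[str]:
        """List the SUs currently holding the given state for an SI."""
        return sorted(
            su for su, state in self.assignments.get(si_name, {}).items()
            if state == ha_state
        )
```

With two SUs in a group, assigning one as `"active"` and the other as `"standby"` for the same SI mirrors a 2N arrangement; the model deliberately does not enforce any redundancy rules.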

Node

A Node is a compute instance (a blade, hypervisor, or VM) where service instances (workload) are deployed. The set of nodes belonging to the same communication subnet (no routing) comprise the logical Cluster. Every node in the cluster must run an execution environment for services, as well as OpenSAF services listed below:

- Node director (AmfND): The AmfND is responsible for the running state of each node, ensuring all active SUs on that node are healthy. It takes care of starting, stopping, and maintaining component service instances (CSIs) and/or SUs organized into SGs, as directed by the control plane. The AmfND service enforces the desired AMF configuration, persisted in IMM, on the node. When a node failure is detected, the director (AmfD) observes this state change and launches a service unit on another eligible healthy node.[2][16]

- Non-SA-Aware component: OpenSAF can provide HA (but not SA) for instantiable components originating from cloud computing, Containerization, Virtualization, and JVM domains, by modeling the component and service lifecycle commands (start/stop/health check) in the AMF Model.[2]

- Container-contained: An AMF container-contained component can reside inside an SU. The container-contained component is the lowest level of runtime that can be instantiated. The SA-Aware container-contained component currently targets a Java Virtual Machine (JVM) per JSR 319.[29][2]
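The node-failure behavior described in the list above (a service unit from a failed node is launched on another eligible healthy node) can be sketched as a placement function. The least-loaded selection policy, the function name, and the node names are assumptions chosen for illustration; the source does not specify how AmfD picks the target node.

```python
from typing import Dict, List


def reschedule(placement: Dict[str, str], failed_node: str,
               healthy_nodes: List[str]) -> Dict[str, str]:
    """Return a new SU -> node placement with SUs moved off the failed node.

    Assumed policy: each displaced SU goes to the healthy node that
    currently hosts the fewest SUs (ties broken by node name).
    """
    candidates = [n for n in healthy_nodes if n != failed_node]
    if not candidates:
        raise RuntimeError("no healthy node available")
    load = {n: 0 for n in candidates}
    for node in placement.values():
        if node in load:
            load[node] += 1
    new_placement = dict(placement)
    for su in sorted(placement):
        if placement[su] == failed_node:
            target = min(candidates, key=lambda n: (load[n], n))
            new_placement[su] = target
            load[target] += 1
    return new_placement
```

The function returns a new mapping rather than mutating its input, which keeps the "current" and "desired" placements available side by side for comparison.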

Service Unit

The basic scheduling unit in OpenSAF is a Service Unit (SU), a grouping of one or more components that are guaranteed to be co-located on the same node. SUs are not assigned IP addresses by default but may contain a component that is. An SU can be administratively managed using an object address, either through the AMF CLI or by delegating management to AMF. AmfND monitors the state of SUs and, if one is not in the desired state, redeploys it to the same node if possible. AmfD can start the SU on another Node if required by the redundancy model.[2] An SU can define a volume, such as a local disk directory or a network disk, and expose it to the Components in the SU. Such volumes are also the basis for Persistent Storage.[2][16]

Service Group

The purpose of a Service Group is to maintain a stable set of replica SUs running at any given time. It can be used to guarantee the availability of a specified number of identical SUs based on the configured redundancy model: N-Way, N-way-Active, 2N, N+M, or 'No-redundancy'. The SG is a grouping mechanism that lets OpenSAF maintain the number of instances declared for a given SG. The definition of an SG identifies all associated SUs and their state (active, standby, failed).[2][16]

Service Instance

An OpenSAF Service Instance (SI) is a set of SUs that work together, such as one tier of a multi-tier application. The set of SUs that protects a service is defined by the SG. A multi-instance SG (N-way-active, N-way, N+M) requires a stable IP address, DNS name, and load balancer to distribute the traffic of that IP address among the active SUs in that SG (even if failures cause the SUs to move from machine to machine). By default, a service is exposed inside a cluster (e.g. SU[TypeA] is grouped into one SG, with requests from SU[TypeB] load-balanced among them), but a service can also be exposed outside a cluster (e.g., for clients to reach front-end SUs).[2][16]
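To illustrate distributing traffic among the active SUs of an SG even as their states change, here is a minimal round-robin sketch. Round-robin is an assumed policy picked for simplicity, and the class and SU names are hypothetical; the source does not say which load-balancing scheme is used.

```python
from typing import Dict


class RoundRobinBalancer:
    """Hypothetical balancer that rotates requests across active SUs."""

    def __init__(self) -> None:
        self._counter = 0

    def pick(self, su_states: Dict[str, str]) -> str:
        """Choose the next active SU; standby and failed SUs are skipped."""
        active = sorted(su for su, state in su_states.items() if state == "active")
        if not active:
            raise RuntimeError("no active service unit available")
        choice = active[self._counter % len(active)]
        self._counter += 1
        return choice
```

Because the set of active SUs is recomputed on every call, an SU that moves to the failed state simply drops out of the rotation on the next request.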

Volumes

Filesystems available to OpenSAF SUs are potentially ephemeral storage by default: if the node is destroyed or recreated, the data on that Node is lost. One solution is Network File System (NFS) shared storage, accessible to all payload nodes.[30] Other technical solutions are possible; what is important is that Volumes (file share, mount point) can be modeled in AMF. Highly available Volumes provide persistent storage that exists for the lifetime of the SU itself. This storage can also be used as shared disk space for SUs within the SG. Volumes mounted at specific mount points on the Node are owned by a specific SG, so a volume instance cannot be shared with another SG using the same file system mount point.

Architecture

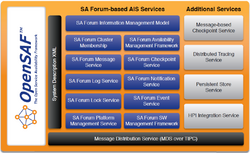

The OpenSAF architecture is distributed and runs in a cluster of logical nodes. All OpenSAF services have either a 3-tier or a 2-tier architecture. In the 3-tier architecture, OpenSAF services are partitioned into a service Director, a service Node Director, and an Agent. The Director is the part of an OpenSAF service that holds the central service intelligence; typically it is a process on the controller node. The Node Directors coordinate node-scoped service activities, such as messaging with the central Director and local Agents. The Agent makes service capabilities available to clients by way of a (shared) linkable library that exposes well-defined service APIs to application processes. Agents typically talk to their service Node Directors or Servers. The OpenSAF services are modularly classified as follows:[22]

- Core services – AMF, CLM, IMM, LOG, NTF

- Optional services – EVT, CKPT, LCK, MSG, PLM, SMF

The optional services can be enabled or disabled during the build/packaging of OpenSAF. OpenSAF can be configured to use TCP or TIPC as the underlying transport. Nodes can be dynamically added to or deleted from the OpenSAF cluster at run time. An OpenSAF cluster scales well up to several hundred nodes. OpenSAF supports the following language bindings for the AIS interface APIs:

- C/C++

- Java bindings (for AMF and CLM services)

- Python bindings

OpenSAF provides command-line tools and utilities for the management of the OpenSAF cluster and applications.

The modular architecture enables the addition of new services as well as the adaptation of the existing services. All OpenSAF services are designed to support in-service upgrades.
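The three-tier split described in this section (Agent library in the application, Node Director per node, central Director) can be sketched as three cooperating classes. This is a toy, in-process illustration of the layering only; the class and method names are assumptions and no real transport (TCP/TIPC) is involved.

```python
from typing import Dict, List


class Director:
    """Central tier: keeps the cluster-wide view of registered clients."""

    def __init__(self) -> None:
        self.registry: Dict[str, List[str]] = {}  # node name -> client names

    def report(self, node: str, client: str) -> None:
        self.registry.setdefault(node, []).append(client)


class NodeDirector:
    """Node-scoped tier: tracks local clients and relays to the Director."""

    def __init__(self, node: str, director: Director) -> None:
        self.node = node
        self.director = director
        self.local_clients: List[str] = []

    def register(self, client: str) -> None:
        self.local_clients.append(client)
        self.director.report(self.node, client)


class Agent:
    """Library tier: what an application process would link against."""

    def __init__(self, node_director: NodeDirector) -> None:
        self._node_director = node_director

    def initialize(self, client: str) -> None:
        # The Agent talks only to its local Node Director, never to the Director.
        self._node_director.register(client)
```

The point of the layering is that applications depend only on the Agent interface, so node-local and cluster-wide logic can change behind it without touching application code.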

Services

The following SA Forum AIS services are implemented by OpenSAF 5.0:[23]

- Availability Management Framework (AMF) - described above.

- Cluster Membership Service (CLM) – Determines whether a node is healthy enough to be a part of the cluster. Provides a mechanism to track the cluster nodes by interacting with PLM for tracking the status of underlying OS/hardware.

- Checkpoint Service (CKPT) – For saving application states and incremental updates that can be used to restore service during failover or switchover.

- Event Service (EVT) – Provides a publish-subscribe messaging model that can be used for keeping applications and management entities in sync about events happening in the cluster.

- Information Model Management Service (IMM) - described above.

- Lock Service (LCK) – Supports a distributed lock service model with support for shared locks and exclusive locks.

- Log Service (LOG) – Means for recording (in log files) the functional changes happening in the cluster, with support for logging in diverse log record formats. Not for debugging or error tracking. Supports logging of alarms and notifications occurring in the cluster.

- Messaging Service (MSG) – Supports cluster-wide messaging mechanism with multiple senders – single receiver as well as message-group mechanisms.

- Notification Service (NTF) – Provides a producer/subscriber model for system management notifications to enable fault handling. Used for alarm and fault notifications with support for recording history for fault analysis. Supports notification formats of ITU-T X.730, X.731, X.733, X.736 recommendations.

- Platform Management Service (PLM) – provides a mechanism to configure a logical view of the underlying hardware (FRU) and the OS. Provides a mechanism to track the status of the OS, the hardware (FRU) and to perform administrative operations in coordination with the OpenSAF services and applications.

- Software Management Framework (SMF) – Support for an automated in-service upgrade of application, middleware, and OS across the cluster.
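As an illustration of the shared and exclusive lock modes mentioned for the Lock Service in the list above, the sketch below models the compatibility rule on a single resource: any number of shared holders, or exactly one exclusive holder. It is a single-process, non-blocking toy under assumed names, not the LCK API and not a distributed lock.

```python
from typing import Optional, Set


class LockResource:
    """Hypothetical lock resource with shared and exclusive modes."""

    def __init__(self) -> None:
        self._shared: Set[str] = set()
        self._exclusive: Optional[str] = None

    def try_lock(self, owner: str, mode: str) -> bool:
        """Grant the lock if it is compatible with current holders."""
        if mode == "shared":
            if self._exclusive is not None:
                return False
            self._shared.add(owner)
            return True
        if mode == "exclusive":
            if self._exclusive is not None or self._shared:
                return False
            self._exclusive = owner
            return True
        raise ValueError(f"unknown lock mode: {mode}")

    def unlock(self, owner: str) -> None:
        """Release whatever lock the owner holds on this resource."""
        self._shared.discard(owner)
        if self._exclusive == owner:
            self._exclusive = None
```

A request that cannot be granted returns `False` immediately; a real lock service would also need waiting, ordering, and handling of holders that fail while holding a lock.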

Supporters

Network Equipment Providers will be the primary users of products based on the OpenSAF code base, integrating them into their products for network service providers, carriers, and operators. Many network equipment providers have demonstrated their support for OpenSAF by joining the Foundation and/or contributing to the open-source project. Current Foundation members include Ericsson, HP, and Oracle. Several providers of computing and communications technology have also indicated support for the OpenSAF initiative, including OpenClovis SAFplus, Emerson Network Power Embedded Computing, Continuous Computing, Wind River, IP Infusion, Tail-f, Aricent, Rancore Technologies, GoAhead Software, and MontaVista Software.

Uses

OpenSAF is commonly used as a way to achieve carrier-grade (five-nines) service availability. OpenSAF is functionally complete but lacks the ecosystem of modeling tools available to other open-source solutions like Kubernetes and Docker Swarm.

See also

- SAForum

- SCOPE Alliance

- OpenHPI

- List of cluster management software

- Cloud Native Computing Foundation

References

- [1] "OpenSAF/About" (in en). https://sourceforge.net/p/opensaf/wiki/About%20OpenSAF/.

- [2] Maria Toeroe; Francis Tam (2012). Service Availability: Principles and Practice. John Wiley & Sons. ISBN 978-1-1199-4167-5. https://books.google.com/books?id=Ql1oHlKkHOsC.

- [3] "OpenSAF Readme" (in en). https://sourceforge.net/p/opensaf/code/ci/develop/tree/README.

- [4] "OpenSAF". 19 March 2014. https://opensaf.sourceforge.io/index.html.

- [5] "Fault-Tolerant Containers Using NiLiCon" (in en). http://web.cs.ucla.edu/~tamir/papers/ipdps20.pdf.

- [6] Carolyn Mathas (28 February 2007). "OpenSAF project" (in en). https://www.eetimes.com/opensaf-project/#.

- [7] ED News Staff (2007). "Industry Leaders To Establish Consortium On OpenSAF Project". https://www.electronicdesign.com/news/article/21753060/industry-leaders-to-establish-consortium-on-opensaf-project.

- [8] OpenSAF Foundation (2010). "GoAhead Software Joins OpenSAF(TM)" (Press release). Archived from the original on 2020-12-29.

- [9] cook (2007). "Motorola launches open-source High Availability Operating Environment". https://lwn.net/Articles/224074/.

- [10] OpenSAF Foundation (2010). "OpenSAF in Commercial Deployment" (Press release). Archived from the original on 2018-06-25.

- [11] Madhusanka Liyanage; Andrei Gurtov; Mika Ylianttila (2015). Software Defined Mobile Networks (SDMN): Beyond LTE Network Architecture. John Wiley & Sons, Ltd. doi:10.1002/9781118900253. ISBN 9781118900253. https://onlinelibrary.wiley.com/doi/book/10.1002/9781118900253.

- [12] Yanal Alahmad; Tariq Daradkeh; Anjali Agarwal (2018). "Availability-Aware Container Scheduler for Application Services in Cloud". 2018 IEEE 37th International Performance Computing and Communications Conference (IPCCC). pp. 1–6. doi:10.1109/PCCC.2018.8711295. ISBN 978-1-5386-6808-5.

- [13] Leila Abdollahi Vayghan; Mohamed Aymen Saied; Maria Toeroe; Ferhat Khendek (2019). "Kubernetes as an Availability Manager for Microservice Applications". Journal of Network and Computer Applications.

- [14] "OpenSAF Releases 2.0". https://www.lightreading.com/atca/opensaf-releases-20/d/d-id/660133.

- [15] "Open source Carrier Grade Linux middleware rev'd (LinuxDevices)". https://lwn.net/Articles/337766/.

- [16] "OpenSAF Release 4 Overview "The Architecture Release"". https://docs.huihoo.com/opensaf/developer-days-2010/3-OpenSAF-Release-4-Overview.pdf.

- [17] Hans J. Rauscher (22 June 2009). "OpenSAF 3.0 released". https://blogs.windriver.com/wind_river_blog/2009/06/opensaf-30-released/.

- [18] "OpenSAF Project Releases Major Update to High Availability Middleware". http://picmg.mil-embedded.com/news/opensaf-update-high-availability-middleware/.

- [19] "Announcement of 5.0.0 GA release and 4.7.1, 4.6.2 maintenance releases". https://sourceforge.net/p/opensaf/news/2016/05/announcement-of-50-ga-release-and-471-462-maintenance-releases/?version=4.

- [20] SA Forum (2010). "SAI-AIS-AMF-B.04.01 Section 3.6". https://opensaf.sourceforge.io/SAI-AIS-AMF-B.04.01.AL.pdf.

- [21] Anders Widell; Mathivanan NP (2012). "OpenSAF in the Cloud. Why an HA Middleware is still needed". https://events.static.linuxfound.org/sites/events/files/slides/OpenSAF%20HA%20in%20the%20cloud_0.pdf.

- [22] Jon Paul Maloy (2004). "TIPC: Providing Communication for Linux Clusters". Linux Symposium, Volume Two. https://www.kernel.org/doc/ols/2004/ols2004v2-pages-61-70.pdf.

- [23] OpenSAF TSC (2016). "Opensaf" (in en). https://wiki.opnfv.org/display/PROJ/Opensaf.

- [24] OpenSAF Project (2020). "OpenSAF README" (in en). https://sourceforge.net/p/opensaf/code/ci/develop/tree/README.

- [25] Maxime Turenne (2015). "A New Domain Specific Language for Generating and Validating Middleware Configurations for Highly Available Applications" (in en). https://espace.etsmtl.ca/id/eprint/1563/1/TURENNE_Maxime.pdf.

- [26] Pejman Salehi; Abdelwahab Hamou-Lhadj; Maria Toeroe; Ferhat Khendek (2016). "A UML-based domain specific modeling language for service availability management" (in en). Computer Standards & Interfaces (Elsevier Science Publishers B. V.) 44: 63–83. doi:10.1016/j.csi.2015.09.009. https://doi.org/10.1016/j.csi.2015.09.009. Retrieved 2020-12-28.

- [27] OPNFV HA Project (2016). "Scenario Analysis for High Availability in NFV, Section 5.4.2" (in en). https://privatewiki.opnfv.org/_media/releases/brahmaputra/scenario_analysis_for_high_availability_in_nfv.pdf.

- [28] OpenSAF Project (2020). "OpenSAF IMM README" (in en). https://sourceforge.net/p/opensaf/code/ci/develop/tree/src/imm/README.

- [29] Jens Jensen; Expert Group (2010). "JSR 319: Availability Management for Java" (in en). https://jcp.org/en/jsr/detail?id=319.

- [30] Ferhat Khendek (2013). "OpenSAF and VMware from the Perspective of High Availability" (in en). https://www.dmtf.org/sites/default/files/SVM_2013-Khendek.pdf.

External links

- OpenSAF on SourceForge.net
