Algorithmic probability

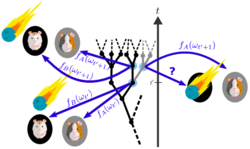

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s.[2] It is used in inductive inference theory and analyses of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs.[3]

In the mathematical formalism used, the observations have the form of finite binary strings viewed as outputs of Turing machines, and the universal prior is a probability distribution over the set of finite binary strings calculated from a probability distribution over programs (that is, inputs to a universal Turing machine). The prior is universal in the Turing-computability sense, i.e. no string has zero probability. It is not computable, but it can be approximated.[4]

Overview

Algorithmic probability is the main ingredient of Solomonoff's theory of inductive inference, the theory of prediction based on observations. It was invented with the goal of using it for machine learning: given a sequence of symbols, which one will come next? Solomonoff's theory provides an answer that is optimal in a certain sense, although it is incomputable. Unlike, for example, Karl Popper's informal inductive inference theory,[clarification needed] Solomonoff's is mathematically rigorous.

Four principal inspirations for Solomonoff's algorithmic probability were: Occam's razor, Epicurus' principle of multiple explanations, modern computing theory (e.g. use of a universal Turing machine) and Bayes’ rule for prediction.[5]

Occam's razor and Epicurus' principle are essentially two different non-mathematical approximations of the universal prior.

- Occam's razor: among the theories that are consistent with the observed phenomena, one should select the simplest theory.[6]

- Epicurus' principle of multiple explanations: if more than one theory is consistent with the observations, keep all such theories.[7]

At the heart of the universal prior is an abstract model of a computer, such as a universal Turing machine.[8] Any abstract computer will do, as long as it is Turing-complete, i.e. every computable function has at least one program that will compute its application on the abstract computer.

The abstract computer is used to give precise meaning to the phrase "simple explanation". In the formalism used, explanations, or theories of phenomena, are computer programs that generate observation strings when run on the abstract computer. Each computer program is assigned a weight that decreases with its length: a binary program of [math]\displaystyle{ \ell }[/math] bits receives weight [math]\displaystyle{ 2^{-\ell} }[/math], the probability of producing it by fair coin flips. The universal probability distribution is the distribution over all possible output strings when the machine is fed random input: it assigns to each finite output prefix q the sum of the probabilities of the programs that compute something starting with q.[9] Thus, a simple explanation is a short computer program and a complex explanation is a long one. Simple explanations are more likely, so a high-probability observation string is one generated by a short computer program, or perhaps by any of a large number of slightly longer computer programs. A low-probability observation string is one that can only be generated by a long computer program.
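As a minimal sketch of this weighting, assume a hypothetical machine with only finitely many halting programs, listed explicitly in the table `TOY_PROGRAMS` below, and assume those programs form a prefix-free set. Each program p gets weight [math]\displaystyle{ 2^{-|p|} }[/math], and the probability of an output prefix q is the total weight of the programs whose output starts with q. A real universal machine has infinitely many programs, so this sum cannot be computed exactly; the table and function names are illustrative only.

```python
from fractions import Fraction

# Hypothetical toy machine: each halting program (a prefix-free set of
# bitstrings) is listed with the output string it produces.
TOY_PROGRAMS = {
    "0": "0101",      # the shortest program
    "10": "0101",     # a second, longer program with the same output
    "110": "0110",
    "1110": "1",
    "1111": "0100",
}

def weight(p: str) -> Fraction:
    """Weight of a program: 2 ** -len(p), so shorter programs count more."""
    return Fraction(1, 2 ** len(p))

def prefix_probability(q: str) -> Fraction:
    """Total weight of the programs whose output starts with the prefix q."""
    return sum(
        (weight(p) for p, out in TOY_PROGRAMS.items() if out.startswith(q)),
        Fraction(0),
    )

print(prefix_probability("0101"))  # 3/4: programs "0" and "10"
print(prefix_probability("01"))    # 15/16: every output except "1"
```

The output "0101" is the most probable here both because it has the shortest program and because a second program also produces it, mirroring the two routes to high probability described above.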

Algorithmic probability is closely related to the concept of Kolmogorov complexity. Kolmogorov's introduction of complexity was motivated by information theory and problems in randomness, whereas Solomonoff introduced algorithmic complexity for a different reason: inductive reasoning. Solomonoff invented a single universal prior probability that can be substituted for each actual prior probability in Bayes' rule, with Kolmogorov complexity as a by-product.[10] Used this way, the universal prior predicts the most likely continuation of an observation and provides a measure of how likely this continuation is.[citation needed]

Solomonoff's enumerable measure is universal in a certain powerful sense, but the computation time can be infinite. One way of dealing with this issue is a variant of Leonid Levin's Search Algorithm,[11] which limits the time spent computing the success of possible programs, with shorter programs given more time. When run for longer and longer periods of time, it will generate a sequence of approximations which converge to the universal probability distribution. Other methods of dealing with the issue include limiting the search space by including training sequences.
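The time-limiting idea can be sketched on a hypothetical toy machine in which each program is listed with the number of steps it needs before halting and the output it then produces (`None` marks a program that never halts). In the style usually described for Levin search, phase i gives every program p with [math]\displaystyle{ |p| \leq i }[/math] a budget of [math]\displaystyle{ 2^{i-|p|} }[/math] steps, and a program that halts within its budget contributes its weight [math]\displaystyle{ 2^{-|p|} }[/math]. This is a minimal sketch under those assumptions, not Levin's or Solomonoff's own formulation; the table and function names are illustrative.

```python
from fractions import Fraction
from typing import Dict, Optional, Tuple

# Hypothetical toy machine: program -> (steps needed to halt, output).
# steps=None marks a program that runs forever.
TOY_MACHINE: Dict[str, Tuple[Optional[int], str]] = {
    "0": (50, "11"),
    "10": (3, "11"),
    "110": (1, "0"),
    "1110": (None, ""),   # never halts, so it never contributes
    "1111": (200, "11"),
}

def approximate(x: str, phase: int) -> Fraction:
    """Lower bound on the toy probability of output x after a given phase.

    In phase i, a program of length l <= i is allowed 2 ** (i - l) steps,
    so shorter programs receive exponentially more time. A real
    implementation would run each program step by step; in this toy the
    halting time is simply looked up.
    """
    total = Fraction(0)
    for p, (steps, out) in TOY_MACHINE.items():
        if len(p) > phase or out != x:
            continue
        budget = 2 ** (phase - len(p))
        if steps is not None and steps <= budget:
            total += Fraction(1, 2 ** len(p))
    return total

for i in (3, 4, 7, 12):
    print(i, approximate("11", i))  # 0, 1/4, 3/4, 13/16: non-decreasing
```

Because budgets only grow from one phase to the next, the estimates never decrease, and here they reach the exact toy value 13/16 once every halting program for "11" has had enough time.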

Solomonoff proved this distribution to be machine-invariant within a constant factor (called the invariance theorem).[12]

Fundamental Theorems

I. Kolmogorov's Invariance Theorem

Kolmogorov's Invariance theorem states that the Kolmogorov Complexity, or Minimal Description Length, of a dataset is invariant, up to an additive constant, to the choice of Turing-Complete language used to simulate a Universal Turing Machine:

- [math]\displaystyle{ \forall x \in \{0,1\}^*, |K_U(x)-K_{U'}(x) | \leq \mathcal{O}(1) }[/math]

where [math]\displaystyle{ K_U(x) = \min_{p} \{|p|: U(p) = x\} }[/math].
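[math]\displaystyle{ K_U }[/math] is not computable when U is universal, but the definition can be evaluated by exhaustive search for a deliberately non-universal toy language in which every program halts. The sketch below assumes a hypothetical two-instruction language `toy_U`: a program 0r prints r literally, and a program 1cccr prints r repeated int(ccc, 2) + 1 times, where ccc is a 3-bit count. Both function names are illustrative.

```python
from itertools import product
from typing import Optional

def toy_U(p: str) -> Optional[str]:
    """Hypothetical, non-universal description language (every program halts).

    '0' + r        prints r literally
    '1' + c + r    c is a 3-bit count; prints r repeated int(c, 2) + 1 times
    """
    if p.startswith("0"):
        return p[1:]
    if len(p) >= 4:
        return p[4:] * (int(p[1:4], 2) + 1)
    return None  # '1' followed by fewer than 3 count bits is not a program

def toy_K(x: str) -> int:
    """Length of a shortest program p with toy_U(p) == x (exhaustive search)."""
    # Programs are tried in order of increasing length, so the first match is
    # minimal. The literal program '0' + x always works, bounding the search.
    for n in range(1, len(x) + 2):
        for bits in product("01", repeat=n):
            if toy_U("".join(bits)) == x:
                return n
    raise AssertionError("unreachable: '0' + x is always a valid program")

print(toy_K("01" * 8))  # 6: '1' + '111' + '01' repeats '01' eight times
print(toy_K("0110"))    # 5: no repetition helps, so the literal program wins
```

The regular 16-bit string has a 6-bit description, while the irregular 4-bit string needs its literal encoding; the same search is hopeless for a universal language because some programs never halt.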

Interpretation

The minimal description [math]\displaystyle{ p }[/math] such that [math]\displaystyle{ U \circ p = x }[/math] serves as a natural representation of the string [math]\displaystyle{ x }[/math] relative to the Turing-Complete language [math]\displaystyle{ U }[/math]. Moreover, as [math]\displaystyle{ p }[/math] can't be compressed further, [math]\displaystyle{ p }[/math] is an incompressible, and hence algorithmically random, string. This corresponds to a scientist's notion of randomness and helps explain why Kolmogorov Complexity is not computable.

It follows that any piece of data has a necessary and sufficient representation in terms of a random string.

Proof

The following proof is taken from Grünwald and Vitányi.[13]

From the theory of compilers, it is known that for any two Turing-Complete languages [math]\displaystyle{ U_1 }[/math] and [math]\displaystyle{ U_2 }[/math], there exists a compiler [math]\displaystyle{ \Lambda_1 }[/math] expressed in [math]\displaystyle{ U_1 }[/math] that translates programs expressed in [math]\displaystyle{ U_2 }[/math] into functionally-equivalent programs expressed in [math]\displaystyle{ U_1 }[/math].

It follows that if we let [math]\displaystyle{ p }[/math] be the shortest program in [math]\displaystyle{ U_2 }[/math] that prints a given string [math]\displaystyle{ x }[/math], then:

- [math]\displaystyle{ K_{U_1}(x) \leq |\Lambda_1| + |p| \leq K_{U_2}(x) + \mathcal{O}(1) }[/math]

where [math]\displaystyle{ |\Lambda_1| = \mathcal{O}(1) }[/math], and by symmetry we obtain the opposite inequality.
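The constant in this argument can be made concrete with two hypothetical toy languages. `u2` is a repetition language (0r prints r; 1cccr prints r repeated int(ccc, 2) + 1 times), and `u1` has only a literal instruction of its own but runs any program that begins with the fixed header 11 as a `u2` program, so the header stands in for the translator [math]\displaystyle{ \Lambda_1 }[/math] with [math]\displaystyle{ |\Lambda_1| = 2 }[/math]. Exhaustive search over short strings then checks that the complexities never differ by more than the header length. This is an illustration under those assumptions only; universal languages cannot be searched this way.

```python
from itertools import product
from typing import Callable, Optional

def u2(p: str) -> Optional[str]:
    """Hypothetical language U2: '0'+r prints r; '1'+ccc+r prints r int(ccc,2)+1 times."""
    if p.startswith("0"):
        return p[1:]
    if len(p) >= 4:
        return p[4:] * (int(p[1:4], 2) + 1)
    return None

HEADER = "11"  # fixed-size stand-in for the translator Lambda_1

def u1(p: str) -> Optional[str]:
    """Hypothetical language U1: '0'+r prints r; HEADER+q runs q as a U2 program."""
    if p.startswith("0"):
        return p[1:]
    if p.startswith(HEADER):
        return u2(p[len(HEADER):])
    return None

def K(U: Callable[[str], Optional[str]], x: str) -> int:
    """Brute-force K_U(x): length of a shortest program p with U(p) == x."""
    for n in range(1, len(x) + 2):  # '0' + x is always a valid program
        for bits in product("01", repeat=n):
            if U("".join(bits)) == x:
                return n
    raise AssertionError("unreachable")

# Compare the two complexities on every binary string of length 0..6.
strings = ["".join(bits) for n in range(7) for bits in product("01", repeat=n)]
gaps = [K(u1, x) - K(u2, x) for x in strings]
print(min(gaps), max(gaps))  # prints: 0 2, i.e. within [0, len(HEADER)]
```

The largest gap occurs for strings such as 000000, whose best description in U1 is either the literal program or the header followed by the best U2 program, both 7 bits long, against 5 bits in U2.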

II. Levin's Universal Distribution

Given that any uniquely-decodable code satisfies the Kraft-McMillan inequality, prefix-free Kolmogorov Complexity allows us to derive the Universal Distribution:

- [math]\displaystyle{ P(x) = \sum_{U \circ p = x} P(U \circ p = x) = \sum_{U \circ p = x} 2^{-|p|} \leq 1 }[/math]

where the fact that [math]\displaystyle{ U }[/math] may simulate a prefix-free UTM implies that for two distinct descriptions [math]\displaystyle{ p }[/math] and [math]\displaystyle{ p' }[/math], [math]\displaystyle{ p }[/math] isn't a prefix of [math]\displaystyle{ p' }[/math] and [math]\displaystyle{ p' }[/math] isn't a prefix of [math]\displaystyle{ p }[/math].
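As a minimal sketch, assume a hypothetical machine whose halting programs are listed explicitly in `PROGRAMS`. The code checks that no program is a prefix of another, then computes P(x) as the sum of [math]\displaystyle{ 2^{-|p|} }[/math] over the programs that output x; the prefix-free property is what keeps the total mass over all outputs at most 1. The table and function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical halting programs of a toy machine, mapped to their outputs.
PROGRAMS = {"00": "1", "010": "1", "011": "0", "10": "01", "1100": "1"}

def is_prefix_free(codes) -> bool:
    """True if no program is a proper prefix of another."""
    return not any(
        a.startswith(b) or b.startswith(a) for a, b in combinations(codes, 2)
    )

def universal_probability(x: str) -> Fraction:
    """P(x): total weight 2 ** -len(p) of the programs p that output x."""
    weights = (Fraction(1, 2 ** len(p)) for p, out in PROGRAMS.items() if out == x)
    return sum(weights, Fraction(0))

assert is_prefix_free(PROGRAMS)
# Total mass over all outputs; it is below 1 here because some bit patterns
# (for example 111) are not halting programs of the toy machine.
total = sum(universal_probability(x) for x in set(PROGRAMS.values()))
print(universal_probability("1"), total)  # 7/16 13/16
```

Adding a program such as 0 would break the prefix-free check, since 0 is a prefix of 00, 010 and 011, and the total weight could then exceed 1.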

Interpretation

In a Computable Universe, given a phenomenon with encoding [math]\displaystyle{ x \in \{0,1\}^* }[/math] generated by a physical process, the probability of that phenomenon is well-defined and equal to the sum over the probabilities of distinct and independent causes. The prefix-free criterion is precisely what guarantees causal independence.

Proof

This is an immediate consequence of the Kraft-McMillan inequality.

Kraft's inequality states that given a sequence of strings [math]\displaystyle{ \{x_i\}_{i=1}^n }[/math] there exists a prefix code with codewords [math]\displaystyle{ \{\sigma_i\}_{i=1}^n }[/math] where [math]\displaystyle{ \forall i, |\sigma_i|=k_i }[/math] if and only if:

- [math]\displaystyle{ \sum_{i=1}^n s^{-k_i} \leq 1 }[/math]

where [math]\displaystyle{ s }[/math] is the size of the alphabet [math]\displaystyle{ S }[/math].

Without loss of generality, suppose the [math]\displaystyle{ k_i }[/math] are ordered so that:

- [math]\displaystyle{ k_1 \leq k_2 \leq ... \leq k_n }[/math]

Now, a prefix code exists if and only if at each step [math]\displaystyle{ j }[/math] there is at least one word of length [math]\displaystyle{ k_j }[/math] to choose that does not have any of the previous [math]\displaystyle{ j-1 }[/math] codewords as a prefix. For each codeword [math]\displaystyle{ \sigma_i }[/math] chosen at a previous step [math]\displaystyle{ i \lt j }[/math], [math]\displaystyle{ s^{k_j-k_i} }[/math] words of length [math]\displaystyle{ k_j }[/math] are forbidden because they contain [math]\displaystyle{ \sigma_i }[/math] as a prefix, and these forbidden sets are disjoint because no earlier codeword is a prefix of another. It follows that in general a prefix code exists if and only if:

- [math]\displaystyle{ \forall j \geq 2, s^{k_j} \gt \sum_{i=1}^{j-1} s^{k_j - k_i} }[/math]

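Since both sides are integers, each of these strict inequalities is equivalent to [math]\displaystyle{ s^{k_j} \geq 1 + \sum_{i=1}^{j-1} s^{k_j - k_i} }[/math]. Dividing both sides by [math]\displaystyle{ s^{k_j} }[/math] gives [math]\displaystyle{ \sum_{i=1}^{j} s^{-k_i} \leq 1 }[/math]. These partial sums grow with [math]\displaystyle{ j }[/math], so all of the conditions hold exactly when the last one ([math]\displaystyle{ j = n }[/math]) does, that is:

- [math]\displaystyle{ \sum_{i=1}^n s^{-k_i} \leq 1 }[/math]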

QED.
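The counting argument is constructive: processing the lengths in increasing order and always taking the smallest word that no earlier codeword forbids yields a prefix code whenever the Kraft sum is at most 1. The sketch below uses the canonical-code construction, one standard way to do this, for an alphabet of s ≤ 10 digit symbols; the function names are illustrative.

```python
from fractions import Fraction
from typing import List, Optional

def kraft_sum(lengths: List[int], s: int = 2) -> Fraction:
    """Left-hand side of Kraft's inequality: the sum of s ** -k_i."""
    return sum((Fraction(1, s ** k) for k in lengths), Fraction(0))

def to_digits(value: int, length: int, s: int) -> str:
    """Write value in base s (s <= 10) using exactly `length` digits."""
    digits = []
    for _ in range(length):
        value, r = divmod(value, s)
        digits.append(str(r))
    return "".join(reversed(digits))

def prefix_code(lengths: List[int], s: int = 2) -> Optional[List[str]]:
    """Build a prefix code with the given lengths (sorted ascending), or None.

    Each new codeword is the smallest length-k_j word that has no earlier
    codeword as a prefix, mirroring the counting argument in the proof.
    """
    ks = sorted(lengths)
    codes: List[str] = []
    value = 0
    for j, k in enumerate(ks):
        if j > 0:
            # Skip past the previous codeword and every word extending it.
            value = (value + 1) * s ** (k - ks[j - 1])
        if value >= s ** k:  # every word of length k is already forbidden
            return None
        codes.append(to_digits(value, k, s))
    return codes

print(prefix_code([3, 1, 2, 3]))                       # ['0', '10', '110', '111']
print(kraft_sum([1, 1, 2]), prefix_code([1, 1, 2]))    # 5/4 None
```

The first call has Kraft sum exactly 1 and succeeds; the second exceeds 1 and the construction runs out of words, as the theorem predicts.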

History

Solomonoff invented the concept of algorithmic probability with its associated invariance theorem around 1960,[14] publishing a report on it: "A Preliminary Report on a General Theory of Inductive Inference."[15] He clarified these ideas more fully in 1964 with "A Formal Theory of Inductive Inference," Part I[16] and Part II.[17]

See also

- Solomonoff's theory of inductive inference

- Algorithmic information theory

- Bayesian inference

- Inductive inference

- Inductive probability

- Kolmogorov complexity

- Universal Turing machine

- Information-based complexity

References

- Müller, M., "Law without Law: from observer states to physics via algorithmic information theory", Quantum: the open journal for quantum science, 6 June 2020.

- Solomonoff, R., "A Preliminary Report on a General Theory of Inductive Inference", Report V-131, Zator Co., Cambridge, Ma. (Nov. 1960 revision of the Feb. 4, 1960 report).

- Li, M. and Vitányi, P., An Introduction to Kolmogorov Complexity and Its Applications, 3rd Edition, Springer Science and Business Media, N.Y., 2008.

- Hutter, M., Legg, S., and Vitányi, P., "Algorithmic Probability", Scholarpedia, 2(8):2572, 2007.

- Li and Vitányi, 2008, p. 347.

- Li and Vitányi, 2008, p. 341.

- Li and Vitányi, 2008, p. 339.

- Hutter, M., "Algorithmic Information Theory", Scholarpedia, 2(3):2519.

- Solomonoff, R., "The Kolmogorov Lecture: The Universal Distribution and Machine Learning", The Computer Journal, Vol. 46, No. 6, p. 598, 2003.

- Gács, P. and Vitányi, P., "In Memoriam Raymond J. Solomonoff", IEEE Information Theory Society Newsletter, Vol. 61, No. 1, March 2011, p. 11.

- Levin, L.A., "Universal Search Problems", Problemy Peredaci Informacii 9, pp. 115–116, 1973.

- Solomonoff, R., "Complexity-Based Induction Systems: Comparisons and Convergence Theorems", IEEE Trans. on Information Theory, Vol. IT-24, No. 4, pp. 422–432, July 1978.

- Grünwald, P. and Vitányi, P., "Algorithmic Information Theory", arXiv, 2008.

- Solomonoff, R., "The Discovery of Algorithmic Probability", Journal of Computer and System Sciences, Vol. 55, No. 1, pp. 73–88, August 1997.

- Solomonoff, R., "A Preliminary Report on a General Theory of Inductive Inference", Report V-131, Zator Co., Cambridge, Ma. (Nov. 1960 revision of the Feb. 4, 1960 report).

- Solomonoff, R., "A Formal Theory of Inductive Inference, Part I", Information and Control, Vol. 7, No. 1, pp. 1–22, March 1964.

- Solomonoff, R., "A Formal Theory of Inductive Inference, Part II", Information and Control, Vol. 7, No. 2, pp. 224–254, June 1964.

Sources

- Li, M. and Vitányi, P., An Introduction to Kolmogorov Complexity and Its Applications, 3rd Edition, Springer Science and Business Media, N.Y., 2008.

Further reading

- Rathmanner, S. and Hutter, M., "A Philosophical Treatise of Universal Induction", Entropy, 2011, 13, 1076–1136. A very clear philosophical and mathematical analysis of Solomonoff's theory of inductive inference.
