NumPy

| |

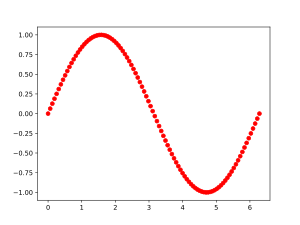

Plot of y=sin(x) function, created with NumPy and Matplotlib libraries | |

| Original author(s) | Travis Oliphant |

|---|---|

| Developer(s) | Community project |

| Initial release | As Numeric, 1995; as NumPy, 2006 |

| Written in | Python, C |

| Operating system | Cross-platform |

| Type | Numerical analysis |

| License | BSD[1] |

NumPy (pronounced /ˈnʌmpaɪ/ NUM-py) is a library for the Python programming language, adding support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays.[2] The predecessor of NumPy, Numeric, was originally created by Jim Hugunin with contributions from several other developers. In 2005, Travis Oliphant created NumPy by incorporating features of the competing Numarray into Numeric, with extensive modifications. NumPy is open-source software and has many contributors. NumPy is a NumFOCUS fiscally sponsored project.[3]

History

matrix-sig

The Python programming language was not originally designed for numerical computing, but attracted the attention of the scientific and engineering community early on. In 1995 the special interest group (SIG) matrix-sig was founded with the aim of defining an array computing package; among its members was Python designer and maintainer Guido van Rossum, who extended Python's syntax (in particular the indexing syntax[4]) to make array computing easier.[5]

Numeric

An implementation of a matrix package was completed by Jim Fulton, then generalized[further explanation needed] by Jim Hugunin and called Numeric[5] (also variously known as the "Numerical Python extensions" or "NumPy"), with influences from the APL family of languages, Basis, MATLAB, FORTRAN, S and S+, and others.[6][7] Hugunin, a graduate student at the Massachusetts Institute of Technology (MIT),[7]:10 joined the Corporation for National Research Initiatives (CNRI) in 1997 to work on JPython,[5] leaving Paul Dubois of Lawrence Livermore National Laboratory (LLNL) to take over as maintainer.[7]:10 Other early contributors include David Ascher, Konrad Hinsen and Travis Oliphant.[7]:10

Numarray

A new package called Numarray was written as a more flexible replacement for Numeric.[8] Like Numeric, it is now deprecated.[9][10] Numarray had faster operations for large arrays but was slower than Numeric on small ones,[11] so for a time both packages were used in parallel for different use cases. The last version of Numeric (v24.2) was released on 11 November 2005, while the last version of Numarray (v1.5.2) was released on 24 August 2006.[12]

There was a desire to get Numeric into the Python standard library, but Guido van Rossum decided that the code was not maintainable in its then-current state.[when?][13]

NumPy

In early 2005, NumPy developer Travis Oliphant wanted to unify the community around a single array package and ported Numarray's features to Numeric, releasing the result as NumPy 1.0 in 2006.[8] The new code was initially part of SciPy; to avoid requiring the large SciPy package just to get an array object, it was split out into a separate package named NumPy. Support for Python 3 was added in 2011 with NumPy version 1.5.0.[14]

In 2011, PyPy started development on an implementation of the NumPy API for PyPy.[15] As of 2023, it is not yet fully compatible with NumPy.[16]

Features

NumPy targets the CPython reference implementation of Python, which is a non-optimizing bytecode interpreter. Mathematical algorithms written for this version of Python often run much slower than compiled equivalents due to the absence of compiler optimization. NumPy addresses the slowness problem partly by providing multidimensional arrays and functions and operators that operate efficiently on arrays; taking advantage of these requires rewriting some code, mostly inner loops, in terms of NumPy array operations.
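As a minimal sketch of such a rewrite, the two hypothetical functions below compute the same sum of squares, once with an explicit Python loop and once with whole-array NumPy operations. The function names are illustrative only, and the actual speedup depends on array size and hardware.

```python
import numpy as np


def sum_of_squares_loop(values):
    """Pure-Python version: the interpreter executes every loop iteration."""
    total = 0.0
    for v in values:
        total += v * v
    return float(total)


def sum_of_squares_vectorized(values):
    """NumPy version: the element-wise product and the sum run in compiled code."""
    arr = np.asarray(values, dtype=np.float64)
    return float(np.sum(arr * arr))


data = np.arange(1000, dtype=np.float64)
loop_result = sum_of_squares_loop(data)
vectorized_result = sum_of_squares_vectorized(data)
print(loop_result, vectorized_result)  # 332833500.0 332833500.0
```

The inner loop disappears entirely in the second version; the multiplication and the reduction are each a single call into NumPy.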

Using NumPy in Python gives functionality comparable to MATLAB since they are both interpreted,[17] and they both allow the user to write fast programs as long as most operations work on arrays or matrices instead of scalars. In comparison, MATLAB boasts a large number of additional toolboxes, notably Simulink, whereas NumPy is intrinsically integrated with Python, a more modern and complete programming language. Moreover, complementary Python packages are available: SciPy is a library that adds more MATLAB-like functionality, and Matplotlib is a plotting package that provides MATLAB-like plotting functionality. Although MATLAB supports sparse matrix operations natively, NumPy alone does not; sparse matrices require SciPy's scipy.sparse subpackage. Internally, both MATLAB and NumPy rely on BLAS and LAPACK for efficient linear algebra computations.

Python bindings of the widely used computer vision library OpenCV use NumPy arrays to store and operate on data. Since images with multiple channels are simply represented as three-dimensional arrays, indexing, slicing, or masking with other arrays are very efficient ways to access specific pixels of an image. Using the NumPy array as the universal data structure in OpenCV for images, extracted feature points, filter kernels and more greatly simplifies the programming workflow and debugging.[citation needed]
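The sketch below illustrates this representation with NumPy alone (OpenCV is not required): a small synthetic image is a `(height, width, channels)` array, a slice selects a region of one channel, and a Boolean mask selects pixels by value. The image size and the choice of channel 0 are arbitrary; note that OpenCV itself orders color channels as BGR, so channel 0 would be blue in an image loaded by OpenCV.

```python
import numpy as np

# Synthetic 4x6 image with 3 channels of 8-bit values, all initially 0.
height, width = 4, 6
image = np.zeros((height, width, 3), dtype=np.uint8)

# Slicing: set channel 0 to 255 in the top two rows.
image[:2, :, 0] = 255

# Masking: find pixels whose channel 0 is saturated...
mask = image[:, :, 0] == 255          # Boolean array of shape (height, width)
# ...and set channel 1 of exactly those pixels.
image[mask, 1] = 128

# Indexing a single pixel returns its channel values.
print(image[0, 0], image[3, 5], int(mask.sum()))  # [255 128   0] [0 0 0] 12
```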

Many NumPy operations release the global interpreter lock (GIL) while they run, which allows other Python threads to execute in parallel.[18]
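A minimal sketch of exploiting this with the standard-library `concurrent.futures` thread pool: a matrix product is split into row blocks that are computed in separate threads and then stacked. The block count and matrix sizes are arbitrary, and whether this is actually faster depends on the BLAS library NumPy is linked against, which may already use several threads for a single product.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np

rng = np.random.default_rng(0)
a = rng.random((400, 300))
b = rng.random((300, 200))


def multiply_block(rows):
    """Multiply the selected rows of `a` by `b`.

    Large matrix products run in compiled code that can release the GIL,
    so several of these calls may execute at the same time.
    """
    return a[rows] @ b


# Split the row indices of `a` into four blocks, one task per block.
blocks = np.array_split(np.arange(a.shape[0]), 4)
with ThreadPoolExecutor(max_workers=4) as pool:
    parts = list(pool.map(multiply_block, blocks))

result = np.vstack(parts)
print(result.shape, np.allclose(result, a @ b))  # (400, 200) True
```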

NumPy also provides a C API, which allows Python code to interoperate with external libraries written in low-level languages.[19]

The ndarray data structure

The core of NumPy is its ndarray ("n-dimensional array") data structure. These arrays are strided views on memory.[8] In contrast to Python's built-in list data structure, these arrays are homogeneously typed: all elements of a single array must be of the same type.
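The following sketch shows both properties: mixed Python inputs are converted to a single dtype, and operations such as transposing or slicing with a step return views that differ from the original only in shape and strides (the byte offsets between consecutive elements along each axis). The explicit `int64` dtype is chosen only to make the stride values predictable.

```python
import numpy as np

# Every element of an ndarray shares one dtype; mixed inputs are upcast.
mixed = np.array([1, 2.5, 3])
print(mixed.dtype)            # float64

# A 3x4 array of 8-byte integers stored in one row-major (C-order) buffer.
a = np.arange(12, dtype=np.int64).reshape(3, 4)
print(a.strides)              # (32, 8): bytes to the next row / next column

# Transposing or slicing only changes shape and strides; no data is copied.
t = a.T
print(t.strides)              # (8, 32)
every_other = a[:, ::2]
print(every_other.strides)    # (32, 16)

# Both results are views: a write through one is visible in the original.
every_other[0, 0] = 100
print(a[0, 0], np.shares_memory(a, t))  # 100 True
```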

Such arrays can also be views into memory buffers allocated by C/C++, Python, and Fortran extensions to the CPython interpreter without the need to copy data around, giving a degree of compatibility with existing numerical libraries. This functionality is exploited by the SciPy package, which wraps a number of such libraries (notably BLAS and LAPACK). NumPy has built-in support for memory-mapped ndarrays.[8]
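A minimal sketch of both mechanisms using only NumPy and the standard library: `np.frombuffer` wraps an existing writable Python `bytearray` without copying it, and `np.memmap` creates an array backed by a file. The temporary file name `data.bin` and the array shapes are arbitrary choices for the example.

```python
import os
import tempfile

import numpy as np

# Wrap an existing Python buffer without copying it.
raw = bytearray(8 * 4)                        # room for four float64 values
view = np.frombuffer(raw, dtype=np.float64)   # ndarray backed by `raw`
view[:] = [1.0, 2.0, 3.0, 4.0]                # writes go straight into `raw`
print(bytes(raw[:8]) == np.float64(1.0).tobytes())  # True

# A memory-mapped ndarray backed by a file on disk.
path = os.path.join(tempfile.mkdtemp(), "data.bin")
mm = np.memmap(path, dtype=np.float32, mode="w+", shape=(2, 3))
mm[:] = np.arange(6, dtype=np.float32).reshape(2, 3)
mm.flush()                                    # push the data out to the file

# Re-open the same file read-only and read an element back.
again = np.memmap(path, dtype=np.float32, mode="r", shape=(2, 3))
print(again[1, 2])                            # 5.0
```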

Limitations

Inserting or appending entries to an array is not as trivially possible as it is with Python's lists.

The np.pad(...) routine to extend arrays actually creates new arrays of the desired shape and padding values, copies the given array into the new one and returns it.

NumPy's np.concatenate([a1,a2]) operation does not actually link the two arrays but returns a new one, filled with the entries from both given arrays in sequence.

Reshaping the dimensionality of an array with np.reshape(...) is only possible as long as the number of elements in the array does not change.
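The short sketch below makes these points concrete: `np.append`, `np.concatenate` and `np.pad` each return a freshly allocated array that shares no memory with the input, and `reshape` raises a `ValueError` when the requested shape would change the number of elements.

```python
import numpy as np

a = np.array([1, 2, 3])

# Each of these calls allocates and returns a new array; `a` is unchanged.
b = np.append(a, 4)
c = np.concatenate([a, b])
p = np.pad(a, (1, 1), constant_values=0)   # one zero on each side
print(b, c, p)  # [1 2 3 4] [1 2 3 1 2 3 4] [0 1 2 3 0]
print(np.shares_memory(a, b), np.shares_memory(a, c), np.shares_memory(a, p))  # False False False

# Reshaping must keep the total number of elements (6 here).
m = np.arange(6)
print(m.reshape(2, 3).shape)  # (2, 3)
try:
    m.reshape(4, 2)           # 8 elements requested, but m has 6
    reshape_failed = False
except ValueError as err:
    reshape_failed = True
    print("ValueError:", err)
```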

These circumstances originate from the fact that NumPy's arrays must be views on contiguous memory buffers. A replacement package called Blaze attempts to overcome this limitation.[20]

Algorithms that cannot be expressed as vectorized operations typically run slowly because they must be implemented in "pure Python". Conversely, vectorization may increase the memory complexity of some operations from constant to linear, because temporary arrays as large as the inputs must be created. Several groups have implemented runtime compilation of numerical code to avoid these problems; open-source solutions that interoperate with NumPy include numexpr[21] and Numba.[22] Cython and Pythran are static-compiling alternatives to these.
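As a rough illustration of the memory cost, and of one NumPy-only way to limit it (this is neither numexpr nor Numba), the sketch below evaluates the polynomial x² + 3x + 1 twice: once as a plain expression, which creates several full-size temporary arrays, and once in Horner form, (x + 3)·x + 1, writing every step into a single preallocated buffer through the ufuncs' `out=` argument. The array size is arbitrary.

```python
import numpy as np

x = np.linspace(0.0, 1.0, 1_000_000)

# Plain expression: x**2, 3*x and the intermediate sum are each
# materialized as temporary arrays as large as x.
y_naive = x**2 + 3 * x + 1

# In-place version in Horner form, (x + 3) * x + 1, reusing one buffer.
y = np.empty_like(x)
np.add(x, 3.0, out=y)       # y = x + 3
np.multiply(y, x, out=y)    # y = (x + 3) * x
np.add(y, 1.0, out=y)       # y = (x + 3) * x + 1

print(np.allclose(y, y_naive), y[0], y[-1])  # True 1.0 5.0
```

The two results agree up to floating-point rounding; the second version needs only one array the size of the input in addition to `x`.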

Many modern large-scale scientific computing applications have requirements that exceed the capabilities of NumPy arrays. For example, NumPy arrays are usually loaded into a computer's memory, which might have insufficient capacity for the analysis of large datasets. Further, NumPy operations are executed on a single CPU. However, many linear algebra operations can be accelerated by executing them on clusters of CPUs or on specialized hardware, such as GPUs and TPUs, which many deep learning applications rely on. As a result, several alternative array implementations have arisen in the scientific Python ecosystem in recent years, such as Dask for distributed arrays and TensorFlow or JAX for computations on GPUs. Because of NumPy's popularity, these often implement a subset of its API or mimic it, so that users can switch array implementations with minimal changes to their code.[2] CuPy,[23] a more recent library accelerated by Nvidia's CUDA framework, has also shown potential for faster computing as a 'drop-in replacement' for NumPy.[24]
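One common way to keep code portable across such libraries is to pass the array module in as a parameter, as in the hypothetical `standardize` function below. It uses only `asarray`, `mean` and `std`, which NumPy provides; the assumption is that a NumPy-compatible library such as CuPy offers functions with the same names and behavior, which should be checked in that library's documentation.

```python
import numpy as np


def standardize(x, xp=np):
    """Return (x - mean(x)) / std(x), computed with the array module `xp`.

    `xp` defaults to NumPy; any module exposing NumPy-style `asarray`,
    `mean` and `std` functions could be passed instead (an assumption,
    not something this sketch verifies).
    """
    arr = xp.asarray(x)
    return (arr - xp.mean(arr)) / xp.std(arr)


z = standardize([1.0, 2.0, 3.0, 4.0])
print(z)
```

With CuPy installed on a machine with a CUDA-capable GPU, the same call would presumably be written `standardize(data, xp=cupy)`; treat that variant as untested.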

Examples

import numpy as np
from numpy.random import rand
from numpy.linalg import solve, inv
a = np.array([[1, 2, 3, 4], [3, 4, 6, 7], [5, 9, 0, 5]])
a.transpose()

Basic operations

>>> a = np.array([1, 2, 3, 6])
>>> b = np.linspace(0, 2, 4)  # create an array with four equally spaced points starting with 0 and ending with 2.
>>> c = a - b
>>> c
array([1.        , 1.33333333, 1.66666667, 4.        ])
>>> a**2
array([ 1,  4,  9, 36])

Universal functions

>>> a = np.linspace(-np.pi, np.pi, 100)
>>> b = np.sin(a)
>>> c = np.cos(a)
>>>
>>> # Functions can take both numbers and arrays as parameters.
>>> np.sin(1)
0.8414709848078965
>>> np.sin(np.array([1, 2, 3]))
array([0.84147098, 0.90929743, 0.14112001])

Linear algebra

>>> import numpy as np
>>> from numpy.random import rand
>>> from numpy.linalg import solve, inv
>>> a = np.array([[1, 2, 3], [3, 4, 6.7], [5, 9.0, 5]])
>>> a.transpose()
array([[1. , 3. , 5. ],
       [2. , 4. , 9. ],
       [3. , 6.7, 5. ]])
>>> inv(a)
array([[-2.27683616,  0.96045198,  0.07909605],
       [ 1.04519774, -0.56497175,  0.1299435 ],
       [ 0.39548023,  0.05649718, -0.11299435]])
>>> b = np.array([3, 2, 1])
>>> solve(a, b)  # solve the equation ax = b
array([-4.83050847,  2.13559322,  1.18644068])
>>> c = rand(3, 3) * 20  # create a 3x3 random matrix of values within [0, 1), scaled by 20 (values differ on each run)
>>> c
array([[ 3.98732789,  2.47702609,  4.71167924],
       [ 9.24410671,  5.5240412 , 10.6468792 ],
       [10.38136661,  8.44968437, 15.17639591]])
>>> np.dot(a, c)  # matrix multiplication
array([[ 53.61964114,  38.8741616 ,  71.53462537],
       [118.4935668 ,  86.14012835, 158.40440712],
       [155.04043289, 104.3499231 , 195.26228855]])
>>> a @ c  # Starting with Python 3.5 and NumPy 1.10
array([[ 53.61964114,  38.8741616 ,  71.53462537],
       [118.4935668 ,  86.14012835, 158.40440712],
       [155.04043289, 104.3499231 , 195.26228855]])

Tensors

>>> M = np.zeros(shape=(2, 3, 5, 7, 11))
>>> T = np.transpose(M, (4, 2, 1, 3, 0))
>>> T.shape
(11, 5, 3, 7, 2)

Incorporation with OpenCV

>>> import numpy as np
>>> import cv2
>>> r = np.reshape(np.arange(256*256) % 256, (256, 256)).astype(np.uint8)  # 256x256 pixel array (8-bit) with a horizontal gradient from 0 to 255 for the red color channel
>>> g = np.zeros_like(r)  # array of same size and type as r but filled with 0s for the green color channel
>>> b = r.T  # transposed r will give a vertical gradient for the blue color channel
>>> cv2.imwrite('gradients.png', np.dstack([b, g, r]))  # OpenCV interprets images as BGR; the depth-stacked 8-bit array is written to an RGB PNG file called 'gradients.png'
True

Nearest Neighbor Search

Iterative Python algorithm and vectorized NumPy version.

>>> # # # Pure iterative Python # # #
>>> points = [[9,2,8],[4,7,2],[3,4,4],[5,6,9],[5,0,7],[8,2,7],[0,3,2],[7,3,0],[6,1,1],[2,9,6]]
>>> qPoint = [4,5,3]
>>> minIdx = -1
>>> minDist = -1
>>> for idx, point in enumerate(points):  # iterate over all points
...     dist = sum([(dp-dq)**2 for dp,dq in zip(point,qPoint)])**0.5  # compute the Euclidean distance from each point to q
...     if dist < minDist or minDist < 0:  # if necessary, update minimum distance and index of the corresponding point
...         minDist = dist
...         minIdx = idx
...
>>> print(f'Nearest point to q: {points[minIdx]}')
Nearest point to q: [3, 4, 4]

>>> # # # Equivalent NumPy vectorization # # #
>>> import numpy as np
>>> points = np.array([[9,2,8],[4,7,2],[3,4,4],[5,6,9],[5,0,7],[8,2,7],[0,3,2],[7,3,0],[6,1,1],[2,9,6]])
>>> qPoint = np.array([4,5,3])
>>> minIdx = np.argmin(np.linalg.norm(points-qPoint, axis=1))  # compute all Euclidean distances at once and return the index of the smallest one
>>> print(f'Nearest point to q: {points[minIdx]}')
Nearest point to q: [3 4 4]

F2PY

Quickly wrap native code for faster scripts.[25][26][27]

! Python Fortran native code call example
! f2py -c -m foo *.f90
! Compile the Fortran source into a Python module named foo; intent statements mark inputs and outputs
! The intent(out) arguments of this subroutine become its Python return values; f2py generates the C wrapper automatically, unlike JNI, where the wrapper is written by hand
! requires a Fortran compiler such as gfortran
subroutine ftest(a, b, n, c, d)
  implicit none
  integer, intent(in) :: a
  integer, intent(in) :: b
  integer, intent(in) :: n
  integer, intent(out) :: c
  integer, intent(out) :: d
  integer :: i
  c = 0
  do i = 1, n
    c = a + b + c
  end do
  d = (c * n) * (-1)
end subroutine ftest

>>> import numpy as np
>>> import foo
>>> a = foo.ftest(1, 2, 3)  # returns (c, d) as a tuple; alternatively: c, d = foo.ftest(1, 2, 3)
>>> print(a)
(9, -27)
>>> help('foo.ftest')  # or print(foo.ftest.__doc__)

See also

- Array programming

- List of numerical-analysis software

- Theano

- Matplotlib

- Fortran

- Row- and column-major order

References

- "NumPy — NumPy". NumPy developers. https://numpy.org/.

- Wikidata Q99413970.

- "NumFOCUS Sponsored Projects". NumFOCUS. https://numfocus.org/sponsored-projects.

- "Indexing — NumPy v1.20 Manual". https://numpy.org/doc/stable/reference/arrays.indexing.html.

- Millman, K. Jarrod; Aivazis, Michael (2011). "Python for Scientists and Engineers". Computing in Science and Engineering 13 (2): 9–12. doi:10.1109/MCSE.2011.36. Bibcode: 2011CSE....13b...9M. http://www.computer.org/csdl/mags/cs/2011/02/mcs2011020009.html. Retrieved 2014-07-07.

- Oliphant, Travis (2007). "Python for Scientific Computing". Computing in Science and Engineering. http://www.vision.ime.usp.br/~thsant/pool/oliphant-python_scientific.pdf. Retrieved 2013-10-12.

- "Numerical Python". 1999. http://www.cs.mcgill.ca/~hv/articles/Numerical/numpy.pdf.

- van der Walt, Stéfan; Colbert, S. Chris; Varoquaux, Gaël (2011). "The NumPy array: a structure for efficient numerical computation". Computing in Science and Engineering (IEEE) 13 (2): 22. doi:10.1109/MCSE.2011.37. Bibcode: 2011CSE....13b..22V.

- "Numarray Homepage". http://www.stsci.edu/resources/software_hardware/numarray.

- Oliphant, Travis E. (7 December 2006). Guide to NumPy. https://archive.org/details/NumPyBook. Retrieved 2 February 2017.

- Travis Oliphant and other SciPy developers. "[Numpy-discussion] Status of Numeric". https://mail.scipy.org/pipermail/numpy-discussion/2004-January/002645.html.

- "NumPy Sourceforge Files". http://sourceforge.net/project/showfiles.php?group_id=1369.

- "History_of_SciPy - SciPy wiki dump". https://scipy.github.io/old-wiki/pages/History_of_SciPy.html.

- "NumPy 1.5.0 Release Notes". http://sourceforge.net/projects/numpy/files//NumPy/1.5.0/NOTES.txt/view.

- "PyPy Status Blog: NumPy funding and status update". http://morepypy.blogspot.com/2011/10/numpy-funding-and-status-update.html.

- "NumPyPy Status". http://buildbot.pypy.org/numpy-status/latest.html.

- The SciPy Community. "NumPy for Matlab users". https://docs.scipy.org/doc/numpy-dev/user/numpy-for-matlab-users.html.

- "numpy release notes". https://numpy.org/devdocs/release/1.9.0-notes.html.

- McKinney, Wes (2014). "NumPy Basics: Arrays and Vectorized Computation". Python for Data Analysis (First Edition, Third release ed.). O'Reilly. p. 79. ISBN 978-1-449-31979-3.

- "Blaze Ecosystem Docs". https://blaze.readthedocs.io/.

- Alted, Francesc. "numexpr". https://github.com/pydata/numexpr.

- "Numba". http://numba.pydata.org/.

- Hido, Shohei. "CuPy: A NumPy-compatible Library for GPU". PyCon 2018. https://www.youtube.com/watch?v=MAz1xolSB68. Retrieved 2021-05-11.

- Entschev, Peter Andreas (2019-07-23). "Single-GPU CuPy Speedups". https://medium.com/rapids-ai/single-gpu-cupy-speedups-ea99cbbb0cbb.

- "F2PY docs from NumPy". NumPy. https://numpy.org/doc/stable/f2py/usage.html?highlight=f2py.

- Worthey, Guy (3 January 2022). "A python vs. Fortran smackdown". Guy Worthey. https://guyworthey.net/2022/01/03/a-python-vs-fortran-smackdown/.

- Shell, Scott. "Writing fast Fortran routines for Python". University of California, Santa Barbara. https://sites.engineering.ucsb.edu/~shell/che210d/f2py.pdf.

Further reading

- Bressert, Eli (2012). Scipy and Numpy: An Overview for Developers. O'Reilly. ISBN 978-1-4493-0546-8.

- McKinney, Wes (2017). Python for Data Analysis : Data Wrangling with Pandas, NumPy, and IPython (2nd ed.). Sebastopol: O'Reilly. ISBN 978-1-4919-5766-0.

- VanderPlas, Jake (2016). "Introduction to NumPy". Python Data Science Handbook: Essential Tools for Working with Data. O'Reilly. pp. 33–96. ISBN 978-1-4919-1205-8.
