Astronomy:Monochrome-astrophotography-techniques

Monochrome photography is one of the earliest styles of photography and dates back to the 1800s.[1] It is also a popular technique among astrophotographers, largely because a monochrome camera omits the Bayer filter: the colour filter array that sits in front of the CMOS or CCD sensor of a colour camera and allows a single sensor to produce a colour image.

Sensor design

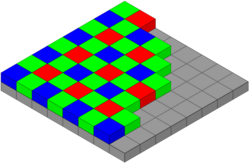

Colour cameras produce colour images using a Bayer matrix, a colour filter array that sits in front of the sensor. Each photosite (pixel) sits beneath a filter that passes only one of the primary colours: red, green or blue. A typical matrix devotes 25% of its area to red filters, 25% to blue and 50% to green. The Bayer matrix allows a single-chip sensor to produce a colour image.[2]
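The 25/50/25 split follows directly from the repeating 2x2 tile of the matrix. The sketch below assumes the common RGGB tile (other sensors use GRBG, GBRG or BGGR, which have the same proportions); `bayer_mask` and `colour_fractions` are illustrative helper names, not part of any camera library.

```python
from collections import Counter


def bayer_mask(width: int, height: int) -> list[list[str]]:
    """Filter colour over each photosite for an assumed RGGB Bayer tile."""
    tile = [["R", "G"], ["G", "B"]]  # repeating 2x2 pattern: R G / G B
    return [[tile[y % 2][x % 2] for x in range(width)] for y in range(height)]


def colour_fractions(mask: list[list[str]]) -> dict[str, float]:
    """Fraction of photosites covered by each colour filter."""
    counts = Counter(colour for row in mask for colour in row)
    total = sum(counts.values())
    return {colour: counts[colour] / total for colour in "RGB"}


mask = bayer_mask(6, 4)
for row in mask:
    print(" ".join(row))
print(colour_fractions(mask))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

For any sensor with even width and height, every 2x2 tile contributes one red, two green and one blue photosite, so the proportions are exact.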

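Many deep-sky objects, particularly emission nebulae, contain large amounts of hydrogen, oxygen and sulphur. These elements emit light at characteristic wavelengths: hydrogen-alpha at about 656 nm (red), doubly ionised oxygen (OIII) at about 501 nm (blue-green) and singly ionised sulphur (SII) at about 672 nm (deep red).[3]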

When imaging an object rich in hydrogen, most of its light is emitted at the red hydrogen-alpha wavelength. In this scenario, the Bayer matrix allows only about 25% of the incoming light from the nebula to reach the sensor, because only the red-filtered 25% of the matrix area passes red light; the green and blue filters block it.[2]
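The 25% figure translates directly into exposure time. The sketch below is a simplified model under loudly stated assumptions: filters are ideal (100% transmission inside their passband, 0% outside), hydrogen-alpha passes only the red filters of an RGGB matrix, and the monochrome camera uses an ideal hydrogen-alpha narrowband filter. The photon rate and sensor size are hypothetical.

```python
def h_alpha_photons(photons_per_pixel: float, width: int, height: int, mono: bool) -> float:
    """Total H-alpha photons recorded by an idealised sensor.

    Illustrative assumptions: ideal filters, H-alpha passes only the red
    filters of an RGGB Bayer matrix, and width and height are even.
    """
    if mono:
        receiving = width * height  # every photosite can record H-alpha
    else:
        receiving = (width // 2) * (height // 2)  # one red photosite per 2x2 tile
    return photons_per_pixel * receiving


rate = 100.0  # hypothetical H-alpha photons per pixel per minute of exposure
mono_signal = h_alpha_photons(rate, 4000, 3000, mono=True)
colour_signal = h_alpha_photons(rate, 4000, 3000, mono=False)

advantage = mono_signal / colour_signal
print(advantage)  # 4.0

# Exposure the colour camera would need to match 60 minutes on the mono camera.
print(60 * advantage)  # 240.0
```

Under these assumptions a colour camera needs roughly four times the exposure to collect the same hydrogen-alpha signal. Real filters are not ideal and some red light leaks through green filters, so the practical ratio differs somewhat.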

A monochrome sensor does not have a Bayer matrix, so every photosite can record light. The wavelengths that reach the sensor are instead selected with interchangeable filters; narrowband filters pass only a narrow range of wavelengths around a single emission line.[4] Using one narrowband filter each for hydrogen-alpha, oxygen and sulphur produces three separate monochrome images, which can then be combined into a colour image.
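Whether a filter isolates one emission line depends on its centre wavelength and bandwidth. The sketch below models each filter as an ideal top-hat passband (a simplifying assumption) and uses approximate line wavelengths; the 7 nm narrowband filters and the 100 nm broadband red filter are hypothetical examples, not specific products.

```python
# Approximate emission-line wavelengths in nanometres.
EMISSION_LINES_NM = {
    "H-alpha": 656.3,
    "OIII": 500.7,
    "SII": 672.4,  # about the midpoint of the 671.6 / 673.1 nm doublet
}


def passes(line_nm: float, centre_nm: float, bandwidth_nm: float) -> bool:
    """True if an ideal top-hat filter transmits the given wavelength."""
    half_width = bandwidth_nm / 2
    return centre_nm - half_width <= line_nm <= centre_nm + half_width


def lines_passed(centre_nm: float, bandwidth_nm: float) -> list[str]:
    """Names of the emission lines an ideal filter would transmit."""
    return [name for name, wavelength in EMISSION_LINES_NM.items()
            if passes(wavelength, centre_nm, bandwidth_nm)]


# Hypothetical 7 nm narrowband filters, one centred on each line.
for name, wavelength in EMISSION_LINES_NM.items():
    print(name, "filter passes:", lines_passed(wavelength, 7.0))

# A hypothetical broadband red filter (600-700 nm) cannot separate H-alpha from SII.
print("Red filter passes:", lines_passed(650.0, 100.0))
```

Each narrowband filter passes only its own line, whereas the broad red filter passes both hydrogen-alpha and sulphur, which is why separate narrowband exposures are needed to record them as distinct images.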

Advantages

Monochrome astrophotography has become popular partly as a way to combat light pollution. When imaging a target dominated by hydrogen-alpha emission, the Bayer matrix of a colour sensor limits the area that can collect the target's light to the roughly 25% of photosites under red filters. The remaining 75% of photosites still collect light, but much of it is light pollution rather than signal from the target. This added background lowers the signal-to-noise ratio.[5]
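How much background hurts can be seen with the standard shot-noise-limited estimate SNR = S / sqrt(S + B), where S is the target signal and B the sky background in photons. This sketch assumes photon (Poisson) noise is the only noise source, ignoring read noise and dark current; the photon counts are hypothetical.

```python
import math


def snr(signal: float, background: float) -> float:
    """Shot-noise-limited signal-to-noise ratio for one pixel.

    Simplifying assumption: photon noise from the target and the sky
    background are the only noise sources (read noise, dark current ignored).
    """
    return signal / math.sqrt(signal + background)


target = 400.0       # hypothetical photons from the nebula in one pixel
dark_sky = 100.0     # hypothetical background photons under a dark sky
city_sky = 10_000.0  # hypothetical background photons under light pollution

print(round(snr(target, dark_sky), 2))  # 17.89
print(round(snr(target, city_sky), 2))  # 3.92
```

The target signal is identical in both cases; only the background changes, yet the signal-to-noise ratio drops by more than a factor of four.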

Removing the Bayer matrix means a narrowband filter can be used to allow only specific wavelengths of light to reach the sensor. The entire sensor area then collects light from the target's emission line, while most light pollution, which falls outside the filter's narrow passband, is rejected. Together these effects can substantially improve the signal-to-noise ratio.[6]
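The background-rejection half of this argument can be estimated by scaling the sky background by the fraction of the spectrum a filter passes. This is a rough sketch under stated assumptions: light pollution is spread evenly over a hypothetical 300 nm band (real sodium or LED lighting is not this uniform), the target's emission line is fully transmitted, and only shot noise is counted. `filtered_background` is an illustrative helper name and all photon counts are hypothetical.

```python
import math


def snr(signal: float, background: float) -> float:
    """Shot-noise-limited SNR (read noise and dark current ignored)."""
    return signal / math.sqrt(signal + background)


def filtered_background(background: float, band_nm: float, filter_nm: float) -> float:
    """Background remaining after a narrowband filter.

    Assumes the light pollution is spread evenly across a band of width band_nm.
    """
    return background * filter_nm / band_nm


signal = 400.0       # hypothetical H-alpha photons per pixel, assumed fully transmitted
city_sky = 10_000.0  # hypothetical broadband background photons per pixel
narrow_sky = filtered_background(city_sky, band_nm=300.0, filter_nm=7.0)

print(round(narrow_sky, 1))
print(round(snr(signal, city_sky), 2), round(snr(signal, narrow_sky), 2))
```

Under these assumptions a 7 nm filter removes more than 97% of the background, and the signal-to-noise ratio rises roughly fourfold even before the gain from using the full sensor area is counted.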

Monochrome image processing

Colour images in typical cameras are made by combining data from red, green and blue pixels.[7] To produce a colour image with a monochrome sensor, three monochrome images, each taken through a different filter, are mapped to the red, green and blue channels and combined. In astrophotography the choice of mapping varies; a common colour palette is the Hubble palette, often known as "SHO". In the Hubble palette, sulphur is mapped to the red channel, hydrogen-alpha to green, and oxygen to blue.[8]
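The SHO mapping can be sketched as stacking three aligned monochrome frames into RGB pixels. This minimal version assumes the frames are already registered (aligned) and stretches each channel linearly and independently to 0..1, which is a simplifying choice; real processing usually applies non-linear stretches and colour balancing. Images are plain lists of rows, and the pixel values are hypothetical.

```python
Image = list[list[float]]


def normalise(image: Image) -> Image:
    """Linearly rescale a monochrome image (list of rows) to the range 0..1."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    span = (hi - lo) or 1.0  # a perfectly flat image would otherwise divide by zero
    return [[(value - lo) / span for value in row] for row in image]


def sho_composite(sii: Image, ha: Image, oiii: Image) -> list[list[tuple[float, float, float]]]:
    """Combine three aligned narrowband images using the Hubble (SHO) palette.

    SII -> red, H-alpha -> green, OIII -> blue; each channel is normalised
    independently (a simplifying assumption).
    """
    red, green, blue = normalise(sii), normalise(ha), normalise(oiii)
    return [
        [(red[y][x], green[y][x], blue[y][x]) for x in range(len(red[0]))]
        for y in range(len(red))
    ]


# Hypothetical 1x2-pixel frames, already registered to one another.
sii = [[0.0, 10.0]]
ha = [[0.0, 20.0]]
oiii = [[5.0, 5.0]]
print(sho_composite(sii, ha, oiii))  # [[(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]]
```

Swapping the argument order (for example passing the hydrogen-alpha frame as red) gives other palettes, which is how the mapping can "vary" between images.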

Monochrome astrophotography also requires more calibration frames. Calibration frames record artefacts such as dust on the image sensor and filter, and light gradients caused by internal reflections in the optical train, so that these can be removed from the final image. Because a colour image requires three individual filters, and each filter can carry its own dust and reflections, a separate set of calibration frames (in particular flat frames) must be captured and applied for each filter during image processing. This increases the number of images that must be stored, requiring more storage space.[9]
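Per-filter calibration can be sketched with the common flat-field formula: calibrated = (light - dark) x mean(flat') / flat', where flat' = flat - flat_dark. This illustrative version assumes one master flat per filter and, for simplicity, a single master dark and flat dark shared across filters; all frame values and the dictionary keys are hypothetical.

```python
Image = list[list[float]]


def mean(image: Image) -> float:
    values = [value for row in image for value in row]
    return sum(values) / len(values)


def calibrate(light: Image, dark: Image, flat: Image, flat_dark: Image) -> Image:
    """Dark-subtract a light frame and divide by the normalised flat field."""
    flat_corrected = [[f - fd for f, fd in zip(f_row, fd_row)]
                      for f_row, fd_row in zip(flat, flat_dark)]
    flat_mean = mean(flat_corrected)
    # Multiplying by flat_mean / f is the same as dividing by the normalised flat.
    return [
        [(l - d) * flat_mean / f for l, d, f in zip(l_row, d_row, f_row)]
        for l_row, d_row, f_row in zip(light, dark, flat_corrected)
    ]


# Hypothetical master flats, one per filter: each filter has its own dust shadows.
master_flats = {
    "Ha": [[100.0, 50.0]],  # a dust shadow dims the right-hand pixel
    "OIII": [[80.0, 80.0]],
    "SII": [[100.0, 100.0]],
}
dark = [[10.0, 10.0]]     # master dark matching the light-frame exposure
flat_dark = [[0.0, 0.0]]  # master dark matching the flat exposure (assumed shared)
lights = {
    "Ha": [[110.0, 60.0]],
    "OIII": [[50.0, 50.0]],
    "SII": [[30.0, 30.0]],
}

calibrated = {
    name: calibrate(light, dark, master_flats[name], flat_dark)
    for name, light in lights.items()
}
print(calibrated)
```

The dust shadow in the hydrogen-alpha frame is removed only because that frame is divided by the hydrogen-alpha flat; applying the OIII flat instead would leave it in place, which is why each filter needs its own set.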

Monochrome photography also requires additional equipment. Because several filters are needed, amateur astrophotographers often use an electronic filter wheel. This holds multiple filters, and a computer can control the wheel to change filters automatically throughout the night.[10]
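Capture software typically works through a sequence that rotates the wheel between blocks of exposures. The sketch below is a hypothetical planner, not the interface of any real filter-wheel driver or sequencing program: it assumes the wheel moves before every block at a fixed time cost, and all timings are illustrative.

```python
def plan_sequence(filters: list[str], night_minutes: float, sub_exposure_minutes: float,
                  frames_per_block: int, filter_change_minutes: float) -> list[tuple[str, int]]:
    """Rotate through the filters in blocks until the night's time is used up.

    Hypothetical model: the wheel moves before every block and each move
    costs a fixed filter_change_minutes.
    """
    if not filters or sub_exposure_minutes <= 0 or frames_per_block <= 0:
        raise ValueError("need at least one filter and a positive exposure plan")
    block_cost = filter_change_minutes + frames_per_block * sub_exposure_minutes
    plan = []
    elapsed = 0.0
    while elapsed + block_cost <= night_minutes:
        plan.append((filters[len(plan) % len(filters)], frames_per_block))
        elapsed += block_cost
    return plan


def frames_per_filter(plan: list[tuple[str, int]]) -> dict[str, int]:
    """Total number of frames scheduled for each filter."""
    totals: dict[str, int] = {}
    for name, frames in plan:
        totals[name] = totals.get(name, 0) + frames
    return totals


plan = plan_sequence(["Ha", "OIII", "SII"], night_minutes=360, sub_exposure_minutes=5,
                     frames_per_block=6, filter_change_minutes=1)
print(len(plan))                # 11
print(frames_per_filter(plan))  # {'Ha': 24, 'OIII': 24, 'SII': 18}
```

Interleaving short blocks rather than shooting each filter in one long run spreads every filter across the night, so a cloudy spell or a change in sky conditions does not wipe out a single channel.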

References

1. Hirsch, Robert (2000). Seizing the Light: A History of Photography. McGraw-Hill. ISBN 9780697143617.
2. Wang, Peng; Menon, Rajesh (2015-11-20). "Ultra-high-sensitivity color imaging via a transparent diffractive-filter array and computational optics". Optica 2 (11): 933–939. doi:10.1364/OPTICA.2.000933. ISSN 2334-2536. Bibcode: 2015Optic...2..933W. https://www.osapublishing.org/optica/abstract.cfm?uri=optica-2-11-933.
3. "Hubble Captures a Perfect Storm of Turbulent Gases". http://hubblesite.org/contents/news-releases/2003/news-2003-13.
4. Bull, David (2014). Digital Picture Formats and Representations. Section 4.5.3. ISBN 9780124059061.
5. "Deep Sky Astrophotography in City Light Pollution | Results with DSLR Camera". 2018-10-12. https://astrobackyard.com/astrophotography-light-pollution/.
6. "Narrowband Imaging Primer | Beginners Guide to the Hubble Palette & More". https://astrobackyard.com/narrowband-imaging/.
7. Bayer, Bryce E., "Color imaging array", US patent 3971065, published 1976-07-20, assigned to Eastman Kodak Co.
8. "The Truth About Hubble, JWST, and False Color". https://asd.gsfc.nasa.gov/blueshift/index.php/2016/09/13/hubble-false-color/.
9. "Demystifying Flat-Frame Calibration". 2021-06-17. https://skyandtelescope.org/astronomy-blogs/imaging-foundations-richard-wright/demystifying-flat-frame-calibration/.
10. "Do You Need a Filter Wheel for Astrophotography? (LRGB Imaging)". https://astrobackyard.com/filter-wheel/.
