Bernoulli sampling

In the theory of finite population sampling, Bernoulli sampling is a sampling process where each element of the population is subjected to an independent Bernoulli trial which determines whether the element becomes part of the sample. An essential property of Bernoulli sampling is that all elements of the population have equal probability of being included in the sample.[1]

Bernoulli sampling is therefore a special case of Poisson sampling. In Poisson sampling each element of the population may have a different probability of being included in the sample. In Bernoulli sampling, the probability is equal for all the elements.

Because each element of the population is considered separately for the sample, the sample size is not fixed but rather follows a binomial distribution.

Example

The most basic Bernoulli method generates n random variates to extract a sample from a population of n items. Suppose you want to extract a given percentage pct of the population. The algorithm can be described as follows:[2]

    for each item in the set
        generate a random non-negative integer R
        if (R mod 100) < pct then
            select item
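The loop above can be sketched in Python. This is a minimal illustration, not a reference implementation: it draws a uniform variate on the unit interval and compares it with a probability `p`, instead of reducing an integer modulo 100. The names `bernoulli_sample` and `rng` are hypothetical.

```python
import random


def bernoulli_sample(items, p, rng=None):
    """Return the items selected by independent Bernoulli trials with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    rng = rng or random.Random()
    # rng.random() is uniform on [0, 1), so the comparison succeeds with probability p.
    return [item for item in items if rng.random() < p]


population = range(1000)
sample = bernoulli_sample(population, 0.2, random.Random(42))
print(len(sample))  # the size varies from run to run; about 200 on average
```

Passing a seeded `random.Random` makes a run reproducible. Without it, both the sample and its size change on every call.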

A percentage of 20%, say, is usually expressed as a probability $p = 0.2$. In that case, random variates are generated in the unit interval, and an item is selected when its variate is less than $p$. After running the algorithm, a sample of size $k$ will have been selected. One would expect to have $k \approx n \cdot p$, which is more and more likely as $n$ grows. In fact, it is possible to calculate the probability of obtaining a sample size of $k$ from the binomial distribution:

$$f(k, n, p) = \binom{n}{k} p^k (1-p)^{n-k}$$
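As a rough numerical check, the binomial probability of each sample size can be evaluated directly. The sketch below assumes a population of `n = 100` items and `p = 0.2`, both chosen only for illustration; `sample_size_probability` is a hypothetical helper name.

```python
from math import comb


def sample_size_probability(k, n, p):
    """Binomial probability that exactly k of n items are selected."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, p = 100, 0.2  # illustrative values, not taken from the article
probs = [sample_size_probability(k, n, p) for k in range(n + 1)]
mean = sum(k * q for k, q in enumerate(probs))
print(sum(probs), mean)  # the probabilities sum to 1 and the mean is n * p, up to rounding
```

For very large `n`, the product of a huge integer and a tiny float can overflow or underflow, so a log-space evaluation would be safer there.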

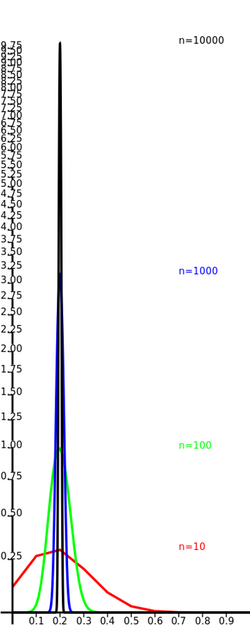

On the left this function is shown for four values of $n$ and a fixed value of $p$. In order to compare the values for different values of $n$, the $k$'s on the abscissa are scaled from $[0, n]$ to the unit interval, while the value of the function, on the ordinate, is multiplied by the inverse factor, so that the area under the graph maintains the same value; that area is related to the corresponding cumulative distribution function. The values are shown in logarithmic scale.

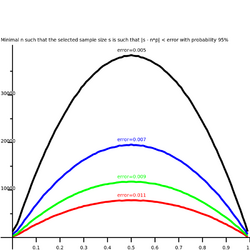

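The figure on the right shows the minimum values of $n$ that satisfy given error bounds with 95% probability. Given an error, the set of $k$'s within bounds can be described as follows:

$$K_{n,p} = \left\{ k \in \mathbb{N} : \left| \frac{k}{n} - p \right| < \mathrm{error} \right\}$$

The probability of ending up within $K_{n,p}$ is given again by the binomial distribution as:

$$\sum_{k \in K_{n,p}} \binom{n}{k} p^k (1-p)^{n-k}$$

The picture shows the lowest values of $n$ such that the sum is at least 0.95. For $p = 0$ and $p = 1$ the algorithm delivers exact results for all $n$'s. The $p$'s in between are obtained by bisection. Note that, if $100p$ is an integer percentage, an error of 0.005 guarantees that $100k/n$, rounded to the nearest integer, equals $100p$. Very large values of $n$ can be required for such an exact match.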
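The search for the lowest $n$ can be sketched as follows. This is an illustration under stated assumptions, not the procedure used to produce the figure: it scans $n$ upward one value at a time and sums the binomial probabilities of every $k$ with $|k/n - p| < \mathrm{error}$. The names `coverage`, `min_population`, `target` and `n_max` are hypothetical.

```python
from math import ceil, exp, floor, lgamma, log, log1p


def log_pmf(k, n, p):
    """Log of the binomial probability of k successes in n trials, for 0 < p < 1."""
    log_comb = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    return log_comb + k * log(p) + (n - k) * log1p(-p)


def coverage(n, p, error):
    """Probability that the sampled fraction k/n lies strictly within error of p."""
    if p in (0.0, 1.0):
        return 1.0  # the sample is deterministic: nothing or everything is selected
    lo = max(0, floor(n * (p - error)))
    hi = min(n, ceil(n * (p + error)))
    return sum(
        exp(log_pmf(k, n, p))
        for k in range(lo, hi + 1)
        if abs(k / n - p) < error
    )


def min_population(p, error, target=0.95, n_max=100_000):
    """Smallest n with coverage at least target, or None if none is found up to n_max."""
    for n in range(1, n_max + 1):
        if coverage(n, p, error) >= target:
            return n
    return None


print(min_population(0.5, 0.5))  # → 6, since 1 - 2 * 0.5**6 = 0.96875 >= 0.95
```

The scan stops at the first $n$ that reaches the target because the coverage probability is not monotone in $n$, so a later $n$ can fall below the target again. The scan is quadratic in the answer, so tight error bounds make it slow.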

References

- [1] Carl-Erik Särndal; Bengt Swensson; Jan Wretman (1992). Model Assisted Survey Sampling. ISBN 978-0-387-97528-3.

- [2] Voratas Kachitvichyanukul; Bruce W. Schmeiser (1 February 1988). "Binomial Random Variate Generation". Communications of the ACM 31 (2): 216–222. doi:10.1145/42372.42381. https://dl.acm.org/doi/10.1145/42372.42381.
