Communication-avoiding algorithm

Communication-avoiding algorithms minimize the movement of data within a memory hierarchy in order to improve running time and reduce energy consumption. They minimize the sum of two costs (in terms of time and energy): arithmetic and communication. Communication, in this context, refers to moving data, either between levels of memory or between multiple processors over a network. It is much more expensive than arithmetic.[1]

Formal theory

Two-level memory model

A common computational model in analyzing communication-avoiding algorithms is the two-level memory model:

- There is one processor and two levels of memory.

- Level 1 memory is infinitely large. Level 0 memory ("cache") has size $M$.

- In the beginning, input resides in level 1. In the end, the output resides in level 1.

- The processor can only operate on data in cache.

- The goal is to minimize data transfers between the two levels of memory.

Matrix multiplication

The following is Corollary 6.2 of Hong and Kung:[2]

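Theorem: Given matrices $A, B, C$ of sizes $n \times m$, $m \times k$, $n \times k$, the computation $AB + C$ has communication complexity $\Omega\left(\max\left(mkn/M^{1/2},\; mn + mk + nk\right)\right)$, where $M$ is the cache size.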

This lower bound is achievable by tiling matrix multiplication.

More general results for other numerical linear algebra operations are given by Ballard et al.[3] The following proof is from Demmel and Dinh.[4]

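We can draw the computation graph of $D = AB + C$, where $A, B, C$ have sizes $n \times m$, $m \times k$, $n \times k$, as a cube of lattice points, each of the form $(i, j, l)$ with $1 \le i \le n$, $1 \le j \le m$, $1 \le l \le k$. Since $D[i,l] = \sum_j A[i,j]B[j,l] + C[i,l]$, computing $AB + C$ requires the processor to visit each point within the cube at least once. So the problem becomes covering the $nmk$ lattice points with a minimal amount of communication.

If $M$ is large, then we can simply load all $mn + mk + nk$ entries and then write $nk$ entries. This is uninteresting.

If $M$ is small, then we can divide the minimal-communication algorithm into separate segments. During each segment, it performs exactly $M$ reads to cache, and any number of writes from cache.

During each segment, the processor has access to at most $2M$ different entries of $A, B, C$: at most $M$ already in cache when the segment starts, plus the $M$ read during it.

Let $E$ be the set of lattice points covered during this segment, and let $\pi_1(E), \pi_2(E), \pi_3(E)$ be its projections onto the three coordinate planes, which correspond to the entries of $A, B, C$ that are used. Then by the Loomis–Whitney inequality,

$$|E| \le \sqrt{|\pi_1(E)|\,|\pi_2(E)|\,|\pi_3(E)|}$$

with constraint $\sum_i |\pi_i(E)| \le 2M$.

By the inequality of arithmetic and geometric means, we have $|E| \le \left(\tfrac{2}{3}M\right)^{3/2}$, with the extremum reached when $|\pi_i(E)| = \tfrac{2}{3}M$ for each $i$.

Thus the arithmetic intensity is bounded above by $cM^{1/2}$ where $c = (2/3)^{3/2}$, and so the communication is bounded below by $\frac{nmk}{cM^{1/2}}$.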

Direct computation verifies that the tiling matrix multiplication algorithm reaches the lower bound.
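The direct computation can be sketched as follows. This is an illustrative count, not a reproduction of the cited proof: it assumes $A$, $B$, $C$ have sizes $n \times m$, $m \times k$, $n \times k$, that a tile side $b$ divides all three dimensions, and that three $b \times b$ tiles fit in cache ($3b^2 \le M$).

```latex
\begin{align*}
\text{reads of } A &= \frac{n}{b}\cdot\frac{k}{b}\cdot\frac{m}{b}\cdot b^{2} = \frac{nmk}{b},\\
\text{reads of } B &= \frac{n}{b}\cdot\frac{k}{b}\cdot\frac{m}{b}\cdot b^{2} = \frac{nmk}{b},\\
\text{reads and writes of } C &= 2\cdot\frac{n}{b}\cdot\frac{k}{b}\cdot b^{2} = 2nk,\\
\text{total} &= \frac{2nmk}{b} + 2nk
  = \frac{2\sqrt{3}\,nmk}{M^{1/2}} + 2nk
  \quad\text{for } b = (M/3)^{1/2}.
\end{align*}
```

Under these assumptions the total is $O(nmk/M^{1/2})$ whenever that term dominates $nk$, which matches the lower bound up to a constant factor.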

Motivation

Consider the following running-time model:[5]

- Measure of computation = Time per FLOP = γ

- Measure of communication = Time per word of data moved = β

⇒ Total running time = γ·(no. of FLOPs) + β·(no. of words)
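The model above can be written as a one-line function. The sketch below is only illustrative: the values chosen for γ and β and the operation counts are hypothetical, picked to show how the communication term can dominate when β >> γ.

```python
def total_running_time(num_flops, num_words, gamma, beta):
    """Two-term cost model: gamma * (no. of FLOPs) + beta * (no. of words moved)."""
    return gamma * num_flops + beta * num_words


# Hypothetical machine parameters (assumptions, not measurements).
GAMMA = 1e-11  # seconds per FLOP
BETA = 1e-9    # seconds per word moved

# Hypothetical workload: 2e9 FLOPs and 1e9 words moved.
flops = 2_000_000_000
words = 1_000_000_000

total = total_running_time(flops, words, GAMMA, BETA)
communication_share = BETA * words / total
print(f"total = {total:.3f} s, communication share = {communication_share:.1%}")
```

With these assumed parameters, almost all of the modelled time is spent moving data, even though the workload performs twice as many FLOPs as word transfers.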

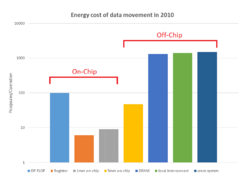

Because β >> γ, whether measured in time or in energy, communication cost dominates computation cost. Technological trends[6] indicate that the relative cost of communication is increasing on a variety of platforms, from cloud computing to supercomputers to mobile devices. The report also predicts that the gap between DRAM access time and FLOPs will increase 100× over the coming decade to balance power usage between processors and DRAM.[1]

| | FLOP rate (γ) | DRAM bandwidth (β) | Network bandwidth (β) |
|---|---|---|---|
| Annual improvement | 59% / year | 23% / year | 26% / year |

Energy consumption increases by orders of magnitude as we go higher in the memory hierarchy.[7]

United States president Barack Obama cited communication-avoiding algorithms in the FY 2012 Department of Energy budget request to Congress:[1]

New Algorithm Improves Performance and Accuracy on Extreme-Scale Computing Systems. On modern computer architectures, communication between processors takes longer than the performance of a floating-point arithmetic operation by a given processor. ASCR researchers have developed a new method, derived from commonly used linear algebra methods, to minimize communications between processors and the memory hierarchy, by reformulating the communication patterns specified within the algorithm. This method has been implemented in the TRILINOS framework, a highly-regarded suite of software, which provides functionality for researchers around the world to solve large scale, complex multi-physics problems.

Objectives

Communication-avoiding algorithms are designed with the following objectives:

- Reorganize algorithms to reduce communication across all memory hierarchies.

- Attain the lower bound on communication when possible.

The following simple example[1] demonstrates how these are achieved.

Matrix multiplication example

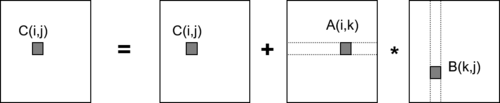

Let A, B and C be square n × n matrices. The following naive algorithm implements C = C + A * B:

    for i = 1 to n
        for j = 1 to n
            for k = 1 to n
                C(i,j) = C(i,j) + A(i,k) * B(k,j)

Arithmetic cost (time complexity): 2n³ (one multiplication and one addition per inner iteration), or O(n³).
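A runnable version of the naive loop nest might look like the following sketch. It assumes the matrices are stored as Python lists of lists with 0-based indices, and it counts one multiplication and one addition per inner iteration; the function name is illustrative.

```python
def matmul_naive(A, B, C):
    """Update C in place to C + A*B for n-by-n lists of lists.

    Returns the number of floating-point operations performed,
    counting one multiply and one add per inner iteration.
    """
    n = len(A)
    flops = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                flops += 2
    return flops


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[0, 0], [0, 0]]
flops = matmul_naive(A, B, C)
print(C, flops)
```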

Rewriting this algorithm with the communication cost labelled at each step:

    for i = 1 to n
        {read row i of A into fast memory} - n² reads
        for j = 1 to n
            {read C(i,j) into fast memory} - n² reads
            {read column j of B into fast memory} - n³ reads
            for k = 1 to n
                C(i,j) = C(i,j) + A(i,k) * B(k,j)
            {write C(i,j) back to slow memory} - n² writes

Fast memory may be defined as the local processor memory (CPU cache) of size M and slow memory may be defined as the DRAM.

Communication cost (reads/writes): n³ + 3n² or O(n³)
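The total can be checked by replaying the annotated loop nest and tallying words moved instead of doing arithmetic. This is a counting sketch only; the function name is illustrative and no real memory hierarchy is modelled.

```python
def naive_communication(n):
    """Count words moved between slow and fast memory by the annotated loops."""
    reads = 0
    writes = 0
    for i in range(n):
        reads += n          # read row i of A (n words)
        for j in range(n):
            reads += 1      # read C(i,j)
            reads += n      # read column j of B (n words)
            # the k-loop runs entirely in fast memory: no transfers
            writes += 1     # write C(i,j) back to slow memory
    return reads, writes


n = 7
reads, writes = naive_communication(n)
print(reads + writes, n**3 + 3 * n**2)
```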

Since total running time = γ·O(n³) + β·O(n³) and β >> γ, the communication cost is dominant. The blocked (tiled) matrix multiplication algorithm[1] reduces this dominant term:

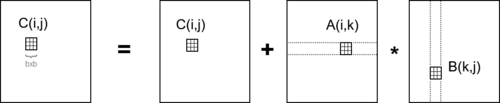

Blocked (tiled) matrix multiplication

Consider A, B and C to be n/b-by-n/b matrices of b-by-b sub-blocks where b is called the block size; assume three b-by-b blocks fit in fast memory.

    for i = 1 to n/b
        for j = 1 to n/b
            {read block C(i,j) into fast memory} - b² × (n/b)² = n² reads
            for k = 1 to n/b
                {read block A(i,k) into fast memory} - b² × (n/b)³ = n³/b reads
                {read block B(k,j) into fast memory} - b² × (n/b)³ = n³/b reads
                C(i,j) = C(i,j) + A(i,k) * B(k,j) - {do a matrix multiply on blocks}
            {write block C(i,j) back to slow memory} - b² × (n/b)² = n² writes

Communication cost: 2n³/b + 2n² reads/writes << 2n³ arithmetic cost
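The blocked loop nest can be sketched in plain Python as below. It assumes that b divides n and that matrices are lists of lists; block transfers are only counted, not actually performed, so the tile loops merely model which blocks would be resident in fast memory. The function name is illustrative.

```python
def matmul_blocked(A, B, C, b):
    """Update C in place to C + A*B using b-by-b tiles; return words moved."""
    n = len(A)
    assert n % b == 0, "this sketch assumes b divides n"
    words = 0
    for i0 in range(0, n, b):
        for j0 in range(0, n, b):
            words += b * b                    # read block C(i,j)
            for k0 in range(0, n, b):
                words += 2 * b * b            # read blocks A(i,k) and B(k,j)
                # multiply the two resident blocks into the C block
                for i in range(i0, i0 + b):
                    for j in range(j0, j0 + b):
                        acc = C[i][j]
                        for k in range(k0, k0 + b):
                            acc += A[i][k] * B[k][j]
                        C[i][j] = acc
            words += b * b                    # write block C(i,j) back
    return words


n, b = 4, 2
A = [[i + j for j in range(n)] for i in range(n)]
B = [[i * j + 1 for j in range(n)] for i in range(n)]
C = [[0] * n for _ in range(n)]
words = matmul_blocked(A, B, C, b)
print(words, 2 * n**3 // b + 2 * n**2)
```

The counted transfers agree with 2n³/b + 2n² for this small case, and the result equals the ordinary product because blocking only reorders the same multiply-add operations.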

Making b as large as possible, subject to

- 3b² ≤ M

the communication cost becomes

- 2√3·n³/√M + 2n², which attains the communication lower bound Ω(no. of FLOPs / √M)
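As a small numerical illustration, the block size can be chosen from the fast-memory size and substituted into the cost 2n³/b + 2n². The memory sizes below are hypothetical and are measured in words; the helper names are illustrative.

```python
import math


def largest_block_size(M):
    """Largest integer b such that three b-by-b blocks fit in M words (3*b*b <= M)."""
    return math.isqrt(M // 3)


def blocked_words_moved(n, b):
    """Words moved by the blocked algorithm: 2*n^3/b + 2*n^2."""
    return 2 * n**3 / b + 2 * n**2


n = 1024
for M in (3 * 64**2, 3 * 128**2):  # hypothetical fast-memory sizes
    b = largest_block_size(M)
    print(f"M = {M}, b = {b}, words moved = {blocked_words_moved(n, b):.0f}")
```

Quadrupling M doubles the usable block size and therefore halves the cubic term, which is the 1/√M dependence in the bound.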

Previous approaches for reducing communication

Most approaches investigated in the past to address this problem rely on scheduling or tuning techniques that aim to overlap communication with computation. However, this can improve performance by at most a factor of two. Ghosting is a different technique for reducing communication, in which a processor redundantly stores and computes data from neighboring processors for use in future computations. Cache-oblivious algorithms represent a different approach, introduced in 1999 for fast Fourier transforms[8] and then extended to graph algorithms, dynamic programming, etc. They have also been applied to several operations in linear algebra,[9][10][11] such as dense LU and QR factorizations. The design of architecture-specific algorithms is another approach to reducing communication in parallel algorithms, and the literature contains many examples of algorithms adapted to a given communication topology.[12]

See also

- Data locality

References

1. Demmel, Jim. "Communication avoiding algorithms". 2012 SC Companion: High Performance Computing, Networking Storage and Analysis. IEEE, 2012.

2. Jia-Wei, Hong; Kung, H. T. (1981). "I/O complexity". Proceedings of the thirteenth annual ACM symposium on Theory of computing - STOC '81. New York, New York, USA: ACM Press. pp. 326–333. doi:10.1145/800076.802486. http://dx.doi.org/10.1145/800076.802486.

3. Ballard, G.; Carson, E.; Demmel, J.; Hoemmen, M.; Knight, N.; Schwartz, O. (May 2014). "Communication lower bounds and optimal algorithms for numerical linear algebra". Acta Numerica 23: 1–155. doi:10.1017/s0962492914000038. ISSN 0962-4929. http://dx.doi.org/10.1017/s0962492914000038.

4. Demmel, James; Dinh, Grace (2018-04-24). "Communication-Optimal Convolutional Neural Nets". arXiv:1802.06905 [cs.DS].

5. Demmel, James; Yelick, Kathy. "Communication Avoiding (CA) and Other Innovative Algorithms". The Berkeley Par Lab: Progress in the Parallel Computing Landscape: 243–250.

6. Bergman, Keren, et al. "Exascale computing study: Technology challenges in exascale computing systems". Defense Advanced Research Projects Agency Information Processing Techniques Office (DARPA IPTO), Tech. Rep 15 (2008).

7. Shalf, John; Dosanjh, Sudip; Morrison, John. "Exascale computing technology challenges". High Performance Computing for Computational Science–VECPAR 2010. Springer Berlin Heidelberg, 2011. 1–25.

8. Frigo, M.; Leiserson, C. E.; Prokop, H.; Ramachandran, S. "Cache-oblivious algorithms". FOCS '99: Proceedings of the 40th Annual Symposium on Foundations of Computer Science, 1999. IEEE Computer Society.

9. Toledo, S. "Locality of reference in LU Decomposition with partial pivoting". SIAM J. Matrix Anal. Appl., vol. 18, no. 4, 1997.

10. Gustavson, F. "Recursion Leads to Automatic Variable Blocking for Dense Linear-Algebra Algorithms". IBM Journal of Research and Development, vol. 41, no. 6, pp. 737–755, 1997.

11. Elmroth, E.; Gustavson, F.; Jonsson, I.; Kagstrom, B. "Recursive blocked algorithms and hybrid data structures for dense matrix library software". SIAM Review, vol. 46, no. 1, pp. 3–45, 2004.

12. Grigori, Laura. "Introduction to communication avoiding linear algebra algorithms in high performance computing".
