Deviation (statistics)

In mathematics and statistics, deviation is a measure of the difference between an observed value of a variable and another designated value, frequently the mean of that variable. Deviations with respect to the population mean (or "true value") and the sample mean are called errors and residuals, respectively. The sign of the deviation reports the direction of that difference: the deviation is positive when the observed value exceeds the reference value. The absolute value of the deviation indicates the size or magnitude of the difference. In a given sample, there are as many deviations as sample points. Summary statistics can be derived from a set of deviations, such as the standard deviation and the mean absolute deviation, which are measures of dispersion, and the mean signed deviation, which is a measure of bias.[1]

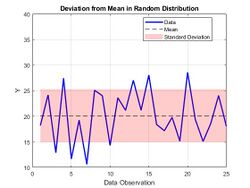

The deviation of each data point is calculated by subtracting the mean of the data set from the individual data point. Mathematically, the deviation d of a data point x in a data set is given by

$$d = x - \bar{x}$$

where $\bar{x}$ is the mean of the data set.

This calculation represents the "distance" of a data point from the mean and provides information about how much individual values vary from the average. Positive deviations indicate values above the mean, while negative deviations indicate values below the mean.[1]
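
A minimal Python sketch of this definition follows. It uses a small hypothetical data set, and `deviations` is an illustrative helper name rather than a library function. Values above the mean come out positive and values below it come out negative.

```python
from statistics import fmean

def deviations(data):
    """Return the deviation d = x - mean for each data point x."""
    m = fmean(data)
    return [x - m for x in data]

data = [2.0, 4.0, 4.0, 5.0, 10.0]  # hypothetical values; mean is 5.0
print(deviations(data))  # [-3.0, -1.0, -1.0, 0.0, 5.0]
```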

The sum of squared deviations is a key component in the calculation of variance, a measure of the spread or dispersion of a data set. Variance is calculated by averaging the squared deviations: the sum of squared deviations is divided by n for a population, or by n − 1 for the usual unbiased estimate from a sample of size n. Deviation is a fundamental concept in understanding the distribution and variability of data points in statistical analysis.[1]
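
The following sketch sums the squared deviations and divides by n, or by n − 1 for a sample estimate. The helper names and data are hypothetical. The final line cross-checks the results against the standard library's `statistics.pvariance` and `statistics.variance`.

```python
import statistics

def sum_of_squared_deviations(data):
    """Sum of (x - mean)^2 over the data set."""
    m = sum(data) / len(data)
    return sum((x - m) ** 2 for x in data)

def variance(data, sample=False):
    """Average the squared deviations: divide by n, or by n - 1 for a sample estimate."""
    n = len(data)
    return sum_of_squared_deviations(data) / (n - 1 if sample else n)

data = [2.0, 4.0, 4.0, 5.0, 10.0]  # hypothetical values
print(sum_of_squared_deviations(data))  # 36.0
print(variance(data))                   # 7.2
print(variance(data, sample=True))      # 9.0
# Cross-check against the standard library.
print(statistics.pvariance(data), statistics.variance(data))  # 7.2 9.0
```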

Types

Signed deviations

A deviation that is a difference between an observed value and the true value of a quantity of interest (where the true value denotes the expected value, such as the population mean) is an error.[2]

A deviation that is the difference between the observed value and an estimate of the true value (e.g. the sample mean) is a residual. These concepts are applicable for data at the interval and ratio levels of measurement.[3]
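
The distinction can be sketched in Python under one assumption: the population mean is treated as known, and `mu = 10.0` is purely hypothetical. Errors are measured from that true value, while residuals are measured from the sample mean. Residuals sum to zero by construction, and errors generally do not.

```python
from statistics import fmean

def errors(sample, true_value):
    """Deviations from the true value (here an assumed, known population mean)."""
    return [x - true_value for x in sample]

def residuals(sample):
    """Deviations from an estimate of the true value, here the sample mean."""
    m = fmean(sample)
    return [x - m for x in sample]

mu = 10.0  # hypothetical: assume the population mean is known
sample = [9.0, 12.0, 11.0, 8.0, 13.0]  # hypothetical observations

print(errors(sample, mu))       # [-1.0, 2.0, 1.0, -2.0, 3.0]
print(sum(errors(sample, mu)))  # 3.0: errors need not sum to zero
print(abs(sum(residuals(sample))) < 1e-9)  # True: residuals sum to zero
```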

Unsigned or absolute deviation

Absolute deviation in statistics measures the magnitude of the difference between an individual data point and a central value, typically the mean or median of a dataset. It is the absolute value of the difference between the data point and the central value. Averaging these absolute deviations over all data points gives the average absolute deviation.[4] The formula is expressed as follows:

$$D_i = |x_i - m(X)|$$

where

- $D_i$ is the absolute deviation,

- $x_i$ is the data element,

- $m(X)$ is the chosen measure of central tendency of the data set, sometimes the mean ($\bar{x}$), but most often the median.

The average absolute deviation (AAD) in statistics is a measure of the dispersion or spread of a set of data points around a central value, usually the mean or median. It is calculated by taking the average of the absolute differences between each data point and the chosen central value. AAD provides a measure of the typical magnitude of deviations from the central value in a dataset, giving insights into the overall variability of the data.[5]
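
A short Python sketch of $D_i = |x_i - m(X)|$ and of the average absolute deviation follows. It uses hypothetical data and illustrative helper names. By default the center is the median, and the mean can be passed instead. The median minimizes the average absolute deviation, so the value about the median is never larger than the value about the mean.

```python
from statistics import fmean, median

def absolute_deviations(data, center):
    """D_i = |x_i - m(X)| for a chosen central value m(X)."""
    return [abs(x - center) for x in data]

def average_absolute_deviation(data, center_fn=median):
    """Average of the absolute deviations about center_fn(data)."""
    center = center_fn(data)
    return fmean(absolute_deviations(data, center))

data = [2.0, 4.0, 4.0, 5.0, 10.0]  # hypothetical values; median 4.0, mean 5.0
print(absolute_deviations(data, median(data)))           # [2.0, 0.0, 0.0, 1.0, 6.0]
print(average_absolute_deviation(data))                  # 1.8 (about the median)
print(average_absolute_deviation(data, center_fn=fmean)) # 2.0 (about the mean)
```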

Least absolute deviation (LAD) is a statistical method used in regression analysis to estimate the coefficients of a linear model. Unlike the more common least squares method, which minimizes the sum of squared vertical distances (residuals) between the observed and predicted values, the LAD method minimizes the sum of the absolute vertical distances.

In the context of linear regression, if $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$ are the data points, and a and b are the coefficients to be estimated for the linear model

$$y = b x + a,$$

the least absolute deviation estimates (a and b) are obtained by minimizing the sum

$$S = \sum_{i=1}^{n} |y_i - b x_i - a|.$$

The LAD method is less sensitive to outliers compared to the least squares method, making it a robust regression technique in the presence of skewed or heavy-tailed residual distributions.[6]
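
The following Python sketch uses a deliberately simple exact method for small data sets. Some minimizer of the LAD sum always passes through at least two data points with distinct x values. The sketch therefore tries every line through a pair of points and keeps the one with the smallest sum $S$. The brute-force search is an illustrative choice with O(n³) cost. Practical implementations usually solve LAD as a linear program or by iteratively reweighted least squares. The data, including the outlier, are hypothetical, and an ordinary least-squares fit is included for comparison.

```python
from itertools import combinations

def lad_fit(xs, ys):
    """Least absolute deviation fit of y = b*x + a by brute force over point pairs.

    Some optimal LAD line passes through two data points with distinct x,
    so we try each such line and keep the smallest sum of |y_i - b*x_i - a|.
    Cost is O(n^3): only for small illustrative data sets.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue  # vertical line: not of the form y = b*x + a
        b = (y2 - y1) / (x2 - x1)
        a = y1 - b * x1
        s = sum(abs(y - b * x - a) for x, y in zip(xs, ys))
        if best is None or s < best[0]:
            best = (s, a, b)
    if best is None:
        raise ValueError("need at least two distinct x values")
    _, a, b = best
    return a, b

def ols_fit(xs, ys):
    """Ordinary least squares fit of y = b*x + a, for comparison."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    return my - b * mx, b

xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 2.0, 3.0, 4.0, 20.0]  # hypothetical data; the last point is an outlier
print(lad_fit(xs, ys))  # (0.0, 1.0): intercept a, slope b
print(ols_fit(xs, ys))  # (-6.0, 4.0)
```

In this example the LAD line follows the four collinear points and ignores the outlier. The least-squares slope is pulled from 1 to 4.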

Summary statistics

Mean signed deviation

For an unbiased estimator, the signed deviations of the estimates from the unobserved population parameter average to zero over an arbitrarily large number of samples. By contrast, the average of the signed deviations of values from the sample mean is always zero by construction. The average signed deviation from another measure of central tendency, such as the sample median, need not be zero.

Mean signed deviation is a statistical measure of the average signed difference between a set of values and a reference value. It is calculated by taking the arithmetic mean of the signed differences between each data point and the reference. Signed deviations from the sample mean always average to zero. The measure is therefore informative only when the reference is something other than the sample mean, such as a true, accepted, or predicted value, in which case it indicates systematic bias.

The term "signed" indicates that the deviations are considered with their respective signs, that is, whether each value lies above or below the reference. Positive deviations (above the reference) and negative deviations (below the reference) can therefore cancel. The mean signed deviation reports the direction and average size of any systematic offset, not the spread of the data.[3]
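
A small Python illustration of these points follows. The readings and the accepted value are hypothetical, and `mean_signed_deviation` is an illustrative helper name. Measured from the sample mean the result is exactly zero. Measured from the median it is not. Measured from an external accepted value it shows a systematic offset.

```python
from statistics import fmean, median

def mean_signed_deviation(values, reference):
    """Arithmetic mean of the signed differences (value - reference)."""
    return fmean([v - reference for v in values])

readings = [340.0, 345.0, 346.0, 344.0]  # hypothetical repeated measurements
accepted = 343.0                          # hypothetical accepted value

print(mean_signed_deviation(readings, fmean(readings)))   # 0.0 (always, by construction)
print(mean_signed_deviation(readings, median(readings)))  # -0.75
print(mean_signed_deviation(readings, accepted))          # 0.75 (readings run high on average)
```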

Dispersion

Statistics of the distribution of deviations are used as measures of statistical dispersion.

- Standard deviation is a widely used measure of the spread or dispersion of a dataset. It quantifies the typical amount by which individual data points deviate from the mean. It is the square root of the variance (the average of the squared deviations), so it is expressed in the same units as the data. Squaring the deviations gives it desirable mathematical properties but also makes it sensitive to extreme values, so it is not robust.[7]

- Average absolute deviation is a measure of dispersion that is less influenced by extreme values than the standard deviation. It is calculated by finding the absolute difference between each data point and the mean, summing these absolute differences, and then dividing by the number of observations. Because the deviations are not squared, large deviations carry less weight, which makes this metric less sensitive to outliers than the standard deviation.[8]

- Median absolute deviation is a robust statistic that employs the median, rather than the mean, to measure the spread of a dataset. It is calculated by finding the absolute difference between each data point and the median, then computing the median of these absolute differences. This makes median absolute deviation less sensitive to outliers, offering a robust alternative to standard deviation.[9]

- Maximum absolute deviation is a straightforward measure of the maximum difference between any individual data point and the mean of the dataset. However, it is highly non-robust, as it can be disproportionately influenced by a single extreme value. This metric may not provide a reliable measure of dispersion when dealing with datasets containing outliers.[8]
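
To compare robustness, the following sketch computes the four measures before and after one value is replaced by an outlier. The data are hypothetical. The median absolute deviation here is the unscaled version, with no consistency constant. `pstdev` is the standard library's population standard deviation.

```python
from statistics import fmean, median, pstdev

def dispersion_summary(data):
    """Four deviation-based dispersion measures for a data set."""
    m = fmean(data)
    med = median(data)
    return {
        "standard deviation": pstdev(data),  # population SD (divides by n)
        "average absolute deviation": fmean([abs(x - m) for x in data]),
        "median absolute deviation": median([abs(x - med) for x in data]),  # unscaled
        "maximum absolute deviation": max(abs(x - m) for x in data),
    }

clean = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]     # hypothetical data
outlier = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 90.0]  # same, last value replaced

before, after = dispersion_summary(clean), dispersion_summary(outlier)
for name in before:
    print(f"{name}: {before[name]:.3f} -> {after[name]:.3f}")
```

On the clean data the four measures are 2.0, 1.5, 0.5 and 4.0. After the substitution, the median absolute deviation stays at 0.5. The other three grow more than tenfold, and the maximum absolute deviation grows the most.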

Normalization

Deviations, which measure the difference between observed values and some reference point, inherently carry units corresponding to the measurement scale used. For example, if lengths are being measured, deviations would be expressed in units like meters or feet. To make deviations unitless and facilitate comparisons across different datasets, one can nondimensionalize them.

One common method involves dividing deviations by a measure of scale (statistical dispersion). Dividing by the population standard deviation is called standardizing, and dividing by the sample standard deviation is called studentizing (e.g., the studentized residual).
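
The following Python sketch illustrates both forms of scaling. The population parameters `mu = 5.0` and `sigma = 2.0` are hypothetical values assumed to be known. `studentize` is the simple form that divides deviations from the sample mean by the sample standard deviation. The regression studentized residual also accounts for leverage and is not shown. The last line checks that the studentized values are the same whether the lengths are recorded in meters or centimeters.

```python
from statistics import fmean, stdev

def standardize(data, mu, sigma):
    """Deviations from a known population mean mu, divided by the population SD sigma."""
    return [(x - mu) / sigma for x in data]

def studentize(data):
    """Deviations from the sample mean, divided by the sample SD (n - 1 denominator)."""
    m, s = fmean(data), stdev(data)
    return [(x - m) / s for x in data]

lengths_m = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical lengths in meters
lengths_cm = [100 * x for x in lengths_m]            # the same lengths in centimeters

print(standardize(lengths_m, mu=5.0, sigma=2.0))
# [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]

# Studentized values do not depend on the unit of measurement.
print(all(abs(a - b) < 1e-12 for a, b in zip(studentize(lengths_m), studentize(lengths_cm))))  # True
```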

Another approach to nondimensionalization focuses on scaling by location rather than dispersion. The percent deviation offers an illustration of this method, calculated as the difference between the observed value and the accepted value, divided by the accepted value, and then multiplied by 100%. By scaling the deviation based on the accepted value, this technique allows for expressing deviations in percentage terms, providing a clear perspective on the relative difference between the observed and accepted values. Both methods of nondimensionalization serve the purpose of making deviations comparable and interpretable beyond the specific measurement units.[10]
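
Written as a formula, the percent deviation is $(x_{\text{observed}} - x_{\text{accepted}}) / x_{\text{accepted}} \times 100\%$. A minimal Python version follows, with a hypothetical mass measured against a hypothetical accepted value in two different units. The result is the same in both units, apart from rounding. The function raises an error for an accepted value of zero, where the ratio is undefined.

```python
def percent_deviation(observed, accepted):
    """(observed - accepted) / accepted, expressed as a percentage."""
    if accepted == 0:
        raise ValueError("percent deviation is undefined for an accepted value of 0")
    return (observed - accepted) / accepted * 100.0

# Hypothetical mass measured against an accepted value, in two different units.
print(percent_deviation(52.0, 50.0))              # 4.0 (grams)
print(round(percent_deviation(0.052, 0.050), 9))  # 4.0 (kilograms)
```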

Examples

In one example, a series of measurements of the speed of sound in a particular medium is taken. The accepted or expected value for the speed of sound in this medium, based on theoretical calculations, is 343 meters per second.

Now, during an experiment, multiple measurements are taken by different researchers. Researcher A measures the speed of sound as 340 meters per second, resulting in a deviation of −3 meters per second from the expected value. Researcher B, on the other hand, measures the speed as 345 meters per second, resulting in a deviation of +2 meters per second.

In this scientific context, deviation helps quantify how individual measurements differ from the theoretically predicted or accepted value. It provides insights into the accuracy and precision of experimental results, allowing researchers to assess the reliability of their data and potentially identify factors contributing to discrepancies.

In another example, suppose a chemical reaction is expected to yield 100 grams of a specific compound based on stoichiometry. However, in an actual laboratory experiment, several trials are conducted with different conditions.

In Trial 1, the actual yield is measured to be 95 grams, resulting in a deviation of −5 grams from the expected yield. In Trial 2, the actual yield is measured to be 102 grams, resulting in a deviation of +2 grams. These deviations from the expected value provide valuable information about the efficiency and reproducibility of the chemical reaction under different conditions.
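
The arithmetic in both examples can be checked with the short Python sketch below. `signed_and_percent_deviation` is an illustrative helper name. The observed and accepted values are the ones stated in the text. Each measurement gets its signed deviation from the accepted value and the corresponding percent deviation.

```python
def signed_and_percent_deviation(observed, accepted):
    """Return (observed - accepted, percent deviation relative to accepted)."""
    d = observed - accepted
    return d, d / accepted * 100.0

# (observed, accepted) pairs from the two examples in the text.
cases = {
    "Researcher A (m/s)": (340.0, 343.0),
    "Researcher B (m/s)": (345.0, 343.0),
    "Trial 1 (g)": (95.0, 100.0),
    "Trial 2 (g)": (102.0, 100.0),
}
for label, (observed, accepted) in cases.items():
    d, pct = signed_and_percent_deviation(observed, accepted)
    print(f"{label}: deviation {d:+.0f}, percent deviation {pct:+.2f}%")
# Researcher A (m/s): deviation -3, percent deviation -0.87%
# Researcher B (m/s): deviation +2, percent deviation +0.58%
# Trial 1 (g): deviation -5, percent deviation -5.00%
# Trial 2 (g): deviation +2, percent deviation +2.00%
```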

Scientists can analyze these deviations to optimize reaction conditions, identify potential sources of error, and improve the overall yield and reliability of the process. The concept of deviation is crucial in assessing the accuracy of experimental results and making informed decisions to enhance the outcomes of scientific experiments.

See also

- Anomaly (natural sciences)

- Squared deviations

- Deviate (statistics)

- Variance

References

- [1] Lee, Dong Kyu; In, Junyong; Lee, Sangseok (2015). "Standard deviation and standard error of the mean". Korean Journal of Anesthesiology 68 (3): 220. doi:10.4097/kjae.2015.68.3.220. ISSN 2005-6419. PMC 4452664. http://dx.doi.org/10.4097/kjae.2015.68.3.220.

- [2] Livingston, Edward H. (June 2004). "The mean and standard deviation: what does it all mean?". Journal of Surgical Research 119 (2): 117–123. doi:10.1016/j.jss.2004.02.008. ISSN 0022-4804. https://doi.org/10.1016/j.jss.2004.02.008.

- [3] Dodge, Yadolah, ed. (2003-08-07). The Oxford Dictionary Of Statistical Terms. Oxford University Press, Oxford. ISBN 978-0-19-850994-3. http://dx.doi.org/10.1093/oso/9780198509943.001.0001.

- [4] Konno, Hiroshi; Koshizuka, Tomoyuki (2005-10-01). "Mean-absolute deviation model". IIE Transactions 37 (10): 893–900. doi:10.1080/07408170591007786. ISSN 0740-817X. http://www.tandfonline.com/doi/abs/10.1080/07408170591007786.

- [5] Pham-Gia, T.; Hung, T. L. (2001-10-01). "The mean and median absolute deviations". Mathematical and Computer Modelling 34 (7): 921–936. doi:10.1016/S0895-7177(01)00109-1. ISSN 0895-7177. https://www.sciencedirect.com/science/article/pii/S0895717701001091.

- [6] Chen, Kani; Ying, Zhiliang (1996-04-01). "A counterexample to a conjecture concerning the Hall-Wellner band". The Annals of Statistics 24 (2). doi:10.1214/aos/1032894456. ISSN 0090-5364. http://dx.doi.org/10.1214/aos/1032894456.

- [7] "2. Mean and standard deviation". The BMJ. 2020-10-28. https://www.bmj.com/about-bmj/resources-readers/publications/statistics-square-one/2-mean-and-standard-deviation.

- [8] Pham-Gia, T.; Hung, T. L. (2001-10-01). "The mean and median absolute deviations". Mathematical and Computer Modelling 34 (7): 921–936. doi:10.1016/S0895-7177(01)00109-1. ISSN 0895-7177. https://www.sciencedirect.com/science/article/pii/S0895717701001091.

- [9] Jones, Alan R. (2018-10-09). Probability, Statistics and Other Frightening Stuff. Routledge. p. 73. ISBN 978-1-351-66138-6. https://books.google.com/books?id=OvtsDwAAQBAJ.

- [10] Freedman, David; Pisani, Robert; Purves, Roger (2007). Statistics (4th ed.). New York: Norton. ISBN 978-0-393-93043-6.
