Event detection for WSN

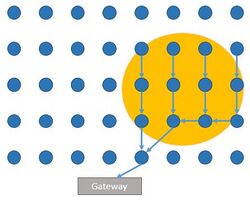

Wireless sensor networks (WSN) are a spatially distributed network of autonomous sensors used for monitoring an environment. Energy cost is a major limitation for WSN requiring the need for energy efficient networks and processing. One of major energy costs in WSN is the energy spent on communication between nodes and it is sometimes desirable to only send data to a gateway node when an event of interest is triggered at a sensor. Sensors will then only open communication during a probable event, saving on communication costs. Fields interested in this type of network include surveillance, home automation, disaster relief, traffic control, health care and more.

Energy savings come from the fact that nodes only communicate back to the gateway when there is an event to report. This may be difficult in dynamic environments where much of the data collected may not be of interest. To overcome this issue constraints on what defines an event are relaxed and usually modeled as a set of thresholds or probabilities.

Event detection falls under three main categories: threshold based, supervised and unsupervised.[1]

Threshold based detection

Threshold based detection is a simple way events may be detected through the use of various parameters that can hint as to whether an event has occurred or not. Data collected at a sensor node, also called the data-event, is analyzed to see if it has reached a certain threshold point to be considered an event. For example, a light detector may only report back to a gateway if it detects light above a certain intensity. The data-event can also be the result of a combination of outputs from different types of sensors that either collaborate at the gateway or through small hop peer-to-peer communication. Two important parameters that affect the effectiveness of the detection is sampling rate and event area.[2] The sampling rate will depend on whether events occur over long periods of time like tides in the ocean or over short periods of time such as cars passing a building. The event area will determine how many sensors need to report values above a threshold value for the likelihood of an event to be high enough to be considered.

If sensors collect multiple data points at a given time then another method of using threshold based detection is to measure the variance of the data and only send data to a gateway node if the variance reaches a certain threshold. Events may occur in only a small portion of the data set. In this case it is important to break the data into smaller segments and take into account the variance of each individual segment. Consider an N-dimensional data set that can be divided into four equal quadrants. The sum of absolute difference can be calculated for all the samples in that section and can be used as an approximation of the variance of that quadrant. Below is what the equation would look like for one quadrant.

[math]\displaystyle{ V_1=\sum_{\mathbf{s}}|I_{(\mathbf{s})}-Iref_{(\mathbf{s})}| }[/math] , where [math]\displaystyle{ 0 \le s_i \le \frac{m}{2} }[/math] , where [math]\displaystyle{ \mathbf{s} }[/math] is the N-dimensional sampled area in the region of support ranging from 0 to [math]\displaystyle{ m }[/math] in each dimension, [math]\displaystyle{ I_{(s)} }[/math] is the set of sampled points at point [math]\displaystyle{ \mathbf{s} }[/math], and [math]\displaystyle{ Iref_{(s)} }[/math] is the reference set of data points.

The sum of absolute difference above is one quadrant of the total space sampled and can represent the pseudo-variance of a subset of the data. By collecting all the variances for the different quadrants it is more likely to detect the occurrence of an event even if the event only affects a portion of the total region of support.[3]

Supervised detection

Supervised detection is when events are known ahead of time and can be described by patterns that can be compared to live data. It requires some information to be known about the events in question and the environment a priori. This is usually done by taking sample measurements, or training vectors, across the network during an event and creating a signature of what the data looks like.

Classifying an event can be generally described as the probability that an observed measurement x was a result of event ω. Given a set of N-dimensional vectors [math]\displaystyle{ \{ \mathbf{x} \} }[/math] and a predetermined set of events [math]\displaystyle{ \{ \mathbf{\omega} \} }[/math], the goal is to achieve the minimum error of misclassification, or Bayes error. The sensor needs to decide if [math]\displaystyle{ \mathbf{x} }[/math] belongs to [math]\displaystyle{ \omega_i }[/math], by ensuring that [math]\displaystyle{ p(\omega_{i}|\mathbf{x}) \gt p(\omega_{j}|\mathbf{x}) }[/math] for [math]\displaystyle{ j \ne i }[/math].[4] Once the most probable event has been determined and the probability of [math]\displaystyle{ \mathbf{x} }[/math] belonging to an event is calculated it can be checked against a threshold. If the probability of an event is large enough then the data is forwarded to the gateway node. Below are a few examples of algorithms that solve the optimal Bayes classifier. They all require a set of training vectors to simulate or re-enact events of interest.

k-Nearest neighbor (k-NN) classifier

The k-NN algorithm is a well known pattern recognition algorithm where a set of predetermined prototypes {pk} are used during the sample, or testing phase, of a supposed event. The prototypes model the events that are of interest in the application. The distance between each test vector and each prototype is calculated and the k test vectors closest to the prototype vectors are taken as the most likely classification or group of classifications. From there the probability that x belongs to the prototype event can be calculated. This approach, however, requires much memory and processing power as the number of prototypes increases and thus it is not a very practical choice for WSNs. It does however act as a good baseline to gauge performance of other classifiers since it is well known and that probability of misclassification when k=1 approaches twice the optimal Bayes error.[5]

Maximum Likelihood Classifier

The Maximum Likelihood Classifier models the distribution of training vectors from the same class as a mixture of Gaussian density functions. The probability of a given training vector x, given a class ωi is

[math]\displaystyle{ p(\mathbf{x}|w_i)=G(\mathbf{x}|\theta_i)=\sum_k \alpha_{ik}|\mathbf{\Lambda}_{ik}|^{-N/2}exp(-1/2(\mathbf{x}-\mathbf{m}_{ik})^T\mathbf{\Lambda}_{ik}^{-1}(\mathbf{x}-\mathbf{m}_{ik}) }[/math]

where [math]\displaystyle{ \theta_i=[\mathbf{\alpha}_{i1},...\mathbf{\alpha}_{iP},\mathbf{m}_{i1},...\mathbf{m}_{iP},\mathbf{\Lambda}_{i1},...\mathbf{\Lambda}_{iP}] }[/math] are the mixture, mean, and co-variance matrix parameters of the P mixture densities corresponding to class ωi. Identifying the matrix parameters can be done through other algorithms for finding the mixture densities of the training vectors, such as the k-means algorithm or the expectation-maximization algorithm.[4]

Support vector machine classifier

A support vector machine maps a set of linear transformations [math]\displaystyle{ \{\phi(\mathbf{x}) \}_{i=1}^M }[/math] from the N-dimensional input vector to a higher M-dimensional feature space. A linear classifier can be described as a determinant function [math]\displaystyle{ \mathfrak{g}(\mathbf{x}) }[/math] that satisfies [math]\displaystyle{ \mathfrak{g}_i(\mathbf{x}) \gt \mathfrak{g}_j(\mathbf{x}) }[/math] , if [math]\displaystyle{ p(\omega_{i}|\mathbf{x}) \gt p(\omega_{j}|\mathbf{x}) }[/math] for [math]\displaystyle{ j \ne i }[/math].

The linear classifier for this support vector machine classifier is,

[math]\displaystyle{ \mathfrak{g}(\mathbf{x}) = \sum_{i=1}^Q \alpha_i K(\mathbf{x}, \mathbf{x}_i)+b }[/math] ,where [math]\displaystyle{ K(\mathbf{x}, \mathbf{x}_i) = \sum_{j=1}^M \phi_j(\mathbf{x})\phi_i(\mathbf{x}_i) }[/math], and [math]\displaystyle{ b }[/math] is the bias parameter and [math]\displaystyle{ \alpha_i }[/math] is a constant associated with the weight of each dimension from the transform.[4]

The determinant function can be used to approximate the probabilities for each class.

Unsupervised detection

When events of interest may be events that are unknown or have not been seen before then it is necessary to use unsupervised detection. This requires some machine learning algorithms that over time find anomalous events compared to normal happenings. This is an active area of research for event detection and other applications in WSNs.

References

- ↑ Bahrepour, M.; Meratnia, N.; Havinga, P.J.M. (2011-12-01). "Online unsupervised event detection in wireless sensor networks". 2011 Seventh International Conference on Intelligent Sensors, Sensor Networks and Information Processing. pp. 306–311. doi:10.1109/ISSNIP.2011.6146583. ISBN 978-1-4577-0674-5. https://ris.utwente.nl/ws/files/5501186/Bahrepour11online.pdf.

- ↑ Vairo, C.; Amato, G.; Chessa, S.; Valleri, P. (2010-10-01). "Modeling detection and tracking of complex events in wireless sensor networks". 2010 IEEE International Conference on Systems, Man and Cybernetics. pp. 235–242. doi:10.1109/ICSMC.2010.5642242. ISBN 978-1-4244-6586-6.

- ↑ Veeraraghavan, K.; Peng, Dongming; Sharif, H. (2005-05-01). "Energy Efficient Multi-Resolution Visual Surveillance on Wireless Sensor Networks". 2005 IEEE International Conference on Electro Information Technology. pp. 6 pp.–6. doi:10.1109/EIT.2005.1626975. ISBN 0-7803-9232-9.

- ↑ 4.0 4.1 4.2 Li, Dan; Wong, K.D.; Hu, Yu Hen; Sayeed, A.M. (2002-03-01). "Detection, classification, and tracking of targets". IEEE Signal Processing Magazine 19 (2): 17–29. doi:10.1109/79.985674. ISSN 1053-5888. Bibcode: 2002ISPM...19R..17L.

- ↑ Duda, R.O.; Hart, P.E. (1973). Pattern Classification and Scene Analysis. New York: Wiley. Bibcode: 1973pcsa.book.....D.

|