Garsia–Wachs algorithm

The Garsia–Wachs algorithm is an efficient method for computers to construct optimal binary search trees and alphabetic Huffman codes, in linearithmic time. It is named after Adriano Garsia and Michelle L. Wachs.

Problem description

The input to the problem, for an integer $n$, consists of a sequence of $n+1$ non-negative weights $w_0, w_1, \dots, w_n$. The output is a rooted binary tree with $n$ internal nodes, each having exactly two children. Such a tree has exactly $n+1$ leaf nodes, which can be identified (in the order given by the binary tree) with the $n+1$ input weights. The goal of the problem is to find a tree, among all of the possible trees with $n$ internal nodes, that minimizes the weighted sum of the external path lengths. These path lengths are the numbers of steps from the root to each leaf. They are multiplied by the weight of the leaf and then summed to give the cost of the overall tree.[1]
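
As a small illustration of the objective, the following sketch evaluates the weighted external path length of a given tree. The representation is an assumption made for this example only: an internal node is a Python 2-tuple `(left, right)` and a leaf is its numeric weight. The function name `weighted_path_length` is likewise hypothetical.

```python
def weighted_path_length(tree, depth=0):
    """Sum of (leaf weight * leaf depth) over all leaves of the tree."""
    if isinstance(tree, tuple):
        # Internal node: both children are one step further from the root.
        left, right = tree
        return (weighted_path_length(left, depth + 1)
                + weighted_path_length(right, depth + 1))
    # Leaf: the value stored here is the weight itself.
    return tree * depth


# Two of the possible trees for the weights 1, 2, 3 (in that order).
print(weighted_path_length(((1, 2), 3)))  # 1*2 + 2*2 + 3*1 = 9
print(weighted_path_length((1, (2, 3))))  # 1*1 + 2*2 + 3*2 = 11
```

For these weights the second tree is the better choice only if cost is ignored; the first has the smaller weighted path length, so it is the one the problem asks for.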

This problem can be interpreted as a problem of constructing a binary search tree for ordered keys, with the assumption that the tree will be used only to search for values that are not already in the tree. In this case, the keys partition the space of search values into intervals, and the weight of one of these intervals can be taken as the probability of searching for a value that lands in that interval. The weighted sum of external path lengths controls the expected time for searching the tree.[1]

Alternatively, the output of the problem can be used as a Huffman code, a method for encoding given values unambiguously by using variable-length sequences of binary values. In this interpretation, the code for a value is given by the sequence of left and right steps from a parent to the child on the path from the root to a leaf in the tree (e.g. with 0 for left and 1 for right). Unlike standard Huffman codes, the ones constructed in this way are alphabetical, meaning that the sorted order of these binary codes is the same as the input ordering of the values. If the weight of a value is its frequency in a message to be encoded, then the output of the Garsia–Wachs algorithm is the alphabetical Huffman code that compresses the message to the shortest possible length.[1]
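
The code interpretation can be sketched as follows, again assuming (for illustration only) that a tree is given as nested 2-tuples with arbitrary non-tuple values as leaves. The hypothetical helper `alphabetic_codes` lists the codewords in left-to-right leaf order, using 0 for a left step and 1 for a right step.

```python
def alphabetic_codes(tree, prefix=""):
    """Return the codewords of the leaves, in left-to-right leaf order."""
    if isinstance(tree, tuple):
        left, right = tree
        # 0 for a step to the left child, 1 for a step to the right child.
        return (alphabetic_codes(left, prefix + "0")
                + alphabetic_codes(right, prefix + "1"))
    return [prefix]


codes = alphabetic_codes((("a", "b"), ("c", ("d", "e"))))
print(codes)                   # ['00', '01', '10', '110', '111']
print(codes == sorted(codes))  # True: code order matches input order
```

Because the leaves are visited in tree order, the codewords always come out in lexicographically sorted order, which is the alphabetical property described above.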

Algorithm

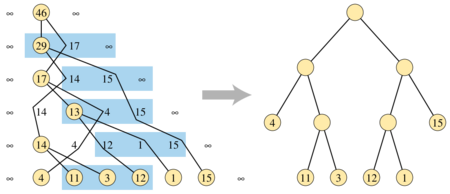

Overall, the algorithm consists of three phases:[1]

- Build a binary tree having the values as leaves but possibly in the wrong order.

- Compute each leaf's distance from the root in the resulting tree.

- Build another binary tree with the leaves at the same distances but in the correct order.

The first phase of the algorithm is easier to describe if the input is augmented with two sentinel values, $\infty$ (or any sufficiently large finite value), at the start and end of the sequence.[2]

The first phase maintains a forest of trees, initially a single-node tree for each non-sentinel input weight, which will eventually become the binary tree that it constructs. Each tree is associated with a value, the sum of the weights of its leaves. The algorithm maintains a sequence of these values, with the two sentinel values at each end. The initial sequence is just the order in which the leaf weights were given as input. It then repeatedly performs the following steps, each of which reduces the length of the sequence by one, until there is only one tree containing all the leaves:[1]

- Find the first three consecutive weights $x$, $y$, and $z$ in the sequence for which $x \le z$. There always exists such a triple, because the final sentinel value is larger than any previous two finite values.

- Remove $x$ and $y$ from the sequence, and make a new tree node to be the parent of the nodes for $x$ and $y$. Its value is $x+y$.

- Reinsert the new node immediately after the rightmost earlier position whose value is greater than or equal to $x+y$. There always exists such a position, because of the left sentinel value.
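
The three steps above can be sketched directly with a Python list. This is a deliberately simple version that takes quadratic time in the worst case, not the efficient implementation. The function name `build_unordered_tree` and the node representation (a leaf is its index in the input, an internal node is a 2-tuple of children) are assumptions of this example, and the weights are assumed to be finite with at least one weight given.

```python
import math


def build_unordered_tree(weights):
    """Phase 1: combine trees until one remains (leaf order may be wrong)."""
    # Sequence of (value, node) pairs with an infinite sentinel at each end.
    seq = [(math.inf, None)]
    seq += [(w, i) for i, w in enumerate(weights)]
    seq.append((math.inf, None))
    while len(seq) > 3:
        # Find the first consecutive triple x, y, z with x <= z.
        j = 0
        while seq[j][0] > seq[j + 2][0]:
            j += 1
        (x, x_node), (y, y_node) = seq[j], seq[j + 1]
        # Remove x and y and make a parent node with value x + y.
        del seq[j:j + 2]
        merged = (x + y, (x_node, y_node))
        # Scan left for the rightmost earlier value >= x + y; the left
        # sentinel guarantees that the scan stops.
        k = j - 1
        while seq[k][0] < x + y:
            k -= 1
        seq.insert(k + 1, merged)
    return seq[1][1]


print(build_unordered_tree([5, 2, 4, 2, 3]))  # ((0, (3, 4)), (1, 2))
```

In this run the parent of leaves 1 and 2 (value 6) is reinserted to the left of leaf 0 (value 5), so the resulting tree lists its leaves in the order 0, 3, 4, 1, 2. Only the leaf depths of this tree are used by the later phases.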

To implement this phase efficiently, the algorithm can maintain its current sequence of values in any self-balancing binary search tree structure. Such a structure allows the removal of $x$ and $y$, and the reinsertion of their new parent, in logarithmic time. In each step, the weights up to $y$ in the even positions of the sequence form a decreasing sequence, and the weights in the odd positions form another decreasing sequence. Therefore, the position to reinsert $x+y$ may be found in logarithmic time by using the balanced tree to perform two binary searches, one for each of these two decreasing sequences. The search for the first position for which $x \le z$ can be performed in linear total time by using a sequential search that begins at the $z$ from the previous triple.[1]

It is nontrivial to prove that, in the third phase of the algorithm, another tree with the same distances exists and that this tree provides the optimal solution to the problem. But assuming this to be true, the second and third phases of the algorithm are straightforward to implement in linear time. Therefore, the total time for the algorithm, on an input of length $n$, is $O(n\log n)$.
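
One possible way to carry out the second and third phases in linear time is sketched below. It assumes the phase-one tree is given as nested 2-tuples whose leaves are input indices `0..n-1`; the function names are hypothetical. The third phase uses a stack that merges the two topmost subtrees whenever they sit at the same depth, and it relies on the (nontrivial) fact that the depth list admits an ordered tree.

```python
def leaf_depths(tree, n):
    """Phase 2: depth of every leaf, indexed by the leaf's input position."""
    depths = [0] * n
    stack = [(tree, 0)]
    while stack:
        node, d = stack.pop()
        if isinstance(node, tuple):
            stack.append((node[0], d + 1))
            stack.append((node[1], d + 1))
        else:
            depths[node] = d
    return depths


def tree_from_depths(depths):
    """Phase 3: rebuild a tree with the leaves in input order."""
    stack = []  # (depth, subtree) pairs, left to right
    for leaf, d in enumerate(depths):
        stack.append((d, leaf))
        # Two adjacent subtrees at the same depth become siblings.
        while len(stack) >= 2 and stack[-1][0] == stack[-2][0]:
            d_right, right = stack.pop()
            _, left = stack.pop()
            stack.append((d_right - 1, (left, right)))
    return stack[0][1]


depths = leaf_depths(((0, (3, 4)), (1, 2)), 5)
print(depths)                    # [2, 2, 2, 3, 3]
print(tree_from_depths(depths))  # ((0, 1), (2, (3, 4)))
```

Each leaf is pushed once and each merge removes one stack entry, so both functions do a constant amount of work per node.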

History

The Garsia–Wachs algorithm is named after Adriano Garsia and Michelle L. Wachs, who published it in 1977.[1][3] Their algorithm simplified an earlier method of T. C. Hu and Alan Tucker,[1][4] and (although it is different in internal details) it ends up making the same comparisons in the same order as the Hu–Tucker algorithm.[5] The original proof of correctness of the Garsia–Wachs algorithm was complicated, and was later simplified by Kingston (1988)[1][2] and by Karpinski, Larmore, and Rytter (1997).[6]

References

- [1] Knuth, Donald E. (1998), "Algorithm G (Garsia–Wachs algorithm for optimum binary trees)", The Art of Computer Programming, Vol. 3: Sorting and Searching (2nd ed.), Addison–Wesley, pp. 451–453. See also History and bibliography, pp. 453–454.

- [2] Kingston, Jeffrey H. (1988), "A new proof of the Garsia–Wachs algorithm", Journal of Algorithms 9 (1): 129–136, doi:10.1016/0196-6774(88)90009-0

- [3] Garsia, Adriano M.; Wachs, Michelle L. (1977), "A new algorithm for minimum cost binary trees", SIAM Journal on Computing 6 (4): 622–642, doi:10.1137/0206045

- [4] Hu, T. C.; Tucker, A. C. (1971), "Optimal computer search trees and variable-length alphabetical codes", SIAM Journal on Applied Mathematics 21 (4): 514–532, doi:10.1137/0121057

- [5] Mehlhorn, Kurt; Tsagarakis, Marcel (1979), "On the isomorphism of two algorithms: Hu/Tucker and Garsia/Wachs", Les arbres en algèbre et en programmation (4ème Colloq., Lille, 1979), Univ. Lille I, Lille, pp. 159–172

- [6] Karpinski, Marek; Larmore, Lawrence L.; Rytter, Wojciech (1997), "Correctness of constructing optimal alphabetic trees revisited", Theoretical Computer Science 180 (1–2): 309–324, doi:10.1016/S0304-3975(96)00296-4

Further reading

- Filliatre, Jean-Christophe (2008), "A functional implementation of the Garsia–Wachs algorithm (functional pearl)", Proceedings of the 2008 ACM SIGPLAN Workshop on ML (ML '08), New York, NY, USA: Association for Computing Machinery, pp. 91–96, doi:10.1145/1411304.1411317, ISBN 978-1-60558-062-3
