Hamming space

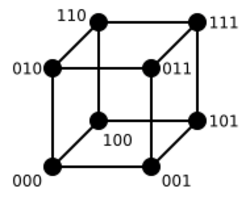

In statistics and coding theory, a Hamming space is usually the set of all binary strings of length N, where two binary strings are considered adjacent when they differ in exactly one position. The distance between any two binary strings is then the number of positions at which their corresponding bits differ, called the Hamming distance.[1][2] Hamming spaces are named after American mathematician Richard Hamming, who introduced the concept in 1950.[3] They are used in the theory of coding, signals, and transmission.
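
As a small illustration of the binary case, the Python sketch below computes the Hamming distance between two words and lists the words adjacent to a given one. It assumes, purely for illustration, that words are stored as equal-length strings of the characters '0' and '1'; `hamming_distance` and `neighbours` are hypothetical helper names, not part of any library.

```python
def hamming_distance(x: str, y: str) -> int:
    """Number of positions at which two equal-length words differ."""
    if len(x) != len(y):
        raise ValueError("Hamming distance is only defined for words of equal length")
    return sum(a != b for a, b in zip(x, y))


def neighbours(word: str) -> list[str]:
    """All binary strings adjacent to `word`, i.e. at Hamming distance exactly 1.

    Assumption of this sketch: words are strings over the characters '0' and '1'.
    """
    flip = {"0": "1", "1": "0"}
    return [word[:i] + flip[word[i]] + word[i + 1:] for i in range(len(word))]


print(hamming_distance("1011101", "1001001"))  # 2
print(neighbours("000"))  # ['100', '010', '001']
```

A word of length N always has exactly N neighbours, one for each position that can be flipped.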

More generally, a Hamming space can be defined over any alphabet (set) Q as the set of words of a fixed length N with letters from Q.[4][5] If Q is a finite field, then a Hamming space over Q is an N-dimensional vector space over Q. In the typical binary case, the field is thus GF(2) (also denoted by $\mathbb{Z}_2$).[4]
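
The general definition can be sketched the same way. The sketch below assumes that words are Python tuples and that the elements of GF(2) are the integers 0 and 1 (representation choices made here, not in the text); the function names are illustrative. It builds the Hamming space of length N over an arbitrary alphabet, which has q^N words when Q has q elements, and checks on GF(2)^4 that vector addition is componentwise addition modulo 2 (XOR) and that the Hamming distance of two vectors equals the number of nonzero coordinates of their sum.

```python
from itertools import product


def hamming_space(alphabet, n):
    """All words of length n over `alphabet`, as tuples; there are q**n of them."""
    return list(product(alphabet, repeat=n))


def hamming_distance(x, y):
    """Number of coordinates in which two words of the same length differ."""
    return sum(a != b for a, b in zip(x, y))


def add_gf2(x, y):
    """Vector addition in GF(2)^N: componentwise addition modulo 2 (XOR)."""
    return tuple((a + b) % 2 for a, b in zip(x, y))


def weight(x):
    """Hamming weight: the number of nonzero coordinates."""
    return sum(1 for a in x if a != 0)


ternary = hamming_space("abc", 2)  # a Hamming space over a non-field alphabet
print(len(ternary))  # 9

binary = hamming_space((0, 1), 4)  # GF(2)^4, with 0 and 1 standing for the field elements
assert all(hamming_distance(x, y) == weight(add_gf2(x, y)) for x in binary for y in binary)
```

Over any finite field the distance satisfies d(x, y) = wt(x - y); in GF(2) subtraction and addition coincide, which is why the sum is used here.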

In coding theory, if Q has q elements, then any subset C (usually assumed to have cardinality at least two) of the N-dimensional Hamming space over Q is called a q-ary code of length N; the elements of C are called codewords.[4][5] When C is a linear subspace of its Hamming space, it is called a linear code.[4] A typical example of a linear code is the Hamming code. Codes defined via a Hamming space necessarily have the same length for every codeword, so they are called block codes when it is necessary to distinguish them from variable-length codes, which are defined by unique factorization on a monoid.
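
To make the terminology concrete, the sketch below builds a binary linear code as the image of a generator matrix over GF(2) and checks that it is closed under addition. The particular matrix is an assumption: it is one common systematic generator matrix for the binary [7, 4] Hamming code (length 7, 16 codewords), and other equivalent choices exist. The helper names `encode` and `is_linear` are illustrative.

```python
from itertools import product

# Assumed generator matrix for the binary [7, 4] Hamming code in systematic form
# (one common choice; equivalent matrices give equivalent codes).
G = [
    (1, 0, 0, 0, 1, 1, 0),
    (0, 1, 0, 0, 1, 0, 1),
    (0, 0, 1, 0, 0, 1, 1),
    (0, 0, 0, 1, 1, 1, 1),
]


def encode(message, generator=G):
    """Multiply a message row vector by the generator matrix over GF(2)."""
    n = len(generator[0])
    return tuple(
        sum(m * row[j] for m, row in zip(message, generator)) % 2 for j in range(n)
    )


# The code C is the set of all encoded messages: a subset of the Hamming space GF(2)^7.
C = {encode(m) for m in product((0, 1), repeat=4)}


def is_linear(code):
    """A nonempty binary code is linear iff it is closed under componentwise XOR."""
    return all(
        tuple((a + b) % 2 for a, b in zip(x, y)) in code for x in code for y in code
    )


print(len(C), is_linear(C))  # 16 True
```

For a nonempty binary code, closure under addition is enough for linearity, since x + x = 0 and the only scalars are 0 and 1. A set such as {000, 110, 101} fails the test because 110 + 101 = 011 is missing.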

The Hamming distance endows a Hamming space with a metric, which is essential in defining basic notions of coding theory such as error-detecting and error-correcting codes.[4]
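
The role of the metric can be illustrated with the standard bounds: a code whose minimum distance is d can detect up to d - 1 errors and correct up to ⌊(d - 1)/2⌋ errors by decoding each received word to a nearest codeword. The sketch below computes these quantities; the length-5 binary repetition code is chosen only as an example, and the function names are hypothetical.

```python
from itertools import combinations


def hamming_distance(x, y):
    """Number of positions at which two equal-length words differ."""
    return sum(a != b for a, b in zip(x, y))


def minimum_distance(code):
    """Smallest Hamming distance between two distinct codewords (needs at least two)."""
    return min(hamming_distance(x, y) for x, y in combinations(code, 2))


def capabilities(code):
    """Return (errors detectable, errors correctable) = (d - 1, (d - 1) // 2)."""
    d = minimum_distance(code)
    return d - 1, (d - 1) // 2


def decode_nearest(received, code):
    """Nearest-codeword decoding; ties are broken by the order of `code`."""
    return min(code, key=lambda c: hamming_distance(c, received))


repetition = ["00000", "11111"]  # example code: binary repetition code of length 5
print(minimum_distance(repetition))  # 5
print(capabilities(repetition))  # (4, 2)
print(decode_nearest("10100", repetition))  # 00000
```

When at most ⌊(d - 1)/2⌋ errors occur, the nearest codeword is unique, so the tie-breaking rule does not affect correct decoding.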

Hamming spaces over non-field alphabets have also been considered, especially over finite rings (most notably over $\mathbb{Z}_4$), giving rise to modules instead of vector spaces and to ring-linear codes (identified with submodules) instead of linear codes. The typical metric used in this case is the Lee distance. There exists a Gray isometry between $\mathbb{Z}_2^{2m}$ (i.e. $\mathrm{GF}(2^{2m})$) with the Hamming distance and $\mathbb{Z}_4^m$ (also denoted as $\mathrm{GR}(4,m)$) with the Lee distance.[6][7][8]
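
The Gray isometry can be checked numerically. The sketch below assumes the usual Gray map on a single symbol of $\mathbb{Z}_4$ (0 ↦ 00, 1 ↦ 01, 2 ↦ 11, 3 ↦ 10), extended symbol by symbol to words of length m, and the Lee distance, in which each coordinate contributes min(δ, 4 - δ) with δ = (x_i - y_i) mod 4. It then verifies exhaustively for m = 3 that the Lee distance of two words equals the Hamming distance of their images. The names `GRAY`, `lee_distance` and `gray_map` are illustrative.

```python
from itertools import product

# Assumed (standard) Gray map on one Z4 symbol: 0 -> 00, 1 -> 01, 2 -> 11, 3 -> 10.
GRAY = {0: (0, 0), 1: (0, 1), 2: (1, 1), 3: (1, 0)}


def lee_distance(x, y, q=4):
    """Lee distance on Z_q^m: each coordinate contributes min(delta, q - delta)."""
    total = 0
    for a, b in zip(x, y):
        delta = (a - b) % q
        total += min(delta, q - delta)
    return total


def hamming_distance(x, y):
    """Number of positions at which two equal-length words differ."""
    return sum(a != b for a, b in zip(x, y))


def gray_map(x):
    """Map a word of Z4^m to a binary word of length 2m, one symbol at a time."""
    return tuple(bit for symbol in x for bit in GRAY[symbol])


m = 3
words = list(product(range(4), repeat=m))
assert all(
    lee_distance(x, y) == hamming_distance(gray_map(x), gray_map(y))
    for x in words
    for y in words
)
print(gray_map((1, 2, 3)))  # (0, 1, 1, 1, 1, 0)
```

Because the map acts symbol by symbol, agreement on single symbols already implies it for every m; the exhaustive loop is only a sanity test. The Gray map is not additive (the images of 1 and 1 sum to 00, while 2 maps to 11), so it preserves distances without being a module homomorphism.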

References

1. Baylis, D. J. (1997). Error Correcting Codes: A Mathematical Introduction. Chapman & Hall/CRC Mathematics Series, 15. CRC Press. p. 62. ISBN 9780412786907. https://books.google.com/books?id=ZAdDuZoJdn8C&pg=PA62

2. Cohen, G.; Honkala, I.; Litsyn, S.; Lobstein, A. (1997). Covering Codes. North-Holland Mathematical Library, 54. Elsevier. p. 1. ISBN 9780080530079. https://books.google.com/books?id=7KBYOt44sugC&pg=PA1

3. Hamming, R. W. (April 1950). "Error detecting and error correcting codes". The Bell System Technical Journal 29 (2): 147–160. doi:10.1002/j.1538-7305.1950.tb00463.x. ISSN 0005-8580. https://calhoun.nps.edu/bitstream/10945/46756/1/Hamming_1982.pdf.

4. Robinson, Derek J. S. (2003). An Introduction to Abstract Algebra. Walter de Gruyter. pp. 254–255. ISBN 978-3-11-019816-4.

5. Cohen et al., Covering Codes, p. 15.

6. Greferath, Marcus (2009). "An Introduction to Ring-Linear Coding Theory". In Gröbner Bases, Coding, and Cryptography. Springer Science & Business Media. ISBN 978-3-540-93806-4.

7. "Kerdock and Preparata codes". Encyclopedia of Mathematics. http://www.encyclopediaofmath.org/index.php/Kerdock_and_Preparata_codes.

8. van Lint, J. H. (1999). Introduction to Coding Theory (3rd ed.). Springer. Chapter 8: Codes over $\mathbb{Z}_4$. ISBN 978-3-540-64133-9. https://archive.org/details/introductiontoco0000lint_a3b9.
