Moment problem

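In mathematics, a moment problem arises as the result of trying to invert the mapping that takes a measure $\mu$ to the sequence of moments

$$m_n = \int_{-\infty}^{\infty} x^n \, d\mu(x).$$

More generally, one may consider

$$m_n = \int_{-\infty}^{\infty} M_n(x) \, d\mu(x)$$

for an arbitrary sequence of functions $M_n$.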

Introduction

In the classical setting, $\mu$ is a measure on the real line, and the functions are the monomials $M_n(x) = x^n$, $n = 0, 1, 2, \dots$. In this form the question appears in probability theory, asking whether there is a probability measure with specified mean, variance and higher moments, and whether it is unique.

There are three named classical moment problems: the Hamburger moment problem, in which the support of $\mu$ is allowed to be the whole real line; the Stieltjes moment problem, for $[0, \infty)$; and the Hausdorff moment problem for a bounded interval, which without loss of generality may be taken as $[0, 1]$.

The moment problem also extends to complex analysis as the trigonometric moment problem in which the Hankel matrices are replaced by Toeplitz matrices and the support of μ is the complex unit circle instead of the real line.[1]

Existence

A sequence of numbers $m_n$ is the sequence of moments of a measure $\mu$ if and only if a certain positivity condition is fulfilled; namely, the Hankel matrices $H_n$,

$$(H_n)_{ij} = m_{i+j},$$

should be positive semi-definite. This is because a positive semi-definite Hankel matrix corresponds to a linear functional $\Lambda$ such that $\Lambda(x^n) = m_n$ and $\Lambda(f^2) \ge 0$ (that is, $\Lambda$ is non-negative on sums of squares of polynomials). Assume $\Lambda$ can be extended to $\mathbb{R}[x]^*$. In the univariate case, a non-negative polynomial can always be written as a sum of squares, so the linear functional $\Lambda$ is non-negative on all non-negative polynomials. By Haviland's theorem, the linear functional then has a measure form, that is, $\Lambda(x^n) = \int_{-\infty}^{\infty} x^n \, d\mu$. A condition of similar form is necessary and sufficient for the existence of a measure $\mu$ supported on a given interval $[a, b]$.
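The positivity condition can be illustrated numerically. The following is a minimal sketch, assuming NumPy is available; the helper names `hankel_from_moments` and `is_psd` and the tolerance are illustrative choices, not part of any standard API. It builds the Hankel matrix with entries $m_{i+j}$ from finitely many moments and tests its eigenvalues. Checking a finite prefix only tests the condition for that prefix, not for the whole sequence.

```python
import numpy as np

def hankel_from_moments(moments):
    """Build the (n+1) x (n+1) Hankel matrix H with H[i, j] = m_{i+j}.

    Needs the moments m_0, ..., m_{2n}, i.e. an odd number of values.
    """
    m = np.asarray(moments, dtype=float)
    if m.size % 2 == 0:
        raise ValueError("need an odd number of moments m_0..m_{2n}")
    n = m.size // 2
    idx = np.arange(n + 1)
    return m[idx[:, None] + idx[None, :]]

def is_psd(matrix, tol=1e-10):
    """Check positive semi-definiteness via the eigenvalues of a symmetric matrix."""
    eigenvalues = np.linalg.eigvalsh(matrix)
    scale = max(1.0, float(np.abs(eigenvalues).max()))
    return bool(eigenvalues.min() >= -tol * scale)

# Moments m_0..m_6 of the standard normal distribution.
normal_moments = [1, 0, 1, 0, 3, 0, 15]
# Not a moment sequence: m_2 < 0 is impossible for a positive measure.
bad_moments = [1, 0, -1]

print(is_psd(hankel_from_moments(normal_moments)))
print(is_psd(hankel_from_moments(bad_moments)))
```

The first sequence passes the check and the second fails it, since its Hankel matrix has the negative eigenvalue $-1$.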

One way to prove these results is to consider the linear functional $\varphi$ that sends a polynomial

$$P(x) = \sum_k a_k x^k$$

to

$$\sum_k a_k m_k.$$

If $m_k$ are the moments of some measure $\mu$ supported on $[a, b]$, then evidently

$$\varphi(P) \ge 0 \text{ for any polynomial } P \text{ that is non-negative on } [a, b]. \tag{1}$$

Conversely, if (1) holds, one can apply the M. Riesz extension theorem and extend $\varphi$ to a functional on the space $C_c([a, b])$ of continuous functions with compact support, so that

$$\varphi(f) \ge 0 \text{ for any } f \in C_c([a, b]),\ f \ge 0. \tag{2}$$

By the Riesz representation theorem, (2) holds if and only if there exists a measure $\mu$ supported on $[a, b]$ such that

$$\varphi(f) = \int f \, d\mu$$

for every $f \in C_c([a, b])$.

Thus the existence of the measure $\mu$ is equivalent to (1). Using a representation theorem for positive polynomials on $[a, b]$, one can reformulate (1) as a condition on Hankel matrices.[2][3]

Uniqueness (or determinacy)

The uniqueness of $\mu$ in the Hausdorff moment problem follows from the Weierstrass approximation theorem, which states that polynomials are dense under the uniform norm in the space of continuous functions on $[0, 1]$. For the problem on an infinite interval, uniqueness is a more delicate question.[4] There are distributions, such as the log-normal distributions, that have finite moments of every positive integer order but are not determined by them: other distributions have exactly the same moments.
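The non-uniqueness for the log-normal distribution can be made concrete with a standard perturbation argument, sketched here as a worked equation. The symbols $f$, $f_\varepsilon$ and $\varepsilon$ are introduced only for this illustration: $f$ is the standard log-normal density, and $f_\varepsilon$ is a perturbed density that remains non-negative because $|\varepsilon| \le 1$.

```latex
f(x) = \frac{1}{x\sqrt{2\pi}} \exp\!\left(-\tfrac{1}{2}(\ln x)^2\right), \qquad
f_\varepsilon(x) = f(x)\,\bigl[1 + \varepsilon \sin(2\pi \ln x)\bigr], \qquad x > 0,\ |\varepsilon| \le 1.

% For every integer n >= 0, substitute y = ln x and then u = y - n:
\int_0^\infty x^n f(x) \sin(2\pi \ln x)\,dx
  = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{ny - y^2/2} \sin(2\pi y)\,dy
  = \frac{e^{n^2/2}}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-u^2/2} \sin(2\pi u + 2\pi n)\,du
  = \frac{e^{n^2/2}}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-u^2/2} \sin(2\pi u)\,du
  = 0.
```

The last integral vanishes because the integrand is odd. Hence $f_\varepsilon$ and $f$ have the same moment of every order $n$, although they are different densities.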

Formal solution

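When the solution exists, it can be formally written using derivatives of the Dirac delta function as

$$d\mu(x) = \rho(x)\,dx, \qquad \rho(x) = \sum_{n=0}^{\infty} \frac{(-1)^n}{n!}\, \delta^{(n)}(x)\, m_n.$$

The expression can be derived by taking the inverse Fourier transform of the characteristic function of the measure.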
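As a consistency check, one can verify formally that a density of the form $\rho(x) = \sum_{n \ge 0} \frac{(-1)^n}{n!}\, \delta^{(n)}(x)\, m_n$ reproduces the prescribed moments. The computation below is a sketch that treats the series term by term in the sense of distributions and ignores convergence questions; $\delta_{kn}$ denotes the Kronecker delta.

```latex
\int_{-\infty}^{\infty} x^k\, \delta^{(n)}(x)\,dx
  = (-1)^n \left.\frac{d^n}{dx^n} x^k \right|_{x=0}
  = (-1)^n\, n!\, \delta_{kn},

\int_{-\infty}^{\infty} x^k \rho(x)\,dx
  = \sum_{n=0}^{\infty} \frac{(-1)^n}{n!}\, m_n\, (-1)^n\, n!\, \delta_{kn}
  = m_k.
```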

Variations

An important variation is the truncated moment problem, which studies the properties of measures with fixed first k moments (for a finite k). Results on the truncated moment problem have numerous applications to extremal problems, optimisation and limit theorems in probability theory.[3]

Probability

The moment problem has applications to probability theory. The following is commonly used:[5]

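Theorem (Fréchet-Shohat). If $\mu$ is a determinate measure (i.e. its moments determine it uniquely), and the measures $\mu_n$ are such that $\lim_{n \to \infty} m_k[\mu_n] = m_k[\mu]$ for all $k \ge 0$, where $m_k[\nu]$ denotes the $k$-th moment of a measure $\nu$, then $\mu_n \to \mu$ in distribution.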

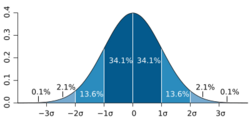

By checking Carleman's condition, we know that the standard normal distribution is a determinate measure, thus we have the following form of the central limit theorem:

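Corollary. If a sequence of probability distributions $\nu_n$ satisfies $m_{2k}[\nu_n] \to \frac{(2k)!}{2^k k!}$ and $m_{2k+1}[\nu_n] \to 0$ for every $k \ge 0$, then $\nu_n$ converges to $N(0, 1)$ in distribution.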
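The moment form of the central limit theorem can be illustrated on a simple example. The sketch below uses only the Python standard library and assumes, purely for illustration, that $\nu_n$ is the law of $S_n/\sqrt{n}$, where $S_n$ is a sum of $n$ independent $\pm 1$ signs; the function names are hypothetical. It compares the exact moments of $\nu_n$ with the moments $(2j)!/(2^j j!)$ of the standard normal distribution.

```python
from fractions import Fraction
from math import comb, factorial

def normal_moment(k):
    """k-th moment of N(0, 1): (2j)!/(2^j j!) for k = 2j, and 0 for odd k."""
    if k % 2 == 1:
        return 0.0
    j = k // 2
    return factorial(2 * j) / (2 ** j * factorial(j))

def rademacher_sum_moment(n, k):
    """Exact k-th moment of S_n / sqrt(n), S_n a sum of n independent +-1 signs.

    S_n = 2B - n with B ~ Binomial(n, 1/2), so the moment is a finite sum,
    evaluated in exact integer arithmetic before converting to float.
    """
    numerator = sum(comb(n, j) * (2 * j - n) ** k for j in range(n + 1))
    return float(Fraction(numerator, 2 ** n)) / n ** (k / 2)

# Compare the first few moments for n = 1000 with the Gaussian values.
for k in range(1, 7):
    print(k, rademacher_sum_moment(1000, k), normal_moment(k))
```

For this example the fourth moment equals $3 - 2/n$, which tends to the Gaussian value 3, and all odd moments vanish by symmetry.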

See also

- Carleman's condition

- Hamburger moment problem

- Hankel matrix

- Hausdorff moment problem

- Moment (mathematics)

- Stieltjes moment problem

- Trigonometric moment problem

Notes

- Schmüdgen 2017, p. 257.

- Shohat & Tamarkin 1943.

- Kreĭn & Nudel′man 1977.

- Akhiezer 1965.

- Sodin, Sasha (March 5, 2019). "The classical moment problem". https://webspace.maths.qmul.ac.uk/a.sodin/teaching/moment/clmp.pdf.

References

- Shohat, James Alexander; Tamarkin, Jacob D. (1943). The Problem of Moments. New York: American Mathematical Society. ISBN 978-1-4704-1228-9.

- Akhiezer, Naum I. (1965). The classical moment problem and some related questions in analysis. New York: Hafner Publishing Co. https://archive.org/details/classicalmomentp0000akhi. (translated from the Russian by N. Kemmer)

- Kreĭn, M. G.; Nudel′man, A. A. (1977). The Markov Moment Problem and Extremal Problems. Translations of Mathematical Monographs. Providence, Rhode Island: American Mathematical Society. doi:10.1090/mmono/050. ISBN 978-0-8218-4500-4.

- Schmüdgen, Konrad (2017). The Moment Problem. Graduate Texts in Mathematics. 277. Cham: Springer International Publishing. doi:10.1007/978-3-319-64546-9. ISBN 978-3-319-64545-2.
