MotionParallax3D

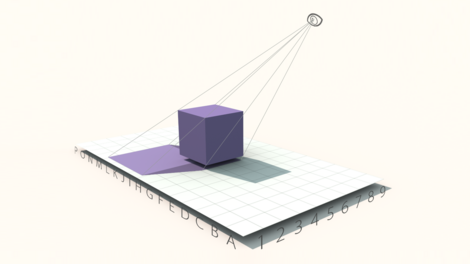

MotionParallax3D displays are a class of virtual reality devices that create the illusion of volumetric objects by displaying a projection of each virtual object that is generated to match the viewer's position relative to the screen.

Principles

MotionParallax3D displays contain one or more flat or curved screens. The size, shape, and relative arrangement of these screens depend on the form factor of the device. The projections of virtual objects are formed so that the image the user sees looks exactly the same as the image that would be seen if the virtual object actually existed. To display the correct projections, the virtual reality system requires the current coordinates of the observer's eyes.[1]
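For a single flat screen, the geometry described above can be sketched as a ray-plane intersection: the on-screen position of a virtual point is where the line from the eye to that point crosses the screen. The coordinate convention (screen in the plane z = 0, viewer at z > 0, units in metres) and the function name `project_to_screen` are assumptions made for this illustration, not part of any particular product.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_to_screen(eye: Vec3, point: Vec3) -> Tuple[float, float]:
    """Return the (x, y) position on the screen plane z = 0 at which a
    virtual point must be drawn so that it lines up with the given eye.

    Assumed convention (hypothetical): the screen lies in the plane z = 0,
    the eye is at z > 0, and points behind the screen have z < 0.
    """
    ex, ey, ez = eye
    px, py, pz = point
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    if pz >= ez:
        raise ValueError("point must be closer to the screen side than the eye")
    # Ray: eye + t * (point - eye); solve for the t at which z becomes 0.
    t = ez / (ez - pz)
    return (ex + t * (px - ex), ey + t * (py - ey))


if __name__ == "__main__":
    point = (0.1, 0.0, -0.5)  # 0.5 m behind the screen
    print(project_to_screen((0.0, 0.0, 0.5), point))  # eye centred
    print(project_to_screen((0.2, 0.0, 0.5), point))  # eye moved 0.2 m to the right
```

With the eye at (0, 0, 0.5) the point is drawn at x = 0.05; when the eye moves 0.2 m to the right, the drawn position shifts to x = 0.15, whereas a point lying on the screen plane itself stays where it is. This dependence on the eye position is why the system needs the eye coordinates for every frame.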

In contrast with stereo displays, which rely on binocular vision only, MotionParallax3D displays use one of the most important mechanisms of human spatial perception: motion parallax. Motion parallax is the displacement of parts of the image relative to each other that occurs when the relative position of the viewer and the observed object changes; the angular velocity of this displacement grows with the difference in distance between those parts and the viewer.[2] MotionParallax3D displays exploit this mechanism of volume perception by constantly updating the displayed image according to the current coordinates of the user's eyes. As a result, virtual objects shift relative to each other and relative to visible real objects in the same way that real objects would. This allows the brain to build a holistic view of the world in which real and virtual objects behave indistinguishably. Most MotionParallax3D displays are based on stereo displays and present separate images to the left and right eyes. This is a substantial advantage when building a complete virtual reality system, but it is not necessary for the perception of volume: the motion parallax mechanism alone is enough for the brain to perceive virtual objects as having a definite shape, volume, and distance from the user's eyes.[1][3]
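As a worked illustration of the motion parallax relationship, consider a simplified case (an assumption made here, not taken from the cited sources): a viewer translates sideways at speed $v$ past two stationary points that lie directly ahead at distances $d_1 < d_2$. The symbols $v$, $d_1$, $d_2$, and $\omega$ are introduced only for this sketch, and the expressions hold at the instant the points are straight ahead.

```latex
\omega_i = \frac{v}{d_i}, \qquad
\Delta\omega = \omega_1 - \omega_2
             = v\left(\frac{1}{d_1} - \frac{1}{d_2}\right)
             = \frac{v\,(d_2 - d_1)}{d_1 d_2}
```

For example, with $v = 0.5$ m/s, $d_1 = 0.5$ m, and $d_2 = 1$ m, the nearer point sweeps across the visual field at 1 rad/s and the farther one at 0.5 rad/s, so the two separate at 0.5 rad/s. The relative angular velocity vanishes when the distances are equal and grows with their difference.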

Tracking systems

To display the projection correctly, the system requires up-to-date and accurate coordinates of the user's eyes. MotionParallax3D displays obtain these coordinates from head-tracking systems.

The tracking systems for MotionParallax3D displays can be based on different principles:[citation needed]

- Optical:

  - Based on active markers (NettleBox)

  - Based on passive markers (EON ICube)

  - Based on recognition of the face, eyes, head contour, etc. (Amazon Fire Phone)

- Ultrasonic (RUCAP UM-5)

- Electromagnetic

- Mechanical

- Mixed, combining several tracking mechanisms, e.g. using gyroscopes and accelerometers in addition to optical markers

Quality indicators

The quality of MotionParallax3D displays, which is mostly based on how realistically a virtual image can be displayed, is determined by a combination of three main characteristics:

- Stereoscopic separation quality (if stereoscopic separation is used)

- Rendering quality

- Geometric accuracy of the projection.

Stereoscopic separation quality is reduced by ghosting, a phenomenon in which each eye perceives, in addition to the image intended for it, the image intended for the other eye. Ghosting can have different causes, e.g. phosphor afterglow in plasma screens or incomplete matching of the polarization directions in polarization-based stereoscopic separation.[4]
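Ghosting can be illustrated with a deliberately simple linear leakage model, in which a fixed fraction of the other eye's image is added to what each eye sees. The model, the `crosstalk` parameter, and the function name are assumptions for illustration only; real displays may behave non-linearly and differently for each eye.

```python
from typing import Tuple


def perceived_luminance(left: float, right: float, crosstalk: float) -> Tuple[float, float]:
    """Return what each eye perceives under a linear leakage model.

    left, right: luminance intended for each eye (arbitrary linear units).
    crosstalk:   hypothetical fraction of the other eye's image that leaks through.
    """
    if not 0.0 <= crosstalk <= 1.0:
        raise ValueError("crosstalk must be between 0 and 1")
    seen_left = left + crosstalk * right    # left eye also sees part of the right image
    seen_right = right + crosstalk * left   # right eye also sees part of the left image
    return seen_left, seen_right


if __name__ == "__main__":
    # A bright pixel intended for the left eye and a dark one for the right eye.
    print(perceived_luminance(0.8, 0.2, 0.05))
```

In this model the leak is most visible where a dark region for one eye coincides with a bright region for the other, which matches the faint double contours typical of ghosting.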

Rendering quality is generally not a problem for modern MotionParallax3D displays. However, a high level of rendering detail and the use of special effects engage psychological mechanisms of volume perception, such as texture gradient and shading, and help increase the realism of the perception.[2]

The geometric accuracy of the projection of 3D scenes is the most important indicator of MotionParallax3D display quality. It is influenced by two factors: the accuracy of head tracking, and the interval between the moment when tracking starts and the moment when the image is displayed. Tracking accuracy directly affects the accuracy of the projection; the architecture and geometry of the tracking devices, the quality of calibration, and the accumulated error, which is affected by noise, can all reduce projection correctness. The interval between the start of head tracking and the display of the image, known as latency, is the primary cause of geometrically incorrect projection of 3D scenes in MotionParallax3D systems. Latency arises because head tracking, rendering, and displaying the projection all take time.[5][6]
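The effect of this interval on geometric accuracy can be estimated with a back-of-the-envelope sketch. It assumes a single flat screen, an eye moving parallel to the screen at constant speed, and a virtual point at a signed depth relative to the screen (negative behind it, positive in front of it); under these assumptions, similar triangles give the on-screen misplacement. The function names and the example stage timings are hypothetical.

```python
def total_latency_ms(tracking_ms: float, render_ms: float, display_ms: float) -> float:
    """Sum the per-stage delays of one tracking-to-display pass."""
    return tracking_ms + render_ms + display_ms


def screen_error_m(head_speed_m_s: float, latency_ms: float,
                   eye_distance_m: float, depth_m: float) -> float:
    """Estimate how far (in metres, on the screen) a virtual point is misplaced
    because the image was rendered for an eye position that is latency_ms old.

    eye_distance_m: distance from the eye to the screen plane (> 0).
    depth_m:        signed depth of the virtual point relative to the screen;
                    negative is behind the screen, positive is in front of it.
    """
    if eye_distance_m <= 0:
        raise ValueError("eye_distance_m must be positive")
    if depth_m >= eye_distance_m:
        raise ValueError("the point must lie beyond the eye plane as seen from the viewer")
    # Distance the eye travels while the frame is being produced.
    eye_shift_m = head_speed_m_s * latency_ms / 1000.0
    # Similar triangles: the drawn position moves by |depth| / (eye_distance - depth)
    # of the eye's sideways movement, so a stale eye position causes that error.
    return abs(depth_m) / (eye_distance_m - depth_m) * eye_shift_m


if __name__ == "__main__":
    latency = total_latency_ms(tracking_ms=8.0, render_ms=11.0, display_ms=6.0)
    print(latency, screen_error_m(0.5, latency, eye_distance_m=0.5, depth_m=-0.5))
```

Under these assumptions, a head moving at 0.5 m/s with 50 ms of total latency misplaces a point 0.5 m behind the screen by about 12.5 mm for a viewer 0.5 m away, while a point lying on the screen plane is unaffected.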

"If large amounts of latency are present in the VR system, users may still be able to perform tasks, but it will be by the much less rewarding means of using their head as a controller, rather than accepting that their head is naturally moving around in a stable virtual world."[5] John Carmack

"When it comes to VR and AR, latency is fundamental – if you don’t have low enough latency, it’s impossible to deliver good experiences, by which I mean virtual objects that your eyes and brain accept as real. By "real," I don’t mean that you can’t tell they’re virtual by looking at them, but rather that your perception of them as part of the world as you move your eyes, head, and body is indistinguishable from your perception of real objects. The key to this is that virtual objects have to stay in very nearly the same perceived real-world locations as you move; that is, they have to register as being in almost exactly the right position all the time. Being right 99 percent of the time is no good, because the occasional mis-registration is precisely the sort of thing your visual system is designed to detect, and will stick out like a sore thumb. Assuming accurate, consistent tracking (and that’s a big if, as I’ll explain one of these days), the enemy of virtual registration is latency. If too much time elapses between the time your head starts to turn and the time the image is redrawn to account for the new pose, the virtual image will drift far enough so that it has clearly wobbled (in VR), or so that is obviously no longer aligned with the same real-world features (in AR)."[6] Michael Abrash

Modern MotionParallax3D displays use head-position prediction to compensate partially for the delay. However, the accuracy and the usable horizon of the prediction depend heavily on the accuracy of the input data and on the number of position samples that the system receives per unit of time.
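The source does not say which prediction method such displays use. As a minimal sketch of the general idea, the example below extrapolates the eye position at constant velocity from the two most recent tracking samples; the sample format, the names, and the choice of constant-velocity extrapolation are all assumptions made for illustration.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Sample = Tuple[float, Vec3]  # (timestamp in seconds, eye position in metres)


def predict_position(samples: Sequence[Sample], horizon_s: float) -> Vec3:
    """Extrapolate the eye position horizon_s seconds past the latest sample,
    assuming the velocity between the last two samples stays constant."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate velocity")
    (t0, p0), (t1, p1) = samples[-2], samples[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("timestamps must be strictly increasing")
    # Finite-difference velocity estimate per axis.
    velocity = tuple((b - a) / dt for a, b in zip(p0, p1))
    x, y, z = (p + v * horizon_s for p, v in zip(p1, velocity))
    return (x, y, z)


if __name__ == "__main__":
    history = [(0.00, (0.000, 0.0, 0.5)),
               (0.01, (0.005, 0.0, 0.5))]  # eye moving at 0.5 m/s along x
    print(predict_position(history, horizon_s=0.02))
```

In this sketch, any noise in the two samples is scaled by the ratio of the horizon to the sample spacing, which is one way to see why input accuracy limits how far ahead a prediction remains useful. A practical system would typically smooth over more samples, which a higher sample rate makes possible.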

A peculiarity of visual perception is that the brain interprets the latency of virtual object images not as latency but as a distortion of the geometry of the virtual objects. The resulting dissonance between the information the user receives from visual perception and from the vestibular apparatus can cause symptoms of virtual reality sickness, which include nausea, headache, and ophthalmalgia (eye pain). Higher-quality MotionParallax3D displays reduce the chances of these symptoms occurring. However, even with a perfect MotionParallax3D display, the symptoms of cybersickness can occur in susceptible individuals because of the conflict between the focusing and convergence mechanisms of vision: if a virtual object is located at a considerable distance from the surface on which the projected image is displayed, the eyes converge on the object but must focus on the screen, so an attempt to fixate and focus on a nearby virtual object can make it appear less sharp rather than sharper.[2]
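The size of this conflict between focusing and convergence is commonly expressed in dioptres (reciprocal metres), as the difference between the distance at which the eyes must focus (the screen) and the distance at which they converge (the virtual object). The sketch below uses that convention; the function name is hypothetical and no comfort threshold is implied.

```python
def focus_convergence_mismatch_dpt(screen_distance_m: float,
                                   object_distance_m: float) -> float:
    """Return the mismatch, in dioptres, between the focusing distance
    (the screen) and the convergence distance (the virtual object)."""
    if screen_distance_m <= 0 or object_distance_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / object_distance_m - 1.0 / screen_distance_m)


if __name__ == "__main__":
    # Screen 0.5 m from the eyes, virtual object shown 0.25 m from the eyes.
    print(focus_convergence_mismatch_dpt(0.5, 0.25))
```

An object rendered at the screen distance gives a mismatch of zero, and the mismatch grows quickly as a virtual object is brought closer to the viewer than the screen.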

"Perceiving latency in the response to head motion is also one of the primary causes of simulator sickness."[5] John Carmack

The place of MotionParallax3D displays among VR systems

Currently all widely used computer virtual reality devices can be divided into two classes:

- MotionParallax3D displays

- Head-mounted displays (HMDs)

In addition to these two classes, there are more exotic variants, e.g. the CastAR system, under development, in which the correct image is projected onto a surface by projectors mounted directly on the glasses. However, such devices are not yet widespread and exist only as prototypes.[7]

Head-mounted displays usually isolate users from the real world, whereas MotionParallax3D displays allow users to stay oriented in their environment. This also imposes a restriction on MotionParallax3D displays: since the user sees both real and virtual objects, their behavior must be identical, which is achieved by reducing latency to an acceptable level (no more than 20 ms).[6]

Implementations

Because a MotionParallax3D display is essentially a tracking system connected to devices that form and display images, many form factors exist, ranging in size from smartphones to virtual reality rooms and full-immersion systems. The most common representatives of each class are listed below.

Amazon Fire Phone

- Stereo 3D: not present

- Tracking system: optical, tracking of the user's eye position

The ZSpace virtual reality system

- Stereo 3D: passive stereo

- Tracking system: optical, tracking of passive markers

The NettleBox virtual reality system

- Stereo 3D: Active 3D

- Tracking system: optical, tracking of active markers

EON ICube CAVE

- Stereo 3D: Active 3D

- Tracking system: optical, tracking of passive markers

See also

- Augmented reality

- Cave automatic virtual environment

- Depth perception

- Head-mounted display

- Motion capture

- Virtual reality

- Virtual reality sickness

References

- [1] MotionParallax3D Technology

- [2] Howard, Ian P. (2012-02-24). Perceiving in Depth, Volume 3: Other Mechanisms of Depth Perception (Oxford Psychology Series). Oxford University Press. pp. 84–122. ISBN 978-0-19-976416-7.

- [3] Nawrot, Mark; Joyce, Lindsey (2006). "The pursuit theory of motion parallax". Vision Research (Elsevier B.V.) 46 (28): 4709–4725. doi:10.1016/j.visres.2006.07.006. PMID 17083957.

- [4] "Understanding Stereoscopic 3D in After Effects". Adobe Systems Incorporated. https://helpx.adobe.com/after-effects/kb/stereoscopic-3d-effects.html. Retrieved 31 May 2016.

- [5] Sterling, Bruce (24 February 2013). "John Carmack's Latency Mitigation Strategies". Wired (Condé Nast). https://www.wired.com/2013/02/john-carmacks-latency-mitigation-strategies/. Retrieved 31 May 2016.

- [6] Abrash, Michael. "Latency – the sine qua non of AR and VR". valvesoftware.com. http://blogs.valvesoftware.com/abrash/latency-the-sine-qua-non-of-ar-and-vr/. Retrieved 31 May 2016.

- [7] "CastAR will return $1M in Kickstarter money and postpone augmented reality glasses shipments". Venture Beat. 16 December 2015. https://venturebeat.com/2015/12/16/castar-will-return-1m-in-kickstarter-money-and-postpone-augmented-reality-glasses-shipments/. Retrieved 31 May 2016.