Overlap–add method

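In signal processing, the overlap–add method is an efficient way to evaluate the discrete convolution of a very long signal $x[n]$ with a finite impulse response (FIR) filter $h[n]$:

$$y[n] = x[n] * h[n] \;\triangleq\; \sum_{m=-\infty}^{\infty} h[m]\, x[n-m] = \sum_{m=1}^{M} h[m]\, x[n-m], \tag{Eq.1}$$

where $h[m] = 0$ for $m$ outside the region $[1, M]$.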
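For reference, here is a minimal Python sketch of direct evaluation of Eq.1 (the function name `direct_convolve` is hypothetical). It assumes 0-based lists rather than the 1-based indices used above, so $h$ occupies indices $0 \ldots M-1$ and the output has `len(x) + len(h) - 1` samples. Each output sample costs up to $M$ multiplications, which is the baseline the overlap–add method improves on.

```python
def direct_convolve(x, h):
    """Direct linear convolution y[n] = sum_m h[m] * x[n - m], using 0-based lists.

    Returns len(x) + len(h) - 1 samples; each one costs up to len(h) multiplications.
    """
    M, Nx = len(h), len(x)
    y = [0] * (Nx + M - 1)
    for n in range(len(y)):
        acc = 0
        # Only terms with 0 <= n - m < Nx contribute (h[m] = 0 outside 0..M-1).
        for m in range(max(0, n - Nx + 1), min(M, n + 1)):
            acc += h[m] * x[n - m]
        y[n] = acc
    return y


print(direct_convolve([1, 2, 3], [1, 1]))  # [1, 3, 5, 3]
```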

This article uses common abstract notations, such as $y(t) = x(t) * h(t)$ or $y(t) = \mathcal{H}\{x(t)\}$, in which it is understood that the functions should be thought of in their totality, rather than at specific instants $t$ (see Convolution).

Algorithm

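The concept is to divide the problem into multiple convolutions of $h[n]$ with short segments of $x[n]$:

$$x_k[n] \triangleq \begin{cases} x[n + kL], & n = 1, 2, \ldots, L \\ 0, & \text{otherwise,} \end{cases}$$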

where $L$ is an arbitrary segment length. Then:

$$x[n] = \sum_{k} x_k[n - kL],$$

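and $y[n]$ can be written as a sum of short convolutions:[1]

$$y[n] = \left(\sum_{k} x_k[n - kL]\right) * h[n] = \sum_{k} \bigl(x_k[n - kL] * h[n]\bigr) = \sum_{k} y_k[n - kL],$$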

where the linear convolution $y_k[n] \triangleq x_k[n] * h[n]$ is zero outside the region $[1, L + M - 1]$. For any parameter $N \ge L + M - 1$,[a] it is also equivalent to the $N$-point circular convolution of $x_k[n]$ with $h[n]$ in the region $[1, N]$. The advantage is that the circular convolution can be computed more efficiently than linear convolution, according to the circular convolution theorem:

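$$y_k[n] = \operatorname{IDFT}_N\bigl(\operatorname{DFT}_N(x_k[n]) \cdot \operatorname{DFT}_N(h[n])\bigr), \tag{Eq.2}$$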

where:

- $\operatorname{DFT}_N$ and $\operatorname{IDFT}_N$ refer to the discrete Fourier transform and its inverse, evaluated over $N$ discrete points, and

- $L$ is customarily chosen such that $N = L + M - 1$ is an integer power of 2, and the transforms are implemented with the FFT algorithm for efficiency.
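The following Python sketch illustrates Eq.2 for a single segment. It assumes real-valued inputs and uses a naive $O(N^2)$ DFT in place of an FFT so the code stays short; the helper names are hypothetical. With $N = L + M - 1$ the circular result equals the linear convolution, while a smaller $N$ lets the last $M - 1$ samples wrap onto the start, which is exactly what the condition $N \ge L + M - 1$ prevents.

```python
import cmath


def dft(v, N):
    """N-point DFT of v after zero-padding to N samples (naive O(N^2) sum)."""
    assert len(v) <= N
    v = list(v) + [0] * (N - len(v))
    return [sum(v[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]


def idft(V):
    """Inverse DFT over len(V) points."""
    N = len(V)
    return [sum(V[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]


def circular_convolve(xk, h, N):
    """Eq.2: y_k = IDFT_N(DFT_N(x_k) * DFT_N(h)); real part rounded to hide FP noise."""
    products = [a * b for a, b in zip(dft(xk, N), dft(h, N))]
    return [round(c.real, 9) for c in idft(products)]


xk = [1, 2, 3, 4]   # one segment, L = 4
h = [1, -1, 2]      # filter, M = 3
print(circular_convolve(xk, h, 6))  # N = L + M - 1 = 6: the linear convolution 1, 1, 3, 5, 2, 8
print(circular_convolve(xk, h, 4))  # N = 4 < L + M - 1: the tail wraps, giving 3, 9, 3, 5
```

In the second call the linear outputs 2 and 8 (indices 4 and 5) are added onto indices 0 and 1, which is the circular overlap that the zero-padding avoids.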

Pseudocode

The following is a pseudocode representation of the algorithm:

```
(Overlap-add algorithm for linear convolution)
h = FIR_filter
M = length(h)
Nx = length(x)
N = 8 × 2^ceiling( log2(M) )   (8 times the smallest power of two not less than filter length M. See next section for a slightly better choice.)
step_size = N - (M-1)          (L in the text above)
H = DFT(h, N)
position = 0
y(1 : Nx + M-1) = 0

while position < Nx do
    seg_len = min(step_size, Nx - position)     (the last segment may be shorter)
    yk = IDFT(DFT(x(position+(1:seg_len)), N) × H)
    y(position+(1:seg_len+M-1)) = y(position+(1:seg_len+M-1)) + yk(1:seg_len+M-1)
    position = position + step_size
end
```
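A runnable Python sketch of the same loop follows. It assumes real-valued `x` and `h`, uses 0-based indexing, and substitutes a small hand-written recursive radix-2 FFT for the DFT/IDFT calls; all function names are hypothetical, not a library API. Like the pseudocode, it defaults to $N$ equal to 8 times the smallest power of two not less than $M$, and it processes a final segment shorter than `step_size`, so `len(x)` need not be a multiple of it.

```python
import cmath
import math


def fft(a):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even, odd = fft(a[0::2]), fft(a[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]  # twiddle factor times odd part
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def ifft(A):
    """Inverse FFT using ifft(A) = conj(fft(conj(A))) / N."""
    n = len(A)
    return [c.conjugate() / n for c in fft([c.conjugate() for c in A])]


def overlap_add(x, h, N=None):
    """Linear convolution of real x with real FIR h by overlap-add (0-based)."""
    M, Nx = len(h), len(x)
    if N is None:
        # 8 times the smallest power of two not less than M, as in the pseudocode.
        N = 8 * 2 ** math.ceil(math.log2(M))
    assert N >= M and (N & (N - 1)) == 0, "N must be a power of two >= M"
    step_size = N - (M - 1)                  # L in the text
    H = fft(list(h) + [0] * (N - M))         # DFT of h, computed only once
    y = [0.0] * (Nx + M - 1)
    position = 0
    while position < Nx:
        seg = list(x[position:position + step_size])  # the last segment may be shorter
        Xk = fft(seg + [0] * (N - len(seg)))          # zero-pad the segment to N points
        yk = ifft([a * b for a, b in zip(Xk, H)])     # Eq.2: N-point circular convolution
        for i in range(len(seg) + M - 1):             # only these samples can be nonzero
            y[position + i] += yk[i].real             # overlap-add into the output
        position += step_size
    return y


print(overlap_add([1.0, 2.0, 3.0], [1.0, 1.0]))  # approximately [1, 3, 5, 3]
```

Comparing the output with direct evaluation of Eq.1 on arbitrary input is a quick sanity check; the differences should be at the level of floating-point rounding.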

Efficiency considerations

When the DFT and IDFT are implemented by the FFT algorithm, each iteration of the pseudocode above requires about $N(\log_2 N + 1)$ complex multiplications for the FFT, the product of arrays, and the IFFT ($H$ is computed only once, before the loop).[b] Each iteration produces $N - M + 1$ output samples, so the number of complex multiplications per output sample is about:

$$\frac{N(\log_2 N + 1)}{N - M + 1}. \tag{Eq.3}$$

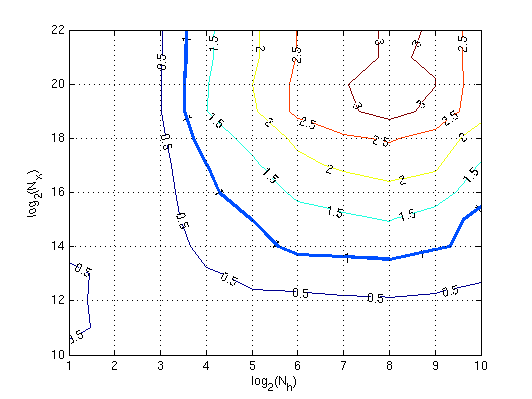

For example, when $M = 201$ and $N = 1024$, Eq.3 equals $13.67$, whereas direct evaluation of Eq.1 would require up to $201$ complex multiplications per output sample, the worst case being when both $x$ and $h$ are complex-valued. Also note that for any given $M$, Eq.3 has a minimum with respect to $N$. Figure 2 is a graph of the values of $N$ that minimize Eq.3 for a range of filter lengths ($M$).
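A small Python sketch can evaluate Eq.3 and search for the minimizing $N$. It assumes, as in the text, that $N$ is restricted to powers of two not less than $M$ (so the denominator $N - M + 1$ stays positive); the function names and the search limit `max_exp` are hypothetical.

```python
import math


def ops_per_output(N, M):
    """Eq.3: approximate complex multiplications per output sample."""
    return N * (math.log2(N) + 1) / (N - M + 1)


def best_fft_size(M, max_exp=24):
    """Power-of-two N >= M that minimizes Eq.3, searched up to 2**max_exp."""
    candidates = [2 ** e for e in range(1, max_exp + 1) if 2 ** e >= M]
    return min(candidates, key=lambda N: ops_per_output(N, M))


print(round(ops_per_output(1024, 201), 2))  # 13.67
print(best_fft_size(201))                   # 2048
```

For $M = 201$ the search returns $N = 2048$ (about 13.30 multiplications per output sample), slightly better than $N = 1024$ (about 13.67); beyond that the growing $\log_2 N$ term dominates and the cost rises again.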

Instead of Eq.1, we can also consider applying Eq.2 to a long sequence of length $N_x$ samples. The total number of complex multiplications would be:

$$N_x \cdot (\log_2 N_x + 1).$$

Comparatively, the number of complex multiplications required by the pseudocode algorithm is:

$$N_x \cdot (\log_2 N + 1) \cdot \frac{N}{N - M + 1}.$$

Hence the cost of the overlap–add method scales almost as $O(N_x \log_2 N)$, while the cost of a single, large circular convolution is almost $O(N_x \log_2 N_x)$. The two methods are also compared in Figure 3, created by a MATLAB simulation. Its contours are lines of constant ratio of the running times of the two methods. When the overlap–add method is faster, the ratio exceeds 1, and ratios as high as 3 are seen.
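The two operation counts above can be compared with a short Python sketch (function names hypothetical). It compares multiplication counts only, not measured running times as in Figure 3, and it takes the two formulas as given, assuming $N_x$ and $N$ are powers of two.

```python
import math


def cost_single_circular(Nx):
    """One large circular convolution over Nx points: Nx (log2 Nx + 1)."""
    return Nx * (math.log2(Nx) + 1)


def cost_overlap_add(Nx, N, M):
    """Overlap-add with N-point FFTs: Nx (log2 N + 1) N / (N - M + 1)."""
    return Nx * (math.log2(N) + 1) * N / (N - M + 1)


Nx, M, N = 2 ** 20, 201, 2048
ratio = cost_single_circular(Nx) / cost_overlap_add(Nx, N, M)
print(round(ratio, 2))  # 1.58
```

For $N_x = 2^{20}$, $M = 201$ and $N = 2048$ the ratio is about 1.58 in favor of overlap–add. Because the overlap–add cost grows with $\log_2 N$ rather than $\log_2 N_x$, this ratio increases slowly as $N_x$ grows.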


Notes

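- [a] This condition implies that the $x_k$ segment has at least $M - 1$ appended zeros, which prevents circular overlap of the output rise and fall transients.

- [b] The Cooley–Tukey FFT algorithm for $N = 2^k$ needs $(N/2)\log_2 N$ complex multiplications, so one FFT and one IFFT per segment cost $N \log_2 N$, and the array product adds $N$ more (see FFT – Definition and speed).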

References

- [1] Rabiner, Lawrence R.; Gold, Bernard (1975). "2.25". Theory and application of digital signal processing. Englewood Cliffs, N.J.: Prentice-Hall. pp. 63–65. ISBN 0-13-914101-4. https://archive.org/details/theoryapplicatio00rabi/page/63.

Further reading

- Oppenheim, Alan V.; Schafer, Ronald W. (1975). Digital signal processing. Englewood Cliffs, N.J.: Prentice-Hall. ISBN 0-13-214635-5.

- Hayes, M. Horace (1999). Digital Signal Processing. Schaum's Outline Series. New York: McGraw Hill. ISBN 0-07-027389-8.

- Senobari, Nader Shakibay; Funning, Gareth J.; Keogh, Eamonn; Zhu, Yan; Yeh, Chin-Chia Michael; Zimmerman, Zachary; Mueen, Abdullah (2019). "Super-Efficient Cross-Correlation (SEC-C): A Fast Matched Filtering Code Suitable for Desktop Computers". Seismological Research Letters 90 (1): 322–334. doi:10.1785/0220180122. ISSN 0895-0695. https://www.cs.ucr.edu/~eamonn/SuperEfficientCrossCorrelation.pdf.
