Digital infinity

Digital infinity is a technical term in theoretical linguistics. Alternative formulations are "discrete infinity" and "the infinite use of finite means". The idea is that all human languages follow a simple logical principle, according to which a limited set of digits (irreducible atomic sound elements) is combined to produce an infinite range of potentially meaningful expressions.

Language is, at its core, a system that is both digital and infinite. To my knowledge, there is no other biological system with these properties....

– Noam Chomsky[1]

It remains for us to examine the spiritual element of speech ... this marvelous invention of composing from twenty-five or thirty sounds an infinite variety of words, which, although not having any resemblance in themselves to that which passes through our minds, nevertheless do not fail to reveal to others all of the secrets of the mind, and to make intelligible to others who cannot penetrate into the mind all that we conceive and all of the diverse movements of our souls.

– Antoine Arnauld and Claude Lancelot[2]

Noam Chomsky cites Galileo as perhaps the first to recognise the significance of digital infinity. This principle, notes Chomsky, is "the core property of human language, and one of its most distinctive properties: the use of finite means to express an unlimited array of thoughts". In his Dialogo, Galileo describes with wonder the discovery of a means to communicate one's "most secret thoughts to any other person ... with no greater difficulty than the various collocations of twenty-four little characters upon a paper." "This is the greatest of all human inventions," Galileo continues, noting it to be "comparable to the creations of a Michelangelo".[1]
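The combinatorial point behind Galileo's "twenty-four little characters" can be made concrete with a short Python sketch. It is an illustration only, not a model of any grammar. A fixed inventory of k symbols yields k ** n distinct strings of length n, so the supply of combinations has no upper bound even though the inventory never grows. The three-letter alphabet and the helper names `strings_over` and `combinations_of_length` are hypothetical.

```python
from itertools import count, islice, product


def strings_over(alphabet):
    """Yield every finite string over a finite alphabet, shortest first.

    The generator never terminates: for each length n it yields all
    len(alphabet) ** n combinations before moving on to length n + 1.
    """
    for n in count(1):
        for combo in product(alphabet, repeat=n):
            yield "".join(combo)


def combinations_of_length(alphabet_size, n):
    """Number of distinct strings of exactly length n."""
    return alphabet_size ** n


# A hypothetical three-symbol inventory standing in for Galileo's letters.
alphabet = "abc"
first_ten = list(islice(strings_over(alphabet), 10))
print(first_ten)

# Twenty-four characters already allow this many five-letter strings.
print(combinations_of_length(24, 5))
```

The generator is lazy, so it can describe an unbounded set while only ever materialising the finite prefix that is requested.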

The computational theory of mind

'Digital infinity' corresponds to Noam Chomsky's 'universal grammar' mechanism, conceived as a computational module inserted somehow into the otherwise 'messy' (non-digital) brain of Homo sapiens. This conception of human cognition, central to the so-called 'cognitive revolution' of the 1950s and 1960s, is generally attributed to Alan Turing, who was the first scientist to argue that a man-made machine might truly be said to 'think'. His often-forgotten conclusion, however, was in line with earlier observations that a "thinking" machine would be absurd, since we have no formal idea of what "thinking" is, and indeed we still don't. Chomsky frequently pointed this out. He agreed that a mind can be said to "compute", since we have some idea of what computing is and some good evidence that the brain is doing it on at least some level; but he held that we cannot claim that a computer or any other machine is "thinking", because we have no coherent definition of what thinking is. Taking the example of consciousness, Chomsky said, "We don't even have bad theories", echoing the famous physics criticism that a theory is "not even wrong". Drawing on Turing's seminal 1950 article "Computing Machinery and Intelligence", published in Mind, Chomsky gives the example of a submarine being said to "swim", an idea Turing clearly derided. "If you want to call that swimming, fine," Chomsky says, explaining repeatedly in print and on video that Turing is consistently misunderstood on this point, one of his most cited observations.

Previously the idea of a thinking machine was famously dismissed by René Descartes as theoretically impossible. Neither animals nor machines can think, insisted Descartes, since they lack a God-given soul.[3] Turing was well aware of this traditional theological objection, and explicitly countered it.[4]

Today's digital computers are instantiations of Turing's theoretical breakthrough in conceiving the possibility of a man-made universal computing machine, known nowadays as a 'universal Turing machine'. No physical mechanism can be intrinsically 'digital', Turing explained, since, examined closely enough, its possible states vary without limit. But if most of these states can be profitably ignored, leaving only a limited set of relevant distinctions, then functionally the machine may be considered 'digital':[4]

The digital computers considered in the last section may be classified amongst the "discrete-state machines." These are the machines which move by sudden jumps or clicks from one quite definite state to another. These states are sufficiently different for the possibility of confusion between them to be ignored. Strictly speaking, there are no such machines. Everything really moves continuously. But there are many kinds of machine which can profitably be thought of as being discrete-state machines. For instance in considering the switches for a lighting system it is a convenient fiction that each switch must be definitely on or definitely off. There must be intermediate positions, but for most purposes we can forget about them.

– Alan Turing 1950
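Turing's "convenient fiction" can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: a switch's physical position is modelled as a continuous number between 0 and 1, and a hypothetical threshold of 0.5 sorts every position into 'on' or 'off'. Small random variations in the position, standing in for the intermediate states Turing says we "can forget about", never change the discrete reading. The threshold, the jitter size and the function names are all illustrative choices.

```python
import random


def switch_state(position, threshold=0.5):
    """Map a continuous switch position in [0, 1] to a discrete state.

    Intermediate positions exist physically, but the convenient fiction
    treats every position as either definitely 'on' or definitely 'off'.
    """
    return "on" if position >= threshold else "off"


def noisy(position, jitter, rng):
    """Add small continuous variation, clamped to the range [0, 1]."""
    return min(1.0, max(0.0, position + rng.uniform(-jitter, jitter)))


rng = random.Random(0)  # seeded so the run is reproducible

# Nominal positions 0.1 ('off') and 0.9 ('on'), each perturbed 1000 times.
readings = [
    switch_state(noisy(p, 0.2, rng))
    for p in (0.1, 0.9)
    for _ in range(1000)
]
print(readings.count("off"), readings.count("on"))  # 1000 1000
```

Because the jitter (0.2) is smaller than the distance from either nominal position to the threshold (0.4), the continuous variation is absorbed entirely, which is what makes the discrete description "profitable" in Turing's sense.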

An implication is that 'digits' don't exist: they and their combinations are no more than convenient fictions, operating on a level quite independent of the material, physical world. In the case of a binary digital machine, the choice at each point is restricted to 'off' versus 'on'. Crucially, the intrinsic properties of the medium used to encode signals then have no effect on the message conveyed. 'Off' (or alternatively 'on') remains unchanged regardless of whether the signal consists of smoke, electricity, sound, light or anything else. In the case of analog (more-versus-less) gradations, this is not so because the range of possible settings is unlimited. Moreover, in the analog case it does matter which particular medium is being employed: equating a certain intensity of smoke with a corresponding intensity of light, sound or electricity is just not possible. In other words, only in the case of digital computation and communication can information be truly independent of the physical, chemical or other properties of the materials used to encode and transmit messages.
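The claim that a digital message is unaffected by its carrier can be illustrated with a small Python sketch. Each hypothetical `Medium` below uses its own physical units and its own 'off' and 'on' levels; the numbers are arbitrary placeholders, not real-world calibrations. A text message is turned into bits, encoded into each medium, decoded by a simple midpoint threshold, and relayed through every medium in turn, much as in the telephone example quoted below. The recovered text is identical each time, although the physical levels (volts, lux, decibels) cannot be compared with one another.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Medium:
    """A hypothetical carrier with its own physical units and range."""
    name: str
    low: float   # physical level used for 'off'
    high: float  # physical level used for 'on'

    def encode(self, bits):
        return [self.high if b else self.low for b in bits]

    def decode(self, levels):
        # Only the distinction matters: anything nearer 'high' reads as 1.
        midpoint = (self.low + self.high) / 2
        return [1 if level >= midpoint else 0 for level in levels]


def text_to_bits(text):
    """ASCII text to a flat list of 0/1 bits, 8 bits per character."""
    return [int(b) for ch in text.encode("ascii") for b in format(ch, "08b")]


def bits_to_text(bits):
    """Inverse of text_to_bits."""
    chunks = [bits[i:i + 8] for i in range(0, len(bits), 8)]
    return bytes(int("".join(map(str, c)), 2) for c in chunks).decode("ascii")


# Illustrative media; the levels are arbitrary, not real calibrations.
media = [
    Medium("electricity (volts)", 0.0, 5.0),
    Medium("light (lux)", 10.0, 800.0),
    Medium("sound (dB)", 30.0, 90.0),
]

message = "mind"
bits = text_to_bits(message)

# Each medium on its own carries the same message.
for medium in media:
    print(f"{medium.name}: {bits_to_text(medium.decode(medium.encode(bits)))}")

# Relaying through every medium in sequence leaves the message unchanged.
signal = bits
for medium in media:
    signal = medium.decode(medium.encode(signal))
print(bits_to_text(signal))  # mind
```

Only the two-way distinction is preserved from one medium to the next; nothing about the physical magnitudes survives the hand-over, which is the sense in which the information is independent of the medium.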

In this way, digital computation and communication operate independently of the physical properties of the computing machine. As scientists and philosophers during the 1950s digested the implications, they exploited the insight to explain why 'mind' apparently operates on so different a level from 'matter'. Following Turing, Descartes's celebrated distinction between immortal 'soul' and mortal 'body' was reconceptualised as no more than the distinction between (digitally encoded) information on the one hand and, on the other, the particular physical medium (light, sound, electricity or whatever) chosen to transmit the corresponding signals. Note that the Cartesian assumption of mind's independence of matter implied, in the human case at least, the existence of some kind of digital computer operating inside the human brain.

Information and computation reside in patterns of data and in relations of logic that are independent of the physical medium that carries them. When you telephone your mother in another city, the message stays the same as it goes from your lips to her ears even as it physically changes its form, from vibrating air, to electricity in a wire, to charges in silicon, to flickering light in a fibre optic cable, to electromagnetic waves, and then back again in reverse order. ... Likewise, a given programme can run on computers made of vacuum tubes, electromagnetic switches, transistors, integrated circuits, or well-trained pigeons, and it accomplishes the same things for the same reasons.

This insight, first expressed by the mathematician Alan Turing, the computer scientists Allen Newell, Herbert Simon, and Marvin Minsky, and the philosophers Hilary Putnam and Jerry Fodor, is now called the computational theory of mind. It is one of the great ideas in intellectual history, for it solves one of the puzzles that make up the 'mind-body problem', how to connect the ethereal world of meaning and intention, the stuff of our mental lives, with a physical hunk of matter like the brain. ... For millennia this has been a paradox. ... The computational theory of mind resolves the paradox.

– Steven Pinker[5]

A digital apparatus

Turing did not claim that the human mind really is a digital computer. More modestly, he proposed that digital computers might one day qualify in human eyes as machines endowed with "mind". However, it was not long before philosophers (most notably Hilary Putnam) took what seemed to be the next logical step—arguing that the human mind itself is a digital computer, or at least that certain mental "modules" are best understood that way.

Noam Chomsky rose to prominence as one of the most audacious champions of this 'cognitive revolution'. Language, he proposed, is a computational 'module' or 'device' unique to the human brain. Previously, linguists had thought of language as learned cultural behaviour: chaotically variable, inseparable from social life and therefore beyond the remit of natural science. The Swiss linguist Ferdinand de Saussure, for example, had defined linguistics as a branch of 'semiology', itself inseparable from anthropology, sociology and the study of man-made conventions and institutions. By picturing language instead as the natural mechanism of 'digital infinity', Chomsky promised to bring scientific rigour to linguistics as a branch of strictly natural science.

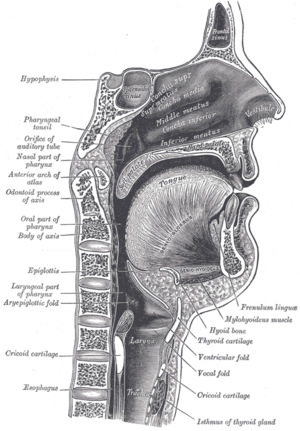

In the 1950s, phonology was generally considered the most rigorously scientific branch of linguistics. For phonologists, "digital infinity" was made possible by the human vocal apparatus, conceptualised as a kind of machine consisting of a small number of binary switches. "Voicing", for instance, could be switched 'on' or 'off', as could palatalisation, nasalisation and so forth. Take the consonant [b] and switch voicing to the 'off' position, and you get [p]. Every possible phoneme in any of the world's languages might in this way be generated by specifying a particular on/off configuration of the switches ('articulators') constituting the human vocal apparatus. This approach became celebrated as 'distinctive features' theory, credited in large part to the Russian linguist and polymath Roman Jakobson. The basic idea was that every phoneme in every natural language could in principle be reduced to its irreducible atomic components: a set of 'on' or 'off' choices ('distinctive features') allowed by the design of a digital apparatus consisting of the human tongue, soft palate, lips, larynx and so forth.
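The switch-flipping idea can be sketched in Python as a lookup from feature bundles to phoneme symbols. The inventory below is a deliberately tiny, hypothetical one with only three binary features; actual distinctive-feature analyses use many more features and different definitions. Each phoneme is a tuple of on/off settings, and `flip` (a name introduced here for illustration) toggles one setting and reports which phoneme, if any, the new configuration corresponds to.

```python
# Hypothetical, heavily simplified feature set. Real distinctive-feature
# inventories use many more features than these three.
FEATURES = ("voiced", "labial", "nasal")

# Each phoneme is a bundle of on/off (True/False) settings, one per feature.
PHONEMES = {
    "p": (False, True, False),
    "b": (True, True, False),
    "m": (True, True, True),
    "t": (False, False, False),
    "d": (True, False, False),
    "n": (True, False, True),
}

# Reverse index: feature bundle -> phoneme symbol.
BY_BUNDLE = {bundle: symbol for symbol, bundle in PHONEMES.items()}


def flip(phoneme, feature):
    """Toggle one feature and return the resulting phoneme, or None if
    that configuration is not in this toy inventory."""
    bundle = list(PHONEMES[phoneme])
    i = FEATURES.index(feature)
    bundle[i] = not bundle[i]
    return BY_BUNDLE.get(tuple(bundle))


print(flip("b", "voiced"))  # p
print(flip("b", "nasal"))   # m
print(flip("d", "labial"))  # b
print(flip("m", "voiced"))  # None: no voiceless nasal in this inventory
```

With three binary features there are 2 ** 3 = 8 possible configurations, of which this toy inventory uses six; a configuration that no phoneme occupies simply returns `None`.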

Chomsky's original work was in morphophonemics. During the 1950s, he became inspired by the prospect of extending Roman Jakobson's 'distinctive features' approach—now hugely successful—far beyond its original field of application. Jakobson had already persuaded a young social anthropologist—Claude Lévi-Strauss—to apply distinctive features theory to the study of kinship systems, in this way inaugurating 'structural anthropology'. Chomsky—who got his job at the Massachusetts Institute of Technology thanks to the intervention of Jakobson and his student, Morris Halle—hoped to explore the extent to which similar principles might be applied to the various sub-disciplines of linguistics, including syntax and semantics.[6] If the phonological component of language was demonstrably rooted in a digital biological 'organ' or 'device', why not the syntactic and semantic components as well? Might not language as a whole prove to be a digital organ or device?

This led some of Chomsky's early students to the idea of 'generative semantics': the proposal that the speaker generates word and sentence meanings by combining irreducible constituent elements of meaning, each of which can be switched 'on' or 'off'. To produce 'bachelor', by this logic, the relevant component of the brain must switch 'animate', 'human' and 'male' to the 'on' (+) position while keeping 'married' switched 'off' (-). The underlying assumption is that the requisite conceptual primitives (irreducible notions such as 'animate', 'male', 'human', 'married' and so forth) are genetically determined internal components of the human language organ. This idea would rapidly encounter intellectual difficulties, sparking controversies that culminated in the so-called 'linguistics wars', as described in Randy Allen Harris's 1993 book of that name.[7] The linguistics wars attracted young and ambitious scholars impressed by the recent emergence of computer science and its promise of scientific parsimony and unification. If the theory worked, the simple principle of digital infinity would apply to language as a whole. Linguistics in its entirety might then lay claim to the coveted status of natural science. No part of the discipline, not even semantics, need any longer be "contaminated" by association with such 'unscientific' disciplines as cultural anthropology or social science.[8][9]: 3 [10]
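The 'bachelor' example can be sketched in Python as a lexicon of feature settings plus a lookup that finds every word agreeing with a given specification. The lexicon is a hypothetical toy, and `words_matching` is a name introduced for illustration; the sketch shows only the mechanism described above, not whether such primitives are adequate or innate. A word that leaves a feature unspecified (here, 'married' for 'stallion') does not match a request that sets that feature.

```python
# Hypothetical toy lexicon: each word is a set of semantic features with
# '+' (True) or '-' (False) values, in the style of the 'bachelor' example.
LEXICON = {
    "bachelor": {"animate": True, "human": True, "male": True, "married": False},
    "husband": {"animate": True, "human": True, "male": True, "married": True},
    "wife": {"animate": True, "human": True, "male": False, "married": True},
    "stallion": {"animate": True, "human": False, "male": True},
}


def words_matching(**spec):
    """Return, sorted, the words whose features agree with every setting
    in spec. A feature the word leaves unspecified never matches."""
    return sorted(
        word
        for word, features in LEXICON.items()
        if all(features.get(name) == value for name, value in spec.items())
    )


print(words_matching(animate=True, human=True, male=True, married=False))
# ['bachelor']
print(words_matching(male=True))
# ['bachelor', 'husband', 'stallion']
```

Representing meanings as bundles of binary switches mirrors the phonological case directly, which is exactly the extension of the distinctive-features approach that the passage describes.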

See also

- Decoding Chomsky

- Generative linguistics

- The Library of Babel

- Origin of language

- Origin of speech

- Recursion

References

- [1] Chomsky, Noam (1991). "Linguistics and Cognitive Science: Problems and Mysteries". In Asa Kasher (ed.), The Chomskyan Turn. Oxford: Blackwell, pp. 26–53, p. 50.

- [2] Arnauld, Antoine and Claude Lancelot (1975 [1660]). The Port-Royal Grammar. The Hague: Mouton, pp. 65–66.

- [3] Descartes, René (1985 [1637]). "Discourse on the Method". In The Philosophical Writings of Descartes, vol. 1. Translated by J. Cottingham, R. Stoothoff and D. Murdoch. Cambridge: Cambridge University Press, pp. 139–141.

- [4] Turing, Alan (1950). "Computing Machinery and Intelligence". Mind 59 (236): 433–60. doi:10.1093/mind/LIX.236.433.

- [5] Pinker, Steven (1997). How the Mind Works. London: Allen Lane, Penguin, p. 24.

- [6] Chomsky, Noam (1965). Aspects of the Theory of Syntax. Cambridge, MA: MIT Press, pp. 64–127.

- [7] Harris, Randy Allen (1993). The Linguistics Wars. New York and Oxford: Oxford University Press. OCLC summary: "When it was first published in 1957, Noam Chomsky's Syntactic Structures seemed to be just a logical expansion of the reigning approach to linguistics. Soon, however, there was talk from Chomsky and his associates about plumbing mental structure; then there was a new phonology; and then there was a new set of goals for the field, cutting it off completely from its anthropological roots and hitching it to a new brand of psychology. Rapidly, all of Chomsky's ideas swept the field. While the entrenched linguists were not looking for a messiah, apparently many of their students were."

- [8] Knight, Chris (2004). "Decoding Chomsky". European Review (London, UK: Academia Europaea) 12 (4): 581–603. doi:10.1017/S1062798704000493. http://radicalanthropologygroup.org/sites/default/files/pdf/class_text_070.pdf. Retrieved 15 January 2020. "For Chomsky, the only channels of communication that are free from such ideological contamination are those of genuine natural science."

- [9] Leech, Geoffrey Neil (1983). Principles of Pragmatics. Longman Linguistics Library. London: Longman, p. 250. ISBN 0582551102. OCLC 751316590. "It has the advantage of maintaining the integrity of linguistics, as within a walled city, away from the contaminating influences of use and context. But many have grave doubts about the narrowness of this paradigm's definition of language, and about the high degree of abstraction and idealization of data which it requires."

- [10] Just as Bloomfield's mentalism was one way of keeping meaning away from form, by consigning it to psychology and sociology, so Chomsky's performance is a way to keep meaning and other contaminants away from form, by consigning them to "memory limitations, distractions, shifts of attention and interest" as well as to "the physical and social conditions of language use" (1965 [1964]:3; 1977:3), that is, to psychology and sociology.

Further reading

- Knight, C. and C. Power 2008. 'Unravelling digital infinity'. Paper presented to the 7th International Conference on the Evolution of Language (EVOLANG), Barcelona, 2008.

- Knight, C. 2008. 'Honest fakes' and language origins. Journal of Consciousness Studies, 15, No. 10–11, 2008, pp. 236–48.
