Volume ray casting

Volume ray casting, sometimes called volumetric ray casting, volumetric ray tracing, or volume ray marching, is an image-based volume rendering technique. It computes 2D images from 3D volumetric data sets (3D scalar fields). Volume ray casting, which processes volume data, should not be confused with ray casting in the sense used in ray tracing, which processes surface data. In the volumetric variant, the computation does not stop at the surface but "pushes through" the object, sampling it along the ray. Unlike ray tracing, volume ray casting does not spawn secondary rays.[1] When the context or application is clear, some authors simply call it ray casting.[1][2] Because ray marching does not necessarily require an exact solution to ray intersection and collisions, it is suitable for real-time computing in many applications for which ray tracing is unsuitable.

Classification

The technique of volume ray casting can be derived directly from the rendering equation, and it produces renderings of very high quality. It is classified as an image-based volume rendering technique because the computation emanates from the output image rather than from the input volume data, as it does in object-based techniques.

Basic algorithm

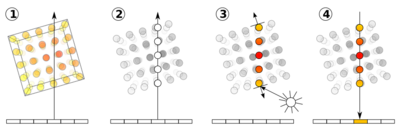

In its basic form, the volume ray casting algorithm comprises four steps:

- Ray casting. For each pixel of the final image, a ray of sight is shot ("cast") through the volume. At this stage it is useful to consider the volume as enclosed within a bounding primitive, a simple geometric object (usually a cuboid) that is used to intersect the ray of sight with the volume.

- Sampling. Along the part of the ray of sight that lies within the volume, equidistant sampling points (samples) are selected. In general, the volume is not aligned with the ray of sight, and sampling points will usually be located in between voxels. Because of that, it is necessary to interpolate the values of the samples from their surrounding voxels (commonly using trilinear interpolation).

- Shading. For each sampling point, a transfer function retrieves an RGBA material colour and a gradient of illumination values is computed. The gradient represents the orientation of local surfaces within the volume. The samples are then shaded (i.e. coloured and lit) according to their surface orientation and the location of the light source in the scene.

- Compositing. After all sampling points have been shaded, they are composited along the ray of sight, resulting in the final colour value for the pixel that is currently being processed. The composition is derived directly from the rendering equation and is similar to blending acetate sheets on an overhead projector. It may work back-to-front, i.e. computation starts with the sample farthest from the viewer and ends with the one nearest to the viewer. This ordering ensures that masked parts of the volume do not affect the resulting pixel. Front-to-back order can be more computationally efficient: the residual ray energy decreases as the ray travels away from the camera, so the contribution to the rendering integral diminishes and a more aggressive speed/quality compromise may be applied (increasing the distance between samples along the ray is one such trade-off).
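
The four steps above can be illustrated with a minimal sketch in Python. It is an illustration under stated assumptions rather than a reference implementation: the volume is a nested list indexed as `volume[z][y][x]` with values in [0, 1], the bounding cuboid is taken to span the voxel centres, `transfer_function` is a hypothetical greyscale mapping, and gradient-based lighting from the shading step is omitted for brevity. The function names are chosen for this example only.

```python
import math

def intersect_box(origin, direction, box_min, box_max):
    """Slab test: return (t_near, t_far) where the ray crosses the box, or None."""
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return None  # ray is parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
    if t_near > t_far or t_far < 0.0:
        return None
    return max(t_near, 0.0), t_far

def lerp(a, b, t):
    return a + (b - a) * t

def trilinear(volume, x, y, z):
    """Interpolate volume[z][y][x] at a fractional position in voxel coordinates."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    x = min(max(x, 0.0), nx - 1.0)
    y = min(max(y, 0.0), ny - 1.0)
    z = min(max(z, 0.0), nz - 1.0)
    x0, y0, z0 = int(x), int(y), int(z)
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0
    c00 = lerp(volume[z0][y0][x0], volume[z0][y0][x1], fx)
    c10 = lerp(volume[z0][y1][x0], volume[z0][y1][x1], fx)
    c01 = lerp(volume[z1][y0][x0], volume[z1][y0][x1], fx)
    c11 = lerp(volume[z1][y1][x0], volume[z1][y1][x1], fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)

def transfer_function(value):
    """Hypothetical transfer function: grey colour, opacity proportional to value."""
    v = min(max(value, 0.0), 1.0)
    return (v, v, v), 0.5 * v

def cast_ray(volume, origin, direction, step=0.25):
    """Return (rgb, alpha) for one ray, composited front-to-back."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    hit = intersect_box(origin, direction, (0.0, 0.0, 0.0), (nx - 1.0, ny - 1.0, nz - 1.0))
    if hit is None:
        return (0.0, 0.0, 0.0), 0.0
    t, t_far = hit
    colour = [0.0, 0.0, 0.0]
    alpha = 0.0
    while t <= t_far and alpha < 0.99:  # stop early once the ray is nearly opaque
        x, y, z = (o + t * d for o, d in zip(origin, direction))
        rgb, a = transfer_function(trilinear(volume, x, y, z))
        weight = (1.0 - alpha) * a  # remaining transparency times sample opacity
        for i in range(3):
            colour[i] += weight * rgb[i]
        alpha += weight
        t += step
    return tuple(colour), alpha

# Usage: one ray along +x through a small uniform 2x2x2 volume.
ones = [[[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]]
print(cast_ray(ones, (-1.0, 0.5, 0.5), (1.0, 0.0, 0.0)))
```

A full renderer would call `cast_ray` once per pixel, with the ray origin and direction derived from the camera model. Front-to-back order is used here because it allows the loop to end as soon as the accumulated opacity approaches 1.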

Advanced adaptive algorithms

The adaptive sampling strategy dramatically reduces the rendering time for high-quality rendering: the higher the quality and/or size of the data set, the more significant the advantage over the regular (even) sampling strategy.[1] However, adaptive ray casting upon a projection plane and adaptive sampling along each individual ray do not map well to the SIMD architecture of modern GPUs. Multi-core CPUs, by contrast, are a good fit for this technique, which makes them suitable for interactive ultra-high-quality volumetric rendering.
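
The idea of adaptive sampling along a single ray can be sketched as follows. This is only one possible heuristic, not the scheme of the cited source: the step length is assumed to grow with the opacity already accumulated, and each sample's opacity is corrected for the longer step. The callback `opacity_at` and the parameters `growth` and `cutoff` are hypothetical names introduced for this example.

```python
def march_adaptive(opacity_at, t_near, t_far, base_step=0.1, growth=4.0, cutoff=0.99):
    """Accumulate opacity front-to-back with a step that grows as the ray saturates.

    opacity_at(t) is assumed to return the opacity of one base_step-long
    segment at ray parameter t. Returns (accumulated_alpha, sample_count).
    """
    alpha, t, samples = 0.0, t_near, 0
    while t <= t_far and alpha < cutoff:
        # Take longer steps once later samples can contribute only a little.
        step = base_step * (1.0 + growth * alpha)
        a = min(max(opacity_at(t), 0.0), 1.0)
        # Opacity correction: a segment `step` long absorbs more than a base step.
        a = 1.0 - (1.0 - a) ** (step / base_step)
        alpha += (1.0 - alpha) * a
        t += step
        samples += 1
    return alpha, samples

# Usage: compare regular sampling (growth=0) with the adaptive variant.
def uniform(t):
    return 0.1

print(march_adaptive(uniform, 0.0, 10.0, growth=0.0))
print(march_adaptive(uniform, 0.0, 10.0, growth=4.0))
```

Because the number of iterations differs from ray to ray, neighbouring rays diverge in their control flow, which illustrates why this kind of loop maps less naturally to SIMD hardware.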

Examples of high quality volumetric ray casting

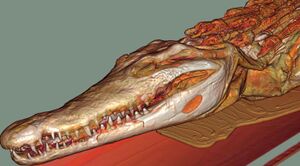

This gallery shows a collection of images rendered using high-quality volume ray casting. The crisp appearance of volume ray casting images commonly distinguishes them from the output of texture-mapping volume rendering, owing to the higher accuracy of volume ray casting.

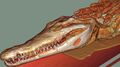

The CT scan of the crocodile mummy has a resolution of 3000×512×512 (16-bit); the skull data set has a resolution of 512×512×750 (16-bit).

Ray marching

The term ray marching is broader and refers to methods in which simulated rays are traversed iteratively, effectively dividing each ray into smaller ray segments and sampling some function at each step. These methods are often used in cases where creating explicit geometry, such as triangles, is not a good option.

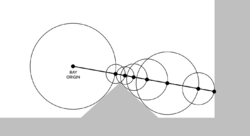

Other examples of ray marching

- In SDF ray marching, or sphere tracing,[3] the intersection point between the ray and a surface defined by a signed distance function (SDF) is approximated. The SDF is evaluated at each iteration in order to take steps that are as large as possible without missing any part of the surface. A threshold is used to stop further iteration once a point has been reached that is close enough to the surface. This method is often used for 3D fractal rendering.[4]

- When rendering screen-space effects, such as screen-space reflection (SSR) and screen-space shadows, rays are traced using G-buffers, in which depth and surface normal data are stored for each 2D pixel.
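
Sphere tracing, as described in the first item above, can be sketched in a few lines of Python. The example assumes a unit-length ray direction and uses a sphere as the signed distance function; `sphere_sdf`, `max_steps`, `epsilon` and `max_distance` are illustrative names and values, not part of any particular library.

```python
import math

def sphere_sdf(p, centre=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, centre))) - radius

def sphere_trace(sdf, origin, direction, max_steps=128, epsilon=1e-4, max_distance=100.0):
    """Return the ray parameter t of the first hit, or None if nothing is hit.

    `direction` is assumed to be normalised, so that the SDF value is a
    safe step length along the ray.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        distance = sdf(p)
        if distance < epsilon:
            return t  # close enough to the surface: stop iterating
        t += distance  # largest step that cannot pass through the surface
        if t > max_distance:
            break
    return None

# Usage: a ray starting 3 units in front of a unit sphere.
print(sphere_trace(sphere_sdf, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0)))
```

The step length equals the distance returned by the SDF, so the march takes large steps in empty space and small ones near the surface.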

See also

- Amira – commercial 3D visualization and analysis software (for life sciences and biomedical) that uses a ray-casting volume rendering engine (based on Open Inventor)

- Avizo – commercial 3D visualization and analysis software that uses a ray-casting volume rendering engine (also based on Open Inventor)

- Shadertoy – online community and platform for computer graphics professionals, academics and enthusiasts who share, learn and experiment with rendering techniques and procedural art through GLSL code

- Volumetric path tracing

References

- Daniel Weiskopf (2006). GPU-Based Interactive Visualization Techniques. Springer Science & Business Media. p. 21. ISBN 978-3-540-33263-3.

- Barton F. Branstetter (2009). Practical Imaging Informatics: Foundations and Applications for PACS Professionals. Springer Science & Business Media. p. 126. ISBN 978-1-4419-0485-0. https://books.google.com/books?id=Q6Hc0oMyiYYC&pg=PA126.

- Hart, John C. (June 1995), "Sphere Tracing: A Geometric Method for the Antialiased Ray Tracing of Implicit Surfaces", The Visual Computer, http://graphics.stanford.edu/courses/cs348b-20-spring-content/uploads/hart.pdf

- Hart, John C.; Sandin, Daniel J.; Kauffman, Louis H. (July 1989), "Ray Tracing Deterministic 3-D Fractals", Computer Graphics, http://graphics.stanford.edu/courses/cs348b-20-spring-content/uploads/hart.pdf

External links

- Acceleration Techniques for GPU-based Volume Rendering (J. Krüger, R. Westermann, IEEE Visualization 2003)

- A single-pass GPU ray casting framework for interactive out-of-core rendering of massive volumetric datasets (E. Gobbetti, F. Marton, J.A. Iglesias Guitian, The Visual Computer 2008)
