Atari Sierra

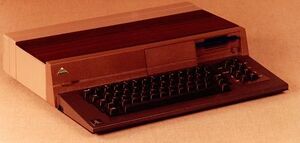

The only known image of the Sierra mock-up. It resembles the Amiga 1000 but has an attached keyboard; an Atari-style joystick port can be seen on the left.

| Also known as | Rainbow |
|---|---|
| Developer | Atari, Inc. |
| Type | 16-bit/32-bit personal computer |
| Release date | Prototype 1983; project cancelled |

Sierra was the code name for a 16-bit/32-bit personal computer designed by the Sunnyvale Research Lab (SRL) of Atari, Inc. starting around 1983. The design was one of several new 16-bit computer systems proposing to use a new chipset from Atari Corporate Research.

The graphics portion consisted of a two-chip system called "Silver and Gold": Gold generated the video output, while Silver was a sprite processor that fed data to Gold. The chipset was collectively known as Rainbow, and the system is sometimes referred to by this name.[lower-alpha 1] The audio portion of the chipset consisted of a powerful sound synthesizer known as AMY. The CPU had not been chosen, but the Motorola 68000, National Semiconductor 32016 and Intel 286 were being considered. Several operating systems were proposed, including VisiCorp's Visi On and Atari's internal OS code-named "Snowcap".

Sierra had been bogged down since its inception by a committee process that never reached consensus on the design specifications. A second project, Atari Gaza, ran in parallel, designing an upscale workstation running either BSD Unix or CP/M-68K. Atari management concluded it had no way to sell into the business market and redirected the Gaza engineers to "Mickey", a new low-cost machine based on the Amiga chipset. All of these systems were still incomplete when the company was purchased by Jack Tramiel in July 1984 and the majority of the staff was laid off. Only the synthesizer caught the interest of Tramel Technology lead engineer Shiraz Shivji; the rest of the projects disappeared.

History

Earlier 8-bit designs

Atari's earlier consoles and computers generally used an off-the-shelf 8-bit central processor with custom chips to improve performance and capabilities. In most designs of the era, graphics, sound and similar tasks were handled by the main CPU and converted to output using relatively simple digital-to-analog converters. Offloading these duties to the custom chips allowed the CPU in Atari's design to spend less time on housekeeping chores. Atari referred to these chips as co-processors, sharing the main memory to communicate instructions and data. In modern terminology, these would be known as integrated graphics and sound, now a common solution for mainstream offerings.[1]

In the Atari 2600, a single all-in-one support chip known as the TIA provided graphics and sound support to its stripped-down MOS Technology 6502 derivative, the 6507. Due to the high price of computer memory, the TIA was designed to use almost no traditional RAM. The screen was drawn from the data for a single line, which the program had to change on the fly as the television drew down the screen. This led to both a quirky design and surprising programming flexibility; it was some time before programmers learned the knack of "racing the beam", but once they did, 2600 games rapidly improved over early efforts.[1]

The much more powerful Atari 8-bit family used the same basic design concept, but this time supported by three chips. The C/GTIA was a graphics chip, greatly updated compared to the TIA. Sound was moved to the new POKEY, which provided four-channel sound and also handled some basic input/output tasks such as keyboard scanning. Finally, the software-based display system used in the 2600 was implemented in hardware in ANTIC, which was responsible for background graphics (bitmaps) and character-based output. ANTIC allowed the programmer to provide a simple list of instructions, which it then converted into data fed to the C/GTIA, freeing the programmer of this task. This separation of duties allowed each sub-system to be more powerful than the all-in-one TIA, while the updated design also greatly reduced programming complexity compared to the 2600.[2]

Rainbow

By the early 1980s, a new generation of CPU designs was coming to market with much greater capability than the earlier 8-bit designs. Notable among these were the Intel 8088 and Zilog Z8000, designs with 16-bit internals, which initially became available as daughtercards on S-100 bus machines and other platforms as early as the late 1970s.[3] But even as these were coming to market, more powerful 32-bit designs were emerging, notably the Motorola 68000 (m68k), which was announced in 1979[4] and led several other companies to begin developing their own 32-bit designs.[5]

Atari's Sunnyvale Research Lab (SRL),[lower-alpha 2] run by Alan Kay and Kristina Hooper Woolsey, was tasked with keeping the company on the leading edge, exploring projects beyond the next fiscal year.[lower-alpha 3] They began experimenting with the new 16- and 32-bit chips in the early 1980s. By 1982 it was clear Atari was not moving forward with these new chips as rapidly as other companies. Some panic ensued, and a new effort began to develop a working system.[6]

Steve Saunders began the process in late 1982 by sitting down with the guru of the 8-bit series chips.[7][lower-alpha 4] He was astonished at the system's limitations and was determined to design something better. His design tracked a set of rectangular areas, each with its own origin point and priority. The chipset would search through the rectangles in priority order until it found the first one containing a visible color value at that screen location. One color in each rectangle's color lookup table could be defined as transparent, allowing lower-priority objects beneath it to show through. In this way, the system would offer the fundamental basis for windowing support.[9]
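The priority search described above can be sketched in Python. This is a minimal model under stated assumptions, not Atari's implementation: the `Rect` class, the convention that a lower `priority` value is nearer the viewer, and the storage of pixels as plain lists of color indices are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Rect:
    """One rectangular display object (hypothetical model, not Atari's record layout)."""
    x: int                             # screen column of the rectangle's origin
    y: int                             # screen line of the rectangle's origin
    pixels: List[List[int]]            # rows of color-lookup indices
    priority: int                      # assumption: lower value = nearer the viewer
    transparent: Optional[int] = None  # index treated as see-through, if any

    def index_at(self, sx: int, sy: int) -> Optional[int]:
        """Return the color index covering screen point (sx, sy), or None if outside."""
        lx, ly = sx - self.x, sy - self.y
        if 0 <= ly < len(self.pixels) and 0 <= lx < len(self.pixels[ly]):
            return self.pixels[ly][lx]
        return None


def resolve_pixel(rects: List[Rect], sx: int, sy: int) -> Optional[Tuple[Rect, int]]:
    """Search rectangles in priority order; the first non-transparent hit wins."""
    for rect in sorted(rects, key=lambda r: r.priority):
        idx = rect.index_at(sx, sy)
        if idx is not None and idx != rect.transparent:
            return rect, idx
    return None  # nothing visible here: the background color would be shown
```

Because the search stops at the first opaque index, a rectangle whose unused area holds its transparent index lets whatever lies beneath it show through, which is the windowing behavior the text describes. Returning `None` stands in for falling back to the background color.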

Each rectangle in the display could be as large or small as required. One might, for instance, make a rectangle larger than the screen, which could then be scrolled simply by updating the origin point in its description block. A rectangle moved off the screen was ignored during drawing, so rectangles could serve as offscreen drawing areas and then be "flipped" onto the visible screen by changing their origin point once drawing was complete. Small rectangles could be used for movable objects, a task earlier Atari designs handled with custom sprite hardware. Each rectangle had its own bit depth of 1, 2, 4 or 8 bits, and its own color lookup table that mapped the 2, 4, 16 or 256 color registers of the selected bit depth onto an underlying hardware palette of 4,096 colors. The data could be stored using run-length encoding (RLE) to reduce memory needs.[10] The display was constructed one line at a time in an internal buffer, which was then output to Gold as it requested data.[11]
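The per-rectangle color path can be sketched as three small steps: expand run-length data, split packed bytes into indices at the rectangle's bit depth, and map each index through that rectangle's lookup table into the 4,096-color palette. The `(count, value)` RLE format, the most-significant-bits-first pixel packing, and the 4-bits-per-channel layout of the 12-bit palette entries are assumptions for illustration; the source does not specify them.

```python
from typing import List, Sequence, Tuple


def rle_decode(runs: Sequence[Tuple[int, int]]) -> List[int]:
    """Expand hypothetical (count, value) pairs into a flat list of values."""
    out: List[int] = []
    for count, value in runs:
        out.extend([value] * count)
    return out


def unpack(data: bytes, depth: int) -> List[int]:
    """Split packed bytes into color indices, leftmost pixel in the high bits (assumed)."""
    if depth not in (1, 2, 4, 8):
        raise ValueError("Rainbow rectangles used 1, 2, 4 or 8 bits per pixel")
    mask = (1 << depth) - 1
    out: List[int] = []
    for byte in data:
        for shift in range(8 - depth, -1, -depth):
            out.append((byte >> shift) & mask)
    return out


def rgb12(r: int, g: int, b: int) -> int:
    """Pack 4-bit red, green and blue into one 12-bit palette value (assumed layout)."""
    return (r & 0xF) << 8 | (g & 0xF) << 4 | (b & 0xF)


def apply_clut(indices: Sequence[int], clut: Sequence[int], depth: int) -> List[int]:
    """Map each index through a per-rectangle lookup table of 2**depth entries."""
    if len(clut) != 1 << depth:
        raise ValueError(f"a {depth}-bit rectangle needs {1 << depth} CLUT entries")
    return [clut[i] for i in indices]
```

A 1-bit rectangle therefore takes a two-entry table, which is why bit depths of 1, 2, 4 and 8 correspond to 2, 4, 16 and 256 color registers. Because each rectangle carries its own table, two rectangles can use the same index values for entirely different palette colors.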

Work on Rainbow continued through 1983, mainly by Saunders and Bob Alkire, who developed the system on a large whiteboard. A Polaroid photo of the design was taken after every major change.[12] Considerable effort went into the timing of the process that searched through the rectangles for each displayed pixel; it was possible to overload the system by asking it to consider more memory than it could fetch in the available time, but this was considered acceptable because it could be avoided in software.[13]
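The overload the designers accepted can be illustrated by a software-side check that totals how many bytes the visible objects would require on each scan line and flags lines that exceed a fetch budget. This is a hypothetical sketch: the `Span` record, the `budget` figure and the byte arithmetic are assumptions, and the calculation ignores RLE and transparency, so it gives a worst-case estimate rather than Silver's real timing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Span:
    """Hypothetical summary of one rectangle for timing purposes."""
    top: int     # first screen line covered
    height: int  # number of lines covered
    width: int   # pixels fetched per line
    depth: int   # bits per pixel (1, 2, 4 or 8)


def bytes_on_line(spans: List[Span], line: int) -> int:
    """Worst-case bytes needed to fetch every object that covers this line."""
    return sum(
        (s.width * s.depth + 7) // 8  # round partial bytes up
        for s in spans
        if s.top <= line < s.top + s.height
    )


def overloaded_lines(spans: List[Span], num_lines: int, budget: int) -> List[int]:
    """Lines whose worst-case fetch exceeds the assumed per-line byte budget."""
    return [ln for ln in range(num_lines) if bytes_on_line(spans, ln) > budget]
```

Software that kept every line under budget, for example by shrinking or staggering objects, would never ask the hardware for more data than it could fetch, which matches the text's point that overload was a software concern.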

Jack Palevich produced a simulator of the system and George Wang of Atari Semiconductor produced a logic design.[14] The logic was initially implemented as a single-chip design,[15] but the only cost-effective chip packaging at the time was the 40-pin DIP, which required the system to be reimplemented as two separate VLSI chips. This led to the creation of the "Silver" and "Gold" chips,[lower-alpha 5] each of which implemented one portion of the Rainbow concept.[16] Silver was responsible for maintaining the rectangle data and priority system and using that to fetch the appropriate data from memory to produce any given pixel, while Gold took the resulting data from Silver, performed color lookup, and produced the video output using a bank of timers that implemented the NTSC or PAL signal output.[17]

Sierra

Sierra came about through a conversation between Alkire and Doug Crockford. Alkire borrowed Palevich's new Macintosh and used it to draw block diagrams of a machine that slowly emerged as the Sierra effort.[18] Each engineer in SRL had their own favorite new CPU design, and the preferred selection changed constantly as work on Rainbow continued.[19] Numerous options were explored, including the Intel 80186 and 286, National Semiconductor NS16032, Motorola 68000 and Zilog Z8000.[20] Each was compared for its price/performance ratio across a wide variety of machines.[21]

The design was therefore more of an outline than a concrete specification; the only portions firmly selected were Rainbow for graphics and a new synthesizer chip known as "Amy" for sound.[21] Tying all of this together would be a new operating system known as "Eva", although the nature of the OS changed over time as well. At least one design document outlining the entire system exists, referring to the platform as "GUMP", a reference to a character in The Marvelous Land of Oz.[22] The original design documents suggest different Sierra concepts, ranging from a home computer with a price point as low as $300 using a low-power CPU, through business machines and student computers, up to low-end workstations.[21] It was during this period that the wooden mock-up was constructed.[23]

By early 1984 it was clear the project was going to be shut down, and the engineers began looking for other jobs.[24] With Rainbow largely complete by this time, at the point of tape-out, some effort was put into saving the design by licensing it to a third party. Meetings were held with several potential customers, including Tramel Technology, AMD and others.[25] HP Labs hired a group of thirty engineers from SRL, including Alkire and Saunders, and the Rainbow effort ended.[26]

Other designs

Sierra proceeded alongside similar projects within Atari being run by other divisions, including an upscale m68k machine known as Gaza.[27][lower-alpha 6] Arguments broke out in Atari's management over how best to position any 32-bit machine, and which approach better served the company's needs. The home computer market was in the midst of a price war that was destroying it,[28] and it was not clear that a high-end machine would avoid becoming embroiled in a similar one. The business computing market appeared to be immune to the price war, and the IBM PC was finally starting to sell in quantity despite being much less sophisticated than Sierra or Gaza. But Atari had no presence in the business world, and it was not clear how it could sell into this market. Workstations were an emerging niche that the company might be able to sell into, but the market was very new. Management vacillated on which of these markets offered the greater chance of success.[29]

Work on the various Sierra concepts continued through 1983 and into 1984, by which point little progress had been made on the complete design. Several mock-ups of varying complexity had been constructed, but no working machines existed. Likewise, little concrete work on the operating system had taken place, and the idea of using a Unix System V port was being considered. Only the Amy chip had made considerable progress by this point; the first version to be fabricated, the AMY-1, was moving into production for late 1984.[30]

At the same time, teams of former Atari engineers were working at third-party design firms such as Mindset and Amiga. Amiga, led by Jay Miner, who had led the design of the original Atari 8-bit machines, had been making progress with its new platform, codenamed "Lorraine".[31] Lorraine was also based on the 68000 and was similar to Sierra and Gaza in almost every respect, which is not surprising given that the teams originally came from the same company. By early 1984, Lorraine was further along in design and essentially ready for production. Atari had already licensed the Lorraine chipset for a games console, and the Gaza team was told to drop its efforts and begin work on a desktop computer design using Lorraine, codenamed "Mickey" (semi-officially known as the Atari 1850XLD).[29]

Tramiel takeover

In July 1984, Jack Tramiel purchased Atari and the company became Atari Corporation. In a desperate attempt to restore cash flow, whole divisions of the company were laid off over a period of a few weeks.[32] This included the vast majority of the SRL staff. The Amy team convinced the Tramiels that their work could be used in other platforms, and their project continued. The rest of the Sierra team was scattered.

As a result, all progress on the Sierra platform ended. Gaza was completed and demonstrated, and Mickey was completed but left awaiting an Amiga chipset that would never arrive. The "Cray" development frame built for Gaza and reused for Mickey was used by the Tramiel engineers to develop the Atari ST prototype. The company's option to use Lorraine for a games console also ended, and Amiga would later sign a deal with Commodore International to produce a machine very similar to Mickey, the Amiga 1000.[33] The Atari ST, Atari Corp's 68000-based machine, was built with custom chips and off-the-shelf hardware, and was significantly less advanced than Sierra, Gaza or Mickey.

Description

As implemented, the Silver and Gold design was based on an internal buffer that constructed the screen one line at a time. This was an effort to relax the timing requirements between the main memory and the video output. Previous designs had generally used one of two solutions: one was to carefully time the CPU and GPU so they could access memory within the timing constraints of the video system, while other platforms used a mechanism to pause the CPU whenever the GPU needed memory.[34] By the time of Rainbow's design, the cost of implementing a buffer had become a non-issue, allowing the system to access memory with some flexibility in timing.[35]

The system could be used to construct any display from 512 to 768 pixels wide and 384 to 638 lines high. The mode it was designed to support was 640 x 480 at a maximum 8-bit color depth, with colors selected from a palette of 4,096. The background color, used wherever no object supplied data for a pixel, was set in an internal register. The system natively output RGB, which could be converted to NTSC or PAL using commonly available chips.[35]
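The figures above imply some simple memory arithmetic, sketched below. The worst case assumes one full-screen object at the target mode with no RLE; Rainbow objects could be smaller or compressed, so real memory use could be lower. The helper name `bitmap_bytes` is hypothetical.

```python
def bitmap_bytes(width: int, height: int, depth: int) -> int:
    """Bytes for an uncompressed bitmap at the given bits per pixel."""
    return width * height * depth // 8


# Target mode from the text: 640 x 480 at up to 8 bits per pixel.
FULL_SCREEN_8BIT = bitmap_bytes(640, 480, 8)

# The same screen as a 1-bit object.
FULL_SCREEN_1BIT = bitmap_bytes(640, 480, 1)

# A palette of 4,096 colors needs 12 bits to identify each entry.
PALETTE_BITS = (4096 - 1).bit_length()
```

A full 8-bit screen works out to 307,200 bytes and a 1-bit screen to 38,400 bytes, while each palette entry needs 12 bits. How those 12 bits were divided among red, green and blue is not stated in the source.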

As implemented in Silver, the object buffer could contain up to twelve "objects" representing rectangular areas. This appears to have been a limit of this particular chip rather than of the design itself. Each object record contained a pointer to the location in memory of the underlying data. Using line-end interrupts, programs could modify these pointers on the fly as the screen was drawn, allowing the system to display different objects on each line. Similar techniques had been used in earlier Atari machines to increase the number of sprites on a single screen. Because Silver required control of the memory, it operated as the bus master and also handled DRAM refresh duties.[36]
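Reusing the twelve object records from line to line is essentially an interval-scheduling problem: any number of objects can appear on screen as long as no more than twelve overlap a single line. The sketch below greedily assigns objects to slots in order of their first line and records which slot a line-end interrupt would reload before each object's first line. The tuple format, the function name and the idea of precomputing a reload plan are hypothetical; only the twelve-slot limit comes from the text.

```python
from typing import Dict, List, Optional, Tuple

MAX_SLOTS = 12  # Silver's object buffer held up to twelve objects


def plan_slot_reloads(
    objects: List[Tuple[str, int, int]],
) -> Dict[int, List[Tuple[int, str]]]:
    """Map each starting line to the (slot, object) pointers to load for it.

    `objects` holds hypothetical (name, first_line, last_line) entries, inclusive.
    Raises ValueError if more than MAX_SLOTS objects share a line.
    """
    slot_busy_until: List[Optional[int]] = [None] * MAX_SLOTS
    plan: Dict[int, List[Tuple[int, str]]] = {}
    for name, first, last in sorted(objects, key=lambda o: o[1]):
        for slot in range(MAX_SLOTS):
            busy = slot_busy_until[slot]
            if busy is None or busy < first:  # slot is free by this line
                slot_busy_until[slot] = last
                # The interrupt at the end of line first-1 would write this pointer.
                plan.setdefault(first, []).append((slot, name))
                break
        else:
            raise ValueError(f"more than {MAX_SLOTS} objects overlap line {first}")
    return plan
```

Assigning by first line and taking any free slot never uses more slots than the largest number of objects sharing one line, so twenty-four one-line objects in two bands fit in twelve slots, while thirteen objects on the same line do not.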

Notes

- ↑ Some documents suggest "Rainbow" referred to AMY as well, others suggest otherwise.

- ↑ Sometimes referred to as CRG, for Corporate Research Group.

- ↑ One SRL employee stated the goal was to plan for the CES after the next one.

- ↑ Saunders does not say who this guru was, but later suggests it might have been Jim Dunion.[8]

- ↑ Although some sources suggest that Rainbow and Silver/Gold were two different GPU systems, documentation from the era clearly shows the latter to be part of Rainbow.

- ↑ There are numerous claims that Gaza was a dual-m68k machine, but this is unlikely due to the way these chips accessed memory. Comments by the engineers suggest the multiple CPUs are referring to coprocessors in the traditional Atari usage of the term.

References

Citations

- ↑ 1.0 1.1 Montfort, Nick; Bogost, Ian (2009). Racing the Beam. MIT Press. http://mitpress.mit.edu/books/racing-beam.

- ↑ Crawford, Chris (1982). De Re Atari. Atari Program Exchange. http://www.atariarchives.org/dere/.

- ↑ "S-100 and the 8086". 2011-10-13. http://www.retrotechnology.com/herbs_stuff/s_8086.html.

- ↑ Ken Polsson. "Chronology of Microprocessors". Processortimeline.info. http://processortimeline.info/.

- ↑ "National Semiconductor's Series 32000 Family". http://www.cpu-ns32k.net/CPUs.html.

- ↑ Rainbow 2016, 10:00.

- ↑ Rainbow 2016, 10:30.

- ↑ Rainbow 2016, 25:30.

- ↑ Rainbow 2016, 12:00.

- ↑ Rainbow 2016, 31:15.

- ↑ Rainbow 2016, 32:15.

- ↑ Rainbow 2016, 14:00.

- ↑ Rainbow 2016, 15:30.

- ↑ Rainbow 2016, 16:10.

- ↑ Rainbow 2016, 20:10.

- ↑ Rainbow 2016, 21:00.

- ↑ Rainbow 2016, 19:00.

- ↑ Rainbow 2016, 32:45.

- ↑ Rainbow 2016, 34:00.

- ↑ Morrison 1983, pp. 6-7.

- ↑ 21.0 21.1 21.2 Morrison 1983.

- ↑ Goldberg & Vendel 2012, p. 732.

- ↑ Rainbow 2016, 35:30.

- ↑ Rainbow 2016, 39:15.

- ↑ Rainbow 2016, 40:30.

- ↑ Rainbow 2016, 41:00.

- ↑ Goldberg & Vendel 2012, p. 733.

- ↑ Knight, Daniel (10 January 2016). "The 1983 Home Computer Price War". https://lowendmac.com/2016/the-1983-home-computer-price-war/.

- ↑ 29.0 29.1 Goldberg & Vendel 2012, p. 737.

- ↑ Template:Cite tech report

- ↑ Goldberg & Vendel 2012, p. 708.

- ↑ Goldberg & Vendel 2012, pp. 748-749.

- ↑ Goldberg & Vendel 2012, p. 745.

- ↑ Wang 1983, 6.3.1.

- ↑ 35.0 35.1 Wang 1983, 2.

- ↑ Wang 1983, 6.1.

Bibliography

- Goldberg, Marty; Vendel, Curt (2012). Atari Inc. Business Is Fun. Syzygy Press. ISBN 9780985597405. https://archive.org/details/atariincbusiness0000gold.

- Bob Alkire and Steve Saunders (10 June 2016). Bob Alkire and Steve Saunders, Rainbow GPU (Audio). Antic Podcast.

- Template:Cite tech report
