Event camera

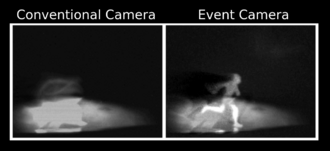

An event camera, also known as a neuromorphic camera,[1] silicon retina[2] or dynamic vision sensor,[3] is an imaging sensor that responds to local changes in brightness. Event cameras do not capture images using a shutter as conventional (frame) cameras do. Instead, each pixel inside an event camera operates independently and asynchronously, reporting changes in brightness as they occur, and staying silent otherwise.

Functional description

Event camera pixels independently respond to changes in brightness as they occur.[4] Each pixel stores a reference brightness level and continuously compares it with the current brightness level. If the difference in brightness exceeds a threshold, that pixel resets its reference level and generates an event: a discrete packet that contains the pixel address and timestamp. Events may also contain the polarity (increase or decrease) of a brightness change, or an instantaneous measurement of the illumination level.[5] Thus, event cameras output an asynchronous stream of events triggered by changes in scene illumination.
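The per-pixel comparison described above can be sketched in Python. This is a simplified model, not any manufacturer's circuit: it assumes that brightness samples for one pixel arrive as a timestamped sequence, that the pixel compares *log* brightness against its stored reference (a common choice in DVS-style sensors, assumed here), and that `threshold` is a hypothetical contrast threshold. The names `Event` and `pixel_events` are illustrative only.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    x: int          # pixel column (address)
    y: int          # pixel row (address)
    t: float        # timestamp, in the same units as the input samples
    polarity: int   # +1 for a brightness increase, -1 for a decrease


def pixel_events(x: int, y: int,
                 samples: Sequence[Tuple[float, float]],
                 threshold: float = 0.2) -> List[Event]:
    """Emit events for one pixel from (timestamp, brightness) samples.

    Assumptions: brightness values are positive, the pixel compares log
    brightness with its stored reference, and `threshold` is hypothetical.
    """
    events: List[Event] = []
    if not samples:
        return events
    reference = math.log(samples[0][1])  # initial reference level
    for t, brightness in samples[1:]:
        diff = math.log(brightness) - reference
        if abs(diff) > threshold:
            # Threshold exceeded: reset the reference and emit an event.
            reference = math.log(brightness)
            events.append(Event(x, y, t, 1 if diff > 0 else -1))
        # Otherwise the pixel stays silent.
    return events
```

For example, `pixel_events(3, 5, [(0.0, 100.0), (1.0, 101.0), (2.0, 150.0), (3.0, 150.0), (4.0, 90.0)])` ignores the 1% change at t = 1, emits a positive event at t = 2 and a negative event at t = 4, and stays silent while the brightness is constant.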

Event cameras have microsecond temporal resolution, a dynamic range of around 120 dB, and suffer less from under- and overexposure and from motion blur than frame cameras.[4][6] This allows them to track object and camera movement (optical flow) more accurately. They yield greyscale information. Early sensors had low spatial resolution: the DVS128, reported in 2008, had 128×128 pixels.[4] A 640×480 sensor was reported in 2017.[20] Because individual pixels fire independently, event cameras appear well suited to integration with asynchronous computing architectures such as neuromorphic computing. Pixel independence also allows these cameras to cope with scenes containing both brightly and dimly lit regions without having to average across them.[7]

| Sensor | Dynamic range (dB) | Equivalent frame rate* (fps) | Spatial resolution (MP) | Power consumption (mW) |
|---|---|---|---|---|
| Human eye | 30–40 | 200–300 | - | 10[8] |
| High-end DSLR camera (Nikon D850) | 44.6[9] | 120 | 2–8 | - |
| Ultrahigh-speed camera (Phantom v2640)[10] | 64 | 12,500 | 0.3–4 | - |
| Event camera[11] | 120 | 1,000,000 | 0.1–0.2 | 30 |

*For the human eye and the event camera, which do not output frames, this column gives the equivalent temporal resolution.
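Dynamic range in decibels expresses the ratio between the brightest and dimmest light levels a sensor can handle. Assuming the usual image-sensor convention DR = 20·log10(max/min) (an assumption here; the sources behind the table may define it slightly differently), the dynamic-range figures in the table can be converted to plain ratios with a short sketch:

```python
import math


def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB to a max/min intensity ratio,
    assuming the 20*log10 convention used for image sensors."""
    return 10 ** (db / 20)


def ratio_to_db(ratio: float) -> float:
    """Inverse conversion: max/min intensity ratio to dB (20*log10)."""
    return 20 * math.log10(ratio)


# Dynamic-range values taken from the comparison table above.
for name, db in [("Human eye (upper bound)", 40),
                 ("Nikon D850", 44.6),
                 ("Phantom v2640", 64),
                 ("Event camera", 120)]:
    print(f"{name}: {db} dB -> about {db_to_ratio(db):,.0f}:1")
```

Under this convention, 120 dB corresponds to a ratio of 10^6, or about a million to one, compared with roughly 170:1 for the 44.6 dB DSLR figure.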

Types

Temporal contrast sensors, such as the DVS[4] (Dynamic Vision Sensor) and the sDVS[12] (sensitive DVS), produce events that indicate polarity (an increase or decrease in brightness), while temporal image sensors[5] report the instantaneous intensity with each event. The DAVIS[13] (Dynamic and Active-pixel Vision Sensor) contains a global-shutter active pixel sensor (APS) in addition to the dynamic vision sensor (DVS), and both share the same photosensor array. It can therefore produce image frames alongside events. Many event cameras also carry an inertial measurement unit (IMU).

| Name | Event output | Image frames | Color | IMU | Manufacturer | Commercially available | Resolution |
|---|---|---|---|---|---|---|---|
| DVS128[4] | Polarity | No | No | No | Inivation | No | 128×128 |
| sDVS128[12] | Polarity | No | No | No | CSIC | No | 128×128 |
| DAVIS240[13] | Polarity | Yes | No | Yes | Inivation | No | 240×180 |
| DAVIS346[14] | Polarity | Yes | No | Yes | Inivation | Yes | 346×260 |
| DVXplorer[15] | Polarity | No | No | Yes | Inivation | Yes | 640×480 |
| SEES[16] | Polarity | Yes | No | Yes | Insightness | Yes | |
| Metavision Packaged Generation 3 Sensor[17] | Polarity | No | No | No | Prophesee | Yes | 640×480 |
| SilkyEvCam camera (Prophesee Gen 3)[18] | Polarity | No | No | No | Century Arks / Prophesee | Yes | 640×480 |
| VisionCamEB camera (Prophesee Gen 3)[19] | Polarity | No | No | No | Imago Technologies / Prophesee | Yes | 640×480 |
| Samsung DVS[20] | Polarity | No | No | Yes | Samsung | Yes | 640×480 |
| Onboard[5] | Polarity | No | No | Yes | Prophesee | No | 640×480 |
| Celex[21] | Intensity | Yes | No | Yes | CelePixel | Yes | 64×64 |
| IMX636[22] sensor | Polarity | Yes | No | No | Sony / Prophesee | Yes | 1280×720 |
| EVK3[23] camera (IMX636 ES / Prophesee Gen 3.1) | Polarity | No | No | No | Prophesee | Yes | 1280×720 / 640×480 |
| EVK4[24] camera (IMX636 ES) | Polarity | No | No | No | Prophesee | Yes | 1280×720 |
| IMX637[25] sensor | Polarity | Yes | No | No | Sony / Prophesee | No | 640×512 |

Retinomorphic sensors

Retinomorphic sensors are another class of event sensor. While the term retinomorphic has been used to describe event sensors generally,[26][27] in 2020 it was adopted as the name for a specific sensor design based on a resistor and a photosensitive capacitor in series.[28] These capacitors are distinct from photocapacitors, which are used to store solar energy;[29] instead, they are designed to change capacitance under illumination. They charge or discharge slightly when their capacitance changes, but otherwise remain in equilibrium. When a photosensitive capacitor is placed in series with a resistor and an input voltage is applied across the circuit, the result is a sensor that outputs a voltage when the light intensity changes, but not otherwise.

Unlike other event sensors, which typically combine a photodiode with additional circuit elements, these sensors produce the signal inherently. A retinomorphic sensor can therefore be considered a single device that produces the same result as the small per-pixel circuit in other event cameras. To date, retinomorphic sensors have only been studied in research settings.[30][31][32][33]
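The change-only response of the series resistor and photosensitive capacitor can be illustrated numerically. This is a minimal sketch under simplifying assumptions that are not taken from the cited work: the resistance R and input voltage V_in are constant, the capacitance switches instantly between two hypothetical values when the light changes, the circuit is integrated with a forward-Euler step, and the output is taken as the voltage across the resistor. The function name `simulate_rc` is illustrative.

```python
from typing import Callable, List


def simulate_rc(capacitance: Callable[[float], float],
                v_in: float = 1.0, r: float = 1.0,
                dt: float = 1e-3, t_end: float = 10.0) -> List[float]:
    """Return the resistor voltage over time for a series R-C(t) circuit.

    capacitance(t) is the (light-dependent) capacitance at time t; its
    values here are hypothetical. The capacitor starts in equilibrium.
    """
    q = capacitance(0.0) * v_in        # equilibrium charge Q = C * V_in
    out: List[float] = []
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        c = capacitance(t)
        v_out = v_in - q / c           # voltage across the resistor (I * R)
        out.append(v_out)
        q += (v_out / r) * dt          # forward-Euler update of the charge
    return out


# Hypothetical light step at t = 5: illumination raises C from 1.0 to 2.0.
trace = simulate_rc(lambda t: 2.0 if t >= 5.0 else 1.0)
```

In this model the output is exactly zero while the illumination is constant, jumps to V_in(1 − C0/C1) = 0.5 when the capacitance doubles, and then decays with time constant R·C1; a drop in capacitance would instead give a negative pulse.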

Algorithms

Image reconstruction

Image reconstruction from events has the potential to create images and video with high dynamic range, high temporal resolution and reduced motion blur. Reconstruction can be achieved by temporal smoothing, e.g. with a high-pass or complementary filter.[34] Alternative methods include optimization[35] and gradient estimation[36] followed by Poisson integration.
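A per-pixel high-pass filter gives a feel for the temporal-smoothing approach. The sketch below is a simplified illustration in the spirit of [34], not that paper's exact formulation: each event adds a hypothetical contrast step `c` (times its polarity) to the pixel's log-intensity estimate, and between events the estimate decays exponentially towards zero at a hypothetical cutoff rate `alpha`, so slowly varying content fades while recent changes remain. The class name `HighPassReconstructor` is illustrative.

```python
import math
from typing import Dict, Iterable, Tuple

# An event is (x, y, t, polarity), with polarity +1 or -1.
EventTuple = Tuple[int, int, float, int]


class HighPassReconstructor:
    """Per-pixel high-pass filter on an event stream (illustrative sketch)."""

    def __init__(self, c: float = 0.2, alpha: float = 1.0) -> None:
        self.c = c            # assumed log-intensity step per event
        self.alpha = alpha    # assumed cutoff rate, in 1/time units
        # Per-pixel state: (estimate, time of last update).
        self.state: Dict[Tuple[int, int], Tuple[float, float]] = {}

    def _decay(self, value: float, last_t: float, t: float) -> float:
        # Exponential decay between events: the high-pass behaviour.
        return value * math.exp(-self.alpha * (t - last_t))

    def update(self, events: Iterable[EventTuple]) -> None:
        for x, y, t, p in events:  # events must be time-ordered
            value, last_t = self.state.get((x, y), (0.0, t))
            value = self._decay(value, last_t, t) + self.c * p
            self.state[(x, y)] = (value, t)

    def image(self, t: float) -> Dict[Tuple[int, int], float]:
        """Log-intensity estimate of every active pixel at time t."""
        return {xy: self._decay(v, last_t, t)
                for xy, (v, last_t) in self.state.items()}
```

A larger `alpha` forgets older events faster, trading low-frequency content for robustness against accumulated noise; pixels that never fired are simply absent from the returned dictionary.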

Spatial convolutions

The concept of spatial event-driven convolution was proposed in 1999,[37] before the DVS existed, and was later generalized during the EU project CAVIAR[38] (during which the DVS was invented): for each incoming event, an arbitrary convolution kernel is projected around the event's coordinate onto an array of integrate-and-fire pixels.[39] Extending this scheme to multiple kernels[40] enables event-driven deep convolutional neural networks.[41]
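The event-by-event projection scheme can be illustrated with a small sketch. The array size, kernel, firing threshold and reset-to-zero behaviour below are assumptions chosen for illustration, not the parameters of the CAVIAR hardware: each input event adds the kernel (projected as-is, not flipped), centred on the event's coordinate, to an array of integrate-and-fire accumulators, and any accumulator that reaches the threshold emits an output event and resets. The function name `event_convolution` is illustrative.

```python
from typing import List, Sequence, Tuple


def event_convolution(events: Sequence[Tuple[int, int, float]],
                      kernel: Sequence[Sequence[float]],
                      width: int, height: int,
                      threshold: float = 1.0) -> List[Tuple[int, int, float]]:
    """Event-driven convolution with integrate-and-fire output pixels.

    events: time-ordered (x, y, t) input events.
    kernel: odd-sized 2-D list of weights, centred on each input event.
    Returns output events (x, y, t) emitted when a pixel reaches threshold.
    """
    acc = [[0.0] * width for _ in range(height)]  # integrator states
    kh, kw = len(kernel), len(kernel[0])
    cy, cx = kh // 2, kw // 2
    out: List[Tuple[int, int, float]] = []
    for x, y, t in events:
        for j in range(kh):
            for i in range(kw):
                px, py = x + i - cx, y + j - cy
                if 0 <= px < width and 0 <= py < height:
                    acc[py][px] += kernel[j][i]   # integrate
                    if acc[py][px] >= threshold:  # fire and reset
                        out.append((px, py, t))
                        acc[py][px] = 0.0
    return out
```

With a cross-shaped kernel such as `[[0, 0.5, 0], [0.5, 1, 0.5], [0, 0.5, 0]]`, a single input event makes only the centre pixel fire, while a second event at the same location also pushes the four neighbours over threshold. No computation happens between events, which is what makes the scheme event-driven.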

Motion detection and tracking

Segmentation and detection of moving objects viewed by an event camera can seem trivial, since the sensor already responds only to changes, on-chip. However, these tasks are difficult, because events carry little information[42] and do not contain useful visual features such as texture and color.[43] They become even harder when the camera itself moves,[42] because events are then triggered across the whole image plane, by both moving objects and the static scene (whose apparent motion is induced by the camera's ego-motion). Recent approaches to this problem include motion-compensation models[44][45] and traditional clustering algorithms.[46][47][43][48]
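The idea behind motion compensation can be shown with a toy example. The sketch below is a simplified contrast-maximization search, a swapped-in illustration rather than the specific methods of [44] or [45]: it assumes a single object moving with constant horizontal velocity, warps each event (x, y, t) back to t = 0 for each candidate velocity, and scores the candidate by the sharpness of the resulting event image, measured as the sum of squared per-pixel event counts. The correct velocity aligns the events and gives the sharpest image. The function names are illustrative.

```python
from collections import Counter
from typing import Iterable, Sequence, Tuple


def sharpness(events: Sequence[Tuple[float, float, float]], vx: float) -> int:
    """Sum of squared pixel counts after warping events to t = 0.

    Each event (x, y, t) is moved to x - vx * t (constant-velocity model).
    For a fixed number of events this grows as warped events pile up.
    """
    counts = Counter((round(x - vx * t), round(y)) for x, y, t in events)
    return sum(n * n for n in counts.values())


def best_velocity(events: Sequence[Tuple[float, float, float]],
                  candidates: Iterable[float]) -> float:
    """Pick the candidate horizontal velocity giving the sharpest image."""
    return max(candidates, key=lambda v: sharpness(events, v))


# Synthetic vertical edge starting at x = 10, moving at 2 pixels per time unit.
edge = [(10 + 2 * t, y, float(t)) for t in range(10) for y in range(5)]
```

Here `best_velocity(edge, [i * 0.5 for i in range(9)])` selects 2.0, because only that velocity warps all 50 events onto the edge's starting column. In a segmentation setting, events that remain blurred under the dominant motion could then be attributed to independently moving objects.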

Potential applications

Potential applications include object recognition, autonomous vehicles, and robotics.[32] The US military is considering infrared and other event cameras because of their lower power consumption and reduced heat generation.[7]


References

1. Li, Hongmin; Liu, Hanchao; Ji, Xiangyang; Li, Guoqi; Shi, Luping (2017). "CIFAR10-DVS: An Event-Stream Dataset for Object Classification". Frontiers in Neuroscience 11: 309. doi:10.3389/fnins.2017.00309. ISSN 1662-453X. PMID 28611582.

2. Sarmadi, Hamid; Muñoz-Salinas, Rafael; Olivares-Mendez, Miguel A.; Medina-Carnicer, Rafael (2021). "Detection of Binary Square Fiducial Markers Using an Event Camera". IEEE Access 9: 27813–27826. doi:10.1109/ACCESS.2021.3058423. ISSN 2169-3536. https://ieeexplore.ieee.org/document/9351958.

3. Liu, Min; Delbruck, Tobi (May 2017). "Block-matching optical flow for dynamic vision sensors: Algorithm and FPGA implementation". 2017 IEEE International Symposium on Circuits and Systems (ISCAS). pp. 1–4. doi:10.1109/ISCAS.2017.8050295. ISBN 978-1-4673-6853-7. https://ieeexplore.ieee.org/document/8050295. Retrieved 27 June 2021.

4. Lichtsteiner, P.; Posch, C.; Delbruck, T. (February 2008). "A 128×128 120 dB 15μs Latency Asynchronous Temporal Contrast Vision Sensor". IEEE Journal of Solid-State Circuits 43 (2): 566–576. doi:10.1109/JSSC.2007.914337. ISSN 0018-9200. Bibcode: 2008IJSSC..43..566L. https://www.zora.uzh.ch/id/eprint/17629/1/Lichtsteiner_Latency_V.pdf.

5. Posch, C.; Matolin, D.; Wohlgenannt, R. (January 2011). "A QVGA 143 dB Dynamic Range Frame-Free PWM Image Sensor With Lossless Pixel-Level Video Compression and Time-Domain CDS". IEEE Journal of Solid-State Circuits 46 (1): 259–275. doi:10.1109/JSSC.2010.2085952. ISSN 0018-9200. Bibcode: 2011IJSSC..46..259P.

6. Longinotti, Luca. "Product Specifications". https://inivation.com/support/product-specifications/.

7. "A new type of camera". The Economist. 2022-01-29. ISSN 0013-0613. https://www.economist.com/science-and-technology/a-new-type-of-camera/21807384.

8. Skorka, Orit (2011-07-01). "Toward a digital camera to rival the human eye". Journal of Electronic Imaging 20 (3): 033009. doi:10.1117/1.3611015. ISSN 1017-9909. Bibcode: 2011JEI....20c3009S.

9. DxO. "Nikon D850 : Tests and Reviews | DxOMark". https://www.dxomark.com/Cameras/Nikon/D850.

10. "Phantom v2640". https://www.phantomhighspeed.com/products/cameras/ultrahighspeed/v2640.

11. Longinotti, Luca. "Product Specifications". https://inivation.com/support/product-specifications/.

12. Serrano-Gotarredona, T.; Linares-Barranco, B. (March 2013). "A 128x128 1.5% Contrast Sensitivity 0.9% FPN 3μs Latency 4mW Asynchronous Frame-Free Dynamic Vision Sensor Using Transimpedance Amplifiers". IEEE Journal of Solid-State Circuits 48 (3): 827–838. doi:10.1109/JSSC.2012.2230553. ISSN 0018-9200. Bibcode: 2013IJSSC..48..827S. http://www.imse-cnm.csic.es/~bernabe/jssc13_AuthorAcceptedVersion.pdf.

13. Brandli, C.; Berner, R.; Yang, M.; Liu, S.; Delbruck, T. (October 2014). "A 240 × 180 130 dB 3 µs Latency Global Shutter Spatiotemporal Vision Sensor". IEEE Journal of Solid-State Circuits 49 (10): 2333–2341. doi:10.1109/JSSC.2014.2342715. ISSN 0018-9200. Bibcode: 2014IJSSC..49.2333B.

14. Taverni, Gemma; Paul Moeys, Diederik; Li, Chenghan; Cavaco, Celso; Motsnyi, Vasyl; San Segundo Bello, David; Delbruck, Tobi (May 2018). "Front and Back Illuminated Dynamic and Active Pixel Vision Sensors Comparison". IEEE Transactions on Circuits and Systems II: Express Briefs 65 (5): 677–681. doi:10.1109/TCSII.2018.2824899. ISSN 1549-7747. https://www.zora.uzh.ch/id/eprint/168572/1/Taverni_BSIvsFSI_Davis_comparision_IEEE_TCAS1_express_briefs_2018.pdf.

15. "Inivation Specifications - Current models". https://inivation.com/wp-content/uploads/2023/02/2022-09-iniVation-devices-Specifications.pdf.

16. "Insightness – Sight for your device". http://www.insightness.com/.

17. "Metavision® Packaged Sensor". https://www.prophesee.ai/event-based-sensor-packaged/.

18. "Event Based Vision Camera - Century Arks". https://www.centuryarks.com/en/products/silkyevcam.

19. "IMAGO I PROPHESEE first industrial grade event-based vision system". 2019-02-14. https://www.prophesee.ai/2019/02/14/imago-prophesee/.

20. Son, Bongki; Suh, Yunjae; Kim, Sungho; Jung, Heejae; Kim, Jun-Seok; Shin, Changwoo; Park, Keunju; Lee, Kyoobin et al. (February 2017). "4.1 A 640×480 dynamic vision sensor with a 9µm pixel and 300Meps address-event representation". 2017 IEEE International Solid-State Circuits Conference (ISSCC). San Francisco, CA, USA: IEEE. pp. 66–67. doi:10.1109/ISSCC.2017.7870263. ISBN 9781509037582.

21. Chen, Shoushun; Tang, Wei; Zhang, Xiangyu; Culurciello, Eugenio (December 2012). "A 64 × 64 Pixels UWB Wireless Temporal-Difference Digital Image Sensor". IEEE Transactions on Very Large Scale Integration (VLSI) Systems 20 (12): 2232–2240. doi:10.1109/TVLSI.2011.2172470. ISSN 1063-8210.

22. "Sony to Release Two Types of Stacked Event-Based Vision Sensors with the Industry's Smallest 4.86μm Pixel Size for Detecting Subject Changes Only Delivering High-Speed, High-Precision Data Acquisition to Improve Industrial Equipment Productivity | News Releases | Sony Semiconductor Solutions Group". https://www.sony-semicon.co.jp/e/news/2021/2021090901.html.

23. "Event-Based Evaluation Kit 3 - VGA / HD". https://www.prophesee.ai/event-based-evk-3/.

24. Staff, Embedded (2022-04-13). "Eval kit for Sony sensor featuring Prophesee event-based vision tech". https://www.embedded.com/eval-kit-for-sony-sensor-featuring-prophesee-event-based-vision-tech/.

25. "Sony® Launches the new IMX636 and IMX637 Event-Based Vision Sensors". https://www.framos.com/en/news/sony-launches-the-new-imx636-and-imx637-event-based-vision-sensors.

26. Boahen, K. (1996). "Retinomorphic vision systems". Proceedings of Fifth International Conference on Microelectronics for Neural Networks. pp. 2–14. doi:10.1109/MNNFS.1996.493766. ISBN 0-8186-7373-7. https://ieeexplore.ieee.org/document/493766.

27. Posch, Christoph; Serrano-Gotarredona, Teresa; Linares-Barranco, Bernabe; Delbruck, Tobi (2014). "Retinomorphic Event-Based Vision Sensors: Bioinspired Cameras With Spiking Output". Proceedings of the IEEE 102 (10): 1470–1484. doi:10.1109/JPROC.2014.2346153. ISSN 1558-2256. https://ieeexplore.ieee.org/document/6887319.

28. Trujillo Herrera, Cinthya; Labram, John G. (2020-12-07). "A perovskite retinomorphic sensor". Applied Physics Letters 117 (23): 233501. doi:10.1063/5.0030097. ISSN 0003-6951. Bibcode: 2020ApPhL.117w3501T.

29. Miyasaka, Tsutomu; Murakami, Takurou N. (2004-10-25). "The photocapacitor: An efficient self-charging capacitor for direct storage of solar energy". Applied Physics Letters 85 (17): 3932–3934. doi:10.1063/1.1810630. ISSN 0003-6951. Bibcode: 2004ApPhL..85.3932M. https://aip.scitation.org/doi/10.1063/1.1810630.

30. "Perovskite sensor sees more like the human eye". 2021-01-18. https://physicsworld.com/perovskite-sensor-sees-more-like-the-human-eye/.

31. "Simple Eyelike Sensors Could Make AI Systems More Efficient". 8 December 2020. https://insidescience.org/news/simple-eyelike-sensors-could-make-ai-systems-more-efficient.

32. Hambling, David. "AI vision could be improved with sensors that mimic human eyes". https://www.newscientist.com/article/2259491-ai-vision-could-be-improved-with-sensors-that-mimic-human-eyes/.

33. "An eye for an AI: Optic device mimics human retina". https://www.sciencefocus.com/news/an-eye-for-an-ai-optic-device-mimics-human-retina/.

34. Scheerlinck, Cedric; Barnes, Nick; Mahony, Robert (2019). "Continuous-Time Intensity Estimation Using Event Cameras". Computer Vision – ACCV 2018. Lecture Notes in Computer Science. 11365. Springer International Publishing. pp. 308–324. doi:10.1007/978-3-030-20873-8_20. ISBN 9783030208738.

35. Pan, Liyuan; Scheerlinck, Cedric; Yu, Xin; Hartley, Richard; Liu, Miaomiao; Dai, Yuchao (June 2019). "Bringing a Blurry Frame Alive at High Frame-Rate With an Event Camera". 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, CA, USA: IEEE. pp. 6813–6822. doi:10.1109/CVPR.2019.00698. ISBN 978-1-7281-3293-8. https://ieeexplore.ieee.org/document/8953329.

36. Scheerlinck, Cedric; Barnes, Nick; Mahony, Robert (April 2019). "Asynchronous Spatial Image Convolutions for Event Cameras". IEEE Robotics and Automation Letters 4 (2): 816–822. doi:10.1109/LRA.2019.2893427. ISSN 2377-3766.

37. Serrano-Gotarredona, T.; Andreou, A.; Linares-Barranco, B. (Sep 1999). "AER Image Filtering Architecture for Vision Processing Systems". IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications 46 (9): 1064–1071. doi:10.1109/81.788808. ISSN 1057-7122.

38. Serrano-Gotarredona, R.; et al. (Sep 2009). "CAVIAR: A 45k-Neuron, 5M-Synapse, 12G-connects/sec AER Hardware Sensory-Processing-Learning-Actuating System for High Speed Visual Object Recognition and Tracking". IEEE Transactions on Neural Networks 20 (9): 1417–1438. doi:10.1109/TNN.2009.2023653. ISSN 1045-9227. PMID 19635693.

39. Serrano-Gotarredona, R.; Serrano-Gotarredona, T.; Acosta-Jimenez, A.; Linares-Barranco, B. (Dec 2006). "A Neuromorphic Cortical-Layer Microchip for Spike-Based Event Processing Vision Systems". IEEE Transactions on Circuits and Systems I: Regular Papers 53 (12): 2548–2566. doi:10.1109/TCSI.2006.883843. ISSN 1549-8328.

40. Camuñas-Mesa, L.; et al. (Feb 2012). "An Event-Driven Multi-Kernel Convolution Processor Module for Event-Driven Vision Sensors". IEEE Journal of Solid-State Circuits 47 (2): 504–517. doi:10.1109/JSSC.2011.2167409. ISSN 0018-9200. Bibcode: 2012IJSSC..47..504C.

41. Pérez-Carrasco, J.A.; Zhao, B.; Serrano, C.; Acha, B.; Serrano-Gotarredona, T.; Chen, S.; Linares-Barranco, B. (November 2013). "Mapping from Frame-Driven to Frame-Free Event-Driven Vision Systems by Low-Rate Rate-Coding and Coincidence Processing. Application to Feed-Forward ConvNets". IEEE Transactions on Pattern Analysis and Machine Intelligence 35 (11): 2706–2719. doi:10.1109/TPAMI.2013.71. ISSN 0162-8828. PMID 24051730. http://www.imse-cnm.csic.es/~bernabe/tpami2013_AdditionalMaterialVideo.wmv.

42. Gallego, Guillermo; Delbruck, Tobi; Orchard, Garrick Michael; Bartolozzi, Chiara; Taba, Brian; Censi, Andrea; Leutenegger, Stefan; Davison, Andrew et al. (2020). "Event-based Vision: A Survey". IEEE Transactions on Pattern Analysis and Machine Intelligence PP (1): 154–180. doi:10.1109/TPAMI.2020.3008413. ISSN 1939-3539. PMID 32750812. https://ieeexplore.ieee.org/document/9138762.

43. Mondal, Anindya; R, Shashant; Giraldo, Jhony H.; Bouwmans, Thierry; Chowdhury, Ananda S. (2021). "Moving Object Detection for Event-based Vision using Graph Spectral Clustering". 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). pp. 876–884. doi:10.1109/ICCVW54120.2021.00103. ISBN 978-1-6654-0191-3. https://openaccess.thecvf.com/content/ICCV2021W/GSP-CV/html/Mondal_Moving_Object_Detection_for_Event-Based_Vision_Using_Graph_Spectral_Clustering_ICCVW_2021_paper.html.

44. Mitrokhin, Anton; Fermuller, Cornelia; Parameshwara, Chethan; Aloimonos, Yiannis (October 2018). "Event-Based Moving Object Detection and Tracking". 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid: IEEE. pp. 1–9. doi:10.1109/IROS.2018.8593805. ISBN 978-1-5386-8094-0. https://ieeexplore.ieee.org/document/8593805.

45. Stoffregen, Timo; Gallego, Guillermo; Drummond, Tom; Kleeman, Lindsay; Scaramuzza, Davide (2019). "Event-Based Motion Segmentation by Motion Compensation". 2019 IEEE/CVF International Conference on Computer Vision (ICCV). pp. 7244–7253. doi:10.1109/ICCV.2019.00734. ISBN 978-1-7281-4803-8. https://openaccess.thecvf.com/content_ICCV_2019/html/Stoffregen_Event-Based_Motion_Segmentation_by_Motion_Compensation_ICCV_2019_paper.html.

46. Piątkowska, Ewa; Belbachir, Ahmed Nabil; Schraml, Stephan; Gelautz, Margrit (June 2012). "Spatiotemporal multiple persons tracking using Dynamic Vision Sensor". 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops. pp. 35–40. doi:10.1109/CVPRW.2012.6238892. ISBN 978-1-4673-1612-5. https://ieeexplore.ieee.org/document/6238892.

47. Chen, Guang; Cao, Hu; Aafaque, Muhammad; Chen, Jieneng; Ye, Canbo; Röhrbein, Florian; Conradt, Jörg; Chen, Kai et al. (2018-12-02). "Neuromorphic Vision Based Multivehicle Detection and Tracking for Intelligent Transportation System". Journal of Advanced Transportation 2018: e4815383. doi:10.1155/2018/4815383. ISSN 0197-6729.

48. Mondal, Anindya; Das, Mayukhmali (2021-11-08). "Moving Object Detection for Event-based Vision using k-means Clustering". 2021 IEEE 8th Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON). pp. 1–6. doi:10.1109/UPCON52273.2021.9667636. ISBN 978-1-6654-0962-9.
