Quasi-opportunistic supercomputing

Quasi-opportunistic supercomputing is a computational paradigm for supercomputing on a large number of geographically dispersed computers.[3] It aims to provide a higher quality of service than opportunistic resource sharing.[4]

The quasi-opportunistic approach coordinates computers that are often under different ownership to achieve reliable, fault-tolerant high performance. It offers more control than opportunistic computer grids, in which computational resources are used whenever they happen to become available.[3]

The "opportunistic match-making" approach to task scheduling on computer grids is simple: it merely matches tasks to whatever resources are available at a given time. However, demanding supercomputer applications such as weather simulations or computational fluid dynamics have remained out of reach of this approach, partly because of the difficulty of reliably sub-assigning a large number of tasks and of guaranteeing that resources are available at a given time.[5][6]

The quasi-opportunistic approach enables the execution of demanding applications within computer grids by establishing grid-wise resource allocation agreements and by using fault-tolerant message passing to abstractly shield against failures of the underlying resources. It thus retains some opportunism while allowing a higher level of control.[3]

Opportunistic supercomputing on grids

The general principle of grid computing is to use distributed computing resources from diverse administrative domains to solve a single task, by using resources as they become available. Traditionally, most grid systems have approached the task scheduling challenge by using an "opportunistic match-making" approach in which tasks are matched to whatever resources may be available at a given time.[5]
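The match-making idea described above can be illustrated with a minimal sketch. It is not taken from any particular grid scheduler; the function name `opportunistic_match` and the task and node labels are hypothetical, and real systems also weigh priorities, data locality and node capabilities.

```python
from collections import deque


def opportunistic_match(tasks, available_nodes):
    """Assign each pending task to any node that is idle right now.

    Returns (assignments, still_pending). No reservation is made, so a
    task that finds no idle node simply waits for a later round.
    """
    pending = deque(tasks)
    idle = deque(available_nodes)
    assignments = {}
    while pending and idle:
        # First pending task takes the first idle node; nothing is promised
        # about what will be available in the next round.
        assignments[pending.popleft()] = idle.popleft()
    return assignments, list(pending)


# Hypothetical example: three tasks, only two nodes idle at this moment.
assignments, waiting = opportunistic_match(
    ["t1", "t2", "t3"], ["node-a", "node-b"]
)
```

In this sketch the third task is left waiting with no guarantee of when a node will appear, which is the property that makes tightly coupled parallel applications hard to run in a purely opportunistic setting.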

BOINC, developed at the University of California, Berkeley, is an example of a volunteer-based, opportunistic grid computing system.[2] Applications based on the BOINC grid have reached multi-petaflop levels by using close to half a million computers connected over the Internet, whenever volunteer resources become available.[7] Another system, Folding@home, which is not based on BOINC and computes protein folding, has reached 8.8 petaflops by using clients that include GPU and PlayStation 3 systems.[8][9][2] However, these results do not count toward the TOP500 ratings because the systems do not run the general-purpose Linpack benchmark.

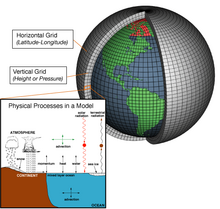

A key strategy for grid computing is the use of middleware that partitions pieces of a program among the different computers on the network.[10] Although general grid computing has had success in parallel task execution, demanding supercomputer applications such as weather simulations or computational fluid dynamics have remained out of reach, partly due to the barriers in reliable sub-assignment of a large number of tasks as well as the reliable availability of resources at a given time.[2][10][9]

The opportunistic Internet PrimeNet Server supports GIMPS, one of the earliest grid computing projects, which has been researching Mersenne prime numbers since 1997. As of May 2011, GIMPS's distributed research achieved about 60 teraflops as a volunteer-based computing project.[11] The use of computing resources on "volunteer grids" such as GIMPS is usually purely opportunistic: geographically dispersed, independently owned computers contribute whenever they become available, with no preset commitment that any resources will be available at any given time. Hence, hypothetically, if many of the volunteers independently decide to switch their computers off on a certain day, grid resources will be significantly reduced.[12][2][9] Furthermore, users will find it exceedingly costly to organize a very large number of opportunistic computing resources in a manner that achieves reasonable high-performance computing.[12][13]

Quasi-control of computational resources

An example of a more structured grid for high-performance computing is DEISA, a supercomputer project organized by the European Community which uses computers in seven European countries.[14] Although different parts of a program executing within DEISA may run on computers located in different countries under different ownership and administration, there is more control and coordination than with a purely opportunistic approach. DEISA has a two-level integration scheme: the "inner level" consists of a number of strongly connected high-performance computer clusters that share similar operating systems and scheduling mechanisms and provide a homogeneous computing environment, while the "outer level" consists of heterogeneous systems that have supercomputing capabilities.[15] Thus DEISA can provide somewhat controlled, yet dispersed, high-performance computing services to users.[15][16]

The quasi-opportunistic paradigm aims to overcome the limitations of purely opportunistic grids by achieving more control over the assignment of tasks to distributed resources and by using pre-negotiated scenarios for the availability of systems within the network. Quasi-opportunistic distributed execution of demanding parallel computing software in grids focuses on the implementation of grid-wise allocation agreements, co-allocation subsystems, communication topology-aware allocation mechanisms, fault-tolerant message passing libraries and data pre-conditioning.[17] In this approach, fault-tolerant message passing is essential to abstractly shield against failures of the underlying resources.[3]
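As a rough illustration of co-allocation under pre-negotiated agreements, the sketch below reserves cores across several sites on an all-or-nothing basis. It is an assumption-laden toy, not the mechanism of any actual quasi-opportunistic middleware: the function `co_allocate`, the site names and the core counts are all hypothetical, and "prefer the largest commitments first" is only a crude stand-in for topology-aware placement.

```python
def co_allocate(request, agreements):
    """Try to reserve `request` cores across sites, all or nothing.

    `agreements` maps a site name to the number of cores that site has
    committed in advance (hypothetical figures). Returns a dict mapping
    site -> cores reserved, or None if the commitments cannot cover the
    whole request.
    """
    if sum(agreements.values()) < request:
        return None  # refuse a partial allocation; the parallel job needs all cores
    plan = {}
    remaining = request
    # Take the largest commitments first so the job spans as few sites as possible.
    for site, cores in sorted(agreements.items(), key=lambda kv: -kv[1]):
        if remaining == 0:
            break
        take = min(cores, remaining)
        plan[site] = take
        remaining -= take
    return plan


# Hypothetical commitments negotiated ahead of time.
committed = {"site-a": 64, "site-b": 32, "site-c": 16}
plan = co_allocate(96, committed)       # {'site-a': 64, 'site-b': 32}
too_big = co_allocate(200, committed)   # None
```

The contrast with opportunistic match-making is that the allocation is decided against capacity the sites have agreed to provide, rather than against whatever happens to be idle at the moment.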

The quasi-opportunistic approach goes beyond volunteer computing on highly distributed systems such as BOINC, or general grid computing on a system such as Globus, by allowing the middleware to provide almost seamless access to many computing clusters, so that existing programs in languages such as Fortran or C can be distributed among multiple computing resources.[3]

A key component of the quasi-opportunistic approach, as in the Qoscos Grid, is an economic-based resource allocation model in which resources are provided based on agreements among specific supercomputer administration sites. Unlike volunteer systems that rely on altruism, specific contractual terms are stipulated for the performance of specific types of tasks. However, "tit-for-tat" paradigms, in which computations are paid back via future computations, are not suitable for supercomputing applications and are avoided.[18]

The other key component of the quasi-opportunistic approach is a reliable message passing system to provide distributed checkpoint restart mechanisms when computer hardware or networks inevitably experience failures.[18] In this way, if some part of a large computation fails, the entire run need not be abandoned, but can restart from the last saved checkpoint.[18]
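The checkpoint-restart idea can be sketched in a few lines. The example below is a single-process toy under stated assumptions, not a distributed checkpoint protocol: the function `run_with_checkpoints`, the JSON state layout and the `fail_at` parameter (used only to simulate a failure) are hypothetical.

```python
import json
import os
import tempfile


def run_with_checkpoints(n_steps, path, fail_at=None):
    """Sum the integers 0..n_steps-1, saving progress after every step.

    If a checkpoint file exists at `path`, the run resumes from it
    instead of starting over. `fail_at` simulates a crash at that step.
    """
    state = {"step": 0, "total": 0}
    if os.path.exists(path):
        with open(path) as f:
            state = json.load(f)  # resume from the last saved checkpoint
    while state["step"] < n_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated node failure")
        state["total"] += state["step"]
        state["step"] += 1
        # Write to a temporary file, then rename, so that a crash during
        # the write cannot leave a half-written checkpoint behind.
        tmp = path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(state, f)
        os.replace(tmp, path)
    return state["total"]


with tempfile.TemporaryDirectory() as d:
    ckpt = os.path.join(d, "job.ckpt")
    try:
        run_with_checkpoints(5, ckpt, fail_at=3)  # fails after steps 0..2
    except RuntimeError:
        pass
    result = run_with_checkpoints(5, ckpt)  # resumes at step 3, returns 10
```

In a real grid the state would be the coordinated state of many communicating processes, which is why the checkpointing is tied to the message passing layer rather than to a single program.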

See also

- Grid computing

- History of supercomputing

- Qoscos Grid

- Supercomputer architecture

- Supercomputer operating systems

References

- ↑ NASA website

- ↑ 2.0 2.1 2.2 2.3 2.4 Parallel and Distributed Computational Intelligence by Francisco Fernández de Vega 2010 ISBN:3-642-10674-9 pages 65-68

- ↑ 3.0 3.1 3.2 3.3 3.4 Quasi-opportunistic supercomputing in grids by Valentin Kravtsov, David Carmeli, Werner Dubitzky, Ariel Orda, Assaf Schuster, Benny Yoshpa, in IEEE International Symposium on High Performance Distributed Computing, 2007, pages 233-244

- ↑ Computational Science - ICCS 2008: 8th International Conference edited by Marian Bubak 2008 ISBN:978-3-540-69383-3 pages 112-113

- ↑ 5.0 5.1 Grid computing: experiment management, tool integration, and scientific workflows by Radu Prodan, Thomas Fahringer 2007 ISBN:3-540-69261-4 pages 1-4

- ↑ Computational Science - ICCS 2009: 9th International Conference edited by Gabrielle Allen, Jarek Nabrzyski 2009 ISBN:3-642-01969-2 pages 387-388

- ↑ BOINC statistics, 2011

- ↑ "Folding@home statistics, 2011". http://fah-web.stanford.edu/cgi-bin/main.py?qtype=osstats.

- ↑ 9.0 9.1 9.2 Euro-par 2010, Parallel Processing Workshop edited by Mario R. Guarracino 2011 ISBN:3-642-21877-6 pages 274-277

- ↑ 10.0 10.1 Languages and Compilers for Parallel Computing by Guang R. Gao 2010 ISBN:3-642-13373-8 pages 10-11

- ↑ "Internet PrimeNet Server Distributed Computing Technology for the Great Internet Mersenne Prime Search". GIMPS. http://www.mersenne.org/primenet.

- ↑ 12.0 12.1 Grid Computing: Towards a Global Interconnected Infrastructure edited by Nikolaos P. Preve 2011 ISBN:0-85729-675-2 page 71

- ↑ Cooper, Curtis and Steven Boone. "The Great Internet Mersenne Prime Search at the University of Central Missouri". The University of Central Missouri. http://www.math-cs.ucmo.edu/~gimps/gimps.

- ↑ High Performance Computing - HiPC 2008 edited by P. Sadayappan 2008 ISBN:3-540-89893-X page 1

- ↑ 15.0 15.1 Euro-Par 2006 workshops: parallel processing: CoreGRID 2006 edited by Wolfgang Lehner 2007 ISBN:3-540-72226-2 pages

- ↑ Grid computing: International Symposium on Grid Computing (ISGC 2007) edited by Stella Shen 2008 ISBN:0-387-78416-0 page 170

- ↑ Kravtsov, Valentin; Carmeli, David; Dubitzky, Werner; Orda, Ariel; Schuster, Assaf; Yoshpa, Benny. "Quasi-opportunistic supercomputing in grids, hot topic paper (2007)". IEEE International Symposium on High Performance Distributed Computing. IEEE. http://citeseer.ist.psu.edu/viewdoc/summary?doi=10.1.1.135.8993.

- ↑ 18.0 18.1 18.2 Algorithms and architectures for parallel processing by Anu G. Bourgeois 2008 ISBN:3-540-69500-1 pages 234-242
