Microsoft SenseCam

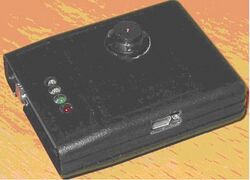

Microsoft's SenseCam is a lifelogging camera with a fisheye lens and trigger sensors such as accelerometers, heat sensors, and audio sensors. It was invented by Lyndsay Williams, and a patent[1] was granted in 2009. Usually worn around the neck, SenseCam is used in the MyLifeBits project, a lifetime storage database. Early developers were James Srinivasan and Trevor Taylor.

Earlier work on neck-worn sensor cameras with fisheye lenses was done by Steve Mann and published in 2001.[2][3]

Microsoft SenseCam, Mann's earlier sensor cameras, and later similar products such as Autographer, Glogger and the Narrative Clip are all examples of wearable computing.[4]

Neck-worn wearable cameras make it easier to collect and index one's daily experiences: they take photographs unobtrusively whenever a change in temperature, movement, or lighting triggers the internal sensors. The SenseCam[5] is also equipped with an accelerometer, which is used to trigger image capture and can also be used to reduce blur in the images. The camera is usually worn around the neck on a lanyard.
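The sensor-triggered capture described above can be sketched as follows. This is a minimal illustration under stated assumptions, not SenseCam's actual firmware: the `Reading` fields, the threshold constants, and the rule of deferring a triggered photo until the accelerometer reports little movement (one plausible way an accelerometer could reduce blur) are all hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds chosen for illustration; real device values are not given here.
LIGHT_DELTA = 50.0   # change in light level that arms a capture
TEMP_DELTA = 2.0     # change in temperature (degrees C) that arms a capture
MOTION_DELTA = 0.5   # change in accelerometer magnitude that arms a capture
STILL_LIMIT = 0.1    # movement below this counts as steady enough to shoot


@dataclass
class Reading:
    """One hypothetical sensor sample."""
    light: float
    temperature: float
    accel: float  # accelerometer magnitude


def capture_points(readings: List[Reading]) -> List[int]:
    """Return the indices of samples at which a photo would be taken."""
    captures: List[int] = []
    pending = False  # a trigger has fired but no photo has been taken yet
    for i in range(1, len(readings)):
        prev, cur = readings[i - 1], readings[i]
        motion = abs(cur.accel - prev.accel)
        # A large enough change in light, temperature, or movement arms a capture.
        if (abs(cur.light - prev.light) > LIGHT_DELTA
                or abs(cur.temperature - prev.temperature) > TEMP_DELTA
                or motion > MOTION_DELTA):
            pending = True
        # Take the photo only once the wearer is relatively still, to limit blur.
        if pending and motion < STILL_LIMIT:
            captures.append(i)
            pending = False
    return captures
```

In this sketch a sudden change in light while the wearer is steady produces an immediate capture, whereas a burst of movement arms a capture that is deferred until the next steady sample.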

The photos capture almost every experience of the wearer's day. They are taken through a wide-angle lens so that each image is likely to contain most of what the wearer can see. The SenseCam stores its photos as JPEG files in flash memory, with room for upwards of 2,000 images per day; more recent models with larger and faster memory cards let a wearer typically store up to 4,000 images per day. These files can then be uploaded to a PC and played back automatically as a daily movie, which can be easily reviewed and indexed using a custom viewer application. The images from a single day can be replayed in a few minutes.[5]

An alternative way of viewing the images is to have a day's worth of data automatically segmented into 'events' and to use an event-based browser, which represents each event (of 50, 100 or more individual SenseCam images) by a keyframe chosen as representative of that event.
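Event segmentation and keyframe selection can be illustrated with a simple threshold-based approach. This is an assumed technique for illustration only, not necessarily the method used by the SenseCam event browser: each image is reduced to a hypothetical numeric feature vector (for example, colour or sensor summaries), a new event starts whenever consecutive images differ by more than a chosen `threshold`, and the keyframe is the image closest to the event's average feature vector.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Event:
    start: int     # index of the first image in the event
    end: int       # index one past the last image in the event
    keyframe: int  # index of the representative image


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def segment_events(features: List[Sequence[float]], threshold: float) -> List[Event]:
    """Split a day's images into events and pick one keyframe per event."""
    if not features:
        return []
    # A boundary falls wherever consecutive images differ by more than the threshold.
    boundaries = [0]
    for i in range(1, len(features)):
        if distance(features[i - 1], features[i]) > threshold:
            boundaries.append(i)
    boundaries.append(len(features))

    events: List[Event] = []
    for start, end in zip(boundaries, boundaries[1:]):
        members = features[start:end]
        dim = len(members[0])
        # Average feature vector of the event.
        centroid = [sum(f[d] for f in members) / len(members) for d in range(dim)]
        # Keyframe: the member image closest to that average.
        offset = min(range(len(members)), key=lambda k: distance(members[k], centroid))
        events.append(Event(start, end, start + offset))
    return events
```

A real system would use much richer image and sensor features and a tuned threshold; the sketch only shows the shape of the segment-then-summarise idea.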

SenseCams have mostly been used in medical applications, particularly to aid those with poor memory as a result of disease or brain trauma. Several studies have been published by Chris Moulin, Aiden R. Doherty and Alan F. Smeaton[6] showing how reviewing one's SenseCam images can lead to what Martin A. Conway, a memory researcher from the University of Leeds, calls "Proustian moments",[7] characterised as floods of recalled details of some event in the past. SenseCams have also been used in lifelogging, and one researcher at Dublin City University, Ireland, has been wearing a SenseCam for most of his waking hours since 2006 and has generated over 13 million SenseCam images of his life.[8]

In October 2009, SenseCam technology was licensed to Vicon and released as a commercial product, the Vicon Revue.[9]

A wiki is dedicated to SenseCam technical issues, software, news, and the research projects and publications that study or use SenseCam.[10]

Projections

Microsoft Research developed the device with several potential user groups in mind, lifebloggers among them. SenseCam was first developed to help people with memory loss, and the camera has been tested as an aid for people with serious cognitive memory impairment. The images SenseCam produces closely resemble the way people remember, particularly episodic memory, which usually takes the form of visual imagery.[11] After reviewing the day's filmstrip, patients with Alzheimer's disease, amnesia, and other memory impairments found it much easier to retrieve lost memories.

Microsoft Research has also tested internal audio level detection and audio recording for the SenseCam, although there are no plans to build these into the research prototypes at present. The research team is also exploring the addition of sensors that would monitor the wearer's heart rate, body temperature, and other physiological changes, along with an electrocardiogram recorder, so that these readings are captured alongside the pictures.

Other possible applications include using the camera's records for ethnographic studies of social phenomena, monitoring food intake, and assessing an environment's accessibility for people with disabilities.[12]

See also

- Gordon Bell

- Cathal Gurrin

- The Circle (Eggers novel)

References

- Patent EP1571634 B1

- Intelligent Image Processing, John Wiley and Sons, 2001, 384 pp.

- Cyborg: Digital Destiny and Human Possibility in the Age of the Wearable Computer, Random House Doubleday, Steve Mann and Hal Niedzviecki, 2001, 304 pp.

- Wearable Computing, Mads Soegaard, Encyclopedia of Interaction-Design and Human Computer Interaction, Chapter 23

- SenseCam

- Aiden R. Doherty, Katalin Pauly-Takacs, Niamh Caprani, Cathal Gurrin, Chris J. A. Moulin, Noel E. O'Connor & Alan F. Smeaton (2012). "Experiences of Aiding Autobiographical Memory Using the SenseCam". Human-Computer Interaction 27 (1–2): 151–174. doi:10.1080/07370024.2012.656050. https://eprints.leedsbeckett.ac.uk/id/eprint/498/1/doherty_et_al_camera_ready.pdf.

- "Home | the Psychologist". http://www.thepsychologist.org.uk/archive/archive_home.cfm?volumeID=24&editionID=197&ArticleID=1805.

- "Summary". http://www.computing.dcu.ie/~cgurrin/.

- "Various applications for Vicon Revue". Archived from the original on 2013-04-12. https://archive.today/20130412033106/http://viconrevue.com/product.html. Retrieved 2013-02-20.

- "Welcome - SenseCam Wiki". http://www.clarity-centre.org/sensecamwiki/index.php/Main_Page.

- Memory SC

- SenseCam Applications

Further reading

- Microsoft Research Projects: SenseCam

- SenseCam: A Retrospective Memory Aid

- SenseCam Applications

- Vicon Revue

- A Personal Perspective on Living Daily Life With A SenseCam

- An FAQ About Daily Living With A SenseCam
