Basic feasible solution

In the theory of linear programming, a basic feasible solution (BFS) is a solution with a minimal set of non-zero variables. Geometrically, each BFS corresponds to a vertex of the polyhedron of feasible solutions. If there exists an optimal solution, then there exists an optimal BFS. Hence, to find an optimal solution, it is sufficient to consider the BFS-s. This fact is used by the simplex algorithm, which essentially travels from one BFS to another until an optimal solution is found.[1]

Definitions

Preliminaries: equational form with linearly-independent rows

For the definitions below, we first present the linear program in the so-called equational form:

- maximize [math]\displaystyle{ \mathbf{c^T} \mathbf{x} }[/math]

- subject to [math]\displaystyle{ A\mathbf{x} = \mathbf{b} }[/math] and [math]\displaystyle{ \mathbf{x} \ge 0 }[/math]

where:

- [math]\displaystyle{ \mathbf{c^T} }[/math] and [math]\displaystyle{ \mathbf{x} }[/math] are vectors of size n (the number of variables);

- [math]\displaystyle{ \mathbf{b} }[/math] is a vector of size m (the number of constraints);

- [math]\displaystyle{ A }[/math] is an m-by-n matrix;

- [math]\displaystyle{ \mathbf{x} \ge 0 }[/math] means that all variables are non-negative.

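Any linear program can be converted into an equational form by adding slack variables (and, if some variables are unrestricted in sign, by writing each of them as a difference of two non-negative variables).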
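A minimal sketch of the slack-variable step, assuming every original constraint has the form a_i^T x ≤ b_i with x ≥ 0 (the function name `to_equational` is illustrative, not from the source). Each inequality row gets its own slack variable s_i ≥ 0, so the constraint matrix becomes [A | I] and the objective vector is padded with m zeros:

```python
def to_equational(A_ub, b_ub, c):
    """Convert  max c^T x  s.t.  A_ub x <= b_ub, x >= 0  into equational form.

    Returns (A_eq, b_eq, c_eq) for  max c_eq^T (x, s)  s.t.  A_eq (x, s) = b_eq,
    (x, s) >= 0, where s holds one slack variable per inequality row.
    """
    m = len(A_ub)
    # Append the m-by-m identity: row i gets slack variable s_i with coefficient 1.
    A_eq = [list(row) + [1 if i == j else 0 for j in range(m)]
            for i, row in enumerate(A_ub)]
    # Slack variables do not appear in the objective.
    c_eq = list(c) + [0] * m
    return A_eq, list(b_ub), c_eq


A_eq, b_eq, c_eq = to_equational([[1, 2], [3, 1]], [4, 5], [1, 1])
print(A_eq)  # [[1, 2, 1, 0], [3, 1, 0, 1]]
print(c_eq)  # [1, 1, 0, 0]
```

Because of the identity block, the rows of the resulting matrix are automatically linearly independent.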

As a preliminary clean-up step, we verify that:

- The system [math]\displaystyle{ A\mathbf{x} = \mathbf{b} }[/math] has at least one solution (otherwise the whole LP has no solution and there is nothing more to do);

- All m rows of the matrix [math]\displaystyle{ A }[/math] are linearly independent, i.e., its rank is m (otherwise we can just delete redundant rows without changing the LP).
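Both checks can be done with one pass of Gaussian elimination over the augmented matrix [A | b]. The sketch below (illustrative function name, exact arithmetic via Python's `fractions.Fraction`) keeps a row only if it is linearly independent of the rows kept so far, and reports that the system has no solution when a dependent row of A comes with an inconsistent right-hand side:

```python
from fractions import Fraction


def remove_redundant_rows(A, b):
    """Drop rows of A x = b that are combinations of earlier rows.

    Raises ValueError if A x = b has no solution at all.
    """
    kept, basis = [], []          # basis: (pivot column, reduced augmented row)
    for i, (row, rhs) in enumerate(zip(A, b)):
        r = [Fraction(v) for v in row] + [Fraction(rhs)]
        # Eliminate every earlier pivot column from this row.
        for piv, prow in basis:
            if r[piv] != 0:
                f = r[piv] / prow[piv]
                r = [a - f * p for a, p in zip(r, prow)]
        piv = next((j for j in range(len(row)) if r[j] != 0), None)
        if piv is None:
            if r[-1] != 0:        # 0 = nonzero: inconsistent system
                raise ValueError("A x = b has no solution")
            continue              # row i is redundant
        basis.append((piv, r))
        kept.append(i)
    return [A[i] for i in kept], [b[i] for i in kept]
```

For example, with rows (1, 1, 0), (2, 2, 0), (0, 1, 1) and b = (1, 2, 3), the second row is twice the first and is dropped; changing b to (1, 3, 3) makes the system inconsistent.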

Feasible solution

A feasible solution of the LP is any vector [math]\displaystyle{ \mathbf{x} \ge 0 }[/math] such that [math]\displaystyle{ A\mathbf{x} = \mathbf{b} }[/math]. We assume that there is at least one feasible solution. If m = n, then A is invertible, so [math]\displaystyle{ A\mathbf{x} = \mathbf{b} }[/math] has exactly one solution, and it is the only feasible solution. Typically m < n, so the system [math]\displaystyle{ A\mathbf{x} = \mathbf{b} }[/math] has infinitely many solutions; each such solution that is also non-negative is a feasible solution of the LP.

Basis

A basis of the LP is a nonsingular m-by-m submatrix of A, that is, a submatrix consisting of all m rows and m of the n columns.

Sometimes, the term basis is used not for the submatrix itself, but for the set of indices of its columns. Let B be a subset of m indices from {1,...,n}. Denote by [math]\displaystyle{ A_B }[/math] the square m-by-m matrix made of the m columns of [math]\displaystyle{ A }[/math] indexed by B. If [math]\displaystyle{ A_B }[/math] is nonsingular, the columns indexed by B are a basis of the column space of [math]\displaystyle{ A }[/math]. In this case, we call B a basis of the LP.

Since the rank of [math]\displaystyle{ A }[/math] is m, it has at least one basis; since [math]\displaystyle{ A }[/math] has n columns, it has at most [math]\displaystyle{ \binom{n}{m} }[/math] bases.
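The bound can be made concrete by enumerating all m-element column subsets and keeping those whose submatrix is nonsingular. A minimal sketch with illustrative names, using 0-based column indices and exact rational elimination:

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def is_nonsingular(M):
    """Return True if the square matrix M has full rank (exact elimination)."""
    rows = [[Fraction(v) for v in row] for row in M]
    k = len(rows)
    for col in range(k):
        piv = next((i for i in range(col, k) if rows[i][col] != 0), None)
        if piv is None:
            return False          # no pivot in this column: dependent columns
        rows[col], rows[piv] = rows[piv], rows[col]
        for i in range(col + 1, k):
            f = rows[i][col] / rows[col][col]
            rows[i] = [a - f * p for a, p in zip(rows[i], rows[col])]
    return True


def bases(A):
    """Yield every basis of A as a tuple of 0-based column indices."""
    m, n = len(A), len(A[0])
    for B in combinations(range(n), m):
        if is_nonsingular([[row[j] for j in B] for row in A]):
            yield B


A = [[1, 2, 0], [2, 4, 1]]
print(list(bases(A)), comb(3, 2))  # [(0, 2), (1, 2)] 3
```

Here columns 0 and 1 are proportional, so only 2 of the 3 possible subsets are bases.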

Basic feasible solution

Given a basis B, we say that a feasible solution [math]\displaystyle{ \mathbf{x} }[/math] is a basic feasible solution with basis B if all its non-zero variables are indexed by B, that is, for all [math]\displaystyle{ j\not\in B: ~~ x_j = 0 }[/math].
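Given a basis B, the only candidate for a BFS with basis B is obtained by solving A_B x_B = b and setting all other variables to 0; it is a BFS exactly when that solution is non-negative. A minimal sketch with illustrative function names, exact rational arithmetic, and 0-based column indices:

```python
from fractions import Fraction


def solve(M, v):
    """Solve the square system M z = v exactly; return None if M is singular."""
    k = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(M, v)]
    for col in range(k):
        piv = next((i for i in range(col, k) if aug[i][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(k):                 # Gauss-Jordan: clear column everywhere
            if i != col and aug[i][col] != 0:
                f = aug[i][col] / aug[col][col]
                aug[i] = [a - f * q for a, q in zip(aug[i], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def basic_feasible_solution(A, b, B):
    """Return the BFS with basis B (0-based column indices), or None.

    None means either that A_B is singular (B is not a basis) or that the
    unique solution of A_B x_B = b has a negative entry (B is not feasible).
    """
    x_B = solve([[row[j] for j in B] for row in A], b)
    if x_B is None or any(v < 0 for v in x_B):
        return None
    x = [Fraction(0)] * len(A[0])          # non-basic variables are 0
    for j, v in zip(B, x_B):
        x[j] = v
    return x
```

With the constraints of the example below, `basic_feasible_solution(A, [14, 7], (1, 3))` returns (0, 2, 0, 1, 0) as `Fraction` values, while `(0, 1)` gives `None` because that basis yields x_1 = -21.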

Properties

1. A BFS is determined only by the constraints of the LP (the matrix [math]\displaystyle{ A }[/math] and the vector [math]\displaystyle{ \mathbf{b} }[/math]); it does not depend on the optimization objective.

2. By definition, a BFS has at most m non-zero variables and at least n-m zero variables. A BFS can have fewer than m non-zero variables; in that case, it can have many different bases, all of which contain the indices of its non-zero variables.

3. A feasible solution [math]\displaystyle{ \mathbf{x} }[/math] is basic if-and-only-if the columns of the matrix [math]\displaystyle{ A_K }[/math] are linearly independent, where K is the set of indices of the non-zero elements of [math]\displaystyle{ \mathbf{x} }[/math].[1]:45

4. Each basis determines a unique BFS: for each basis B of m indices, there is at most one BFS [math]\displaystyle{ \mathbf{x_B} }[/math] with basis B. This is because [math]\displaystyle{ \mathbf{x_B} }[/math] must satisfy the constraint [math]\displaystyle{ A_B \mathbf{x_B} = b }[/math], and by definition of basis the matrix [math]\displaystyle{ A_B }[/math] is non-singular, so the constraint has a unique solution:

[math]\displaystyle{ \mathbf{x_B} = {A_B}^{-1}\cdot b }[/math]

The opposite is not true: each BFS can come from many different bases. If the unique solution [math]\displaystyle{ \mathbf{x_B} = {A_B}^{-1}\cdot b }[/math] satisfies the non-negativity constraints [math]\displaystyle{ \mathbf{x_B} \geq 0 }[/math], then B is called a feasible basis.

5. If a linear program has an optimal solution (for example, if it has a feasible solution and the set of feasible solutions is bounded), then it has an optimal BFS. In the bounded case, this is a consequence of the Bauer maximum principle: the objective of a linear program is convex; the set of feasible solutions is convex and compact (it is a bounded intersection of hyperplanes and closed half-spaces); therefore the objective attains its maximum at an extreme point of the set of feasible solutions, and these extreme points are exactly the BFS-s.

Since the number of BFS-s is finite and bounded by [math]\displaystyle{ \binom{n}{m} }[/math], an optimal solution to any LP that has one can be found in finite time by evaluating the objective function at each of these at most [math]\displaystyle{ \binom{n}{m} }[/math] BFS-s. This is not the most efficient way to solve an LP; the simplex algorithm examines the BFS-s in a much more efficient way.
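The brute-force procedure can be sketched as follows, assuming the LP has an optimal solution (if the LP is unbounded, the best BFS returned here is not an optimum). The function names are illustrative; columns are 0-based:

```python
from fractions import Fraction
from itertools import combinations


def solve(M, v):
    """Solve the square system M z = v exactly; return None if M is singular."""
    k = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(M, v)]
    for col in range(k):
        piv = next((i for i in range(col, k) if aug[i][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(k):
            if i != col and aug[i][col] != 0:
                f = aug[i][col] / aug[col][col]
                aug[i] = [a - f * q for a, q in zip(aug[i], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def best_bfs_by_enumeration(A, b, c):
    """Maximize c^T x over all BFS-s of {A x = b, x >= 0}.

    Returns (value, x), or None if no basis is feasible.
    """
    m, n = len(A), len(A[0])
    best = None
    for B in combinations(range(n), m):
        x_B = solve([[row[j] for j in B] for row in A], b)
        if x_B is None or any(v < 0 for v in x_B):
            continue                      # not a basis, or not a feasible one
        x = [Fraction(0)] * n
        for j, v in zip(B, x_B):
            x[j] = v
        value = sum(cj * xj for cj, xj in zip(c, x))
        if best is None or value > best[0]:
            best = (value, x)
    return best
```

With the constraints of the example below and a hypothetical objective c = (1, 1, 1, 1, 1), this returns the value 49/5 at x = (42/5, 0, 0, 7/5, 0), the BFS with basis {1, 4}.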

Examples

Consider a linear program with the following constraints:

[math]\displaystyle{ \begin{align} x_1 + 5 x_2 + 3 x_3 + 4 x_4 + 6 x_5 &= 14 \\ x_2 + 3 x_3 + 5 x_4 + 6 x_5 &= 7 \\ \forall i\in\{1,\ldots,5\}: x_i&\geq 0 \end{align} }[/math]

The matrix A is:

[math]\displaystyle{ A = \begin{pmatrix} 1 & 5 & 3 & 4 & 6 \\ 0 & 1 & 3 & 5 & 6 \end{pmatrix} ~~~~~ \mathbf{b} = (14~~7) }[/math]

Here, m=2 and there are 10 subsets of 2 indices; however, not all of them are bases: the set {3,5} is not a basis, since columns 3 and 5 are linearly dependent.

The set B={2,4} is a basis, since the matrix [math]\displaystyle{ A_B = \begin{pmatrix} 5 & 4 \\ 1 & 5 \end{pmatrix} }[/math] is non-singular.

The unique BFS corresponding to this basis is [math]\displaystyle{ x_B = (0~~2~~0~~1~~0) }[/math].
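The numbers in this example can be checked directly. Since m = 2, each candidate basis is a 2-by-2 system that Cramer's rule solves exactly; the sketch below uses 1-based column indices as in the text, and the helper names are illustrative:

```python
from fractions import Fraction
from itertools import combinations

A = [[1, 5, 3, 4, 6],
     [0, 1, 3, 5, 6]]
b = [14, 7]


def det2(B):
    """Determinant of the 2-by-2 submatrix A_B (B holds 1-based column indices)."""
    i, j = B
    return A[0][i - 1] * A[1][j - 1] - A[0][j - 1] * A[1][i - 1]


def bfs_candidate(B):
    """Solve A_B x_B = b by Cramer's rule and embed x_B into a length-5 vector."""
    i, j = B
    d = det2(B)
    xi = Fraction(b[0] * A[1][j - 1] - A[0][j - 1] * b[1], d)
    xj = Fraction(A[0][i - 1] * b[1] - b[0] * A[1][i - 1], d)
    x = [Fraction(0)] * 5
    x[i - 1], x[j - 1] = xi, xj
    return x


pairs = list(combinations(range(1, 6), 2))
print(len(pairs))                              # 10
print([B for B in pairs if det2(B) == 0])      # [(3, 5)]
print(det2((2, 4)))                            # 21
print([int(v) for v in bfs_candidate((2, 4))]) # [0, 2, 0, 1, 0]
feasible = [B for B in pairs if det2(B) != 0 and min(bfs_candidate(B)) >= 0]
print(feasible)  # [(1, 3), (1, 4), (1, 5), (2, 3), (2, 4), (2, 5)]
```

So 9 of the 10 subsets are bases, and 6 of those are feasible bases; the remaining three, {1,2}, {3,4} and {4,5}, give a negative variable.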

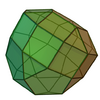

Geometric interpretation

The set of all feasible solutions is an intersection of hyperplanes and closed half-spaces. Therefore, it is a convex polyhedron. If it is bounded, then it is a convex polytope. Each BFS corresponds to a vertex of this polyhedron.[1]:53–56

Basic feasible solutions for the dual problem

As mentioned above, every basis B defines a unique basic solution [math]\displaystyle{ \mathbf{x_B} = {A_B}^{-1}\cdot b }[/math], which is a BFS whenever it is non-negative. In a similar way, each basis defines a solution to the dual linear program:

- minimize [math]\displaystyle{ \mathbf{b^T} \mathbf{y} }[/math]

- subject to [math]\displaystyle{ A^T\mathbf{y} \geq \mathbf{c} }[/math].

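The solution is [math]\displaystyle{ \mathbf{y_B} = {A^T_B}^{-1}\cdot \mathbf{c_B} }[/math], where [math]\displaystyle{ \mathbf{c_B} }[/math] is the vector of the m entries of [math]\displaystyle{ \mathbf{c} }[/math] indexed by B.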
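For the same basis B, the primal and dual solutions always have equal objective values. Writing [math]\displaystyle{ \mathbf{c_B} }[/math] for the m entries of c indexed by B, and using that the dual solution is [math]\displaystyle{ \mathbf{y_B} = {A^T_B}^{-1} \mathbf{c_B} }[/math], a one-line derivation gives:

[math]\displaystyle{ \mathbf{b^T} \mathbf{y_B} = \mathbf{b^T} {A^T_B}^{-1} \mathbf{c_B} = \mathbf{c_B^T} {A_B}^{-1} \mathbf{b} }[/math]

The right-hand side is exactly the primal objective value [math]\displaystyle{ \mathbf{c^T} \mathbf{x_B} }[/math], because the basic entries of [math]\displaystyle{ \mathbf{x_B} }[/math] are [math]\displaystyle{ {A_B}^{-1} \mathbf{b} }[/math] and its non-basic entries are 0. By weak duality, if the primal solution is feasible for the primal and the dual solution is feasible for the dual, then both are optimal.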

Finding an optimal BFS

There are several methods for finding a BFS that is also optimal.

Using the simplex algorithm

In practice, the easiest way to find an optimal BFS is to use the simplex algorithm. It keeps, at each point of its execution, a "current basis" B (a subset of m out of n variables), a "current BFS", and a "current tableau". The tableau is a representation of the linear program where the basic variables are expressed in terms of the non-basic ones:[1]:65

[math]\displaystyle{ \begin{align} x_B &= p + Q x_N \\ z &= z_0 + r^T x_N \end{align} }[/math]

where [math]\displaystyle{ x_B }[/math] is the vector of m basic variables, [math]\displaystyle{ x_N }[/math] is the vector of n-m non-basic variables, and [math]\displaystyle{ z }[/math] is the maximization objective. Since the non-basic variables equal 0, the current BFS has basic part [math]\displaystyle{ x_B = p }[/math] (and [math]\displaystyle{ x_N = 0 }[/math]), and the current value of the objective is [math]\displaystyle{ z_0 }[/math].
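The tableau coefficients follow directly from the constraints. Splitting the columns of A into [math]\displaystyle{ A_B }[/math] (basic) and [math]\displaystyle{ A_N }[/math] (non-basic), and c into [math]\displaystyle{ \mathbf{c_B} }[/math] and [math]\displaystyle{ \mathbf{c_N} }[/math] in the same way (notation introduced here for the derivation), the equation [math]\displaystyle{ A_B x_B + A_N x_N = \mathbf{b} }[/math] gives:

[math]\displaystyle{ \begin{align} x_B &= {A_B}^{-1}\mathbf{b} - {A_B}^{-1} A_N x_N \\ z &= \mathbf{c_B^T} x_B + \mathbf{c_N^T} x_N = \mathbf{c_B^T} {A_B}^{-1}\mathbf{b} + \left(\mathbf{c_N^T} - \mathbf{c_B^T} {A_B}^{-1} A_N\right) x_N \end{align} }[/math]

so [math]\displaystyle{ p = {A_B}^{-1}\mathbf{b} }[/math], [math]\displaystyle{ Q = -{A_B}^{-1} A_N }[/math], [math]\displaystyle{ z_0 = \mathbf{c_B^T} p }[/math], and [math]\displaystyle{ r^T = \mathbf{c_N^T} - \mathbf{c_B^T} {A_B}^{-1} A_N }[/math].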

If all coefficients in [math]\displaystyle{ r }[/math] are non-positive, then the current BFS is optimal and [math]\displaystyle{ z_0 }[/math] is the optimal value: since all variables (including all non-basic variables) must be at least 0, the second line implies [math]\displaystyle{ z\leq z_0 }[/math] for every feasible solution.

If some coefficients in [math]\displaystyle{ r }[/math] are positive, then it may be possible to increase the objective. For example, if [math]\displaystyle{ x_5 }[/math] is non-basic and its coefficient in [math]\displaystyle{ r }[/math] is positive, then increasing it above 0 may make [math]\displaystyle{ z }[/math] larger. If it is possible to do so without violating other constraints, then the increased variable becomes basic (it "enters the basis"), while some basic variable is decreased to 0 to keep the equality constraints and thus becomes non-basic (it "exits the basis").

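If the entering and exiting variables are chosen carefully (for example, with Bland's smallest-index rule, which prevents cycling), then [math]\displaystyle{ z }[/math] never decreases and the algorithm terminates at an optimal BFS, unless it detects that the objective is unbounded.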
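A compact sketch of this loop, under stated assumptions: it is the revised form of the simplex method (it recomputes p, the dual vector y and the coefficients r from the current basis in every iteration instead of updating a stored tableau), it applies Bland's smallest-index rule to both the entering and the exiting variable, and it must be given a feasible starting basis (finding one, e.g. with a phase-one LP, is not shown). Function names are illustrative; columns are 0-based:

```python
from fractions import Fraction


def solve(M, v):
    """Solve the square system M z = v exactly; return None if M is singular."""
    k = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(M, v)]
    for col in range(k):
        piv = next((i for i in range(col, k) if aug[i][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(k):
            if i != col and aug[i][col] != 0:
                f = aug[i][col] / aug[col][col]
                aug[i] = [a - f * q for a, q in zip(aug[i], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def simplex(A, b, c, B):
    """Maximize c^T x s.t. A x = b, x >= 0, starting from a feasible basis B.

    B: list of m 0-based column indices with A_B^{-1} b >= 0 (assumed, not checked).
    Returns ("optimal", x, z) or ("unbounded", None, None).
    """
    m, n = len(A), len(A[0])
    B = list(B)

    def column(j):
        return [A[i][j] for i in range(m)]

    while True:
        A_B = [[A[i][j] for j in B] for i in range(m)]
        p = solve(A_B, b)                                  # basic part of the BFS
        y = solve([list(t) for t in zip(*A_B)], [c[j] for j in B])  # y^T = c_B^T A_B^{-1}
        # r_j = c_j - y^T A_j: coefficient of non-basic x_j in the row for z.
        r = {j: c[j] - sum(yi * a for yi, a in zip(y, column(j)))
             for j in range(n) if j not in B}
        entering = min((j for j, rj in r.items() if rj > 0), default=None)
        if entering is None:                               # every r_j <= 0: optimal
            x = [Fraction(0)] * n
            for j, v in zip(B, p):
                x[j] = v
            return "optimal", x, sum(c[j] * v for j, v in zip(B, p))
        d = solve(A_B, column(entering))                   # x_B = p - t*d when x_entering = t
        ratios = [(p[i] / d[i], B[i], i) for i in range(m) if d[i] > 0]
        if not ratios:                                     # z grows without bound
            return "unbounded", None, None
        _, _, leave = min(ratios)                          # ties: smallest variable index
        B[leave] = entering
```

For the constraints of the example above, a hypothetical objective c = (1, 1, 1, 1, 1) and the starting basis {2, 4} (0-based `[1, 3]`), the loop makes one pivot (x_1 enters, x_2 exits) and stops with x = (42/5, 0, 0, 7/5, 0) and z = 49/5.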

Converting any optimal solution to an optimal BFS

In the worst case, the simplex algorithm may require exponentially many steps to complete. There are algorithms for solving an LP in weakly-polynomial time, such as the ellipsoid method; however, they usually return optimal solutions that are not basic.

However, given any optimal solution to the LP, it is easy to find an optimal feasible solution that is also basic.[2] (See also "External links" below.)
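One standard way to do this is sketched below. It is not necessarily the exact procedure of the cited sources, and it assumes that the given x is optimal. Let K be the set of indices of the positive entries of x. While the columns of A_K are linearly dependent (by property 3 above, exactly when x is not basic), take a nonzero d with A_K d = 0. Both x + εd and x - εd stay feasible for small ε, so optimality forces c^T d = 0. Moving along d until some positive variable reaches 0 keeps x feasible and optimal and strictly shrinks K, so at most n steps are needed. Function names are illustrative:

```python
from fractions import Fraction


def null_vector(M, k):
    """Return a nonzero d with M d = 0 (M has k columns), or None if none exists."""
    rows = [[Fraction(v) for v in row] for row in M]
    pivots, r = [], 0
    for col in range(k):                    # reduce M to reduced row echelon form
        piv = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if piv is None:
            continue
        rows[r], rows[piv] = rows[piv], rows[r]
        p = rows[r][col]
        rows[r] = [v / p for v in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [a - f * q for a, q in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
    free = next((j for j in range(k) if j not in pivots), None)
    if free is None:
        return None                         # columns are linearly independent
    d = [Fraction(0)] * k
    d[free] = Fraction(1)
    for i, col in enumerate(pivots):
        d[col] = -rows[i][free]
    return d


def purify(A, c, x):
    """Turn an optimal feasible x of  max c^T x, A x = b, x >= 0  into an optimal BFS."""
    x = [Fraction(v) for v in x]
    while True:
        K = [j for j, v in enumerate(x) if v > 0]          # support of x
        d = null_vector([[row[j] for j in K] for row in A], len(K))
        if d is None:
            return x                                       # A_K independent: x is basic
        if sum(c[j] * dj for j, dj in zip(K, d)) != 0:
            raise ValueError("x is not optimal: moving along d improves it")
        if not any(dj < 0 for dj in d):
            d = [-dj for dj in d]                          # make some entry decrease
        t = min(x[j] / -dj for j, dj in zip(K, d) if dj < 0)
        for j, dj in zip(K, d):
            x[j] += t * dj                                 # A x unchanged since A_K d = 0
```

For example, with the constraints of the example above and a hypothetical objective c = (0, 0, 1, 0, 2), every feasible x with x_2 = x_4 = 0 is optimal (value 7/3). Starting from the non-basic optimal point (7, 0, 7/6, 0, 7/12), one step reaches the optimal BFS (7, 0, 0, 0, 7/6).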

Finding a basis that is both primal-optimal and dual-optimal

A basis B of the LP is called dual-optimal if the solution [math]\displaystyle{ \mathbf{y_B} = {A^T_B}^{-1}\cdot \mathbf{c_B} }[/math] is an optimal solution to the dual linear program, that is, it minimizes [math]\displaystyle{ \mathbf{b^T} \mathbf{y} }[/math]. In general, a primal-optimal basis is not necessarily dual-optimal, and a dual-optimal basis is not necessarily primal-optimal (in fact, the solution of a primal-optimal basis may even be infeasible for the dual, and vice versa).

If [math]\displaystyle{ \mathbf{x_B} = {A_B}^{-1}\cdot b }[/math] is an optimal BFS of the primal LP and [math]\displaystyle{ \mathbf{y_B} = {A^T_B}^{-1}\cdot \mathbf{c_B} }[/math] is an optimal solution of the dual LP, then the basis B is called PD-optimal. Every LP with an optimal solution has a PD-optimal basis, and the simplex algorithm finds one. However, its run-time is exponential in the worst case. Nimrod Megiddo proved the following theorems:[2]

- There exists a strongly polynomial time algorithm that inputs an optimal solution to the primal LP and an optimal solution to the dual LP, and returns an optimal basis.

- If there exists a strongly polynomial time algorithm that inputs an optimal solution to only the primal LP (or only the dual LP) and returns an optimal basis, then there exists a strongly-polynomial time algorithm for solving any linear program (the latter is a famous open problem).

Megiddo's algorithms can be executed using a tableau, just like the simplex algorithm.
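Checking whether a given basis is PD-optimal needs no optimization at all: since the primal and dual solutions of the same basis always have equal objective values (both equal the value of c_B^T A_B^{-1} b), weak duality implies that B is PD-optimal exactly when x_B ≥ 0 and A^T y_B ≥ c. A minimal sketch with illustrative names and 0-based columns:

```python
from fractions import Fraction


def solve(M, v):
    """Solve the square system M z = v exactly; return None if M is singular."""
    k = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(M, v)]
    for col in range(k):
        piv = next((i for i in range(col, k) if aug[i][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(k):
            if i != col and aug[i][col] != 0:
                f = aug[i][col] / aug[col][col]
                aug[i] = [a - f * q for a, q in zip(aug[i], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def is_pd_optimal(A, b, c, B):
    """True iff x_B = A_B^{-1} b >= 0 and y_B = (A_B^T)^{-1} c_B satisfies A^T y_B >= c."""
    m, n = len(A), len(A[0])
    A_B = [[A[i][j] for j in B] for i in range(m)]
    x_B = solve(A_B, b)
    if x_B is None:
        raise ValueError("B is not a basis")
    y_B = solve([list(t) for t in zip(*A_B)], [c[j] for j in B])
    primal_ok = all(v >= 0 for v in x_B)
    dual_ok = all(sum(A[i][j] * y_B[i] for i in range(m)) >= c[j] for j in range(n))
    return primal_ok and dual_ok
```

With the example constraints and a hypothetical objective c = (1, 1, 1, 1, 1), the basis {1, 4} (0-based `(0, 3)`) is PD-optimal, while the feasible basis {2, 4} is not: its dual solution y_B = (4/21, 1/21) violates the first dual constraint, illustrating that a feasible primal basis need not be dual-feasible.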

External links

- How to move from an optimal feasible solution to an optimal basic feasible solution. Paul Robin, Operations Research Stack Exchange.

References

- [1] Gärtner, Bernd; Matoušek, Jiří (2006). Understanding and Using Linear Programming. Berlin: Springer. ISBN 3-540-30697-8. pp. 44–48.

- [2] Megiddo, Nimrod (1991-02-01). "On Finding Primal- and Dual-Optimal Bases". ORSA Journal on Computing 3 (1): 63–65. doi:10.1287/ijoc.3.1.63. ISSN 0899-1499. https://pubsonline.informs.org/doi/abs/10.1287/ijoc.3.1.63.
