Dual linear program

The dual of a given linear program (LP) is another LP that is derived from the original (the primal) LP in the following schematic way:

- Each variable in the primal LP becomes a constraint in the dual LP;

- Each constraint in the primal LP becomes a variable in the dual LP;

- The objective direction is reversed: maximization in the primal becomes minimization in the dual, and vice versa.

The weak duality theorem states that the objective value of the dual LP at any feasible solution is always a bound on the objective value of the primal LP at any feasible solution (an upper bound if the primal is a maximization problem, a lower bound if it is a minimization problem). In particular, this bounding property holds for the optimal values of the dual and primal LPs.

The strong duality theorem states that, moreover, if the primal has an optimal solution then the dual has an optimal solution too, and the two optima are equal.[1]

These theorems belong to a larger class of duality theorems in optimization. The strong duality theorem is one of the cases in which the duality gap (the gap between the optimum of the primal and the optimum of the dual) is 0.

Form of the dual LP

Suppose we have the linear program:

Maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$.

We would like to construct an upper bound on the optimal value. So we form a linear combination of the constraints, with non-negative coefficients, such that the coefficient of each variable of $x$ in the combination is at least the corresponding entry of $c^T$. Because $x \ge 0$, this linear combination gives an upper bound on the objective. The variables $y$ of the dual LP are the coefficients of this linear combination. The dual LP looks for the coefficients that minimize the resulting upper bound. This gives the following LP:[1]: 81–83

Minimize $b^T y$ subject to $A^T y \ge c$, $y \ge 0$

This LP is called the dual of the original LP.
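
As a minimal sketch of this construction, the dual data can be obtained from the primal data by transposing the matrix and swapping the roles of the two vectors. The function name `symmetric_dual` and the numbers below are hypothetical and chosen only for illustration.

```python
def symmetric_dual(c, A, b):
    """Data of the dual of 'maximize c^T x subject to A x <= b, x >= 0'.

    The dual is 'minimize b^T y subject to A^T y >= c, y >= 0', so the
    returned triple is (dual objective, dual matrix, dual right-hand side).
    """
    A_T = [list(col) for col in zip(*A)]  # rows of A^T are the columns of A
    return list(b), A_T, list(c)

# Hypothetical primal with 2 variables and 3 constraints.
c = [3, 2]
A = [[1, 1],
     [2, 1],
     [1, 3]]
b = [10, 16, 24]

dual_obj, dual_A, dual_rhs = symmetric_dual(c, A, b)
print(dual_obj)  # coefficients of b^T y
print(dual_A)    # one row (dual constraint) per primal variable
print(dual_rhs)  # right-hand sides c
```

The dual has one variable per primal constraint (three here) and one constraint per primal variable (two here).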

Interpretation

The duality theorem has an economic interpretation.[2][3] If we interpret the primal LP as a classical "resource allocation" problem, its dual LP can be interpreted as a "resource valuation" problem.

Consider a factory that is planning its production of goods $1, \ldots, n$, which it produces using raw materials $1, \ldots, m$. To produce one unit of good $i$, the factory needs $A_{ji}$ units of raw material $j$. Let $x$ be the factory's production schedule (produce $x_i$ units of good $i$), let $c \ge 0$ be the market prices (a unit of good $i$ can sell for $c_i$), and let $b \ge 0$ be the amounts of raw material that the factory has available (it has $b_j$ units of raw material $j$). The constraints are that $x \ge 0$ (it cannot produce negative goods), and that the factory can only produce as many goods as its raw materials allow, i.e., $Ax \le b$. The factory wishes to maximize its total revenue $c^T x$.

Thus, the constrained revenue maximization is the primal LP:

Maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$

Now consider another factory that wishes to purchase the entire stock of raw material from the previous factory. It offers a price vector $y$ (a unit of raw material $j$ for $y_j$). For the offer to be accepted, it should be the case that $A^T y \ge c$, as otherwise the first factory could earn more cash by producing a certain product than by selling off the raw material used to produce it. It should also be the case that $y \ge 0$, since the first factory would not sell its materials for a negative price. The second factory wishes to minimize the amount $b^T y$ that it pays for the first factory's entire stock of raw materials. Then, the second factory's optimization problem is the dual LP:

Minimize $b^T y$ subject to $A^T y \ge c$, $y \ge 0$

The weak duality theorem states that the duality gap between the two LP problems is non-negative. In other words, the optimal value of this dual LP is at least as large as the optimal value of the primal LP, meaning that an optimal offer $b^T y^*$ by the second factory will always be no less than the first factory's optimal revenue $c^T x^*$. If the first factory is given an offer to buy its entire stock of raw material, at per-unit prices $y$ such that $A^T y \ge c$, $y \ge 0$, then it should take the offer. It will make at least as much revenue as it could by producing finished goods.

The strong duality theorem further states that the duality gap is zero. With strong duality, the dual solution $y^*$ is, economically speaking, the "equilibrium price" (see shadow price) for the raw material that a factory with production matrix $A$ and raw material stock $b$ would accept for raw material, given the market prices $c$ for finished goods. (Note that $y^*$ may not be unique, so the equilibrium price may not be fully determined by $A$, $b$, and $c$.)

To see why, suppose the raw material prices $y \ge 0$ are such that $(A^T y)_i < c_i$ for some $i$. Then the factory would purchase more raw material to produce more of good $i$, since the prices are "too low". Conversely, if the raw material prices satisfy $A^T y \ge c$, $y \ge 0$, but $y$ does not minimize $b^T y$, then the factory would make more money by selling its raw material than by producing goods, since the prices are "too high". At the equilibrium price $y^*$, the factory cannot increase its profit by purchasing or selling off raw material.

The duality theorem has a physical interpretation too.[1]: 86–87

Constructing the dual LP

In general, given a primal LP, the following algorithm can be used to construct its dual LP.[1]: 85 The primal LP is defined by:

- A set of n variables: $x_1, \ldots, x_n$.

- For each variable $x_i$, a sign constraint: it should be either non-negative ($x_i \ge 0$), or non-positive ($x_i \le 0$), or unconstrained ($x_i \in \mathbb{R}$).

- An objective function: maximize $c_1 x_1 + \cdots + c_n x_n$

- A list of m constraints. Each constraint j is: $a_{j1} x_1 + \cdots + a_{jn} x_n \lesseqqgtr b_j$, where the symbol before the $b_j$ can be one of $\ge$ or $\le$ or $=$.

The dual LP is constructed as follows.

- Each primal constraint becomes a dual variable. So there are m variables: $y_1, \ldots, y_m$.

- The sign constraint of each dual variable is "opposite" to the sign of its primal constraint. So "$\ge b_j$" becomes $y_j \le 0$ and "$\le b_j$" becomes $y_j \ge 0$ and "$= b_j$" becomes $y_j \in \mathbb{R}$.

- The dual objective function is: minimize $b_1 y_1 + \cdots + b_m y_m$

- Each primal variable becomes a dual constraint. So there are n constraints. The coefficient of a dual variable in the dual constraint is the coefficient of its primal variable in its primal constraint. So each constraint i is: $a_{1i} y_1 + \cdots + a_{mi} y_m \lesseqqgtr c_i$, where the symbol before the $c_i$ is similar to the sign constraint on variable i in the primal LP. So $x_i \le 0$ becomes "$\le c_i$" and $x_i \ge 0$ becomes "$\ge c_i$" and $x_i \in \mathbb{R}$ becomes "$= c_i$".

From this algorithm, it is easy to see that the dual of the dual is the primal.
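
The recipe can be sketched in a few lines of code. The encoding below is an assumption made for illustration: sign constraints are the strings `">=0"`, `"<=0"` and `"free"`, relations are `"<="`, `">="` and `"="`, and the function name `dual_of_max_lp` is hypothetical. It handles only a maximization primal; a minimization primal uses the mirrored rules.

```python
# Sign of a primal variable -> relation of the matching dual constraint.
VAR_SIGN_TO_DUAL_REL = {">=0": ">=", "<=0": "<=", "free": "="}
# Relation of a primal constraint -> sign of the matching dual variable.
CON_REL_TO_DUAL_SIGN = {"<=": ">=0", ">=": "<=0", "=": "free"}

def dual_of_max_lp(c, var_signs, A, rels, b):
    """Dual of: maximize c^T x with rows A[j] . x (rels[j]) b[j].

    Returns the data of the minimization dual as a dictionary.
    """
    n, m = len(c), len(b)
    assert len(var_signs) == n and len(rels) == m
    assert all(len(row) == n for row in A)
    return {
        "objective": list(b),                                  # minimize b^T y
        "var_signs": [CON_REL_TO_DUAL_SIGN[r] for r in rels],  # one y_j per primal constraint
        "A": [[A[j][i] for j in range(m)] for i in range(n)],  # transpose of A
        "rels": [VAR_SIGN_TO_DUAL_REL[s] for s in var_signs],  # one constraint per primal variable
        "rhs": list(c),
    }

# Maximize 3*x1 + 4*x2 subject to 5*x1 + 6*x2 = 7, x1 >= 0, x2 >= 0.
tiny = dual_of_max_lp(c=[3, 4], var_signs=[">=0", ">=0"],
                      A=[[5, 6]], rels=["="], b=[7])
print(tiny)
```

For this input the result describes "minimize 7 y1 subject to 5 y1 ≥ 3, 6 y1 ≥ 4, y1 unconstrained".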

Vector formulations

If all constraints have the same sign, it is possible to present the above recipe in a shorter way using matrices and vectors. The following table shows the relation between various kinds of primals and duals.

| Primal | Dual | Note |
|---|---|---|
| Maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$ | Minimize $b^T y$ subject to $A^T y \ge c$, $y \ge 0$ | This is called a "symmetric" dual problem |
| Maximize $c^T x$ subject to $Ax \le b$ | Minimize $b^T y$ subject to $A^T y = c$, $y \ge 0$ | This is called an "asymmetric" dual problem |
| Maximize $c^T x$ subject to $Ax = b$, $x \ge 0$ | Minimize $b^T y$ subject to $A^T y \ge c$ | |
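
The second row of the table can be derived from the first by a standard substitution. The sketch below assumes the sign-unconstrained vector is split as $x = x^+ - x^-$ with $x^+, x^- \ge 0$; the names $x^+$ and $x^-$ are introduced here for illustration only.

```latex
\begin{aligned}
&\max\ c^T x \ \text{ s.t. } Ax \le b
  && \text{(primal, $x$ unconstrained in sign)} \\
&\max\ c^T x^+ - c^T x^- \ \text{ s.t. } A x^+ - A x^- \le b,\ x^+, x^- \ge 0
  && \text{(substitute } x = x^+ - x^- \text{)} \\
&\min\ b^T y \ \text{ s.t. } A^T y \ge c,\ -A^T y \ge -c,\ y \ge 0
  && \text{(symmetric dual of the previous line)} \\
&\min\ b^T y \ \text{ s.t. } A^T y = c,\ y \ge 0
  && \text{(the two inequalities combine into an equality)}
\end{aligned}
```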

The duality theorems

Below, suppose the primal LP is "maximize $c^T x$ subject to [constraints]" and the dual LP is "minimize $b^T y$ subject to [constraints]".

Weak duality

The weak duality theorem says that, for each feasible solution $x$ of the primal and each feasible solution $y$ of the dual: $c^T x \le b^T y$. In other words, the objective value at each feasible solution of the dual is an upper bound on the objective value of the primal, and the objective value at each feasible solution of the primal is a lower bound on the objective value of the dual. Here is a proof for the primal LP "Maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$":

- $c^T x$

- $= x^T c$ [since this is just a scalar product of the two vectors]

- $\le x^T (A^T y)$ [since $A^T y \ge c$ by the dual constraints, and $x \ge 0$]

- $= (x^T A^T) y$ [by associativity]

- $= (Ax)^T y$ [by properties of transpose]

- $\le b^T y$ [since $Ax \le b$ by the primal constraints, and $y \ge 0$]

Weak duality implies:

$\max_x c^T x \le \min_y b^T y$

In particular, if the primal is unbounded (from above) then the dual has no feasible solution, and if the dual is unbounded (from below) then the primal has no feasible solution.
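
The chain of (in)equalities in the proof can be checked numerically on a concrete instance. The data below are hypothetical, and the pair (x, y) is merely feasible, not optimal, so a gap between the two ends of the chain is expected.

```python
def dot(u, v):
    """Scalar product of two equally long sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

def mat_vec(M, v):
    """Matrix-vector product, with M given as a list of rows."""
    return [dot(row, v) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

# Hypothetical instance of: maximize c^T x subject to A x <= b, x >= 0.
c = [3, 2]
A = [[1, 1], [2, 1], [1, 3]]
b = [10, 16, 24]

x = [2, 5]     # a primal feasible point
y = [2, 1, 0]  # a dual feasible point

Ax = mat_vec(A, x)
ATy = mat_vec(transpose(A), y)

# Feasibility of both points, as required by the theorem.
assert all(xi >= 0 for xi in x) and all(l <= r for l, r in zip(Ax, b))
assert all(yj >= 0 for yj in y) and all(l >= r for l, r in zip(ATy, c))

# c^T x  <=  x^T (A^T y)  =  (A x)^T y  <=  b^T y
chain = [dot(c, x), dot(x, ATy), dot(Ax, y), dot(b, y)]
print(chain)  # [16, 23, 23, 36]
```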

Strong duality

The strong duality theorem says that if one of the two problems has an optimal solution, so does the other one and that the bounds given by the weak duality theorem are tight, i.e.:

$\max_x c^T x = \min_y b^T y$

The strong duality theorem is harder to prove; the proofs usually use the weak duality theorem as a sub-routine.

One proof uses the simplex algorithm and relies on the proof that, with a suitable pivot rule, it provides a correct solution. The proof establishes that, once the simplex algorithm finishes with a solution to the primal LP, a solution to the dual LP can be read from the final tableau. So, by running the simplex algorithm, we obtain solutions to both the primal and the dual simultaneously.[1]: 87–89

Another proof uses the Farkas lemma.[1]: 94

Theoretical implications

1. The weak duality theorem implies that finding a single feasible solution is as hard as finding an optimal feasible solution. Suppose we have an oracle that, given an LP, finds an arbitrary feasible solution (if one exists). Given the LP "Maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$", we can construct another LP by combining this LP with its dual. The combined LP has both $x$ and $y$ as variables:

Maximize 1

subject to $Ax \le b$, $A^T y \ge c$, $c^T x \ge b^T y$, $x \ge 0$, $y \ge 0$

If the combined LP has a feasible solution $(x, y)$, then weak duality gives $c^T x \le b^T y$, which together with the constraint $c^T x \ge b^T y$ forces $c^T x = b^T y$. So $x$ must be a maximal solution of the primal LP and $y$ must be a minimal solution of the dual LP. If the combined LP has no feasible solution, then by strong duality the primal LP has no optimal solution: it is either infeasible or unbounded.

2. The strong duality theorem provides a "good characterization" of the optimal value of an LP in that it allows us to easily prove that some value t is the optimum of some LP. The proof proceeds in two steps:[4]: 260–261

- Show a feasible solution to the primal LP with value t; this proves that the optimum is at least t.

- Show a feasible solution to the dual LP with value t; this proves that the optimum is at most t.
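
The two-step certificate can be verified mechanically. The following is a minimal sketch for the symmetric form "maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$"; the function names and the data are hypothetical, and exact fractions are used so that equality tests are reliable (floating-point data would need a tolerance).

```python
from fractions import Fraction as Fr

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def primal_feasible(A, b, x):
    """True if x >= 0 and A x <= b."""
    return (all(xi >= 0 for xi in x)
            and all(dot(row, x) <= bj for row, bj in zip(A, b)))

def dual_feasible(A, c, y):
    """True if y >= 0 and A^T y >= c."""
    columns = zip(*A)  # columns of A are the rows of A^T
    return (all(yj >= 0 for yj in y)
            and all(dot(col, y) >= ci for col, ci in zip(columns, c)))

def certifies_optimum(c, A, b, x, y, t):
    """True if (x, y) proves that t is the optimal value of the primal."""
    return (primal_feasible(A, b, x) and dot(c, x) == t      # optimum >= t
            and dual_feasible(A, c, y) and dot(b, y) == t)   # optimum <= t

# Hypothetical LP: maximize x1 + x2 s.t. x1 + 2*x2 <= 4, 3*x1 + x2 <= 6, x >= 0.
c = [1, 1]
A = [[1, 2], [3, 1]]
b = [4, 6]
x = [Fr(8, 5), Fr(6, 5)]
y = [Fr(2, 5), Fr(1, 5)]

good = certifies_optimum(c, A, b, x, y, Fr(14, 5))
bad = certifies_optimum(c, A, b, [2, 0], y, 2)
print(good, bad)  # True False
```

The second call fails because the dual value of `y` is 14/5, not 2, so the pair does not certify 2 as the optimum.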

Examples

Tiny example

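Consider the primal LP, with two variables and one constraint:

maximize $3x_1 + 4x_2$ subject to $5x_1 + 6x_2 = 7$, $x_1 \ge 0$, $x_2 \ge 0$

Applying the recipe above gives the following dual LP, with one variable and two constraints:

minimize $7y_1$ subject to $5y_1 \ge 3$, $6y_1 \ge 4$, $y_1 \in \mathbb{R}$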

It is easy to see that the maximum of the primal LP is attained when x1 is minimized to its lower bound (0) and x2 is maximized to its upper bound under the constraint (7/6). The maximum is 4 ⋅ 7/6 = 14/3.

Similarly, the minimum of the dual LP is attained when y1 is minimized to its lower bound under the constraints: the first constraint gives a lower bound of 3/5 while the second constraint gives a stricter lower bound of 4/6, so the actual lower bound is 4/6 and the minimum is 7 ⋅ 4/6 = 14/3.

In accordance with the strong duality theorem, the maximum of the primal equals the minimum of the dual.
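
The two optima can be reproduced with exact arithmetic. This sketch assumes the primal is "maximize 3x1 + 4x2 subject to 5x1 + 6x2 = 7, x1, x2 ≥ 0", with coefficients matching the bounds 7/6, 3/5 and 4/6 quoted above, and it solves both problems by direct inspection rather than with a general LP solver.

```python
from fractions import Fraction as Fr

# Primal: the feasible set is the segment of the line 5*x1 + 6*x2 = 7 inside
# the non-negative quadrant, so the maximum is attained at one of its two endpoints.
endpoints = [(Fr(7, 5), Fr(0)), (Fr(0), Fr(7, 6))]
primal_opt = max(3 * x1 + 4 * x2 for x1, x2 in endpoints)

# Dual: minimize 7*y1 subject to 5*y1 >= 3 and 6*y1 >= 4.
# The objective increases with y1, so the smallest feasible y1 is optimal.
y1 = max(Fr(3, 5), Fr(4, 6))
dual_opt = 7 * y1

print(primal_opt, dual_opt)  # 14/3 14/3
```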

We use this example to illustrate the proof of the weak duality theorem. Suppose that, in the primal LP, we want to get an upper bound on the objective $3x_1 + 4x_2$. We can use the constraint multiplied by some coefficient, say $y_1$. For any $y_1$ we get: $y_1 \cdot (5x_1 + 6x_2) = 7y_1$. Now, if $y_1 \cdot 5x_1 \ge 3x_1$ and $y_1 \cdot 6x_2 \ge 4x_2$, then $y_1 \cdot (5x_1 + 6x_2) \ge 3x_1 + 4x_2$, so $7y_1 \ge 3x_1 + 4x_2$. Hence, the objective of the dual LP is an upper bound on the objective of the primal LP.

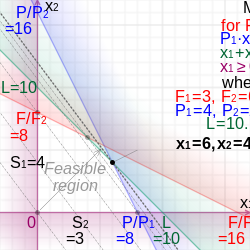

Farmer example

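Consider a farmer who may grow wheat and barley with the set provision of some L land, F fertilizer and P pesticide. To grow one unit of wheat, one unit of land, $F_1$ units of fertilizer and $P_1$ units of pesticide must be used. Similarly, to grow one unit of barley, one unit of land, $F_2$ units of fertilizer and $P_2$ units of pesticide must be used.

The primal problem would be the farmer deciding how much wheat ($x_1$) and barley ($x_2$) to grow if their sell prices are $S_1$ and $S_2$ per unit.

| | Expression | Meaning |
|---|---|---|
| Maximize: | $S_1 x_1 + S_2 x_2$ | (maximize the revenue from producing wheat and barley) |
| subject to: | $x_1 + x_2 \le L$ | (cannot use more land than available) |
| | $F_1 x_1 + F_2 x_2 \le F$ | (cannot use more fertilizer than available) |
| | $P_1 x_1 + P_2 x_2 \le P$ | (cannot use more pesticide than available) |
| | $x_1, x_2 \ge 0$ | (cannot produce negative quantities of wheat or barley). |

In matrix form this becomes:

- Maximize: $\begin{bmatrix} S_1 & S_2 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$

- subject to: $\begin{bmatrix} 1 & 1 \\ F_1 & F_2 \\ P_1 & P_2 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \le \begin{bmatrix} L \\ F \\ P \end{bmatrix}, \quad \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \ge 0$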

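For the dual problem, assume that unit prices $y$ for each of these means of production (inputs) are set by a planning board. The planning board's job is to minimize the total cost of procuring the set amounts of inputs while providing the farmer with a floor on the unit price of each of his crops (outputs), $S_1$ for wheat and $S_2$ for barley. This corresponds to the following LP:

| | Expression | Meaning |
|---|---|---|
| Minimize: | $L y_L + F y_F + P y_P$ | (minimize the total cost of the means of production as the "objective function") |
| subject to: | $y_L + F_1 y_F + P_1 y_P \ge S_1$ | (the farmer must receive no less than $S_1$ for his wheat) |
| | $y_L + F_2 y_F + P_2 y_P \ge S_2$ | (the farmer must receive no less than $S_2$ for his barley) |
| | $y_L, y_F, y_P \ge 0$ | (prices cannot be negative). |

In matrix form this becomes:

- Minimize: $\begin{bmatrix} L & F & P \end{bmatrix} \begin{bmatrix} y_L \\ y_F \\ y_P \end{bmatrix}$

- subject to: $\begin{bmatrix} 1 & F_1 & P_1 \\ 1 & F_2 & P_2 \end{bmatrix} \begin{bmatrix} y_L \\ y_F \\ y_P \end{bmatrix} \ge \begin{bmatrix} S_1 \\ S_2 \end{bmatrix}, \quad \begin{bmatrix} y_L \\ y_F \\ y_P \end{bmatrix} \ge 0$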

The primal problem deals with physical quantities. With all inputs available in limited quantities, and assuming the unit prices of all outputs are known, what quantities of outputs should be produced so as to maximize total revenue? The dual problem deals with economic values. With floor guarantees on all output unit prices, and assuming the available quantities of all inputs are known, what input unit pricing scheme should be set so as to minimize total expenditure?

To each variable in the primal space corresponds an inequality to satisfy in the dual space, both indexed by output type. To each inequality to satisfy in the primal space corresponds a variable in the dual space, both indexed by input type.

The coefficients that bound the inequalities in the primal space are used to compute the objective in the dual space, input quantities in this example. The coefficients used to compute the objective in the primal space bound the inequalities in the dual space, output unit prices in this example.

Both the primal and the dual problems make use of the same matrix. In the primal space, this matrix expresses the consumption of physical quantities of inputs necessary to produce set quantities of outputs. In the dual space, it expresses the creation of the economic values associated with the outputs from set input unit prices.

Since each inequality can be replaced by an equality and a slack variable, this means each primal variable corresponds to a dual slack variable, and each dual variable corresponds to a primal slack variable. This relation allows us to speak about complementary slackness.
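
Complementary slackness can be observed on a numeric instance of the farmer's problem. All numbers below are hypothetical (10 units of land, 16 of fertilizer, 24 of pesticide, and so on), and the optimal pair is supplied by hand rather than computed by a solver; the code only checks its properties.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

# Hypothetical data for the farmer example.
L, F, P = 10, 16, 24   # available land, fertilizer, pesticide
F1, P1 = 2, 1          # fertilizer and pesticide per unit of wheat
F2, P2 = 1, 3          # fertilizer and pesticide per unit of barley
S1, S2 = 3, 2          # selling prices of wheat and barley

A = [[1, 1], [F1, F2], [P1, P2]]
b = [L, F, P]
c = [S1, S2]

x = [6, 4]     # wheat and barley grown (primal optimum for this data)
y = [1, 1, 0]  # unit prices of land, fertilizer, pesticide (dual optimum)

# Primal slack: quantity of each input left unused.
primal_slack = [b[j] - dot(A[j], x) for j in range(3)]
# Dual slack: how far the imputed value of each crop exceeds its price floor.
dual_slack = [dot([A[j][i] for j in range(3)], y) - c[i] for i in range(2)]

revenue, cost = dot(c, x), dot(b, y)
print(primal_slack, dual_slack, revenue, cost)  # [0, 0, 6] [0, 0] 26 26
```

Pesticide is the only input with slack, and it is also the only input priced at zero: in every pair (dual variable, primal slack) and (primal variable, dual slack), at least one member vanishes.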

Infeasible program

An LP can also be unbounded or infeasible. Duality theory tells us that:

- If the primal is unbounded, then the dual is infeasible;

- If the dual is unbounded, then the primal is infeasible.

However, it is possible for both the dual and the primal to be infeasible. Here is an example:

| Maximize: | |

| Subject to: | |
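
One standard instance with this property, offered as an illustration and not necessarily the example intended here, is the following pair. In the primal, the second constraint is equivalent to $x_1 - x_2 \ge 2$, which contradicts the first; in the dual, the second constraint is equivalent to $y_1 - y_2 \le 1$, which contradicts the first.

```latex
\begin{aligned}
\text{Primal:}\quad & \max\ 2x_1 - x_2
  \quad \text{s.t.}\quad x_1 - x_2 \le 1,\quad -x_1 + x_2 \le -2,\quad x_1, x_2 \ge 0 \\
\text{Dual:}\quad & \min\ y_1 - 2y_2
  \quad \text{s.t.}\quad y_1 - y_2 \ge 2,\quad -y_1 + y_2 \ge -1,\quad y_1, y_2 \ge 0
\end{aligned}
```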

Viewing the solution to a linear programming problem as a (generalized) eigenvector

There is a close connection between linear programming problems, eigenequations, and von Neumann's general equilibrium model. The solution to a linear programming problem can be regarded as a generalized eigenvector.

The eigenequations of a square matrix are as follows:

where and are the left and right eigenvectors of the square matrix , respectively, and is the eigenvalue.

The above eigenequations for the square matrix can be extended to von Neumann's general equilibrium model:[5] [6]

where the economic meanings of and are the equilibrium prices of various goods and the equilibrium activity levels of various economic agents, respectively.

The von Neumann's equilibrium model can be further extended to the following structural equilibrium model with and as matrix-valued functions:[7]

where the economic meaning of is the utility levels of various consumers. A special case of the above model is

This form of the structural equilibrium model and linear programming problems can often be converted to each other, that is, the solutions to these two types of problems are often consistent.

If we define , , , , then the structural equilibrium model can be written as

Let us illustrate the structural equilibrium model with the previously discussed tiny example. In this example, we have , and .

To solve the structural equilibrium model, we obtain [8]

These are consistent with the solutions to the linear programming problems.

We substitute the above calculation results into the structural equilibrium model, obtaining

Applications

The max-flow min-cut theorem is a special case of the strong duality theorem: flow-maximization is the primal LP, and cut-minimization is the dual LP. See Max-flow min-cut theorem.
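
The equality predicted by strong duality can be checked on a small network. The sketch below does not build the two LPs; it computes a maximum flow with the Edmonds-Karp algorithm and a minimum cut by brute force, on a hypothetical five-edge graph, and compares the two values.

```python
from collections import deque
from itertools import combinations

def max_flow(cap, s, t):
    """Edmonds-Karp on a dict-of-dicts capacity map; returns the flow value."""
    nodes = set(cap) | {v for u in cap for v in cap[u]}
    res = {u: {} for u in nodes}  # residual capacities
    for u in cap:
        for v, cuv in cap[u].items():
            res[u][v] = res[u].get(v, 0) + cuv
            res[v].setdefault(u, 0)  # reverse edge starts at 0
    total = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, r in res[u].items():
                if r > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return total
        # Recover the path, find its bottleneck, and push that much flow.
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(res[u][v] for u, v in path)
        for u, v in path:
            res[u][v] -= push
            res[v][u] += push
        total += push

def min_cut(cap, s, t):
    """Brute force over all vertex sets S that contain s but not t."""
    nodes = set(cap) | {v for u in cap for v in cap[u]}
    others = sorted(nodes - {s, t})
    best = None
    for k in range(len(others) + 1):
        for extra in combinations(others, k):
            S = {s, *extra}
            value = sum(cuv for u in S for v, cuv in cap.get(u, {}).items()
                        if v not in S)
            best = value if best is None else min(best, value)
    return best

# Hypothetical network with source "s" and sink "t".
cap = {"s": {"a": 3, "b": 2}, "a": {"b": 1, "t": 2}, "b": {"t": 3}}
flow_value = max_flow(cap, "s", "t")
cut_value = min_cut(cap, "s", "t")
print(flow_value, cut_value)  # 5 5
```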

Other graph-related theorems can be proved using the strong duality theorem, in particular, Kőnig's theorem.[9]

The minimax theorem for zero-sum games can be proved using the strong duality theorem.[1]: sub.8.1

Alternative algorithm

Sometimes, one may find it more intuitive to obtain the dual program without looking at the program matrix. Consider the following linear program:

| Minimize | |||

| subject to | |||

We have m + n conditions and all variables are non-negative. We shall define m + n dual variables: $y_j$ and $s_i$. We get:

| Minimize | |||

| subject to | |||

Since this is a minimization problem, we would like to obtain a dual program that is a lower bound of the primal. In other words, we would like the sum of the right-hand sides of the constraints, each weighted by its dual variable, to be as large as possible, under the condition that for each primal variable the weighted sum of its coefficients does not exceed its coefficient in the objective function. For example, $x_1$ appears in $n + 1$ constraints. If we sum its coefficients in these constraints, weighted by the dual variables, we get $a_{1,1} y_1 + a_{1,2} y_2 + \cdots + a_{1,n} y_n + f_1 s_1$. This sum must be at most $c_1$. As a result, we get:

| Maximize | |||

| subject to | |||

Note that our calculation steps assume that the program is in standard form. However, any linear program can be transformed into standard form, so this assumption is not a limiting factor.

References

1. Gärtner, Bernd; Matoušek, Jiří (2006). Understanding and Using Linear Programming. Berlin: Springer. ISBN 3-540-30697-8. Pages 81–104.

2. Sakarovitch, Michel (1983). Complements on Duality: Economic Interpretation of Dual Variables. Springer Texts in Electrical Engineering. New York, NY: Springer New York. pp. 142–155. doi:10.1007/978-1-4757-4106-3_9. ISBN 978-0-387-90829-8. http://dx.doi.org/10.1007/978-1-4757-4106-3_9. Retrieved 2022-12-23.

3. Dorfman, Robert; Samuelson, Paul A.; Solow, Robert M. (1987). Linear Programming and Economic Analysis. New York: Dover Publications. ISBN 0-486-65491-5. OCLC 16577541.

4. Lovász, László; Plummer, M. D. (1986). Matching Theory. Annals of Discrete Mathematics, 29. North-Holland. ISBN 0-444-87916-1.

5. von Neumann, J. (1945). "A Model of General Economic Equilibrium". The Review of Economic Studies 13: 1–9.

6. Kemeny, J. G.; Morgenstern, O.; Thompson, G. L. (1956). "A Generalization of the von Neumann Model of an Expanding Economy". Econometrica 24: 115–135.

7. Li, Wu (2019) (in Chinese). General Equilibrium and Structural Dynamics: Perspectives of New Structural Economics. Beijing: Economic Science Press. pp. 122–125. ISBN 978-7-5218-0422-5.

8. "General Equilibrium Model and Dual Linear Program". https://search.r-project.org/CRAN/refmans/GE/html/gemDualLinearProgramming.html. "Detailed documentation on the General Equilibrium Model and Dual Linear Program in R."

9. Ahmadi, A. A. (2016). "Lecture 6: linear programming and matching". http://www.princeton.edu/~amirali/Public/Teaching/ORF523/S16/ORF523_S16_Lec6_gh.pdf.
