Circular uniform distribution

In probability theory and directional statistics, a circular uniform distribution is a probability distribution on the unit circle whose density is uniform for all angles.

Description

Definition

The probability density function (pdf) of the circular uniform distribution, for example with the angle $\theta \in [0, 2\pi)$, is:

$$f_{UC}(\theta) = \frac{1}{2\pi}.$$

Moments with respect to a parametrization

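We consider the circular variable $z = e^{i\theta}$, with $z = 1$ at base angle $\theta = 0$. In these terms, the circular moments of the circular uniform distribution are all zero, except for $m_0$:

$$m_n = \langle z^n \rangle = \delta_n$$

where $\delta_n$ is the Kronecker delta symbol, equal to 1 for $n = 0$ and 0 otherwise.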
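The vanishing of the higher moments can be checked numerically. The following is a minimal sketch, assuming an equally spaced Riemann sum over $[0, 2\pi)$ is an acceptable stand-in for the integral; the function name `circular_moment` and the grid size are illustrative choices, not part of the source.

```python
import cmath
import math


def circular_moment(n, num_points=1000):
    """Approximate <z^n> = (1/(2*pi)) * integral of exp(i*n*theta) over [0, 2*pi).

    Uses an equally spaced Riemann sum; num_points is an illustrative choice
    and must exceed |n| for the sum to resolve the n-th harmonic.
    """
    total = 0j
    for k in range(num_points):
        theta = 2.0 * math.pi * k / num_points
        total += cmath.exp(1j * n * theta)
    return total / num_points


# Moments for n = -3 .. 3; only n = 0 should be (numerically) non-zero.
moments = {n: circular_moment(n) for n in range(-3, 4)}
for n, m in moments.items():
    print(f"n = {n:+d}: |<z^n>| = {abs(m):.3e}")
```

Only the $n = 0$ entry should differ from zero beyond floating-point rounding.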

Descriptive statistics

Here the mean angle is undefined, and the length of the mean resultant is zero:

$$R = |\langle e^{i\theta} \rangle| = 0.$$

Distribution of the mean

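The sample mean of a set of N measurements $z_n = e^{i\theta_n}$ drawn from a circular uniform distribution is defined as:

$$\overline{z} = \frac{1}{N}\sum_{n=1}^{N} z_n = \overline{C} + i\,\overline{S} = \overline{R}\,e^{i\overline{\theta}}$$

where the average cosine and sine are:[1]

$$\overline{C} = \frac{1}{N}\sum_{n=1}^{N}\cos(\theta_n), \qquad \overline{S} = \frac{1}{N}\sum_{n=1}^{N}\sin(\theta_n)$$

and the average resultant length $\overline{R}$ satisfies:

$$\overline{R}^2 = |\overline{z}|^2 = \overline{C}^2 + \overline{S}^2$$

and the mean angle is:

$$\overline{\theta} = \operatorname{Arg}(\overline{z}).$$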
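These sample statistics translate directly into code. The sketch below is one possible implementation using only the Python standard library; the function name `circular_sample_mean`, the seed, and the sample size are assumptions made for illustration.

```python
import cmath
import math
import random


def circular_sample_mean(angles):
    """Return (C_bar, S_bar, R_bar, theta_bar) for a list of angles in radians."""
    n = len(angles)
    c_bar = sum(math.cos(t) for t in angles) / n
    s_bar = sum(math.sin(t) for t in angles) / n
    z_bar = complex(c_bar, s_bar)
    r_bar = abs(z_bar)              # mean resultant length
    theta_bar = cmath.phase(z_bar)  # mean angle, principal value in [-pi, pi]
    return c_bar, s_bar, r_bar, theta_bar


# Two angles a quarter turn apart: the mean direction lies halfway between them.
c_bar, s_bar, r_bar, theta_bar = circular_sample_mean([0.0, math.pi / 2])
print(c_bar, s_bar, r_bar, theta_bar)

# 1000 draws from the circular uniform distribution (seed chosen arbitrarily).
rng = random.Random(0)
uniform_angles = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(1000)]
_, _, r_uniform, _ = circular_sample_mean(uniform_angles)
print(r_uniform)  # small, since the population resultant length is zero
```

For the uniform sample the returned mean angle carries little information, because the mean resultant length is close to zero.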

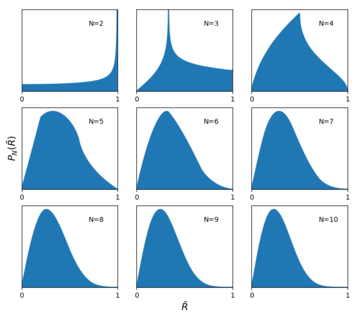

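The sample mean for the circular uniform distribution will be concentrated about zero, becoming more concentrated as N increases. The distribution of the sample mean for the uniform distribution is given by:[2]

$$\frac{1}{(2\pi)^N}\int_\Gamma \prod_{n=1}^{N} d\theta_n = P(\overline{R})\,P(\overline{\theta})\,d\overline{R}\,d\overline{\theta}$$

where $\Gamma$ consists of intervals of $2\pi$ in the variables, subject to the constraint that $\overline{R}$ and $\overline{\theta}$ are constant, or, alternatively, that $\overline{C}$ and $\overline{S}$ are constant. The distribution of the angle $P(\overline{\theta})$ is uniform:

$$P(\overline{\theta}) = \frac{1}{2\pi}$$

and the distribution of $\overline{R}$ is given by:[2]

$$P_N(\overline{R}) = N^2\,\overline{R}\int_0^\infty J_0(N\overline{R}\,t)\,J_0(t)^N\,t\,dt$$

where $J_0$ is the Bessel function of order zero. There is no known general analytic solution for the above integral, and it is difficult to evaluate due to the large number of oscillations in the integrand. A 10,000 point Monte Carlo simulation of the distribution of the mean for N=3 is shown in the figure.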
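A Monte Carlo experiment of the kind described can be sketched as follows. This is not the code behind the figure; the function name `simulate_mean_resultant`, the seed, and the ten-bin histogram are assumptions chosen for illustration. The final check uses the fact that the expected squared mean resultant length of N independent uniform angles is exactly 1/N.

```python
import cmath
import math
import random


def simulate_mean_resultant(n_angles, n_trials, seed=0):
    """Draw n_trials sample means of n_angles uniform angles.

    Returns two lists: the mean resultant lengths and the mean angles.
    """
    rng = random.Random(seed)
    r_bars, theta_bars = [], []
    for _ in range(n_trials):
        z_bar = sum(cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi))
                    for _ in range(n_angles)) / n_angles
        r_bars.append(abs(z_bar))
        theta_bars.append(cmath.phase(z_bar))
    return r_bars, theta_bars


r_bars, theta_bars = simulate_mean_resultant(n_angles=3, n_trials=10_000)

# Crude 10-bin histogram of R_bar on [0, 1], normalised to a density estimate.
n_bins = 10
counts = [0] * n_bins
for r in r_bars:
    counts[min(int(r * n_bins), n_bins - 1)] += 1
density = [c * n_bins / len(r_bars) for c in counts]
for i, d in enumerate(density):
    print(f"[{i / n_bins:.1f}, {(i + 1) / n_bins:.1f}): {d:.3f}")

# Sanity check: E[R_bar^2] = 1/N holds exactly for independent uniform angles.
mean_r_squared = sum(r * r for r in r_bars) / len(r_bars)
print(mean_r_squared)
```

Sampling sidesteps the oscillatory Bessel integral entirely, at the cost of statistical noise that shrinks only as the inverse square root of the number of trials.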

For certain special cases, such as N = 2, the above integral can be evaluated in closed form:

$$P_2(\overline{R}) = \frac{2}{\pi\sqrt{1 - \overline{R}^2}}.$$
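For N = 2 the density $2/(\pi\sqrt{1-\overline{R}^2})$ integrates to the cumulative distribution $(2/\pi)\arcsin(\overline{R})$, which is easy to compare against simulation. The sketch below does so at a single threshold; the threshold, seed, trial count, and the helper name `p2_cdf` are arbitrary illustrative choices.

```python
import math
import random


def p2_cdf(r):
    """CDF obtained by integrating 2 / (pi * sqrt(1 - x^2)) from 0 to r."""
    return (2.0 / math.pi) * math.asin(r)


rng = random.Random(1)
n_trials = 20_000
threshold = 0.5
hits = 0
for _ in range(n_trials):
    t1 = rng.uniform(0.0, 2.0 * math.pi)
    t2 = rng.uniform(0.0, 2.0 * math.pi)
    # Mean resultant length of two unit vectors.
    r_bar = math.hypot(math.cos(t1) + math.cos(t2),
                       math.sin(t1) + math.sin(t2)) / 2.0
    if r_bar <= threshold:
        hits += 1

empirical = hits / n_trials
print(empirical, p2_cdf(threshold))
```

The two printed values should agree up to Monte Carlo noise.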

For large N, the distribution of the mean can be determined from the central limit theorem for directional statistics. Since the angles are uniformly distributed, the individual sines and cosines of the angles will be distributed as:

$$P(u)\,du = \frac{1}{\pi}\,\frac{du}{\sqrt{1 - u^2}}$$

where $u = \cos\theta_n$ or $\sin\theta_n$. It follows that they will have zero mean and a variance of 1/2. By the central limit theorem, in the limit of large N, $\overline{C}$ and $\overline{S}$, each being the average of a large number of i.i.d. variables, will be normally distributed with mean zero and variance $1/(2N)$. The mean resultant length $\overline{R}$, being the square root of the sum of squares of two independent normally distributed variables, will be chi-distributed with two degrees of freedom (i.e. Rayleigh-distributed) with scale parameter $\sigma^2 = 1/(2N)$, so that for large N:

$$P_N(\overline{R}) \approx 2N\,\overline{R}\,e^{-N\overline{R}^2}.$$
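The Rayleigh approximation can be probed by simulation. A Rayleigh variable with $\sigma^2 = 1/(2N)$ has mean $\sqrt{\pi/(4N)}$ and cumulative distribution $1 - e^{-N r^2}$; the sketch below compares both with empirical values. The choices N = 50, 5,000 trials, the seed, and the evaluation point 0.1 are assumptions made only for this illustration.

```python
import math
import random


def mean_resultant_length(n_angles, rng):
    """Mean resultant length of n_angles independent uniform angles."""
    c = s = 0.0
    for _ in range(n_angles):
        t = rng.uniform(0.0, 2.0 * math.pi)
        c += math.cos(t)
        s += math.sin(t)
    return math.hypot(c, s) / n_angles


n_angles = 50
n_trials = 5_000
rng = random.Random(2)
samples = [mean_resultant_length(n_angles, rng) for _ in range(n_trials)]

# Rayleigh predictions with sigma^2 = 1 / (2N).
rayleigh_mean = math.sqrt(math.pi / (4.0 * n_angles))
r0 = 0.1
rayleigh_cdf = 1.0 - math.exp(-n_angles * r0 * r0)

empirical_mean = sum(samples) / n_trials
empirical_cdf = sum(1 for r in samples if r <= r0) / n_trials
print(empirical_mean, rayleigh_mean)
print(empirical_cdf, rayleigh_cdf)
```

Small residual differences are expected, both from sampling noise and because the Rayleigh form is only the large-N limit.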

Entropy

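The differential information entropy of the uniform distribution is simply

$$H_U = -\int_\Gamma \frac{1}{2\pi}\ln\left(\frac{1}{2\pi}\right) d\theta = \ln(2\pi)$$

where $\Gamma$ is any interval of length $2\pi$. This is the maximum entropy any circular distribution may have.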
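The entropy value and the maximum-entropy property can be illustrated numerically. The sketch below integrates $-f \ln f$ with a Riemann sum for the uniform density and, for comparison, for a cardioid density $(1 + 2\rho\cos\theta)/(2\pi)$. The cardioid and the value $\rho = 0.3$ are assumptions picked purely as an example of a non-uniform circular distribution; they do not come from the source.

```python
import math


def circular_entropy(pdf, num_points=10_000):
    """Approximate -integral of f*ln(f) over [0, 2*pi) with a Riemann sum."""
    d_theta = 2.0 * math.pi / num_points
    total = 0.0
    for k in range(num_points):
        f = pdf(k * d_theta)
        total -= f * math.log(f) * d_theta
    return total


def uniform_pdf(theta):
    return 1.0 / (2.0 * math.pi)


def cardioid_pdf(theta, rho=0.3):
    # Cardioid density, valid for |rho| < 1/2; rho = 0.3 is an arbitrary choice.
    return (1.0 + 2.0 * rho * math.cos(theta)) / (2.0 * math.pi)


h_uniform = circular_entropy(uniform_pdf)
h_cardioid = circular_entropy(cardioid_pdf)
print(h_uniform, math.log(2.0 * math.pi))
print(h_cardioid)
```

The uniform result should match $\ln(2\pi)$, and the cardioid entropy should come out strictly smaller.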

References

- [1] "Transmit beamforming for radar applications using circularly tapered random arrays". IEEE Conference Publication. doi:10.1109/RADAR.2017.7944181.

- [2] Jammalamadaka, S. Rao; Sengupta, A. (2001). Topics in Circular Statistics. World Scientific Publishing Company. ISBN 978-981-02-3778-3.
