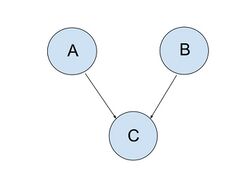

Conditional dependence

In probability theory, conditional dependence is a relationship between two or more events that are dependent when a third event occurs.[1][2] For example, if $A$ and $B$ are two events that individually increase the probability of a third event $C$ and do not directly affect each other, then initially (when it has not been observed whether or not the event $C$ occurs)[3][4] $P(A \mid B) = P(A)$ and $P(B \mid A) = P(B)$ (that is, $A$ and $B$ are independent).

But suppose that now $C$ is observed to occur. If event $B$ occurs, then the probability of occurrence of the event $A$ will decrease because its positive relation to $C$ is less necessary as an explanation for the occurrence of $C$ (similarly, event $A$ occurring will decrease the probability of occurrence of $B$). Hence, the two events $A$ and $B$ are now conditionally negatively dependent on each other, because the probability of occurrence of each is negatively dependent on whether the other occurs. We have[5] $P(A \mid B \cap C) < P(A \mid C)$.

Conditional dependence of $A$ and $B$ given $C$ is the logical negation of conditional independence $((A \perp B) \mid C)$.[6] In conditional independence, two events (which may be dependent or not) become independent given the occurrence of a third event.[7]
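For reference, the two notions can be written out as follows. This is a standard formulation, sketched here under the assumption that $P(C) > 0$ (and $P(B \cap C) > 0$ for the last line); the event names $A$, $B$, $C$ follow the paragraph above.

```latex
% Conditional independence of A and B given C (assumes P(C) > 0):
\[ P(A \cap B \mid C) = P(A \mid C)\, P(B \mid C) \]
% Conditional dependence given C is the negation of that equality:
\[ P(A \cap B \mid C) \neq P(A \mid C)\, P(B \mid C) \]
% Equivalent form when P(B \cap C) > 0, since
% P(A \cap B \mid C) = P(A \mid B \cap C)\, P(B \mid C):
\[ P(A \mid B \cap C) \neq P(A \mid C) \]
```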

Example

In essence, probability is influenced by a person's information about the possible occurrence of an event. For example, let the event $A$ be 'I have a new phone'; event $B$ be 'I have a new watch'; and event $C$ be 'I am happy'; and suppose that having either a new phone or a new watch increases the probability of my being happy. Let us assume that the event $C$ has occurred, meaning 'I am happy'. Now if another person sees my new watch, they will reason that my likelihood of being happy was increased by my new watch, so there is less need to attribute my happiness to a new phone.

To make the example more numerically specific, suppose that there are four possible states $\Omega = \{s_1, s_2, s_3, s_4\}$, given in the middle four columns of the following table, in which the occurrence of event $A$ is signified by a $1$ in row $A$ and its non-occurrence is signified by a $0$, and likewise for $B$ and $C$. That is, $A = \{s_2, s_4\}$, $B = \{s_3, s_4\}$, and $C = \{s_2, s_3, s_4\}$. The probability of $s_i$ is $1/4$ for every $i$.

| Event | $P(s_1) = 1/4$ | $P(s_2) = 1/4$ | $P(s_3) = 1/4$ | $P(s_4) = 1/4$ | Probability of event |
|---|---|---|---|---|---|
| $A$ | 0 | 1 | 0 | 1 | $1/2$ |
| $B$ | 0 | 0 | 1 | 1 | $1/2$ |
| $C$ | 0 | 1 | 1 | 1 | $3/4$ |

and so

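| Event | $P(s_1) = 1/4$ | $P(s_2) = 1/4$ | $P(s_3) = 1/4$ | $P(s_4) = 1/4$ | Probability of event |
|---|---|---|---|---|---|
| $A \cap B$ | 0 | 0 | 0 | 1 | $1/4$ |
| $A \cap C$ | 0 | 1 | 0 | 1 | $1/2$ |
| $B \cap C$ | 0 | 0 | 1 | 1 | $1/2$ |
| $A \cap B \cap C$ | 0 | 0 | 0 | 1 | $1/4$ |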

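In this example, $C$ occurs if and only if at least one of $A$, $B$ occurs. Unconditionally (that is, without reference to $C$), $A$ and $B$ are independent of each other because $P(A)$, the sum of the probabilities associated with a $1$ in row $A$, is $1/2$, while $P(A \mid B) = P(A \cap B)/P(B) = \frac{1/4}{1/2} = 1/2 = P(A)$. But conditional on $C$ having occurred (the last three columns in the table), we have $P(A \mid C) = P(A \cap C)/P(C) = \frac{1/2}{3/4} = 2/3$ while $P(A \mid B \cap C) = P(A \cap B \cap C)/P(B \cap C) = \frac{1/4}{1/2} = 1/2 < P(A \mid C)$. Since in the presence of $C$ the probability of $A$ is affected by the presence or absence of $B$, $A$ and $B$ are mutually dependent conditional on $C$.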
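The arithmetic in this example can be checked mechanically. The following is a minimal Python sketch, not part of the original article: it assumes the four states are equally likely, and the state labels `s1` to `s4` and the helper names `p` and `p_given` are chosen here purely for illustration.

```python
from fractions import Fraction

# Assumption: four equally likely states, labelled s1..s4 for illustration.
states = ("s1", "s2", "s3", "s4")
prob = {s: Fraction(1, 4) for s in states}

# Events as sets of states, read off the 0/1 rows of the first table.
A = {"s2", "s4"}        # 'I have a new phone'
B = {"s3", "s4"}        # 'I have a new watch'
C = {"s2", "s3", "s4"}  # 'I am happy' (at least one of A, B holds)


def p(event):
    """Probability of an event: sum of the probabilities of its states."""
    return sum((prob[s] for s in event), Fraction(0))


def p_given(event, given):
    """Conditional probability P(event | given); requires P(given) > 0."""
    return p(event & given) / p(given)


p_a = p(A)
p_a_given_b = p_given(A, B)
p_a_given_c = p_given(A, C)
p_a_given_bc = p_given(A, B & C)

print("P(A)         =", p_a)
print("P(A | B)     =", p_a_given_b)
print("P(A | C)     =", p_a_given_c)
print("P(A | B & C) =", p_a_given_bc)

# Unconditionally independent, but dependent once C is observed.
print("independent:", p_a_given_b == p_a)
print("conditionally dependent given C:", p_a_given_bc != p_a_given_c)
```

Using `Fraction` rather than floats keeps the comparisons exact: the script reports $1/2$, $1/2$, $2/3$ and $1/2$ for the four quantities, matching the values derived in the text.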

See also

- Conditional independence – Probability theory concept

- De Finetti's theorem – Conditional independence of exchangeable observations

- Conditional expectation – Expected value of a random variable given that certain conditions are known to occur

References

1. Thrun, Sebastian; Norvig, Peter (2011). Introduction to Artificial Intelligence. "Unit 3: Conditional Dependence".

2. Husmeier, Dirk. "Introduction to Learning Bayesian Networks from Data".

3. Dawid, A. P. "Conditional Independence in Statistical Theory".

4. "Probabilistic independence". Britannica. Section "Probability: Applications of conditional probability: Independence" (equation 7).

5. Thrun, Sebastian; Norvig, Peter (2011). Introduction to Artificial Intelligence. "Unit 3: Explaining Away".

6. Bouckaert, Remco R. (1994). "11. Conditional dependence in probabilistic networks". In Cheeseman, P. Selecting Models from Data: Artificial Intelligence and Statistics IV. Lecture Notes in Statistics. Vol. 89. Springer-Verlag. pp. 101-111, especially 104. ISBN 978-0-387-94281-0.

7. Dawid, A. P. "Conditional Independence in Statistical Theory".
