Controlled grammar

Controlled grammars[1] are a class of grammars that extend, usually, the context-free grammars with additional controls on the derivations of a sentence in the language. A number of different kinds of controlled grammars exist, the four main divisions being Indexed grammars, grammars with prescribed derivation sequences, grammars with contextual conditions on rule application, and grammars with parallelism in rule application. Because indexed grammars are so well established in the field, this article will address only the latter three kinds of controlled grammars.

Control by prescribed sequences

Grammars with prescribed sequences are grammars in which the sequence of rule application is constrained in some way. There are four different versions of prescribed sequence grammars: language controlled grammars (often called just controlled grammars), matrix grammars, vector grammars, and programmed grammars.

In the standard context-free grammar formalism, a grammar itself is viewed as a 4-tuple, , where N is a set of non-terminal/phrasal symbols, T is a disjoint set of terminal/word symbols, S is a specially designated start symbol chosen from N, and P is a set of production rules like , where X is some member of N, and is some member of .

Productions over such a grammar are sequences of rules in P that, when applied in order of the sequence, lead to a terminal string. That is, one can view the set of imaginable derivations in G as the set , and the language of G as being the set of terminal strings . Control grammars take seriously this definition of the language generated by a grammar, concretizing the set-of-derivations as an aspect of the grammar. Thus, a prescribed sequence controlled grammar is at least approximately a 5-tuple where everything except R is the same as in a CFG, and R is an infinite set of valid derivation sequences .

The set R, due to its infinitude, is almost always (though not necessarily) described via some more convenient mechanism, such as a grammar (as in language controlled grammars), or a set of matrices or vectors (as in matrix and vector grammars). The different variations of prescribed sequence grammars thus differ by how the sequence of derivations is defined on top of the context-free base. Because matrix grammars and vector grammars are essentially special cases of language controlled grammars, examples of the former two will not be provided below.

Language controlled grammars

Language controlled grammars are grammars in which the production sequences constitute a well-defined language of arbitrary nature, usually though not necessarily regular, over a set of (again usually though not necessarily) context-free production rules. They also often have a sixth set in the grammar tuple, making it , where F is a set of productions that are allowed to apply vacuously. This version of language controlled grammars, ones with what is called "appearance checking", is the one henceforth.

Proof-theoretic description

We let a regularly controlled context-free grammar with appearance checking be a 6-tuple where N, T, S, and P are defined as in CFGs, R is a subset of P* constituting a regular language over P, and F is some subset of P. We then define the immediately derives relation as follows:

Given some strings x and y, both in , and some rule ,

holds if either

- and , or

- and

Intuitively, this simply spells out that a rule can apply to a string if the rule's left-hand-side appears in that string, or if the rule is in the set of "vacuously applicable" rules which can "apply" to a string without changing anything. This requirement that the non-vacuously applicable rules must apply is the appearance checking aspect of such a grammar. The language for this kind of grammar is then simply set of terminal strings .

Example

Consider a simple (though not the simplest) context-free grammar that generates the language :

Let , where

In language controlled form, this grammar is simply (where is a regular expression denoting the set of all sequences of production rules). A simple modification to this grammar, changing is control sequence set R into the set , and changing its vacuous rule set F to , yields a grammar which generates the non-CF language . To see how, consider the general case of some string with n instances of S in it, i.e. (the special case trivially derives the string a which is , an uninteresting fact).

If we chose some arbitrary production sequence , we can consider three possibilities: , , and When we rewrite all n instances of S as AA, by applying rule f to the string u times, and proceed to apply g, which applies vacuously (by virtue of being in F) . When , we rewrite all n instances of S as AA, and then try to perform the n+1 rewrite using rule f, but this fails because there are no more Ss to rewrite, and f is not in F and so cannot apply vacuously, thus when , the derivation fails. Lastly, then , we rewrite u instances of S, leaving at least one instance of S to be rewritten by the subsequent application of g, rewriting S as X. Given that no rule of this grammar ever rewrites X, such a derivation is destined to never produce a terminal string. Thus only derivations with will ever successfully rewrite the string . Similar reasoning holds of the number of As and v. In general, then, we can say that the only valid derivations have the structure will produce terminal strings of the grammar. The X rules, combined with the structure of the control, essentially force all Ss to be rewritten as AAs prior to any As being rewritten as Ss, which again is forced to happen prior to all still later iterations over the S-to-AA cycle. Finally, the Ss are rewritten as as. In this way, the number of Ss doubles each for each instantiation of that appears in a terminal-deriving sequence.

Choosing two random non-terminal deriving sequences, and one terminal-deriving one, we can see this in work:

Let , then we get the failed derivation:

Let , then we get the failed derivation:

Let , then we get the successful derivation:

Similar derivations with a second cycle of produce only SSSS. Showing only the (continued) successful derivation:

Matrix grammars

Matrix grammars (expanded on in their own article) are a special case of regular controlled context-free grammars, in which the production sequence language is of the form , where each "matrix" is a single sequence. For convenience, such a grammar is not represented with a grammar over P, but rather with just a set of the matrices in place of both the language and the production rules. Thus, a matrix grammar is the 5-tuple , where N, T, S, and F are defined essentially as previously done (with F a subset of M this time), and M is a set of matrices where each is a context-free production rule.

The derives relation in a matrix grammar is thus defined simply as:

Given some strings x and y, both in , and some matrix ,

holds if either

- , , and , or

- and

Informally, a matrix grammar is simply a grammar in which during each rewriting cycle, a particular sequence of rewrite operations must be performed, rather than just a single rewrite operation, i.e. one rule "triggers" a cascade of other rules. Similar phenomena can be performed in the standard context-sensitive idiom, as done in rule-based phonology and earlier Transformational grammar, by what are known as "feeding" rules, which alter a derivation in such a way as to provide the environment for a non-optional rule that immediately follows it.

Vector grammars

Vector grammars are closely related to matrix grammars, and in fact can be seen as a special class of matrix grammars, in which if , then so are all of its permutations . For convenience, however, we will define vector grammars as follows: a vector grammar is a 5-tuple , where N, T, and F are defined previously (F being a subset of M again), and where M is a set of vectors , each vector being a set of context free rules.

The derives relation in a vector grammar is then:

Given some strings x and y, both in , and some matrix ,

holds if either

- , , and , where , or

- and

Notice that the number of production rules used in the derivation sequence, n, is the same as the number of production rules in the vector. Informally, then, a vector grammar is one in which a set of productions is applied, each production applied exactly once, in arbitrary order, to derive one string from another. Thus vector grammars are almost identical to matrix grammars, minus the restriction on the order in which the productions must occur during each cycle of rule application.

Programmed grammars

Programmed grammars are relatively simple extensions to context-free grammars with rule-by-rule control of the derivation. A programmed grammar is a 4-tuple , where N, T, and S are as in a context-free grammar, and P is a set of tuples , where p is a context-free production rule, is a subset of P (called the success field), and is a subset of P (called the failure field). If the failure field of every rule in P is empty, the grammar lacks appearance checking, and if at least one failure field is not empty, the grammar has appearance checking. The derivation relation on a programmed grammar is defined as follows:

Given two strings , and some rule ,

- and , or

- and A does not appear in x.

The language of a programmed grammar G is defined by constraining the derivation rule-wise, as , where for each , either or .

Intuitively, when applying a rule p in a programmed grammar, the rule can either succeed at rewriting a symbol in the string, in which case the subsequent rule must be in ps success field, or the rule can fail to rewrite a symbol (thus applying vacuously), in which case the subsequent rule must be in ps failure field. The choice of which rule to apply to the start string is arbitrary, unlike in a language controlled grammar, but once a choice is made the rules that can be applied after it constrain the sequence of rules from that point on.

Example

As with so many controlled grammars, programmed grammars can generate the language :

Let , where

The derivation for the string aaaa is as follows:

As can be seen from the derivation and the rules, each time and succeed, they feed back to themselves, which forces each rule to continue to rewrite the string over and over until it can do so no more. Upon failing, the derivation can switch to a different rule. In the case of , that means rewriting all Ss as AAs, then switching to . In the case of , it means rewriting all As as Ss, then switching either to , which will lead to doubling the number of Ss produced, or to which converts the Ss to as then halts the derivation. Each cycle through then therefore either doubles the initial number of Ss, or converts the Ss to as. The trivial case of generating a, in case it is difficult to see, simply involves vacuously applying , thus jumping straight to which also vacuously applies, then jumping to which produces a.

Control by context conditions

Unlike grammars controlled by prescribed sequences of production rules, which constrain the space of valid derivations but do not constrain the sorts of sentences that a production rule can apply to, grammars controlled by context conditions have no sequence constraints, but permit constraints of varying complexity on the sentences to which a production rule applies. Similar to grammars controlled by prescribed sequences, there are multiple different kinds of grammars controlled by context conditions: conditional grammars, semi-conditional grammars, random context grammars, and ordered grammars.

Conditional grammars

Conditional grammars are the simplest version of grammars controlled by context conditions. The structure of a conditional grammar is very similar to that of a normal rewrite grammar: , where N, T, and S are as defined in a context-free grammar, and P is a set of pairs of the form where p is a production rule (usually context-free), and R is a language (usually regular) over . When R is regular, R can just be expressed as a regular expression.

Proof-theoretic definition

With this definition of a conditional grammar, we can define the derives relation as follows:

Given two strings , and some production rule ,

- if and only if , , and

Informally then, the production rule for some pair in P can apply only to strings that are in its context language. Thus, for example, if we had some pair , we can only apply this to strings consisting of any number of as followed by exactly only S followed by any number of bs, i.e. to sentences in , such as the strings S, aSb, aaaS, aSbbbbbb, etc. It cannot apply to strings like xSy, aaaSxbbb, etc.

Example

Conditional grammars can generate the context-sensitive language .

Let , where

We can then generate the sentence aaaa with the following derivation:

Semi-conditional grammars

A semi-conditional grammar is very similar to a conditional grammar, and technically the class of semi-conditional grammars are a subset of the conditional grammars. Rather than specifying what the whole of the string must look like for a rule to apply, semi-conditional grammars specify that a string must have as substrings all of some set of strings, and none of another set, in order for a rule to apply. Formally, then, a semi-conditional grammar is a tuple , where, N, T, and S are defined as in a CFG, and P is a set of rules like where p is a (usually context-free) production rule, and R and Q are finite sets of strings. The derives relation can then be defined as follows.

For two strings , and some rule ,

- if and only if every string in R is a substring of , and no string in Q is a substring of

The language of a semi-conditional grammar is then trivially the set of terminal strings .

An example of a semi-conditional grammar is given below also as an example of random context grammars.

Random context grammars

A random context grammar is a semi-conditional grammar in which the R and Q sets are all subsets of N. Because subsets of N are finite sets over , it is clear that random context grammars are indeed kinds of semi-conditional grammars.

Example

Like conditional grammars, random context grammars (and thus semi-conditional grammars) can generate the language . One grammar which can do this is:

Let , where

Consider now the production for aaaa:

The behavior of the R sets here is trivial: any string can be rewritten according to them, because they do not require any substrings to be present. The behavior of the Q sets, however, are more interesting. In , we are forced by the Q set to rewrite an S, thus beginning an S-doubling process, only when no Ys or As are present in the string, which means only when a prior S-doubling process has been fully initiated, eliminating the possibility of only doubling some of the Ss. In , which moves the S-doubling process into its second stage, we cannot begin this process until the first stage is complete and there are no more Ss to try to double, because the Q set prevents the rule from applying if there is an S symbol still in the string. In , we complete the doubling stage by introducing the Ss back only when there are no more Xs to rewrite, thus when the second stage is complete. We can cycle through these stages as many times as we want, rewriting all Ss to XXs before then rewriting each X to a Y, and then each Y to an S, finally ending by replacing each S with an A and then an a. Because the rule for replacing S with A prohibits application to a string with an X in it, we cannot apply this in the middle of the first stage of the S-doubling process, thus again preventing us from only doubling some Ss.

Ordered grammars

Ordered grammars are perhaps one of the simpler extensions of grammars into the controlled grammar domain. An ordered grammar is simply a tuple where N, T, and S are identical to those in a CFG, and P is a set of context-free rewrite rules with a partial ordering . The partial ordering is then used to determine which rule to apply to a string, when multiple rules are applicable. The derives relation is then:

Given some strings and some rule ,

- if and only if there is no rule such that .

Example

Like many other contextually controlled grammars, ordered grammars can enforce the application of rules in a particular order. Since this is the essential property of previous grammars that could generate the language , it should be no surprise that a grammar that explicitly uses rule ordering, rather than encoding it via string contexts, should similarly be able to capture that language. And as it turns out, just such an ordered grammar exists:

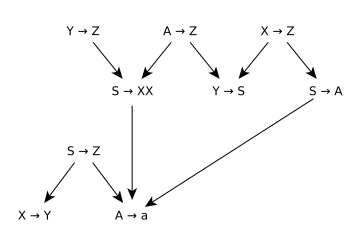

Let , where P is the partially ordered set described by the Hasse diagram

The derivation for the string aaaa is simply:

At each step of the way, the derivation proceeds by rewriting in cycles. Notice that if at the fifth step SY, we had four options: , the first two of which halt the derivation, as Z cannot be rewritten. In the example, we used to derive SS, but consider if we had chosen instead. We would have produced the string AS, the options for which are and , both of which halt the derivation. Thus with the string SY, and conversely with YS, we must rewrite the Y to produce SS. The same hold for other combinations, so that overall, the ordering forces the derivation to halt, or else proceed by rewriting all Ss to XXs, then all Xs to Ys, then all Ys to Ss, and so on, then finally all Ss to As then all As to as. In this way, a string can only ever be rewritten as which produces as, or as . Starting with n = 0, it should be clear that this grammar only generates the language .

Grammars with parallelism

A still further class of controlled grammars is the class of grammars with parallelism in the application of a rewrite operation, in which each rewrite step can (or must) rewrite more than one non-terminal simultaneously. These, too, come in several flavors: Indian parallel grammars, k-grammars, scattered context grammars, unordered scattered context grammars, and k-simple matrix grammars. Again, the variants differ in how the parallelism is defined.

Indian parallel grammars

An Indian parallel grammar is simply a CFG in which to use a rewrite rule, all instances of the rules non-terminal symbol must be rewritten simultaneously. Thus, for example, given the string aXbYcXd, with two instances of X, and some rule , the only way to rewrite this string with this rule is to rewrite it as awbYcwd; neither awbYcXd nor aXbYcwd are valid rewrites in an Indian parallel grammar, because they did not rewrite all instances of X.

Indian parallel grammars can easily produce the language :

Let , where

Generating then is quite simple:

The language is even simpler:

Let , where P consists of

It should be obvious, just from the first rule, and the requirement that all instances of a non-terminal are rewritten simultaneously with the same rule, that the number of Ss doubles on each rewrite step using the first rule, giving the derivation steps . Final application of the second rule replaces all the Ss with as, thus showing how this simple language can produce the language .

K-grammars

A k-grammar is yet another kind of parallel grammar, very different from an Indian parallel grammar, but still with a level of parallelism. In a k-grammar, for some number k, exactly k non-terminal symbols must be rewritten at every step (except the first step, where the only symbol in the string is the start symbol). If the string has less than k non-terminals, the derivation fails.

A 3-grammar can produce the language , as can be seen below:

Let , where P consists of:

With the following derivation for aaabbbccc:

At each step in the derivation except the first and last, we used the self-recursive rules . If we had not use the recursive rules, instead using, say, , where one of the rules is not self-recursive, the number of non-terminals would have decreased to 2, thus making the string unable to be derived further because it would have too few non-terminals to be rewritten.

Russian parallel grammars

Russian parallel grammars[2] are somewhere between Indian parallel grammars and k-grammars, defined as , where N, T, and S are as in a context-free grammar, and P is a set of pairs , where is a context-free production rule, and k is either 1 or 2. Application of a rule involves rewriting k occurrences of A to w simultaneously.

Scattered context grammars

A scattered context grammar is a 4-tuple where N, T, and S are defined as in a context-free grammar, and P is a set of tuples called matrixes , where can vary according to the matrix. The derives relation for such a grammar is

- if and only if

- , and

- , for

Intuitively, then, the matrixes in a scattered context grammar provide a list of rules which must each be applied to non-terminals in a string, where those non-terminals appear in the same linear order as the rules that rewrite them.

An unordered scattered context grammar is a scattered context grammar in which, for every rule in P, each of its permutations is also in P. As such, a rule and its permutations can instead be represented as a set rather than as tuples.

Example

Scattered context grammars are capable of describing the language quite easily.

Let , where

Deriving then is trivial:

References

|