Feasible region

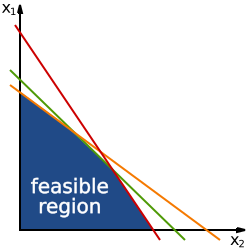

In mathematical optimization and computer science, a feasible region, feasible set, or solution space is the set of all possible points (sets of values of the choice variables) of an optimization problem that satisfy the problem's constraints, potentially including inequalities, equalities, and integer constraints.[1] This is the initial set of candidate solutions to the problem, before the set of candidates has been narrowed down.

For example, consider the problem of minimizing a function f(x, y) with respect to the variables x and y, subject to 1 ≤ x ≤ 10 and 5 ≤ y ≤ 12. Here the feasible set is the set of pairs (x, y) in which the value of x is at least 1 and at most 10 and the value of y is at least 5 and at most 12. The feasible set of the problem is separate from the objective function, which states the criterion to be optimized and which in the above example is f(x, y).
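The membership test implied by these bounds (x between 1 and 10, y between 5 and 12) can be sketched in a few lines. This is only an illustration: the function name `is_feasible` and the sample points are assumptions introduced here, and the objective function plays no role in the check.

```python
def is_feasible(x, y):
    """Return True if (x, y) satisfies 1 <= x <= 10 and 5 <= y <= 12."""
    return 1 <= x <= 10 and 5 <= y <= 12


# Hypothetical sample points: some inside the feasible set, some outside.
points = [(1, 5), (10, 12), (0, 6), (3, 13), (5.5, 8.25)]

# Keep only the points that satisfy every constraint.
feasible_points = [p for p in points if is_feasible(*p)]
print(feasible_points)
```

Points on the boundary, such as (1, 5), count as feasible because the constraints are non-strict inequalities.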

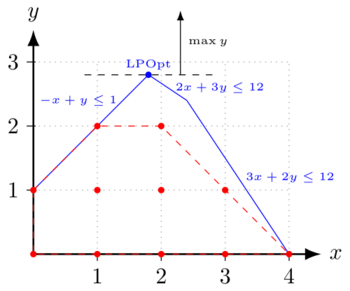

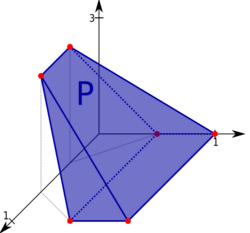

In many problems, the feasible set reflects a constraint that one or more variables must be non-negative. In pure integer programming problems, the feasible set is the set of integers (or some subset thereof). In linear programming problems, the feasible set is a convex polytope: a region in multidimensional space whose boundaries are formed by hyperplanes and whose corners are vertices.

Constraint satisfaction is the process of finding a point in the feasible region.

Convex feasible set

A convex feasible set is one in which the line segment connecting any two feasible points passes only through other feasible points and never through points outside the feasible set. Convex feasible sets arise in many types of problems, including linear programming problems. They are of particular interest because, if the problem has a convex objective function that is to be minimized, it is generally easier to solve in the presence of a convex feasible set, and any local optimum is also a global optimum.
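The line-segment definition suggests a simple sampled check, sketched below. It can only find a counterexample to convexity for a given pair of points; it does not prove that a set is convex. The two example sets (a square box and the region outside the unit disc) and all function names are assumptions chosen for illustration.

```python
def segment_stays_feasible(is_feasible, p, q, steps=100):
    """Sample points on the segment from p to q and test each one."""
    for i in range(steps + 1):
        t = i / steps
        point = tuple((1 - t) * a + t * b for a, b in zip(p, q))
        if not is_feasible(point):
            return False  # found a point on the segment outside the set
    return True


def in_box(point):
    """Convex example: the square 0 <= x <= 4, 0 <= y <= 4."""
    x, y = point
    return 0 <= x <= 4 and 0 <= y <= 4


def outside_unit_disc(point):
    """Non-convex example: all points at distance >= 1 from the origin."""
    x, y = point
    return x * x + y * y >= 1


box_ok = segment_stays_feasible(in_box, (0, 0), (4, 4))
ring_ok = segment_stays_feasible(outside_unit_disc, (-2, 0), (2, 0))
print(box_ok, ring_ok)
```

In the second case both endpoints are feasible, but the segment between them crosses the excluded disc, so that set is not convex.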

No feasible set

If the constraints of an optimization problem are mutually contradictory, there are no points that satisfy all the constraints and thus the feasible region is the empty set. In this case the problem has no solution and is said to be infeasible.
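For a single variable with only lower and upper bounds, contradictory constraints are easy to detect: the largest lower bound exceeds the smallest upper bound. The sketch below assumes that restricted setting; the function name `feasible_interval` and the sample bounds are hypothetical.

```python
def feasible_interval(lower_bounds, upper_bounds):
    """Intersect constraints x >= l and x <= u for one variable x.

    Returns (lo, hi) if some x satisfies every bound, or None if the
    constraints are mutually contradictory (empty feasible set).
    """
    lo = max(lower_bounds)
    hi = min(upper_bounds)
    return (lo, hi) if lo <= hi else None


# x >= 1, x >= 2, x <= 10, x <= 8  ->  feasible interval [2, 8]
consistent = feasible_interval([1, 2], [10, 8])

# x >= 5 and x <= 3 cannot both hold  ->  infeasible
contradictory = feasible_interval([5], [3])
print(consistent, contradictory)
```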

Bounded and unbounded feasible sets

Feasible sets may be bounded or unbounded. For example, the feasible set defined by the constraint set {x ≥ 0, y ≥ 0} is unbounded because in some directions there is no limit on how far one can go and still be in the feasible region. In contrast, the feasible set formed by the constraint set {x ≥ 0, y ≥ 0, x + 2y ≤ 4} is bounded because the extent of movement in any direction is limited by the constraints.
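One way to make "how far one can go" concrete is to compute the largest step along a direction that keeps every linear constraint satisfied. The sketch below assumes each constraint is written as a·x ≤ b, so x ≥ 0 becomes −x ≤ 0; the helper name `max_step` is hypothetical. An infinite step from a feasible point shows the set is unbounded, whereas a finite step in one direction does not by itself prove boundedness.

```python
import math


def max_step(constraints, point, direction):
    """Largest t >= 0 such that point + t * direction satisfies every
    constraint (a, b), each meaning a . x <= b. Assumes point is feasible."""
    t_max = math.inf
    for a, b in constraints:
        a_dot_d = sum(ai * di for ai, di in zip(a, direction))
        if a_dot_d > 0:  # only these constraints can be hit along the ray
            slack = b - sum(ai * pi for ai, pi in zip(a, point))
            t_max = min(t_max, slack / a_dot_d)
    return t_max


# {x >= 0, y >= 0}
unbounded_set = [((-1, 0), 0), ((0, -1), 0)]
# {x >= 0, y >= 0, x + 2y <= 4}
bounded_set = unbounded_set + [((1, 2), 4)]

step_unbounded = max_step(unbounded_set, (0, 0), (1, 1))
step_bounded = max_step(bounded_set, (0, 0), (1, 1))
print(step_unbounded, step_bounded)
```

Starting from the origin and moving along (1, 1), the first set allows an unlimited step, while the added constraint x + 2y ≤ 4 stops the movement at t = 4/3.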

In linear programming problems with n variables, a necessary but not sufficient condition for a nonempty feasible set to be bounded is that the number of inequality constraints (with each equality constraint counted as two inequalities) be at least n + 1, as illustrated by the above example.

If the feasible set is unbounded, there may or may not be an optimum, depending on the specifics of the objective function. For example, if the feasible region is defined by the constraint set {x ≥ 0, y ≥ 0}, then the problem of maximizing x + y has no optimum since any candidate solution can be improved upon by increasing x or y; yet if the problem is to minimize x + y, then there is an optimum (specifically at (x, y) = (0, 0)).
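The contrast can be seen numerically by searching over integer points of the region after truncating it to a square of a chosen size. The truncation is an assumption made purely so that the search is finite; the function name is hypothetical.

```python
def best_on_truncated_region(size, maximize):
    """Search integer points with 0 <= x, y <= size for the best x + y."""
    points = [(x, y) for x in range(size + 1) for y in range(size + 1)]
    choose = max if maximize else min
    return choose(points, key=lambda p: p[0] + p[1])


# The maximizer moves outward every time the truncation is relaxed...
max_small = best_on_truncated_region(10, maximize=True)
max_large = best_on_truncated_region(100, maximize=True)

# ...while the minimizer stays at the origin regardless of the size.
min_small = best_on_truncated_region(10, maximize=False)
min_large = best_on_truncated_region(100, maximize=False)
print(max_small, max_large, min_small, min_large)
```

Because the maximizer always sits on the artificial boundary, it is an artifact of the truncation rather than an optimum of the original problem.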

Candidate solution

In optimization and other branches of mathematics, and in search algorithms (a topic in computer science), a candidate solution is a member of the set of possible solutions in the feasible region of a given problem.[2] A candidate solution does not have to be a likely or reasonable solution to the problem—it is simply in the set that satisfies all constraints; that is, it is in the set of feasible solutions. Algorithms for solving various types of optimization problems often narrow the set of candidate solutions down to a subset of the feasible solutions, whose points remain as candidate solutions while the other feasible solutions are henceforth excluded as candidates.

The space of all candidate solutions, before any feasible points have been excluded, is called the feasible region, feasible set, search space, or solution space.[2] This is the set of all possible solutions that satisfy the problem's constraints. Constraint satisfaction is the process of finding a point in the feasible set.

Genetic algorithm

In the case of the genetic algorithm, the candidate solutions are the individuals in the population being evolved by the algorithm.[3]
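A minimal sketch of this idea follows, with each individual stored as a feasible point (x, y). The bounds, the objective, the operators (uniform crossover, Gaussian mutation with clipping, elitism), and every parameter value are assumptions made for illustration; they are not taken from the cited tutorial.

```python
import random

LOWER = (1.0, 5.0)    # assumed lower bounds on x and y
UPPER = (10.0, 12.0)  # assumed upper bounds on x and y


def objective(individual):
    """Hypothetical objective to minimize."""
    x, y = individual
    return (x - 3.0) ** 2 + (y - 7.0) ** 2


def random_individual(rng):
    return tuple(rng.uniform(lo, hi) for lo, hi in zip(LOWER, UPPER))


def mutate(individual, rng, scale=0.5):
    """Perturb each coordinate, then clip back into the feasible box."""
    return tuple(
        max(lo, min(hi, v + rng.gauss(0, scale)))
        for v, lo, hi in zip(individual, LOWER, UPPER)
    )


def crossover(a, b, rng):
    """Take each coordinate from one of the two parents at random."""
    return tuple(rng.choice(pair) for pair in zip(a, b))


def evolve(pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        population.sort(key=objective)
        best_history.append(objective(population[0]))
        parents = population[: pop_size // 2]
        children = [population[0]]  # elitism: keep the current best
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = children
    population.sort(key=objective)
    best_history.append(objective(population[0]))
    return population, best_history


population, best_history = evolve()
print(population[0], best_history[-1])
```

Clipping after mutation keeps every individual inside the feasible box, so the whole population remains a set of candidate solutions throughout the run.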

Calculus

In calculus, an optimal solution is sought using the first derivative test: the first derivative of the function being optimized is set equal to zero, and any values of the choice variable(s) that satisfy this equation are viewed as candidate solutions (while those that do not are ruled out as candidates). There are several ways in which a candidate solution might not be an actual solution. First, it might give a minimum when a maximum is being sought (or vice versa). Second, it might give neither a minimum nor a maximum but rather a saddle point or an inflection point, at which a temporary pause in the local rise or fall of the function occurs. Such candidate solutions can often be ruled out using the second derivative test, the satisfaction of which is sufficient for the candidate solution to be at least locally optimal. Third, a candidate solution may be a local optimum but not a global optimum.
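The three failure modes can be illustrated with two hand-differentiated functions. The choice of f(x) = x³ − 3x and g(x) = x³ is an assumption made for this sketch, and the derivatives are written out manually rather than computed symbolically.

```python
def f(x):
    return x ** 3 - 3 * x


def f_prime(x):
    return 3 * x ** 2 - 3


def f_second(x):
    return 6 * x


def second_derivative_test(value):
    """Classify a stationary point from the sign of the second derivative."""
    if value > 0:
        return "local minimum"
    if value < 0:
        return "local maximum"
    return "inconclusive"


# Candidate solutions: the roots of f'(x) = 3x^2 - 3, namely x = -1 and x = 1.
candidates = [-1.0, 1.0]
labels = {c: second_derivative_test(f_second(c)) for c in candidates}

# x = 1 is a local minimum but not a global one: f takes lower values elsewhere.
is_global_minimum = f(1.0) <= f(-3.0)

# For g(x) = x^3, g'(0) = 0 but g''(0) = 0: the candidate is an inflection point.
cubic_label = second_derivative_test(6 * 0.0)
print(labels, is_global_minimum, cubic_label)
```

When minimizing f, the candidate x = −1 is ruled out as a maximum, x = 1 survives only as a local minimum, and the candidate x = 0 for g is neither a minimum nor a maximum.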

In taking antiderivatives of monomials of the form $x^n$, the candidate solution using Cavalieri's quadrature formula would be $\tfrac{1}{n+1}x^{n+1} + C$. This candidate solution is in fact correct except when $n = -1$.
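A quick numerical check makes the exception concrete. The sketch below differentiates the power-rule candidate x^(n+1)/(n+1) by central differences and compares the result with x^n; for n = −1 the candidate involves division by zero, and the natural logarithm is checked instead. The helper names, the evaluation point, and the step size are assumptions.

```python
import math


def candidate_antiderivative(n):
    """Power-rule candidate x**(n + 1) / (n + 1), with constant C = 0."""
    if n == -1:
        raise ValueError("candidate is undefined for n = -1; use ln|x| instead")
    return lambda x: x ** (n + 1) / (n + 1)


def numerical_derivative(func, x, h=1e-6):
    """Central-difference approximation to func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)


x0 = 2.0  # assumed evaluation point

# Differentiating the candidate should recover x**n for every n except -1.
errors = {
    n: abs(numerical_derivative(candidate_antiderivative(n), x0) - x0 ** n)
    for n in (-2, 0, 1, 2, 3)
}

# For n = -1 the correct antiderivative on x > 0 is the natural logarithm.
log_error = abs(numerical_derivative(math.log, x0) - x0 ** -1)
print(errors, log_error)
```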

Linear programming

In the simplex method for solving linear programming problems, a vertex of the feasible polytope is selected as the initial candidate solution and is tested for optimality; if it is rejected as the optimum, an adjacent vertex is considered as the next candidate solution. This process is continued until a candidate solution is found to be the optimum.
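The vertex-to-adjacent-vertex idea can be sketched for a two-dimensional polygon whose vertices are listed in order around the boundary, so that each vertex's neighbours are its predecessor and successor in the list. This is not the tableau-based simplex method itself, only an illustration of the candidate-replacement loop. The polygon is the set {x ≥ 0, y ≥ 0, x + 2y ≤ 4} used earlier in the article; the objective x + 3y and the function name `vertex_walk` are assumptions.

```python
def vertex_walk(vertices, objective, start=0):
    """Maximize objective over a convex polygon by moving between
    adjacent vertices until no neighbour improves the value."""
    current = start
    path = [vertices[current]]
    n = len(vertices)
    while True:
        neighbours = [(current - 1) % n, (current + 1) % n]
        best = max(neighbours, key=lambda i: objective(vertices[i]))
        if objective(vertices[best]) <= objective(vertices[current]):
            return vertices[current], path  # current candidate is optimal
        current = best  # reject the candidate and move to the better neighbour
        path.append(vertices[current])


# Vertices of {x >= 0, y >= 0, x + 2y <= 4}, in counterclockwise order.
polygon = [(0, 0), (4, 0), (0, 2)]


def linear_objective(point):
    """Hypothetical linear objective to maximize."""
    x, y = point
    return x + 3 * y


optimum, path = vertex_walk(polygon, linear_objective)
print(optimum, path)
```

Because the feasible set is convex and the objective is linear, a vertex with no improving neighbour is a global optimum, which is why the loop can stop at the first such vertex.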

References

- [1] Beavis, Brian; Dobbs, Ian (1990). Optimisation and Stability Theory for Economic Analysis. New York: Cambridge University Press. p. 32. ISBN 0-521-33605-8. https://books.google.com/books?id=L7HMACFgnXMC&pg=PA32.

- [2] Boyd, Stephen; Vandenberghe, Lieven (2004-03-08). Convex Optimization. Cambridge University Press. doi:10.1017/cbo9780511804441. ISBN 978-0-521-83378-3. http://dx.doi.org/10.1017/cbo9780511804441.

- [3] Whitley, Darrell (1994). "A genetic algorithm tutorial". Statistics and Computing 4 (2): 65–85. doi:10.1007/BF00175354. https://cobweb.cs.uga.edu/~potter/CompIntell/ga_tutorial.pdf.
