Gauss–Kuzmin distribution

|

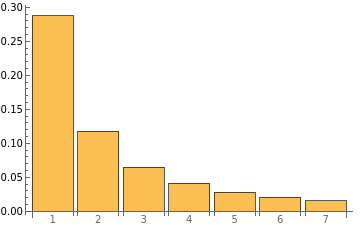

Probability mass function  | |||

|

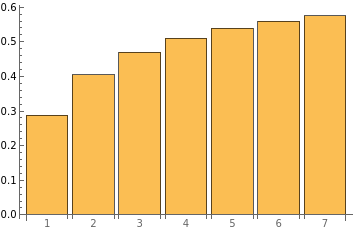

Cumulative distribution function  | |||

| Parameters | (none) | ||

|---|---|---|---|

| Support | [math]\displaystyle{ k \in \{1,2,\ldots\} }[/math] | ||

| pmf | [math]\displaystyle{ -\log_2\left[ 1-\frac{1}{(k+1)^2}\right] }[/math] | ||

| CDF | [math]\displaystyle{ 1 - \log_2\left(\frac{k+2}{k+1}\right) }[/math] | ||

| Mean | [math]\displaystyle{ +\infty }[/math] | ||

| Median | [math]\displaystyle{ 2\, }[/math] | ||

| Mode | [math]\displaystyle{ 1\, }[/math] | ||

| Variance | [math]\displaystyle{ +\infty }[/math] | ||

| Skewness | (not defined) | ||

| Kurtosis | (not defined) | ||

| Entropy | 3.432527514776...[1][2][3] | ||

In mathematics, the Gauss–Kuzmin distribution is a discrete probability distribution that arises as the limit probability distribution of the coefficients in the continued fraction expansion of a random variable uniformly distributed in (0, 1).[4] The distribution is named after Carl Friedrich Gauss, who derived it around 1800,[5] and Rodion Kuzmin, who gave a bound on the rate of convergence in 1929.[6][7] It is given by the probability mass function

- [math]\displaystyle{ p(k) = - \log_2 \left( 1 - \frac{1}{(1+k)^2}\right)~. }[/math]

Gauss–Kuzmin theorem

Let

- [math]\displaystyle{ x = \cfrac{1}{k_1 + \cfrac{1}{k_2 + \cdots}} }[/math]

be the continued fraction expansion of a random number x uniformly distributed in (0, 1). Then

- [math]\displaystyle{ \lim_{n \to \infty} \mathbb{P} \left\{ k_n = k \right\} = - \log_2\left(1 - \frac{1}{(k+1)^2}\right)~. }[/math]

Equivalently, let

- [math]\displaystyle{ x_n = \cfrac{1}{k_{n+1} + \cfrac{1}{k_{n+2} + \cdots}}~; }[/math]

then

- [math]\displaystyle{ \Delta_n(s) = \mathbb{P} \left\{ x_n \leq s \right\} - \log_2(1+s) }[/math]

tends to zero as n tends to infinity.

Rate of convergence

In 1928, Kuzmin gave the bound

- [math]\displaystyle{ |\Delta_n(s)| \leq C \exp(-\alpha \sqrt{n})~. }[/math]

In 1929, Paul Lévy[8] improved it to

- [math]\displaystyle{ |\Delta_n(s)| \leq C \, 0.7^n~. }[/math]

Later, Eduard Wirsing showed[9] that, for λ = 0.30366... (the Gauss–Kuzmin–Wirsing constant), the limit

- [math]\displaystyle{ \Psi(s) = \lim_{n \to \infty} \frac{\Delta_n(s)}{(-\lambda)^n} }[/math]

exists for every s in [0, 1], and the function Ψ(s) is analytic and satisfies Ψ(0) = Ψ(1) = 0. Further bounds were proved by K. I. Babenko.[10]

See also

References

- ↑ Blachman, N. (1984). "The continued fraction as an information source (Corresp.)". IEEE Transactions on Information Theory 30 (4): 671–674. doi:10.1109/TIT.1984.1056924.

- ↑ Kornerup, Peter; Matula, David W. (July 1995). "LCF: A Lexicographic Binary Representation of the Rationals". J.UCS the Journal of Universal Computer Science. 1. 484–503. doi:10.1007/978-3-642-80350-5_41. ISBN 978-3-642-80352-9.

- ↑ Vepstas, L. (2008), Entropy of Continued Fractions (Gauss-Kuzmin Entropy), http://linas.org/math/entropy.pdf

- ↑ Weisstein, Eric W.. "Gauss–Kuzmin Distribution". http://mathworld.wolfram.com/Gauss-KuzminDistribution.html.

- ↑ Gauss, Johann Carl Friedrich. Werke Sammlung. 10/1. pp. 552–556. http://gdz.sub.uni-goettingen.de/dms/load/img/?PPN=PPN236018647.

- ↑ Kuzmin, R. O. (1928). "On a problem of Gauss". Dokl. Akad. Nauk SSSR: 375–380.

- ↑ Kuzmin, R. O. (1932). "On a problem of Gauss". Atti del Congresso Internazionale dei Matematici, Bologna 6: 83–89.

- ↑ Lévy, P. (1929). "Sur les lois de probabilité dont dépendant les quotients complets et incomplets d'une fraction continue". Bulletin de la Société Mathématique de France 57: 178–194. doi:10.24033/bsmf.1150. http://www.numdam.org/item?id=BSMF_1929__57__178_0.

- ↑ Wirsing, E. (1974). "On the theorem of Gauss–Kusmin–Lévy and a Frobenius-type theorem for function spaces". Acta Arithmetica 24 (5): 507–528. doi:10.4064/aa-24-5-507-528.

- ↑ Babenko, K. I. (1978). "On a problem of Gauss". Soviet Math. Dokl. 19: 136–140.

|