Neyman construction

Neyman construction, named after Jerzy Neyman, is a frequentist method to construct an interval at a confidence level $C$ such that, if the experiment is repeated many times, the interval will contain the true value of some parameter a fraction $C$ of the time.

Theory

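Assume $X_1, X_2, \ldots, X_n$ are random variables with joint pdf $f(x_1, x_2, \ldots, x_n \mid \theta_1, \theta_2, \ldots, \theta_k)$, which depends on $k$ unknown parameters. For convenience, let $\mathcal{X}$ be the sample space defined by the $n$ random variables, and define a sample point in the sample space as $X = (X_1, X_2, \ldots, X_n)$.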

Neyman originally proposed defining two functions $L(X)$ and $U(X)$ such that, for any sample point $X$,

- $L(X) \le U(X)$ for all $X \in \mathcal{X}$;
- $L$ and $U$ are single valued and defined.

Given an observation $X'$, the probability that $\theta_1$ lies between $L(X')$ and $U(X')$ is $P(L(X') \le \theta_1 \le U(X') \mid X')$, which is either 0 or 1. These calculated probabilities fail to support any meaningful inference about $\theta_1$, since the probability is simply zero or unity. Furthermore, under the frequentist construct the model parameters are unknown constants and are not permitted to be random variables.[1] For example, if $L(X') = 2$, $U(X') = 10$ and $\theta_1 = 5$, then $P(2 \le 5 \le 10) = 1$. Likewise, if $\theta_1 = 11$, then $P(2 \le 11 \le 10) = 0$.

As Neyman describes in his 1937 paper, suppose instead that we consider all points in the sample space, that is, all $X \in \mathcal{X}$, which form a system of random variables defined by the joint pdf described above. Since $L$ and $U$ are functions of $X$, they too are random variables, and one can examine the meaning of the following probability statement:

- If $\theta_1'$ is the true value of $\theta_1$, we can define $L$ and $U$ such that the probability that both $L(X) \le \theta_1'$ and $\theta_1' \le U(X)$ hold is equal to a pre-specified confidence level.

That is, $P(L(X) \le \theta_1' \le U(X) \mid \theta_1') = 1 - \alpha$, where $0 \le \alpha \le 1$, and $L(X)$ and $U(X)$ are the lower and upper confidence limits for $\theta_1$.[1] The confidence level is then $C = 1 - \alpha$.

Coverage probability

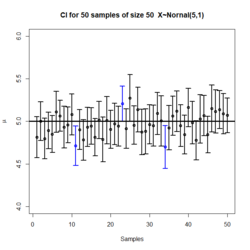

The coverage probability, $C$, for a Neyman construction is the frequency of experiments in which the confidence interval contains the actual value of interest. Generally, the coverage probability is set to 95% confidence. For a Neyman construction, the coverage probability is set to some value $C$ where $0 < C < 1$. This value tells how often, over repeated experiments, the true value will be contained in the interval.
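The frequency interpretation of coverage can be checked by simulation. The sketch below is only an illustration under stated assumptions: it uses a normal model with known standard deviation, and the names `z_interval` and `estimate_coverage`, as well as all numeric settings, are hypothetical choices rather than part of the construction itself.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def z_interval(sample, sigma, conf):
    """Central interval for a normal mean, assuming sigma is known."""
    z = NormalDist().inv_cdf(0.5 + conf / 2.0)
    half_width = z * sigma / sqrt(len(sample))
    centre = fmean(sample)
    return centre - half_width, centre + half_width

def estimate_coverage(true_mean, sigma, n, conf, trials, seed=0):
    """Fraction of simulated experiments whose interval contains true_mean."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        lower, upper = z_interval(sample, sigma, conf)
        if lower <= true_mean <= upper:
            hits += 1
    return hits / trials

# Illustrative settings only; the estimate should land near conf.
coverage = estimate_coverage(true_mean=3.0, sigma=2.0, n=20, conf=0.95, trials=20000)
print(f"estimated coverage: {coverage:.3f}")
```

With enough trials the printed fraction should sit close to the requested confidence level, up to Monte Carlo noise.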

Implementation

A Neyman construction can be carried out by performing multiple experiments that construct data sets corresponding to a given value of the parameter. The experiments are fitted with conventional methods, and the space of fitted parameter values constitutes the band from which the confidence interval can be selected.
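One way to read this procedure is as a simulated confidence band. The following is a minimal sketch, assuming a normal model with known standard deviation, the sample mean as the fitted value, and central acceptance intervals; the function names, the parameter grid, and the simulation sizes are all hypothetical choices made for illustration.

```python
import random
from statistics import fmean

def acceptance_interval(mu, sigma, n, conf, n_sim, rng):
    """Central range holding about a fraction conf of simulated sample means."""
    means = sorted(
        fmean([rng.gauss(mu, sigma) for _ in range(n)]) for _ in range(n_sim)
    )
    tail = (1.0 - conf) / 2.0
    lo_idx = int(tail * n_sim)
    hi_idx = int((1.0 - tail) * n_sim) - 1
    return means[lo_idx], means[hi_idx]

def neyman_interval(observed, grid, sigma, n, conf=0.9, n_sim=2000, seed=0):
    """Keep the grid values of mu whose acceptance interval contains observed."""
    rng = random.Random(seed)
    accepted = []
    for mu in grid:
        lower, upper = acceptance_interval(mu, sigma, n, conf, n_sim, rng)
        if lower <= observed <= upper:
            accepted.append(mu)
    # Simulation noise can leave small gaps near the edges, so report the hull.
    return (min(accepted), max(accepted)) if accepted else None

grid = [i * 0.05 for i in range(-40, 81)]  # candidate mu values from -2.0 to 4.0
interval = neyman_interval(observed=1.0, grid=grid, sigma=1.0, n=4)
print(interval)
```

For each candidate parameter value the simulated fits define a horizontal slice of the band; reading the band vertically at the observed value gives the interval. The resolution of the result is limited by the grid spacing and by the number of simulated data sets.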

Classic example

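Suppose $X \sim N(\theta, \sigma^2)$, where $\theta$ and $\sigma^2$ are unknown constants and we wish to estimate $\theta$. We can define two single-valued functions, $L$ and $U$, by the process above such that, given a pre-specified confidence level $C$ and a random sample $X^* = (x_1, x_2, \ldots, x_n)$,

$$L(X^*) = \bar{x} - t\,\frac{s}{\sqrt{n}}, \qquad U(X^*) = \bar{x} + t\,\frac{s}{\sqrt{n}},$$

where $s/\sqrt{n}$ is the standard error, and the sample mean and standard deviation are:

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2}.$$

The factor $t$ is the upper $\alpha/2$ critical value of the t distribution with $n-1$ degrees of freedom, $t = t_{\alpha/2,\,n-1}$, where $\alpha = 1 - C$.[2]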
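As a worked illustration of these formulas, the sketch below computes the limits for a small sample. The data values are made up for the example, and the critical value is an assumption taken from a standard t table (two-sided 95%, 9 degrees of freedom) rather than computed in the code.

```python
from math import sqrt
from statistics import fmean, stdev

# Hypothetical sample of n = 10 measurements (illustrative values only).
sample = [4.9, 5.1, 5.3, 4.7, 5.0, 5.2, 4.8, 5.4, 5.1, 4.5]

# Assumption: two-sided 95% critical value of the t distribution with
# n - 1 = 9 degrees of freedom, read from a standard table (about 2.262).
T_CRIT_95_DF9 = 2.262

n = len(sample)
x_bar = fmean(sample)
s = stdev(sample)          # sample standard deviation, n - 1 denominator
std_err = s / sqrt(n)      # standard error of the mean

lower = x_bar - T_CRIT_95_DF9 * std_err
upper = x_bar + T_CRIT_95_DF9 * std_err
print(f"mean = {x_bar:.3f}, s = {s:.3f}, interval = ({lower:.3f}, {upper:.3f})")
```

A different sample size or confidence level needs a different critical value, so the constant above must be replaced accordingly.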

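Another example

Let $X_1, X_2, \ldots, X_n$ be iid random variables with $X_i \sim N(\mu, \sigma^2)$, where $\sigma^2$ is known, and let $T = (X_1, X_2, \ldots, X_n)$. We want to construct a confidence interval for $\mu$ with confidence level $C = 1 - \alpha$. We know $\bar{x}$ is sufficient for $\mu$, and $(\bar{x} - \mu)/(\sigma/\sqrt{n})$ follows a standard normal distribution. So,

$$P\left(-z_{\alpha/2} \le \frac{\bar{x} - \mu}{\sigma/\sqrt{n}} \le z_{\alpha/2}\right) = C,$$

$$P\left(\bar{x} - z_{\alpha/2}\,\frac{\sigma}{\sqrt{n}} \le \mu \le \bar{x} + z_{\alpha/2}\,\frac{\sigma}{\sqrt{n}}\right) = C.$$

This produces a $100C\%$ confidence interval for $\mu$, where

- $L(T) = \bar{x} - z_{\alpha/2}\,\sigma/\sqrt{n}$,
- $U(T) = \bar{x} + z_{\alpha/2}\,\sigma/\sqrt{n}$.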
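The two limits can be evaluated directly once the normal quantile is available. This is a small sketch assuming a known standard deviation; the helper name `z_limits` and the data are hypothetical, and the quantile comes from Python's standard-library `statistics.NormalDist`.

```python
from math import sqrt
from statistics import NormalDist, fmean

def z_limits(sample, sigma, conf=0.95):
    """Return (L, U) for the mean of normal data with known sigma."""
    alpha = 1.0 - conf
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # upper alpha/2 quantile
    half_width = z * sigma / sqrt(len(sample))
    x_bar = fmean(sample)
    return x_bar - half_width, x_bar + half_width

# Hypothetical data: five readings with an assumed known sigma of 0.5.
lower, upper = z_limits([9.8, 10.4, 10.1, 9.7, 10.0], sigma=0.5, conf=0.95)
print(f"L = {lower:.3f}, U = {upper:.3f}")
```

Because the half width scales as $\sigma/\sqrt{n}$, quadrupling the sample size halves the length of the interval at a fixed confidence level.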

References

- [1] Neyman, J. (1937). "Outline of a Theory of Statistical Estimation Based on the Classical Theory of Probability". Philosophical Transactions of the Royal Society of London. Series A, Mathematical and Physical Sciences 236 (767): 333–380. doi:10.1098/rsta.1937.0005.

- [2] Rao, C. Radhakrishna (1973). Linear Statistical Inference and its Applications: Second Edition. John Wiley & Sons. pp. 470–472. ISBN 9780471708230.

- [3] Samaniego, Francisco J. (2014). Stochastic Modeling and Mathematical Statistics. Chapman and Hall/CRC. p. 347. ISBN 9781466560468.
