Projections onto convex sets

In mathematics, projections onto convex sets (POCS), sometimes known as the alternating projection method, is a method to find a point in the intersection of two closed convex sets. It is a very simple algorithm and has been rediscovered many times.[1] The simplest case, when the sets are affine spaces, was analyzed by John von Neumann.[2][3] The case when the sets are affine spaces is special, since the iterates not only converge to a point in the intersection (assuming the intersection is non-empty) but to the orthogonal projection of the point onto the intersection. For general closed convex sets, the limit point need not be the projection. Classical work on the case of two closed convex sets shows that the rate of convergence of the iterates is linear.[4][5] There are now extensions that consider cases when there are more than two sets, or when the sets are not convex,[6] or that give faster convergence rates. Analysis of POCS and related methods attempt to show that the algorithm converges (and if so, find the rate of convergence), and whether it converges to the projection of the original point. These questions are largely known for simple cases, but a topic of active research for the extensions. There are also variants of the algorithm, such as Dykstra's projection algorithm. See the references in the further reading section for an overview of the variants, extensions and applications of the POCS method; a good historical background can be found in section III of.[7]

Algorithm

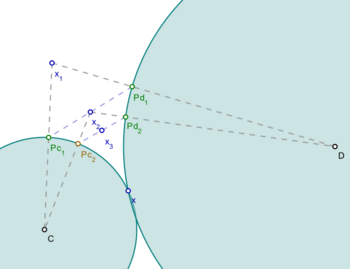

The POCS algorithm solves the following problem:

where C and D are closed convex sets.

To use the POCS algorithm, one must know how to project onto the sets C and D separately, via the projections .

The algorithm starts with an arbitrary value for and then generates the sequence

The simplicity of the algorithm explains some of its popularity. If the intersection of C and D is non-empty, then the sequence generated by the algorithm will converge to some point in this intersection.

Unlike Dykstra's projection algorithm, the solution need not be a projection onto the intersection C and D.

Related algorithms

The method of averaged projections is quite similar. For the case of two closed convex sets C and D, it proceeds by

It has long been known to converge globally.[8] Furthermore, the method is easy to generalize to more than two sets; some convergence results for this case are in.[9]

The averaged projections method can be reformulated as alternating projections method using a standard trick. Consider the set

which is defined in the product space . Then define another set, also in the product space:

Thus finding is equivalent to finding .

To find a point in , use the alternating projection method. The projection of a vector onto the set F is given by . Hence

Since and assuming , then for all , and hence we can simplify the iteration to .

References

- ↑ Bauschke, H.H.; Borwein, J.M. (1996). "On projection algorithms for solving convex feasibility problems". SIAM Review 38 (3): 367–426. doi:10.1137/S0036144593251710.

- ↑ J. von Neumann,Neumann, John Von (1949). "On rings of operators. Reduction theory". Ann. of Math. 50 (2): 401–485. doi:10.2307/1969463. (a reprint of lecture notes first distributed in 1933)

- ↑ J. von Neumann. Functional Operators, volume II. Princeton University Press, Princeton, NJ, 1950. Reprint of mimeographed lecture notes first distributed in 1933.

- ↑ Gubin, L.G.; Polyak, B.T.; Raik, E.V. (1967). "The method of projections for finding the common point of convex sets". U.S.S.R. Computational Mathematics and Mathematical Physics 7 (6): 1–24. doi:10.1016/0041-5553(67)90113-9.

- ↑ Bauschke, H.H.; Borwein, J.M. (1993). "On the convergence of von Neumann's alternating projection algorithm for two sets". Set-Valued Analysis 1 (2): 185–212. doi:10.1007/bf01027691.

- ↑ Lewis, Adrian S.; Malick, Jérôme (2008). "Alternating Projections on Manifolds". Mathematics of Operations Research 33: 216–234. doi:10.1287/moor.1070.0291.

- ↑ Combettes, P. L. (1993). "The foundations of set theoretic estimation". Proceedings of the IEEE 81 (2): 182–208. doi:10.1109/5.214546. http://www.ann.jussieu.fr/~plc/proc.pdf. Retrieved 2012-10-09.

- ↑ A. Auslender. Methodes Numeriques pour la Resolution des Problems d’Optimisation avec Constraintes. PhD thesis, Faculte des Sciences, Grenoble, 1969

- ↑ Lewis, A. S.; Luke, D. R.; Malick, J. (2009). "Local convergence for alternating and averaged nonconvex projections". Foundations of Computational Mathematics 9 (4): 485–513. doi:10.1007/s10208-008-9036-y.

Further reading

- Book from 2011: Alternating Projection Methods by René Escalante and Marcos Raydan (2011), published by SIAM.

|